Still really busy these days haven’t had time to post much but I was just reading someone’s LinkedIn profile who works at a storage company and it got me thinking.

Who uses legacy storage? It seems almost everyone these days tries to benchmark their storage system against legacy storage. Short of something like maybe direct attached storage which has no functionality, legacy storage has been dead for a long time now. What should the new benchmark be? How can you go about (trying to) measuring it? I’m not sure what the answer is.

When is thin, thin?

One thing that has been in my mind a lot on this topic recently is how 3PAR constantly harps on about their efficient allocation at 16kB blocks. I think I’ve tried to explain this in the past but I wanted to write about it again. I wrote a comment on it in a HP blog recently I don’t think they published the comment though (haven’t checked for a few weeks maybe they did). But they try to say they are more efficient (by dozens or hundreds of times) than other platforms because of this 16kB allocation thing-a-ma-bob.

I’ve never seen this as an advantage to their platform. Whether you allocate in 16kB chunks or perhaps 42MB chunks in the case of Hitachi, it’s still a tiny amount of data in any case and really is a rounding error. If you have 100 volumes and they all have 42MB of slack hanging off the back of them, that’s 4.2GB of data, it’s nothing.

What 3PAR doesn’t tell you is this 16kB allocation unit is what a volume draws from a storage pool (Common Provisioning Group in 3PAR terms – which is basically a storage template or policy which defines things like RAID type, disk type, placement of data, protection level etc). They don’t tell you up front how much these storage pools provision storage on, which is in-part based on the number of controllers in the system.

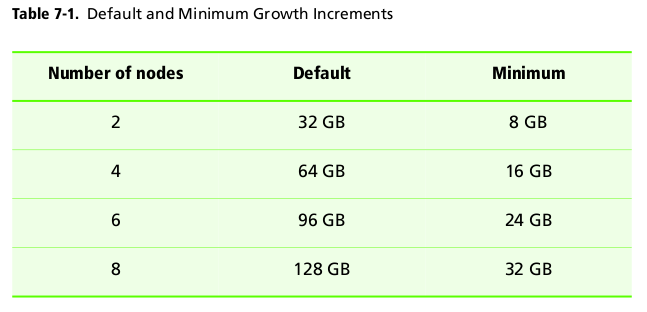

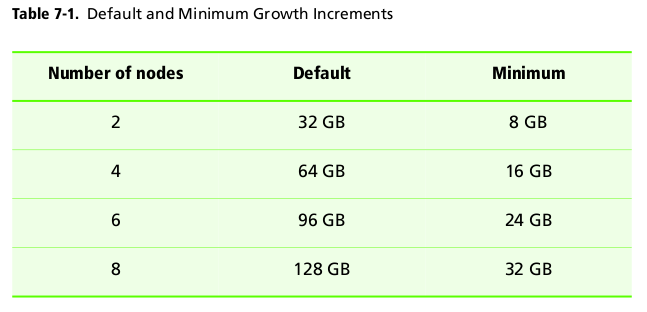

If your volumes max out a CPG’s allocated space and it needs more, it won’t grab 16kB, it will grab (usually at least) 32GB, this is adjustable. This is – I believe in part how 3PAR addresses minimizing impact of thin provisioning with large amounts of I/O, because it allocates these pools with larger chunks of data up front. They even suggest that if you have a really large amount of growth that you increase the allocation unit even higher.

Growth Increments for CPGs on 3PAR

I bet you haven’t heard HP/3PAR say their system grows in 128GB increments recently 🙂

It is important to note, or to remember, that a CPG can be home to hundreds of volumes, so it’s up to the user, if they only have one drive type for example maybe they only want 1 CPG. But I think as they use the system they will likely go down a similar path that I have and have more.

If you only have one or two CPGs on the system it’s probably not a big deal, though the space does add up. Still I think for the most part even this level of allocation can be a rounding error. Unless you have a large number of CPGs.

Myself, on my 3PAR arrays I use CPGs not just for determining data characteristics of the volumes but also for organizational purposes / space management. So I can look at one number and see all of the volumes dedicated to development purposes are X in size, or set an aggregate growth warning on a collection of volumes. I think CPGs work very well for this purpose. The flip side is you can end up wasting a lot more space. Recently on my new 3PAR system I went through and manually set the allocation level of a few of my CPGs from 32GB down to 8GB because I know the growth of those CPGs will be minimal. At the time I had maybe 400-450GB of slack space in the CPGs, not as thin as they may want you to think (I have around 10 CPGs on this array). So I changed the allocation unit and compacted the CPGs which reclaimed a bunch of space.

Again, in the grand scheme of things that’s not that much data.

For me 3PAR has always been more about higher utilizations which are made possible by the chunklet design and the true wide striping, the true active-active clustered controllers, one of the only(perhaps one of if not the first?) storage designs in the industry that goes beyond two controllers, and the ASIC acceleration which is at the heart of the performance and scalability. Then there is the ease of use and stuff, but I won’t talk about that anymore I’ve already covered it many times. One of my favorite aspects of the platform is the fact that they use the same design on everything from the low end to the high end, the only difference really is scale. It’s also part of the reason why their entry level pricing can be quite a bit higher than entry level pricing from others since there is the extra sauce in there that the competition isn’t willing or able to put on their low end box(s).

Sacrificing for data availability

I was talking to Compellent recently learning about some of their stuff for a project over in Europe and they told me their best practice (not a requirement) is to have 1 hot spare of each drive type (I think drive type meaning SAS or SATA, I don’t think drive size matters but am not sure) per drive chassis/cage/shelf.

They, like many other array makers don’t seem to support the use of low parity RAID (like RAID 50 3+1, or 4+1), they (like others) lean towards higher data:parity ratios I think in part because they have dedicated parity disks(they either had a hard time explaining to me how data is distributed or I had a hard time understanding, or both..), and dedicating 25% of your spindles to parity is very excessive, but in the 3PAR world dedicating 25% of your capacity to parity is not excessive(when compared to RAID 10 where there is a 50% overhead anyways).

There are no dedicated parity, or dedicated spares on a 3PAR system so you do not lose any I/O capacity, in fact you gain it.

The benefits to a RAID 50 3+1 configuration are a couple fold – you get pretty close to RAID 10 performance, and you can most likely (depending on the # of shelves) suffer a shelf failure w/o data loss or downtime(downtime may vary depending on your I/O requirements and I/O capacity after those disks are gone).

It’s a best practice (again, not a requirement) in the 3PAR world to provide this level of availability (losing an entire shelf), not because you lose shelves often but just because it’s so easy to configure and is self managing. With a 4, or 8-shelf configuration I do like RAID 50 3+1. In an 8-shelf configuration maybe I have some data volumes that don’t need as much performance so I could go with a 7+1 configuration and still retain shelf-level availability.

Or, with CPGs you could have some volumes retain shelf-level availability and other volumes not have it, up to you. I prefer to keep all volumes with shelf level availability. The added space you get with a higher data:parity ratio really has diminishing returns.

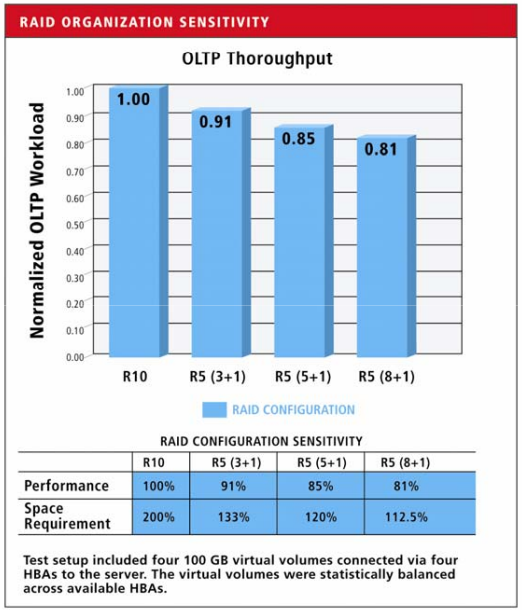

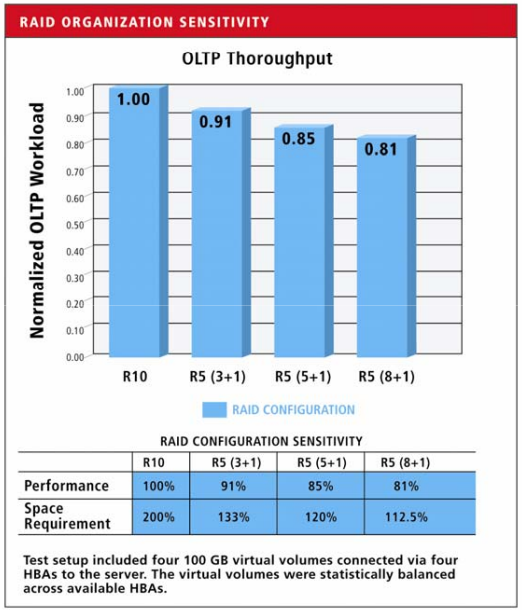

Here’s a graphic from 3PAR which illustrates the dimishing returns(at least on their platform, I think the application they used to measure was Oracle DB):

The impact of RAID on I/O and capacity

3PAR can take this to an even higher extreme on their lower end F-class series which uses daisy chaining in order to get to full capacity (max chain length is 2 shelves). There is a availability level called port level availability which I always knew was there but never really learned what it truly was until last week.

Port level availability applies only to systems that have daisy chained chassis and protects the system from the failure of an entire chain. So two drive shelves basically. Like the other forms of availability this is fully automated, though if you want to go out of your way to take advantage of it you need to use a RAID level that is compliant with your setup to leverage port level availability otherwise the system will automatically default to a lower level of availability (or will prevent you from creating the policy in the first place because it is not possible on your configuration).

Port level availability does not apply to the S/T/V series of systems as there is no daisy chaining done on those boxes (unless you have a ~10 year old S-series system which they did support chaining – up to 2,560 drives on that first generation S800 – back in the days of 9-18GB disks).