UPDATED I’ll be the first to admit – I’m not a network engineer. I do know networking, and can do the basics of switching, static routing, load balancing, firewalls, etc. But it’s not my primary background. I suppose you could call me a network engineer if you base my talents off of some past network engineers I’ve worked with (which is kinda sad really).

I’ve used quite a few different VPNs over the years, all of them without any special WAN Optimization, though the last “appliance” based VPN I was directly responsible for was a VPN between two sites connected by Cisco PIXs about 4-5 years ago. Since then either my VPN experience has been limited to using OpenVPN on my own personal stuff, or relying on other dedicated network engineer(s) to manage it.

In general, my experience has told me that site to site VPN performance roughly equates to internet performance. You may get some benefit from the TCP compression and such, but without specialized WAN Optimization / protocol optimization / caching etc – throughput is limited by latency.

I conferred with a colleague on this and his experience was similar – he expects site to site VPN performance to about match that of Internet site to site performance when no fancy WAN Opt is in use.

So imagine my surprise when a few weeks ago I hooked up a site to site VPN between Atlanta and Amsterdam (~95ms of latency between the two), and got a 10-30 fold improvement in throughput over the VPN compared to the raw Internet.

- Internet performance = ~600-700 Kilobytes/second sustained using HTTPS

- Site to site VPN performance = ~5 Megabytes/second using NFS, ~12 Megabytes/second sustained using SCP, and 20 Megabytes/second sustained using HTTP

The links on each end of the connection are 1Gbps: a tier 1 ISP on the Atlanta side, and what I would guesstimate is a tier 2 ISP (with tons of peering connections) on the Amsterdam side.

It’s possible the performance could be even higher, since I noticed that speed continued to increase the longer the transfer was running. My initial tests were limited to ~700MB files – 46.6 seconds for a 697MB file with SCP. Towards the end of the SCP it was running at ~17MB/sec (at the beginning only 2MB/sec).

A network engineer who I believe is probably quite a bit better than me told me:

“By my calculation – the max for a non-jittered 95ms connection is about 690KB/s so it looks like you already have a clean socket.

Keep in mind that bandwidth does not matter at this point since latency dictates the round-trip-time.”

I don’t know what sort of calculation was done, but the throughput matches what I see on the raw Internet.
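For what it’s worth, the 690KB/s figure is what you get from the classic TCP ceiling of one window of data per round trip. A minimal sketch, assuming a 65,535 byte window (the largest possible without TCP window scaling) and the ~95ms latency above; whether this is the calculation the engineer actually did is a guess, and the function name is just for illustration:

```python
def window_limited_throughput(window_bytes: int, rtt_seconds: float) -> float:
    """Upper bound on single-stream TCP throughput in bytes/second.

    A sender can have at most one window of unacknowledged data in
    flight, so it can deliver at most one window per round trip.
    """
    return window_bytes / rtt_seconds


RTT_SECONDS = 0.095          # ~95ms Atlanta <-> Amsterdam (from the post)
CLASSIC_MAX_WINDOW = 65535   # assumption: no window scaling in use

limit = window_limited_throughput(CLASSIC_MAX_WINDOW, RTT_SECONDS)
print(f"{limit / 1000:.0f} KB/s")  # prints "690 KB/s"
```

That lines up with the ~600-700 Kilobytes/second seen over the raw Internet. A single stream going much faster than that at 95ms has to be keeping more than 64KB in flight per round trip somewhere along the path.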

These are all single threaded transfers. Real basic tests. In all cases the files being copied are highly compressed (in my main test case the 697MB file uncompresses to 14GB), and in the case of the SCP test the data stream is encrypted as well. I’ve done multiple tests over several weeks and the data is consistent.

It really blew my mind. Even with fancy WAN optimization I did not expect this sort of performance using something like SCP. Presumably they are doing some really good TCP windowing and other optimizations: despite there still being ~95ms of latency between the two sites within the VPN itself, the throughput is just amazing.

I opened a support ticket to try to get support to explain to me what more was going on, but they couldn’t answer the question. They said that because there are no hops in the VPN it’s faster. There may be no hops, but there’s still 95ms of latency between the systems even within the VPN.

I mean, just a few years ago I wrote a fancy distributed multi-threaded file replication system for a company I was at, to try to get around the limits of throughput between our regional sites because of the latency. I could have saved myself a bunch of work had we known at the time (and we had a dedicated network engineer at the time) that this sort of performance was possible without really high end gear or specialized protocols (I was using rsync over HPN-SSH). I remember trying to set up OpenVPN between two sites at that company for a while to test throughput there, and performance was really terrible (much worse than the existing Cisco VPN that we had on the same connection). For a while we had Cisco PIXs or ASAs, I don’t recall which, but they had a 100Mbit limit on throughput; we tapped them out pretty quickly and had to move on to something faster.

I ran a similar test between Atlanta and Los Angeles, where the VPN device in Los Angeles was a Cisco ASA (the other sites are all Sonic Wall), and the performance was high there too. I’m not sure what the link speed is in Los Angeles, but throughput was around 8 Megabytes/second for a compressed/encrypted data stream, easily 8-10x faster than over the raw Internet. I tested another VPN link between a pair of Cisco firewalls and their performance was the same as the raw Internet (15ms of latency between the two). I think the link was saturated in those tests (not my link, so I couldn’t check it directly at the time).

I’m sure if I dug into the raw TCP packets the secrets would be there – but really, even after doing all the networking stuff I have been doing for the past decade+, I still can’t make heads or tails of 90% of the stuff that is in a packet (I haven’t tried to either; it hasn’t been a priority of mine, and it’s not something that really interests me).

But sustaining 10+ megabytes/second over a 95 millisecond link over 9 internet routers on a highly compressed and encrypted data stream without any special WAN optimization package is just amazing to me.

Maybe this is common technology now, I don’t know. I’d sort of expect marketing information to advertise this kind of thing: if you can get 10-30x faster throughput over a VPN without high end WAN optimization vs the regular internet, I’d be really interested in that technology. If you’ve seen similar massive increases in performance w/o special WAN Optimization on a site to site VPN I’d be interested to hear about it.

In this particular case, the products I’m using are Sonic Wall NSA3500s. The only special feature licensed is high availability, other than that it’s a basic pair of units on each end of the connection. (WAN Optimization is a software option but is NOT licensed). These are my first Sonic Walls, I had some friends trying to push me to use Juniper (SRX I think) or in one case Palo Alto networks, but Juniper is far too complicated for my needs, and Palo Alto networks is not suitable for Site to Site VPNs with their cost structure (the quote I had for 4 devices was something like $60k). So I researched a few other players and met with Sonic Wall about a year ago and was satisfied with their pitch and verified some of their claims with some other folks, and settled on them. So far it’s been a good experience, very easy to manage, and I’m still just shocked by this throughput. I really had terrible experiences managing those Cisco PIXs a few years back by contrast. OpenVPN is a real pain as well (once it’s up and going it’s alright, configuring and troubleshooting are a bitch).

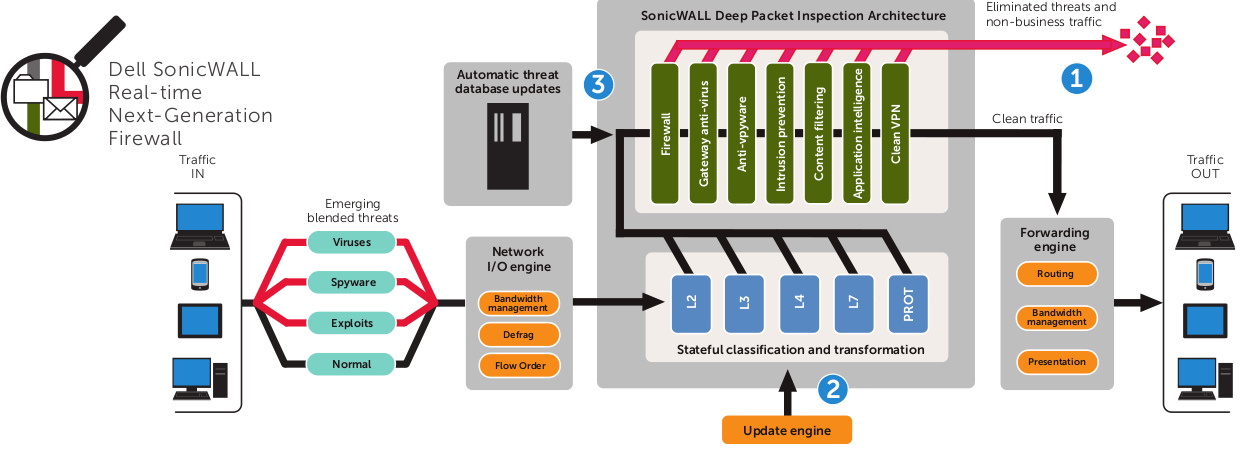

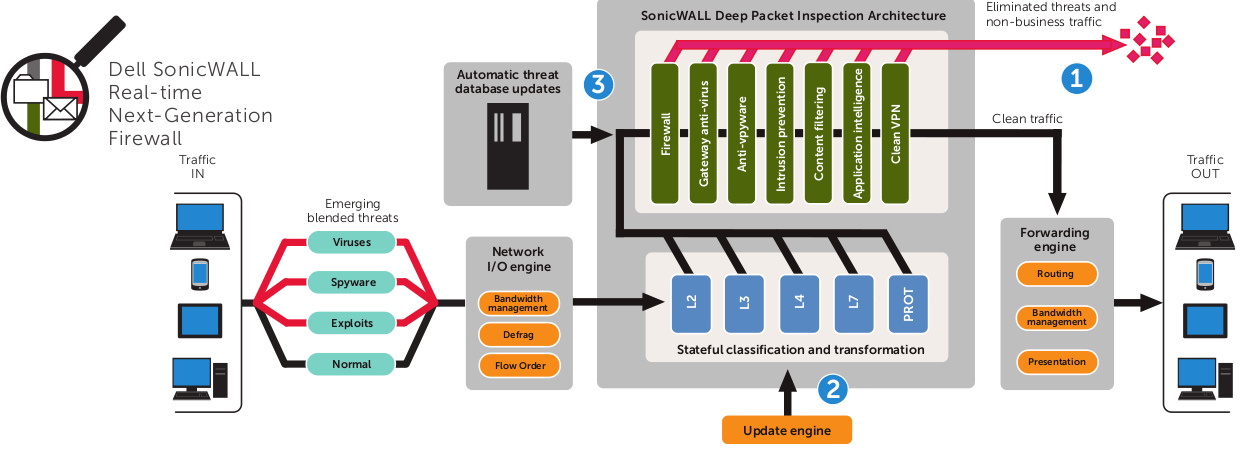

Sonic Wall claimed they were the only ones (2nd to Palo Alto Networks) who had true deep packet inspection in their firewalls (vs having other devices do the work). That claim interested me, as I am not well versed in the space. I bounced the claim off of a friend that I trust (who knows Palo Alto inside and out) and he said it was probably true: Palo Alto’s technology is better (fewer false positives) but nobody else offers that tech. Not that I need that tech, this is for a VPN – but it was nice to know that we have the option to use it in the future. Sonic Wall’s claims go beyond that as well, saying they are better than Palo Alto in some cases due to size limitations on Palo Alto (not sure if that is still true or not).

“Going far beyond simple stateful inspection, the Dell® SonicWALL® Reassembly-Free Deep Packet Inspection™ (RFDPI) engine scans against multiple application types and protocols to ensure your network is protected from internal and external attacks as well as application vulnerabilities. Unlike other scanning engines, the RFDPI engine is not limited by file size or the amount of concurrent traffic it can scan, making our solutions second to none.”

SonicWall Architecture - looked neat, adds some needed color to this page

The packet capture ability of the devices is really nice too, and makes it very easy to troubleshoot connections. In the past I recall on Cisco devices at least I had to put the device in some sort of debug mode and it would spew stuff to the console (my Cisco experience is not current of course). With these Sonic Walls I can set up filters really easily to capture packets, it shows them in a nice UI, and I can export the data to Wireshark or plain text if needed.

My main complaint on these Sonic Walls, I guess, is that they don’t support link aggregation (some other models do though). Not that I need it for performance; I wanted it for reliability, so that if a switch fails the Sonic Wall can stay connected and not trigger a failover there as well. As-is I had to configure them so each Sonic Wall is logically connected to a single switch (though they have physical connections to both – I learned of the limitation after I wired them up). Not that failures happen often of course, but it’s too bad this isn’t supported in this model (which has 6x1Gbps ports on it).

The ONLY thing I’ve done on these Sonic Walls is VPN (site to site mainly, but have done some SSL VPN stuff too), so beyond that stuff I don’t know how well they work. Sonic Wall traditionally has had a “SOHO” feel to it, though it seems in recent years they have tried to shrug this off, with their high end reaching as high as 240 Gbps in an active-active cluster. Nothing to sneeze at.

UPDATE – I ran another test, and this time I captured a sample of the CPU usage on the Sonic Wall as well as the raw internet throughput as reported by my router, I mean switch, yeah switch.

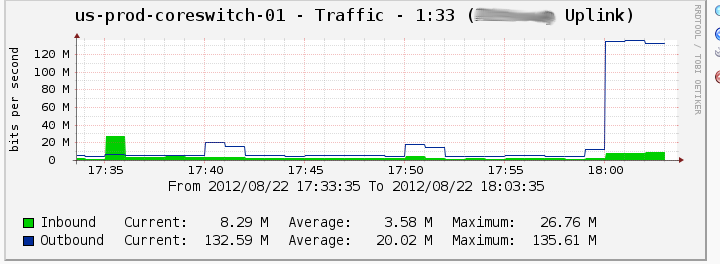

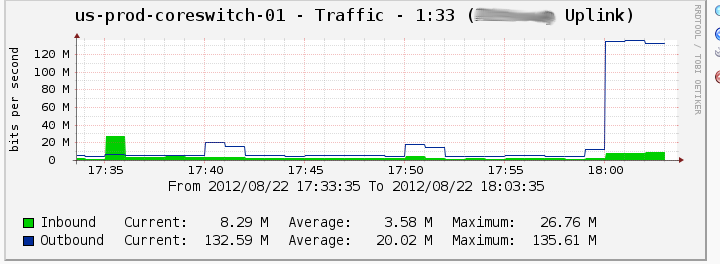

2,784MB gzip’d file copied in 3 minutes 35 seconds using SCP. If my math is right that comes to an average of roughly 12.95 Megabytes/second. This is for a single stream, basic file copy.
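The arithmetic is easy to double check, and it also shows how much data has to be in flight to sustain that rate at ~95ms. A rough sketch, assuming the latency quoted earlier in the post and treating a Megabyte loosely (the helper name is made up for the example):

```python
def average_rate_mb_s(size_mb: float, seconds: float) -> float:
    """Average transfer rate in megabytes per second."""
    return size_mb / seconds


RTT_SECONDS = 0.095  # ~95ms between Atlanta and Amsterdam

# 2,784MB copied in 3 minutes 35 seconds
rate = average_rate_mb_s(2784, 3 * 60 + 35)

# Data that must be unacknowledged "on the wire" at any moment to
# sustain that rate over that round trip (bandwidth-delay product).
in_flight_mb = rate * RTT_SECONDS

print(f"average rate: {rate:.2f} MB/s")                           # 12.95 MB/s
print(f"data in flight per round trip: {in_flight_mb:.2f} MB")  # 1.23 MB
```

So roughly 1.2MB has to be in flight at once, far more than a plain 64KB TCP window allows.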

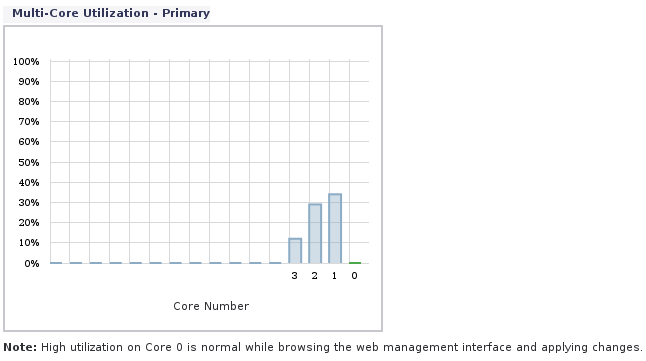

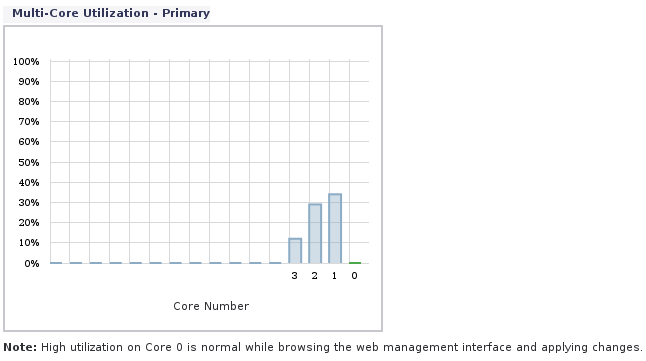

The firewall has a quad core 550 MHz MIPS64 Octeon processor (I assume it’s quad core and not four individual processors). CPU usage snapshot here:

SonicWall CPU usage snapshot across cores during big file xfer

The highest I saw it was CPU core #1 going to about 45% usage, with core #2 at about 35% maybe, and core #3 maybe around 20%, with core #0 being idle (maybe that is reserved for management, given its low usage during the test? not sure).

Raw network throughput topped out at 135.6 Megabits/second (well some of that was other traffic, so wager 130 Megabits for the VPN itself).

Raw internet throughput for VPN file transfer
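To put the switch’s Megabits next to SCP’s Megabytes, here is a quick conversion. It is only a sketch: it assumes 8 bits per byte with no allowance for VPN or TCP overhead, and uses the ~130 Megabits/second estimate and the 2,784MB / 3 minutes 35 seconds copy from this test:

```python
def mbit_to_mbyte_per_s(mbit_per_s: float) -> float:
    """Convert megabits/second to megabytes/second (8 bits per byte)."""
    return mbit_per_s / 8


peak_wire = mbit_to_mbyte_per_s(130)   # estimated VPN share of the peak
avg_scp = 2784 / (3 * 60 + 35)         # average SCP rate for the whole copy

print(f"peak on the wire: {peak_wire:.2f} MB/s")                      # 16.25 MB/s
print(f"SCP average as share of peak: {avg_scp / peak_wire:.0%}")  # 80%
```

A peak around 16 Megabytes/second with an average near 13 fits a transfer that starts slow and keeps speeding up as it runs.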

Apparently this post found its way to Dell themselves and they were pretty happy to see it. I’m sorry, I just can’t get over how bitchin’ fast this thing is! I’d love for someone at Dell/SonicWALL who knows more than the tier 1 support person I talked with a few weeks ago to explain it better.