I just read the source article, and the discussion on Slashdot was far more interesting.

It’s been somewhat of a delicate topic for me, having been a system admin of sorts for about sixteen years now, primarily on the Linux platform.

For me, more than anything else, you have to define what code is. Long ago I drew a line in the sand: I have no interest in being a software developer. I do plenty of scripting in Perl & Bash, primarily for monitoring purposes and to aid in some of the more basic areas of running systems.

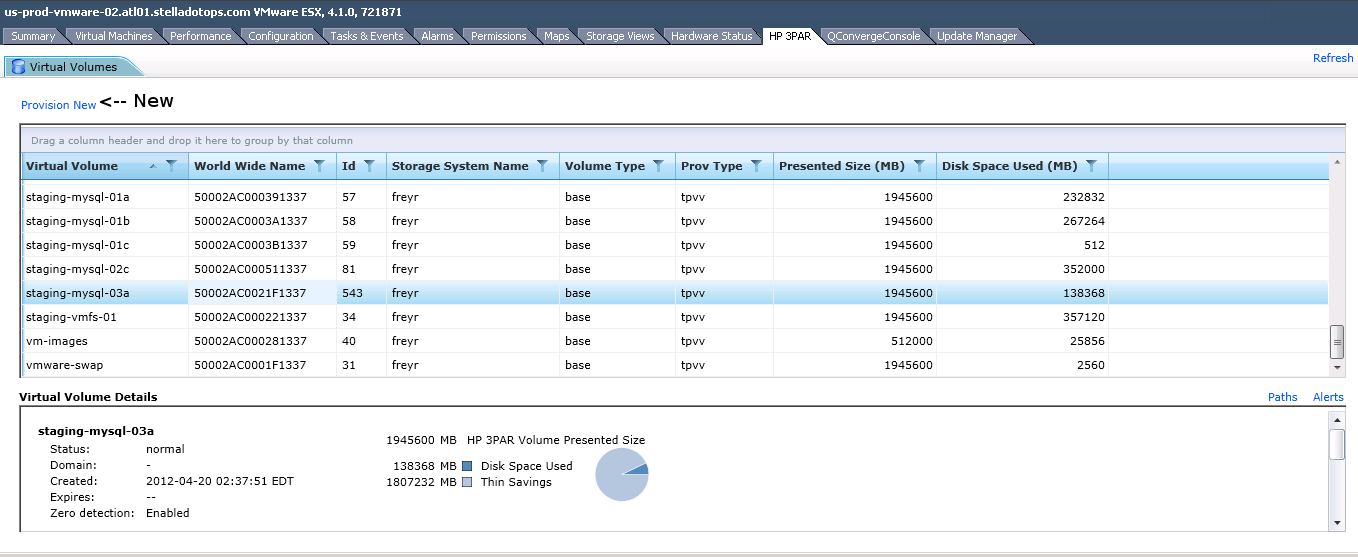

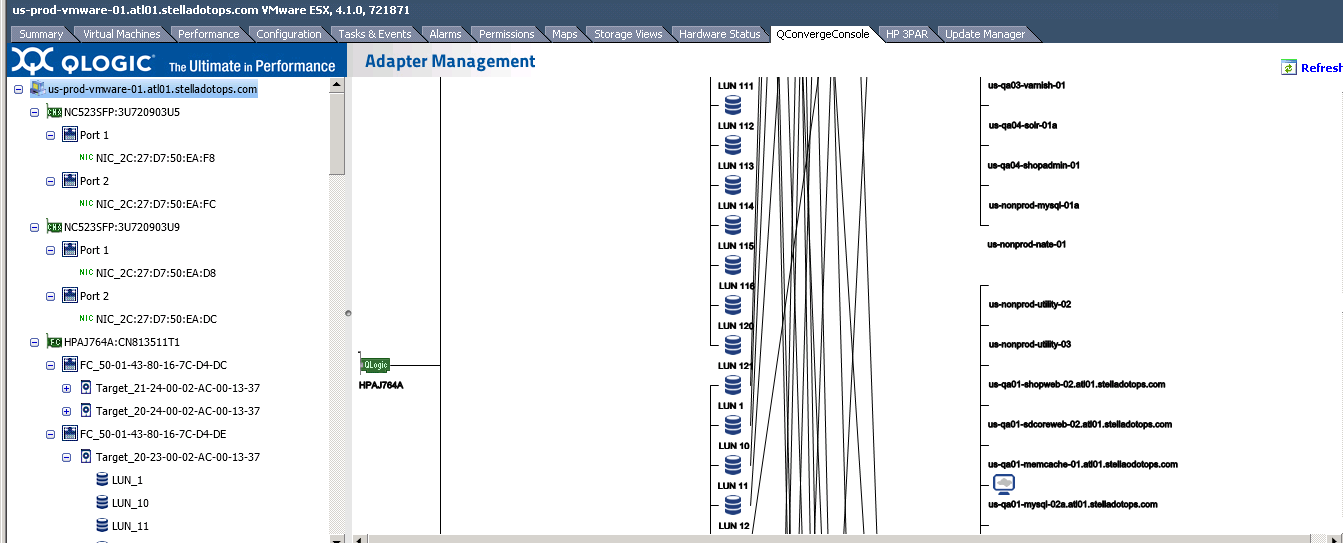

Since this blog covers 3PAR I suppose I should start there – I’ve written scripts to do snapshots and integrate them with MySQL (still in use today) and Oracle (haven’t used this side of things since 2008). This is a couple thousand lines of script (I don’t like to use the word code because to me it implies some sort of formal application). I’d wager 99% of that is to support the Linux end of things and 1% to support 3PAR. When I left one company, I turned these scripts over to the people who were going to try to take on my responsibilities. They had minimal scripting experience and their eyes glazed over pretty quickly while I walked them through the process. They feared the 1,000 line script, even though for the most part the system was very reliable and failures were not difficult to recover from, even if you had no scripting experience. For managing snapshots with MySQL (integrated with a storage platform), I’m not aware of any out of the box tool that can handle it, so you sort of have no choice but to glue your own together. With Oracle and MSSQL such tools are common, maybe even DB2 – but MySQL is left out in the cold.

I wrote my own Perl-based tool to log in to 3PAR arrays, get their metrics and populate RRD files (I use Cacti to present that data since it has a nice UI, but Cacti could not collect data the way I can, so that part runs outside of Cacti). Another thousand lines of script here.
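To give a feel for the "populate RRD files" step, here is a minimal shell sketch. It is not the tool described above: the function names, the metric values and the RRD path are made up for illustration, and it assumes the metrics were already collected by some earlier step. The only real interface it relies on is the `timestamp:value[:value...]` argument format of `rrdtool update`.

```bash
#!/usr/bin/env bash
# Sketch only: function names, metric values and the RRD path are hypothetical.

# rrd_update_arg TIMESTAMP VALUE...
# Prints the "timestamp:value[:value...]" argument that `rrdtool update` expects.
rrd_update_arg() {
    local arg="$1"
    shift
    local value
    for value in "$@"; do
        arg="${arg}:${value}"
    done
    printf '%s\n' "$arg"
}

# store_sample RRD_FILE TIMESTAMP VALUE...
# Writes one sample to the RRD file, or only prints the command when DRY_RUN=1.
store_sample() {
    local rrd_file="$1"
    shift
    local arg
    arg="$(rrd_update_arg "$@")"
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "rrdtool update ${rrd_file} ${arg}"
    else
        rrdtool update "$rrd_file" "$arg"
    fi
}

# Example: read IOPS and write IOPS for one (made-up) array at a fixed timestamp.
DRY_RUN=1
store_sample /tmp/array01_iops.rrd 1700000000 1200 340
# prints: rrdtool update /tmp/array01_iops.rrd 1700000000:1200:340
```

Keeping the collection step separate from the graphing front end is what lets the data be gathered on its own schedule and in its own way, while the UI only has to read the resulting RRD files.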

Perhaps one of the coolest things I think I wrote was a file distribution system a few years ago to replace a product we used in house called R1 Repliweb (it looks like they have since been acquired by somebody else). Repliweb is a fancy file distribution system that primarily ran on Windows, but the company I was at was using the Linux agents to pass files around. I suppose I could write a full ~1200 word post about that project alone (if you’re interested in hearing that let me know), but basically I replaced it with an architecture of load balancers, VMs, a custom version of SSH, and rsync, with some help from CFengine and about 200 lines of script. It not only dramatically improved scalability, but reliability went to literally 100%. I never had a single failure while I was there (the system was self healing – though I did have to turn off rsync’s auto resume feature because it didn’t work for this project), and the system had been in place about 12-16 months when I left.

So back to the point – to code or not to code. I say not to code (again back to what code means – in my context it means programming – if you’re directly using APIs then you’re programming, if you’re using tools to talk to APIs then you’re scripting) – for the most part at least. Don’t make things too complicated. I’ve worked with a lot of system admins over the years and the number that can script well, or code, is very small. I don’t see that number increasing. Network engineers are even worse – I’ve never seen a network engineer do anything other than work completely manually. I think storage is similar.

If you start coding your infrastructure you start making it even more difficult to bring new people on board, to maintain this stuff, and to run it moving forward. If you happen to be in an environment that is experiencing explosive growth and you’re adding dozens or hundreds of servers constantly then yes, this can make a lot of sense. But most companies aren’t like that and never will be.

It’s hard enough to hire people these days; if you go about raising the bar to even higher levels you’re never going to find anyone. I think of the Hadoop end of the market – those folks are always struggling to hire because the skill is so specialized, and there are so few people out there that can do it. Most companies can’t compete with the likes of Microsoft, Yahoo and other big orgs with their compensation and benefits packages.

You will, no doubt, spend more on things like software and hardware for things that some fancy DevOps god could do in 10 lines of Ruby while they sleep. Good luck finding and retaining such a person though, and if you feel you need redundancy so someone can take a real vacation, yeah that’s gonna be tough. There is a lot more risk, in my opinion, in having a lot of code running things if you lack the resources to properly maintain it. This is a problem even at scale. I’ve heard on several occasions that the big Amazon themselves customized CFengine v1 way back when with so much extra stuff. Then v2 (and since then v3) came around with all sorts of new things, and guess what – Amazon couldn’t upgrade because they had customized it too much. I’ve heard similar things about other technologies Amazon has adopted. They are stuck because they customized it too much and can’t upgrade.

I’ve talked to a ton of system admin candidates over the past year, and I think it’s fair to say the number I’d feel comfortable having take over the “code” on our end is zero. Granted, not even I can handle the excellent code written by my co-worker. I like to tell people I can do simple stuff in 10 minutes on CFengine and it will take me four hours to do things the Chef way on Chef; my eyes will bleed and my blood will boil in the process.

The method I’d use on CFengine you could say “sucks” compared to Chef, but it works, and is far easier to manage. I can bring almost anyone up to speed on the system in a matter of hours, whereas Chef takes a strong Ruby background to use (I am going on nearly two and a half years with Chef myself and I haven’t made much progress, other than that I feel I can speak with authority on how complex it is).

Sure it can be nice to have APIs for everything, fancy automation everywhere – but you need to pick your battles. When you’re dealing with a cloud organization like Amazon you almost have to code – to deal with all of their faults and failures and just overall stupid broken designs and everything that goes along with it. Learning to code most likely takes the experience from absolutely infuriating (where I stand) to almost manageable (costs and architecture aside here).

When you’re dealing with your own stuff, where you don’t have to worry about IPs changing at random because some host has died, and where you can change your CPU or memory configuration with a few mouse clicks and not have to re-build your system from scratch, the amount of code you need shrinks dramatically, lowering the barriers to entry.

After having worked in the Amazon cloud for more than two years, both my co-workers (who have much more experience in it than me) and I believe that it actually takes more effort and expertise to properly operate something in there vs doing it on your own. It’s the total opposite of how cloud is viewed by management.

Obviously it is easier said than done; just look at the sheer number of companies that go down every time Amazon has an outage or their service is degraded. The most recent one was yesterday. It’s easy for some to blame the customer for not doing the right thing, but at the end of the day most companies would rather work on the next feature to attract customers and let something else handle fault tolerance. Only the most massive companies have the resources to devote to true “web scale” operation. Shoehorning such concepts onto small and medium businesses is just stupid, and the wrong set of priorities.

Someone made a comment recently that made me laugh (not at them, but more at the situation). They said they performed some task to make my life easier in the event we need to rebuild a server (a common occurrence in EC2). I couldn’t help but laugh because we hadn’t rebuilt a single server since we left EC2 (coming up on one year in a few months here).

I think it’s great that equipment manufacturers are making their devices more open and more programmatic, adding APIs and other things to make automation easier. I think it’s primarily great because then someone else can come up with the glue that can tie it all together.

I don’t believe system admins should have to interact with such interfaces directly.

At the same time I don’t expect developers to understand operations in depth. Hopefully they have enough experience to be able to handle basic concepts like load balancing (e.g. store session data in some central place, preferably not a traditional SQL database). The whole world often changes when you go from running an application in a development environment to running it in production. The developers use their experience to write the best code that they can, and the systems folks manage the infrastructure (whether it is cloud based or home grown) and operate it in the best way possible. That can mean anything from separating out configuration files so people can’t easily see passwords, to inserting load balancers in between tiers, to splitting out how application code is deployed, to something as simple as log rotation scripts.

If you were to look at my scripts you may laugh (depending on your skill level) – I try to keep them clean but they are certainly not up to programmer standards; no, I’ve never used “use strict” in Perl, for example. My scripting is simple, so doing things sometimes takes me many more lines than it would take someone more experienced in the trade. This has its benefits though – it makes it easier for more people to be able to follow the logic should they need to, and it still gets the job done.

The original article seemed to focus more on scripts, while the discussion on Slashdot at some points really got into programming, with one person saying they wrote Apache modules?!

As one person in the discussion thread on Slashdot pointed out, heavy automation can hurt just as much as help. One mistake in the wrong place and you could take the systems down far faster than you can recover them. This has happened to me on more than one occasion of course. One time in particular I was looking at a CFengine configuration file, saw some logic that appeared to be obsolete, and removed a single character (a ! which told CFengine not to apply that configuration to that class). CFengine then went and wiped out my Apache configurations. When I made the change I was very sure that what I was doing was right, but in the end it wasn’t. That happened seven years ago but I still remember it like it was yesterday.
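To show how much weight a single negation character can carry, here is a small shell sketch of the general idea. It is purely illustrative: this is not CFengine syntax or behaviour, and the `guard_matches` function and the class names are invented for the example. A guard names a class of hosts, and a leading `!` inverts which hosts the guarded configuration applies to.

```bash
#!/usr/bin/env bash
# Illustration only: not CFengine syntax. guard_matches and the class
# names below are hypothetical.

# guard_matches GUARD CLASS...
# Succeeds when a host carrying the given classes should receive the
# configuration protected by GUARD. A leading "!" negates the guard.
guard_matches() {
    local guard="$1"
    shift
    local negate=0
    local wanted="$guard"
    case "$guard" in
        '!'*)
            negate=1
            wanted="${guard#?}"   # strip the leading "!"
            ;;
    esac

    local found=0
    local class
    for class in "$@"; do
        if [ "$class" = "$wanted" ]; then
            found=1
        fi
    done

    # Plain guard: apply when the class is present.
    # Negated guard: apply when the class is absent.
    [ "$found" != "$negate" ]
}

# The same web server host, evaluated with and without the "!".
if guard_matches '!webserver' linux webserver; then
    echo "with !: apply"
else
    echo "with !: skip"       # this branch runs
fi

if guard_matches 'webserver' linux webserver; then
    echo "without !: apply"   # this branch runs
else
    echo "without !: skip"
fi
```

Nothing about the one-character edit looks dangerous in a diff, which is exactly why a dry run or a test against a single host is worth the time before a change like this goes out everywhere.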

System administrators should not have to program – scripting certainly is handy and I believe that is important (not critical – it’s not at the top of my list for skills when hiring), just keep an eye out for complexity and supportability when you’re doing that stuff.