I mentioned not long ago that I was going co-lo once again. I was co-lo for a while for my own personal services, but then my server started to act up (it would be 6 years old if it were still alive today) with disk “failure” after failure, or at least that’s what the 3ware card was predicting; eventually it stopped complaining and the disk never died. So I thought – do I spend a few grand to buy a new box or go “cloud”? I knew up front cloud would cost more in the long run, but I ended up going cloud anyway as a stop gap – I picked Terremark because it had the highest quality design at the time (still does).

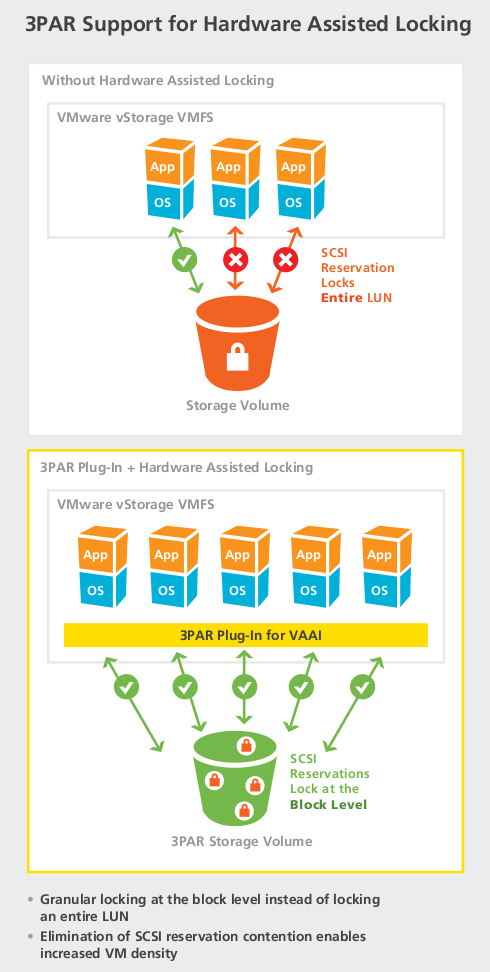

During my time with Terremark I never had any availability issues. There was one day with some high latency on their 3PAR arrays, though they found & fixed whatever it was pretty quickly (it didn’t impact me all that much).

I had one main complaint with regards to billing – they charge $0.01 per hour for each open TCP or UDP port on their system, and they have no way of doing 1:1 NAT. For a web server or something this is no big deal, but I needed a half dozen or more ports open per system (mail, dns, vpn, ssh etc), even after cutting down on ports I might not need, so it starts to add up. Indeed, about 65% of my monthly bill ended up being these open TCP and UDP ports.
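To put the per-port charge in perspective, here is a rough sketch of the arithmetic in Python. The $0.01/hour rate comes from above; the 730-hour month is my own assumption (8760 hours / 12), and the function names are made up for illustration. The second function works backwards from a bill, using the roughly $250/mo total and the 65% share, which may not describe exactly the same month.

```python
HOURS_PER_MONTH = 730        # assumption: average month (8760 hours / 12)
PORT_RATE_PER_HOUR = 0.01    # per open TCP/UDP port, from the post


def monthly_port_cost(open_ports, rate=PORT_RATE_PER_HOUR, hours=HOURS_PER_MONTH):
    """Monthly charge for keeping `open_ports` ports open all month."""
    return open_ports * rate * hours


def ports_implied_by_bill(monthly_bill, port_share,
                          rate=PORT_RATE_PER_HOUR, hours=HOURS_PER_MONTH):
    """How many always-open ports would account for `port_share` of a bill."""
    return monthly_bill * port_share / (rate * hours)


# Six ports on one system, open all month
print("6 ports: $%.2f/mo" % monthly_port_cost(6))

# Ports implied by 65% of a ~$250 bill (both figures are approximate)
print("implied ports: %.1f" % ports_implied_by_bill(250, 0.65))
```

So a single open port runs about $7.30 a month under these assumptions, which is why a handful of services per box adds up so fast.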

Once both of my systems were fully spun up (the 2nd system only recently got fully spun up as I was too lazy to move it off of co-lo) my bill was around $250/mo. My previous co-lo was around $100/mo and I think I had them throttle me to 1Mbit of traffic (this blog was never hosted at that co-lo).

The one limitation I ran into on their system was that they could not assign more than 1 IP address for outbound NAT per account. In order to run SMTP I needed each of my servers to have its own unique outbound IP, so I had to make a 2nd account to run the 2nd server. Not a big deal for me (though it ended up being a pain for them, since their system wasn’t set up to handle such a situation), since I only ran 2 servers (and the communications between them were minimal).

As I’ve mentioned before, the only part of the service that was truly “bill for what you use” was bandwidth usage, and for that I was charged between 10-30 cents/month for my main system and 10 cents/month for my 2nd system.

Oh – and they were more than willing to set up reverse DNS for me, which was nice (and required for running a mail server IMO). I had to agree to a lengthy little contract that said I wouldn’t spam in order for them to open up port 25. Not a big deal. The IP addresses were “clean” as well, no worries about blacklisting.

Another thing that would have been nice to have is billing based on resource pools; as usual they charge for what you provision (per VM) instead of what you use. When I talked to them about their enterprise cloud offering they charged for the resource pool (unlimited VMs in a given amount of CPU/memory), but this is not available on their vCloud Express platform.

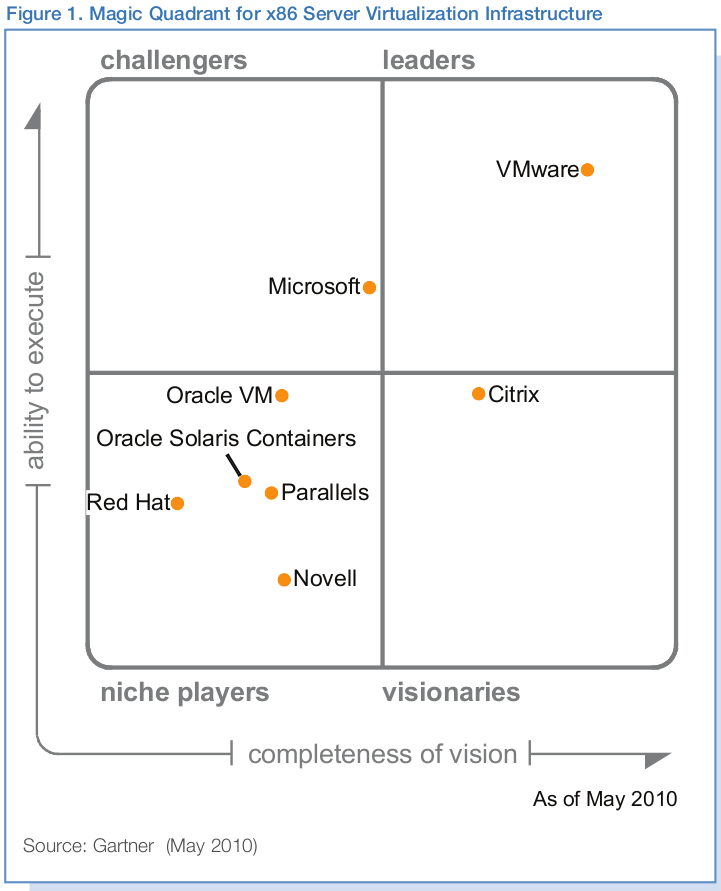

It was great to be able to VPN to their systems to use the remote console (after I spent an hour or two determining the VPN was not going to work in Linux, despite my best efforts to extract Linux versions of the VMware console plugin and try to use them), mount an ISO over the VPN and install the OS. That’s how it should be. I didn’t need the functionality, but I don’t doubt I would have been able to run my own DHCP/PXE server there as well if I wanted to install additional systems in a more traditional way. Each user gets their own VLAN, is protected by a Cisco firewall, and is load balanced by a Citrix load balancer.

A couple of months ago the thought came up again of off site backups. I don’t really have much “critical” data but I felt I wanted to just back it all up, because it would be a big pain if I had to reconstruct all of my media files for example. I have about 1.7TB of data at the moment.

So I looked at various cloud systems including Terremark, but it was clear pretty quickly that no cloud company was going to be able to offer this service in a cost effective way, so I decided to go co-lo again. Rackspace was a good example; they have a handy little calculator on their site. This time around I went and bought a new, more capable server.

So I went to a company I used to buy a ton of equipment from in the bay area and they hooked me up with not only a server with ESXi pre-installed on it but co-location services (with “unlimited” bandwidth), and on-site support for a good price. The on-site support is mainly because I’m using their co-location services(which in itself is a co-lo inside Hurricane Electric) and their techs visit the site frequently as-is.

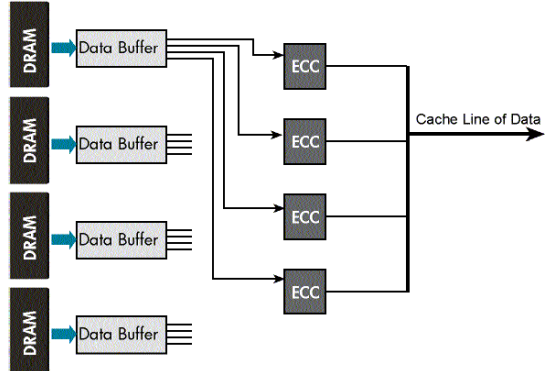

My server has a single socket quad core processor, 4x2TB SAS disks (~3.6TB usable, which also matches my usable disk space at home, which is nice), a 3ware RAID controller with battery backed write-back cache, a little USB thing for ESXi (I’d rather have ESXi on the HDD but 3ware is not supported for booting ESXi), 8GB of registered ECC RAM and redundant power supplies. I went with SAS because VMware doesn’t support VMFS on SATA (though technically you can do it), and the price premium for SAS wasn’t nearly as high as I was expecting. It also has decent remote management with a web UI, remote KVM access, remote media etc. For co-location I asked for (and received) 5 static IPs (3 IPs for VMs, 1 IP for ESX management, 1 IP for out of band management).

My bandwidth needs are really tiny, typically 1GB/month, though now with off site backups that may go up a bit (in bursts). The only real drawback to my system is that the SAS card does not have full integration with vSphere, so I have to use a CLI tool to check the RAID status. At some point I’ll need to hook up Nagios again and run a monitor to check on the RAID status. Normally I set up the 3ware tools to email me when bad things happen, which is pretty simple, but that’s not possible when running vSphere.
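For what it’s worth, a Nagios check for this could be pretty small. Below is a rough Python sketch, not something I’m actually running. The exit codes (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN) are the standard Nagios plugin convention, but the output format it parses (lines like `u0  RAID-5  OK ...`), the status names, and the command passed to `run_check` are all assumptions that would need adjusting to whatever the real CLI tool prints.

```python
import subprocess

# Standard Nagios plugin exit codes
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3

# Assumption: statuses that mean "working on it" rather than "broken"
TRANSIENT = {"REBUILDING", "VERIFYING", "INITIALIZING"}


def classify(cli_output):
    """Turn RAID CLI output into (exit_code, message).

    Assumes (hypothetically) one line per unit that looks like
    'u0  RAID-5  OK ...': unit name, type, then status.
    """
    units = []
    for line in cli_output.splitlines():
        fields = line.split()
        if len(fields) >= 3 and fields[0].startswith("u") and fields[0][1:].isdigit():
            units.append((fields[0], fields[2].upper()))
    if not units:
        return UNKNOWN, "RAID UNKNOWN - no units found in output"
    bad = [(name, status) for name, status in units if status != "OK"]
    if not bad:
        return OK, "RAID OK - %d unit(s) healthy" % len(units)
    detail = ", ".join("%s=%s" % pair for pair in bad)
    if all(status in TRANSIENT for _, status in bad):
        return WARNING, "RAID WARNING - " + detail
    return CRITICAL, "RAID CRITICAL - " + detail


def run_check(command):
    """Run the (placeholder) CLI command and classify what it prints."""
    try:
        result = subprocess.run(command, capture_output=True, text=True, check=False)
    except OSError as exc:
        return UNKNOWN, "RAID UNKNOWN - could not run command: %s" % exc
    return classify(result.stdout)
```

A wrapper script would call `run_check([...])` with the real command, print the message and exit with the returned code, which is all Nagios needs.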

The amount of storage on this box I expect to last me a good 3-5 years. The 1.7TB includes every bit of data that I still have going back a decade or more – I’m sure there’s a couple hundred gigs at least I could outright delete because I may never need it again. But right now I’m not hurting for space so I keep it there, on line and accessible.

My current setup

- One ESX virtual switch on the internet that has two systems on it – a bridging OpenBSD firewall, and a Xangati system sniffing packets(still playing with Xangati). No IP addresses are used here.

- One ESX virtual switch for one internal network. The bridging firewall has another interface here, my main two internet facing servers have interfaces here, and my firewall has another interface here as well for management. Only public IPs are used here.

- One ESX virtual switch for another internal network for things that will never have public IP addresses associated with them. I run NAT on the firewall (on its 3rd/4th interfaces) for these systems to get internet access.

I have a site to site OpenVPN connection between my OpenBSD firewall at home and my OpenBSD firewall on the ESX system, which gives me the ability to directly access the back end, non routable network on the other end.

Normally I wouldn’t deploy an independent firewall, but I did in this case because, well I can. I do like OpenBSD’s pf more than iptables(which I hate), and it gives me a chance to play around more with pf, and gives me more freedom on the linux end to fire up services on ports that I don’t want exposed and not have to worry about individually firewalling them off, so it allows me to be more lazy in the long run.

I bought the server before I moved. Once I got to the bay area I went and picked it up and kept it over a weekend to copy my main data set to it, then took it back; they hooked it up again and I switched my systems over to it.

The server was about $2900 w/1 year of support, and co-location is about $100/mo. So for disk space alone, my cost the first year (taking into account the cost of the server) is about $0.09 per GB per month (3.6TB), with subsequent years being $0.033 per GB per month (I took a swag at the support cost for the 2nd year, so that is included). That doesn’t even take into account the virtual machines themselves and the cost savings there over any cloud. And I’m giving the cloud the benefit of the doubt by not even looking at their cost of bandwidth, just the cost of capacity. If I was using the cloud I probably wouldn’t allocate all 3.6TB up front, but even if you use 1.8TB, which is about what I’m using now with my VMs and stuff, the cost still handily beats everyone out there.
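For anyone who wants to check my math, here is the calculation as a small Python sketch. The $2900, $100/mo and 3.6TB figures are from above; treating 3.6TB as 3600GB is my simplification, and the 2nd year support cost is backed out of the $0.033 figure rather than being a quoted price.

```python
def cost_per_gb_month(capex, monthly_fee, months, capacity_gb, extra=0.0):
    """Average cost per GB per month over `months`, spreading the up-front
    hardware cost (and any extra one-off costs) across the whole period."""
    total = capex + monthly_fee * months + extra
    return total / (months * capacity_gb)


CAPACITY_GB = 3600   # 3.6TB usable, counted as decimal GB (simplification)
SERVER = 2900        # includes 1 year of support
COLO = 100           # per month

# Year one: server plus 12 months of co-lo
first_year = cost_per_gb_month(SERVER, COLO, 12, CAPACITY_GB)

# Support cost implied by the $0.033/GB/month figure for later years
implied_support = 0.033 * CAPACITY_GB * 12 - COLO * 12

# Two-year average, including that implied 2nd year support cost
two_year = cost_per_gb_month(SERVER, COLO, 24, CAPACITY_GB, extra=implied_support)

print("first year:   $%.3f/GB/mo" % first_year)
print("2nd yr support (implied): $%.0f" % implied_support)
print("2 year avg:   $%.3f/GB/mo" % two_year)
```

Swapping `CAPACITY_GB` for 1800 shows what the numbers look like if you only count the space actually in use.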

What’s craziest is that I lack the purchasing power of any of these clouds out there; I’m just a lone consumer who bought one server. Granted, I’m confident the vendor I bought from gave me excellent pricing due to my past relationship, though probably still not on the scale of the likes of Rackspace or Amazon, and yet I can handily beat their costs without even working for it.

What surprised me most during my trips doing cost analysis of the “cloud” is how cheap enterprise storage is. I mean, Terremark charges $0.25/GB per month (on SATA powered 3PAR arrays), and Rackspace charges $0.15/GB per month (I believe Rackspace just uses DAS). I kind of would have expected the enterprise storage route to cost say 3-5x more, not less than 2x. When I was doing real enterprise cloud pricing, storage for the solution I was looking for typically came in at 10-20% of the total cost, with 80%+ of the cost being CPU+memory. For me it’s a no-brainer – I’d rather pay a bit more and have my storage on a 3PAR of course (when dealing with VM-based storage, not bulk archival storage). With the average cost of my storage for 3.6TB over 2 years coming in at $0.06/GB per month, it makes more sense to just do it myself.

I just hope my new server holds up. My last one lasted a long time, so I sort of expect this one to last a while too; it got burned in before I started using it and the load on the box is minimal. I would not be too surprised if I can get 5 years out of it – how big will HDDs be in 5 years?

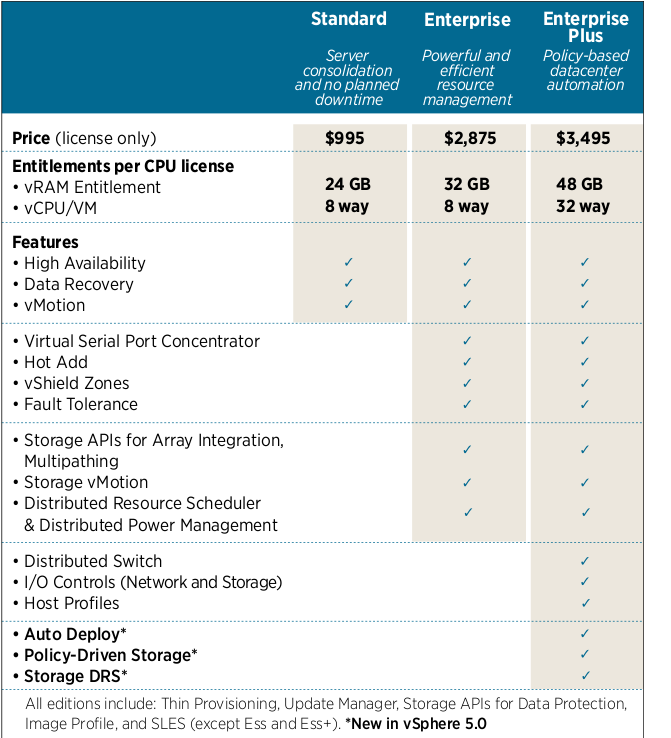

I will miss Terremark because of the reliability and availability features they offer, they have a great service, and now of course are owned by Verizon. I don’t need to worry about upgrading vSphere any time soon as there’s no reason to go to vSphere 5. The one thing I have been contemplating is whether or not to put my vSphere management interface behind the OpenBSD firewall(which is a VM of course on the same box). Kind of makes me miss the days of ESX 3, when it had a built in firewall.

I’m probably going to have to upgrade my cable internet at home. Right now I only have 1Mbps upload, which is fine for most things, but if I’m doing off site backups too I need more performance. I can go as high as 5Mbps with a more costly plan: 50Mbps down/5Mbps up for about $125, but I might as well go all in and get 100Mbps down/5Mbps up for $150. Both plans have a 500GB cap with a $0.25/GB charge for going over. Seems reasonable. I certainly don’t need that much downstream bandwidth (not even 50Mbps, I’d be fine with 10Mbps), but I really do need as much upstream as I can get. Another option could be driving a USB stick to the co-lo, which is about 35 miles away. I suppose that is a possibility but still kind of a PITA given the distance, though if I got one of those 128G+ flash drives it could be worth it. I’ve never tried hooking up USB storage to an ESX VM before, I assume it works? hmmmm..
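To get a feel for how long an initial off site backup would take, here is a quick back-of-the-envelope sketch in Python. It assumes the link runs flat out the whole time with no protocol overhead, uses decimal units (1.7TB = 1700GB), and assumes the 500GB cap counts uploads, none of which I’ve confirmed. The function names are just for illustration.

```python
import math


def transfer_days(size_gb, upload_mbps):
    """Days to push `size_gb` through an uplink of `upload_mbps`,
    assuming a saturated link and no overhead (optimistic)."""
    bits = size_gb * 1e9 * 8
    seconds = bits / (upload_mbps * 1e6)
    return seconds / 86400


def overage_cost(used_gb, cap_gb=500, per_gb=0.25):
    """Overage charge for a month in which `used_gb` was transferred."""
    return max(0, used_gb - cap_gb) * per_gb


def usb_trips(size_gb, stick_gb=128):
    """Number of drives to the co-lo with a `stick_gb` flash drive."""
    return math.ceil(size_gb / stick_gb)


DATA_GB = 1700  # about 1.7TB

print("1Mbps up: %.0f days" % transfer_days(DATA_GB, 1))
print("5Mbps up: %.1f days" % transfer_days(DATA_GB, 5))
print("overage if sent in one billing month: $%.2f" % overage_cost(DATA_GB))
print("trips with a 128GB stick: %d" % usb_trips(DATA_GB))
```

Either way the first full copy is the painful part; after that only the changes need to go over the wire.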

Another option I have is AT&T Uverse, which I’ve read good and bad things about – but looking at their site, their service is slower than what I can get through my local cable company (which truly is local, they only serve the city I am in). Another reason I didn’t go with Uverse for TV is that I suspected the technology they are using is not compatible with my Tivo (with cable cards). AT&T doesn’t mention their upstream speeds specifically, so I’ll contact them and try to figure that out.

I kept the motherboard/cpus/ram from my old server, my current plan is to mount it to a piece of wood and hang it on the wall as some sort of art. It has lots of colors and little things to look at, I think it looks cool at least. I’m no handyman so hopefully I can make it work. I was honestly shocked how heavy the copper(I assume) heatsinks were, wow, felt like 1.5 pounds a piece, massive.

While my old server is horribly obsolete, one thing it does have over my new server is support for more RAM. The old server could go up to 24GB (I had a max of 6GB in it at the time), while the new server tops out at 8GB (and has 8GB in it). Not a big deal as I don’t need 24GB for my personal stuff, but I just thought it was kind of an interesting comparison.

This blog has been running on the new server for a couple of weeks now. One of these days I need to hook up some log analysis stuff to see how many dozen hits I get a month.

If Terremark could fix three areas of their vCloud Express service – one being resource pool-based billing, another being relaxing the costs behind opening multiple ports in the firewall (or just giving 1:1 NAT as an option), and the last one being thin provisioning friendly billing for storage – it would really be a much more awesome service than it already is.