[ I will of course miss all my friends more than anything else, this post is not about friends but rather places ]

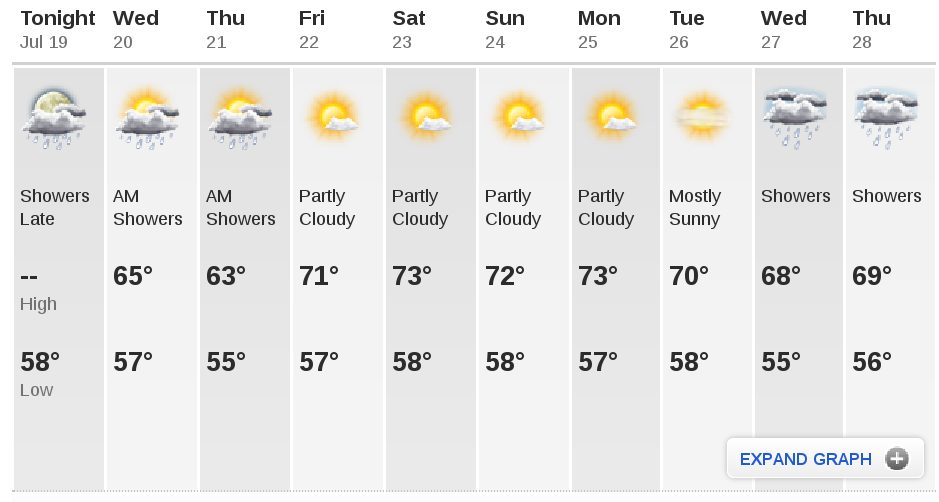

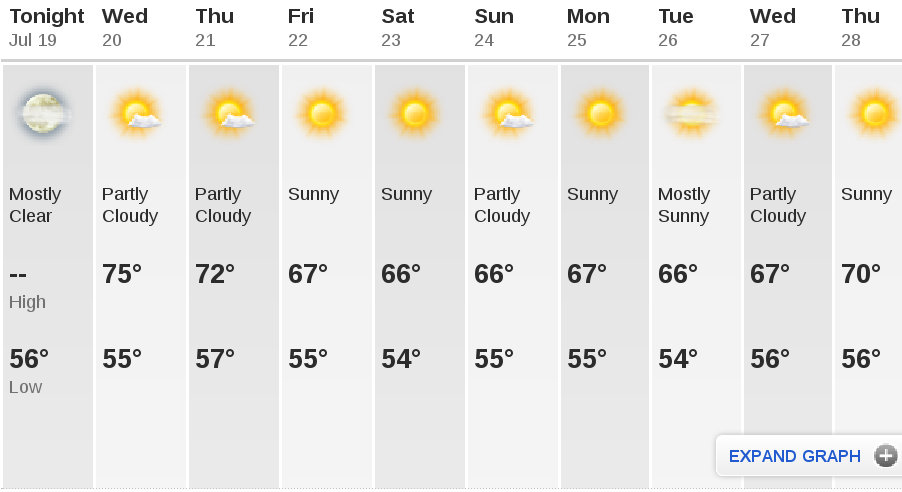

I have been thinking about my upcoming move – my last full day in the area is this Thursday, July 21st. (It was originally going to be Saturday, but because of moving issues it had to be moved up.)

I mentioned in my original post that I don’t like Seattle. I can’t think of a single part of Seattle that I like: I don’t like the one-way streets, the lack of parking (which, according to a recent news report, was the #1 complaint of tourists), or the traffic, and I’m really not a fan of the culture in general. I don’t think anything gets me more frustrated as a driver than driving in downtown Seattle. I was there yesterday, in fact, trying to find something in particular, and I ended up just coming back home in frustration; I never bothered to go into any stores because parking was such a pain. Part of the culture in Seattle that I don’t like is that they are increasingly anti-car. Which is a fine view to have – you just won’t catch me dead living there! I do like the east side though (where I live). It is interesting because a lot of folks I know in Seattle are the opposite: they hate the east side but love Seattle.

So I tried to think of whether there was anything I did like about Seattle. I came up with two places in Seattle that I do love, and will miss. I’m not sure if I’ll be able to find a close replacement for either down in the Bay Area though.

The first place is far and away first place; there is no competition.

Cowgirls Inc

If you haven’t been to Cowgirls you really should check it out. Words cannot properly describe the Cowgirls experience on a Friday or Saturday night after 9 PM. Don’t bother going in at 5, 6, or 7; it’s pretty much like any other bar then. After 9 things change though. I usually get there at 9, and by about midnight I have a tough time standing up, so it’s time to go. (I missed out on the show at their 5th Anniversary party: I happened to go that particular night but could not stay an extra 30 minutes to see it because, well, I was destroyed.) Oh, and you’ll be doing a lot of standing, as they remove the bar stools at around 9:30-10 PM.

On these nights it is often wall-to-wall people, so I stick close to the bar and defend my position (you’ll understand why if you go). The words “kick ass” don’t do it justice. I took another friend there for the first time this past Friday and he was blown away too; it totally exceeded his expectations. A couple of months ago I took another guy who moved to Seattle last year, and he seemed like he was in shock most of the time. He absolutely loved it.

I worked across the street from this place (which is on the corner of 1st and King street in Pioneer Square), back when it opened in 2004, though I was a very different person back then. We used to do software deployments that would start at 10pm and typically end around 2 or 3AM.

My co-workers liked to go there (at what seemed to be around 6-7pm) to play pool and eat the peanuts because they have you throw the shells on the floor and my co-workers loved that. The place is often pretty dead that early so no action.

My Favorite Cowgirl (moved away in 2010)

Even though I only went a few times a year the staff knew me pretty well when I came in, so that was nice. I am terrible with names and although I heard some of their names over the years I never retained them. My favorite cowgirl (left) was awesome, she did the craziest things including dancing on my shoulders on several occasions, the heels hurt the first couple of times but it was good pain 🙂

She moved away from Seattle last year which made me sad.

A funny story about the company I worked at that was across the street from this place: the last boss I had at that company was a really heavy drinker. One night he, the VP, and a bunch of other folks (not including me, as I wasn’t at the company at that point) went to Cowgirls and drank a bunch.

The VP dared the director (my former boss) to jump up on the bar and start dancing. He apparently did it, and as a result one of the bouncers pulled him off, got him in a headlock, and kicked him out. Speaking of bouncers (they are called regulators there), there are quite a few, I would say upwards of 10 at any given time.

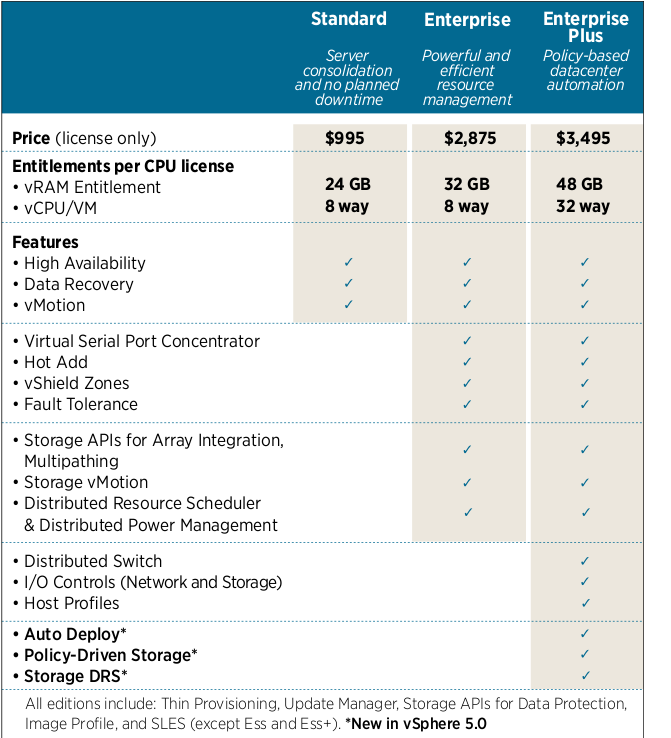

Another funny story about Cowgirls and VMware of all things – a local VMware rep was coming to meet me at the last company I was at, and his boss told him the wrong address (I gave the right address). So he called me up right when he was supposed to be there and asked where I was, and I told him he had gone to the wrong place. Here is how the conversation went (this was almost a year ago, so the words are not completely accurate):

- Him: so where are you located then?

- Me: on the corner of 1st and King street

- Him: where is that near?

- Me: it’s in Pioneer square

- Him: hmm, any more tips you can give?

- Me: It’s near the stadiums

- Him: Still not completely sure

- Me: Do you know where Cowgirls Inc is?

- Him: OH YEAH! Cowgirls!

- Me: I’m right across the street from that

- Him: I’ll be there in 10 minutes!

The place is run really smoothly despite the crowds; they really have it nailed down. I’ve never seen anything get out of hand in all the times I’ve been there. The staff is very talented, friendly, and skilled at churning out drinks at a rapid rate.

If you go and have a good time don’t be afraid to tip big. Drink-wise it’s a very affordable bar; even drinking with a friend I don’t think my tab (before tip) has ever been above $100. My tips at Cowgirls are anywhere from 80% to 130% (give or take; I don’t try to do percentages, I just come up with some number based on the bill). I also tip in cash. On a couple of occasions I have had companies reject the tips on my credit card bill, I guess because they thought it was too much. They can’t reject cash though! The staff are worth every penny.

There is really no other bar I’d rather go to, at least of the ones I have been to in the U.S. It’s not a place to go to if you want to have a casual conversation, because you’ll just be yelling at each other to be heard. So depending on the situation, perhaps go to a quiet bar first and talk about whatever you want to talk about, then go to Cowgirls and have some real fun.

I have only two complaints about the place – one they don’t keep their web site up to date (they had a pop up running that was more than a year old at one point). The second, which has been more of a drawback for out of town friends than me is that they aren’t open every night. They are open every Friday and Saturday, and some other days if there is a sporting event on. I can’t tell you how many times I’ve driven folks there on off nights only to find them closed.

I will miss the place greatly, I do plan to come back and visit. I’ve known several of the staff there for several years now so I should see some familiar faces if I am back in town in a few months to a year.

On to number two, it is a distant but solid second.

Pecos Pit

I was introduced to this place while I was working at that same company in Pioneer Square back in 2003. I don’t know how famous it might be but it is the only place I will order a pulled pork bbq sandwich from. I’m actually going there today at noon to meet some friends.

I don’t believe I had ever had pulled pork until I had it at Pecos Pit, I’m not even sure if I had even heard of pulled pork until Pecos.

Pecos Pit is located on 1st ave, about a mile south of the stadiums in Seattle. They are open Monday – Friday only as far as I know and hours are something like 11-3PM. Outdoor seating only (or take out). Parking can be limited at times, oh and it’s cash only too (there is an ATM across the street in a pinch though I’ve never used it).

Probably the main reason I love Pecos is the sauce & spice. My standard order (which some of the staff know so well that I don’t even have to say it) is Pecos Pork, Hot, Spike & Beans. Yes, I order the hottest thing on their menu. Few people do, but I have been having it for so long that I got used to it a long time ago. I started out with medium way back when, but at some point it didn’t seem hot enough (I think they adjusted their recipe to make things less spicy, but I’m not sure). I switched to hot, and while it really is hot, for me at least it’s by no means too hot. Of all the friends that I have taken there or met there, I think maybe only one or two others have gotten hot; most usually seem to get mild (I know of at least one who complains that even mild is too hot).

I live in Bellevue, very close to Dixie’s BBQ, which is much more famous in the area because of the man. While the man passed away a year or two ago, they still have the man sauce, which really is the hottest thing I have ever had. I enjoy the heat it gives but I really do not enjoy the flavor. I also don’t generally enjoy the flavor of the pulled pork at Dixie’s. I’d much, MUCH rather drive to Seattle and get Pecos over Dixie’s. The only reason I would go to Dixie’s myself is to get a jar of the man sauce to use at home; they sell what I think are 2 ounce jars of the sauce for something like $10. While I haven’t used it at home in many years, back when I did, one jar of that stuff literally lasted me a year. I would apply it to meat with toothpicks to get the heat inside the meat and then cook it. It really was good (and very hot). I would have to say the man sauce is probably 3 to 5 times hotter, or more, than the hottest thing at Pecos.

I’ve had pulled pork at a couple other places as well but for me, nothing compares to Pecos (I’m sure real bbq from down south or east or whatever is as good or better but I haven’t been able to try any of that). So for the most part when I see pulled pork on a menu I don’t bother ordering it, unless I’m at Pecos.

Pecos is not a place to go if you’re not a meat eater; their menu is limited to pork, beef, and beans for the most part (and the beans have meat in them). I’ve never tried the beef, never felt the need. I have heard that sometimes they run out of pork if the days are really busy, but I haven’t come across that myself. Lines can be long on good weather days, so be prepared to wait.

Well there you have it – there are more places I will miss from up here, but they aren’t unique and I’ll be able to find replacements for them pretty easily down in the Bay Area.

These places will be harder. I know there are a bunch of other bars around the country that are sort of like Cowgirls. I haven’t been to any myself, but one of my friends who travels a bunch has, and he told me that, at least of the ones he’s been to, nothing compares to Cowgirls Inc.