It seems HDS has finally decided to buy out BlueArc after what was either two or three failed attempts at an IPO.

BlueArc, along with my buddies over at 3PAR, is among the few storage companies that put real silicon to work in their systems for the best possible performance. Their architecture is quite impressive, and the performance (that is, for their mid-range system) shows it.

I have only been exposed to their older stuff (5-6 year old technology) directly, not their newer technology. But even their older stuff was very fast and efficient, very reliable and had quite a few nifty features as well. I think they were among the first to do storage tiering (for them at the file level).

[ warning – a wild tangent gets thrown in here somewhere ]

While their NAS technology was solid (IMO), their disk technology was not. They relied on LSI storage, and the quality of the storage was very poor overall. First off, whoever set up the system we had set it up with everything running RAID 5 12+1. Then there were the long RAID rebuild times, the constantly moving hot spots because of the number of different tiers of storage we had, and the fact that the 3 Titan head units were not clustered, so we had to take hard downtime for software upgrades (not BlueArc’s fault, other than perhaps making it too expensive to do clustered heads when the company bought the stuff, long before I was there). Every time we engaged with BlueArc, 95% of our complaints were about the disk. For the longest time they tried to insist that “disk doesn’t matter”, that you could put any storage system behind the BlueArc and it would be the same.

After the 3rd or 4th engagement BlueArc significantly changed their tune (not sure what prompted it). They now acknowledged the weakness of the low tier storage and were promoting the use of HDS AMS storage (USP was, well, waaaaaaaay out of our price range), since they were a partner of HDS back then as well. The HDS proposal fell far short of the design I had with 3PAR and Exanet, which at the time was 3PAR’s partner of choice.

If I could have chosen, I would have used BlueArc for NAS and 3PAR for disk. 3PAR was open to the prospect, of course. BlueArc claimed they had contacted 3PAR to start working with them, but 3PAR said that never happened. Later BlueArc acknowledged they were not going to try to work with 3PAR (or any other storage company other than LSI or HDS; I think 3PAR was one digit too long for them to handle).

Given the BlueArc system lacked the ability to provide us with any truly useful disk performance statistics, it was tough coming up with a configuration that I thought would work as a replacement. There were a large number of factors involved, and each of them had a fairly wide margin of error. You could say I pulled a number out of my ass, but I did do more calculations than that (I have about a dozen pages of documentation I wrote at the time on the project). Really though, at the end of the day it was a stab in the dark as far as initial configuration.

BlueArc as a company didn’t really have their support stuff all figured out yet at the time. The first sign was when a scheduled downtime for a software upgrade that was intended to take 2-3 hours ended up taking 10-11 hours, because there was a problem and BlueArc lacked the proper escalation procedures to resolve it quickly enough. Their CEO sent us a letter later saying that they had fixed that process in the company. The second sign was when I went to them and asked them to confirm the drive type/size of all of our disks so I could do some math for the replacement system. They did a new audit (had to be on site to do it for some reason), and it turned out we had about 80 more spindles than they thought we had (we bought everything through them). I don’t know how you lose track of that many disks for support, but somehow it fell through the cracks. Another issue was that we paid BlueArc to relocate the system to another facility (again, before I was at the company), and whoever moved it didn’t do a good job: they accidentally plugged both power supplies of a single shelf into the same PDU. Fortunately it was a non-production system. A PSU blew at one point, which took out the PDU, which then took out that shelf, which then took out the file system the shelf was on.

Even after all of that, my main problem with their solution was the disks. LSI was not up to snuff and the proposal from HDS wasn’t going to cut it. I told my management that there was no doubt HDS could come up with a solution that would work; it’s just that what they had proposed would not. (They didn’t even have thin provisioning at the time. 3PAR was telling me HDS was pairing USP-Vs with AMSs in order to try to compete in the meantime. They did not propose that to us.) What they proposed was a combination of poor performing SATA, on RAID-6 no less, for bulk storage and higher performing 15k RPM disks for the higher tier/less storage. HDS/BlueArc felt it was equivalent to what I had specified through 3PAR and Exanet, not understanding the architectural advantages the 3PAR system had over the proposed HDS design (going into specifics will take too long, and you probably know them by now anyways if you’re here). Not to mention what seemed like sheer incompetence among the HDS team that was supporting us: it seemed they couldn’t answer anything I asked without engaging someone from Japan, and even then I rarely got a comprehensible answer.

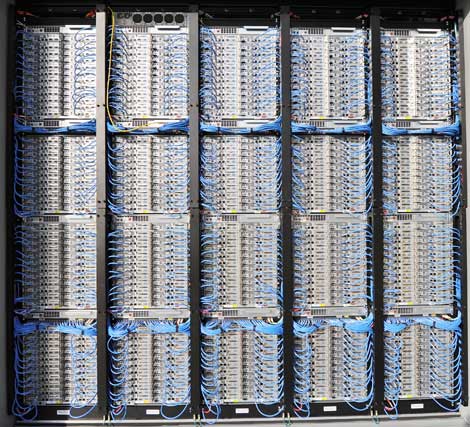

So in the end we replaced a 4-rack BlueArc system with what could have been a single rack of 3PAR plus a few rack units for the Exanet, but we had to put the 3PAR in two racks due to weight constraints in the data center. We went from 500+ disks (a mix of SATA-I and 10k RPM FC) to 200 disks of SATA-II (RAID 5 5+1). With the change we got the advantage of being able to run Fibre Channel (which we ran to all of our VM boxes as well as primary databases) and iSCSI (which we used here and there; 3PAR’s iSCSI support has never been as good as I would have liked to see, though for anything serious I’d rather use FC anyways, and that’s what 3PAR’s customers did, which led to some neglect on the iSCSI front).

Half the floor space, half the power usage, roughly the same amount of usable storage, about the same amount of raw storage. We put the 3PAR/Exanet system through its paces with our most I/O intensive workload at the time and it absolutely screamed. I mean it exceeded everyone’s expectations (mine included). But that was only the beginning.

This is a story I like to tell on 3PAR reference calls when I do them which is becoming more and more rare these days. In the early days of our 3PAR/Exanet deployment the Exanet engineer tried to assure me that they were thin provisioning friendly, he had personally used 3PAR+Exanet in the past and it worked fine. So with time constraints and stuff I provisioned a file system on the Exanet box not thinking too much about the 3PAR end of things. It’s thin provisioning friendly right? RIGHT?

Well, not so much. Before you knew it the system was in production and we started dumping large amounts of data on it, and deleting large amounts of data on it. I found out in a few weeks that the Exanet box was preferring to allocate new space rather than reclaim deleted space. I did some calculations and the result was not good. If we let the system continue at this rate, we were going to exceed the capacity of the 3PAR box if the Exanet file system was allowed to grow to its full potential. Not good.. Compound that with the fact that we were at the maximum addressable capacity of a 2-node 3PAR box: if I had to add even 1 more disk to the system (not that adding 1 disk is possible in that system due to the way disks are added, the minimum is 4), I would have had to put in 2 more controllers. Which, as you might expect, is not exactly cheap. So I was looking at what could have been either a very costly downtime to do data migration or a very costly upgrade to correct my mistake.

Dynamic Optimization to the rescue. This really saved my ass. I mean really, it did. When I built the system I used RAID 5 3+1 for performance (for 3PAR that is roughly 8% slower than RAID 10, and 3PAR fast RAID 5 is probably on par with many other vendors’ RAID 10 due to the architecture).

So I ran some more calculations and determined that if I could get to RAID 5 5+1 I would have enough space to survive. So I began the process, converting roughly a half dozen LUNs at a time, 24 hours a day, 7 days a week. It took longer than I expected, since the 3PAR was routinely getting hammered from daily activities from all sides. It took about 5 months in the end to convert all of the volumes. Throughout the process nobody noticed a thing. The array was converting volumes 24 hours a day for 5 months straight and nobody noticed (except me, who was babysitting it hoping I could beat the window). If I recall right I probably had 3-4 weeks of buffer space; if my conversions had taken an extra month I would have exceeded the capacity of the system. So I got lucky, I suppose, but I also bought the system knowing I could make such course corrections online without impacting applications for just that kind of event. I just didn’t expect the event to be so soon and on such a large scale.
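For anyone who wants to see why going from 3+1 to 5+1 buys space, here is a rough sketch of the arithmetic in Python. It only looks at the parity overhead of each RAID 5 layout; the function name is mine, and it ignores spares, metadata and anything else the array reserves, so treat it as an approximation rather than what the 3PAR actually reports.

```python
from fractions import Fraction


def usable_fraction(data_disks: int, parity_disks: int = 1) -> Fraction:
    """Fraction of raw capacity left for data in a RAID set of data+parity."""
    return Fraction(data_disks, data_disks + parity_disks)


# The two layouts from the story: built at 3+1, converted to 5+1.
old_layout = usable_fraction(3)
new_layout = usable_fraction(5)

# Relative gain in usable space from the same raw capacity.
relative_gain = new_layout / old_layout - 1

print(f"RAID 5 3+1 usable share of raw: {float(old_layout):.1%}")
print(f"RAID 5 5+1 usable share of raw: {float(new_layout):.1%}")
print(f"Extra usable space after converting: {float(relative_gain):.1%}")
```

So the same raw disk gives about one ninth more usable space at 5+1, which is the kind of margin I was counting on.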

One of the questions I had for HDS at the time we were looking at them was whether they could do the same online RAID conversions. The answer? Absolutely they can. But the fine print was (and I assume still is) that you needed blank disks to do the migration to. Since 3PAR’s RAID is performed at the sub-disk level, no blank disks are required, only blank “chunklets” as they call them. Basically you just need enough empty space on the array to mirror the LUN/volume to the new RAID level, and then you break the mirror and eliminate the source (this is all handled transparently with a single command and some patience, depending on system load and the volume of data in flight).
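To put numbers on the "enough empty space to mirror the volume" part, here is a small sketch, again in Python. The 1200 GB volume size and the function names are made up for illustration, and it assumes the only overhead is RAID 5 parity, which is a simplification of what the array really does.

```python
def raw_needed(usable_gb: int, data_disks: int, parity_disks: int = 1) -> float:
    """Raw space a volume occupies at a given RAID data+parity layout."""
    return usable_gb * (data_disks + parity_disks) / data_disks


def conversion_plan(usable_gb: int, src_data: int, dst_data: int) -> dict:
    """Raw space held before, needed free during, and freed after a conversion."""
    src_raw = raw_needed(usable_gb, src_data)
    dst_raw = raw_needed(usable_gb, dst_data)
    return {
        "source_raw_gb": src_raw,           # held by the old layout
        "free_raw_needed_gb": dst_raw,      # must be empty while the mirror exists
        "net_freed_gb": src_raw - dst_raw,  # returned once the source is removed
    }


# Hypothetical 1200 GB volume going from RAID 5 3+1 to RAID 5 5+1.
plan = conversion_plan(1200, src_data=3, dst_data=5)
for name, value in plan.items():
    print(f"{name}: {value:.0f}")
```

The point is that each conversion temporarily needs free space for the whole new copy and only gives back the difference at the end, which is why converting a handful of LUNs at a time made sense on an array that was nearly full.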

As time went on we loaded the system with ever more processes and connected ever more systems to it as we got off the old BlueArc(s). I kept seeing the IOPS (and disk response times) on the 3PAR going up..and up.. I really thought it was going to choke, I mean we were pushing the disks hard, with sustained disk response times in the 40-50ms range at times(with rare spikes to well over 100ms). I just kept hoping for the day when we would be done and the increase would level off, and it did for the most part eventually. I built my own custom monitoring system for the array for performance trending, since I didn’t like the query based tool they provided as much as what I could generate myself(despite the massive amount of time it took to configure my tool).

I did not know a 7200RPM SATA disk could do 127 IOPS of random I/O.

We had this one process that would dump up to, say, 50GB of data from upwards of 40-50 systems simultaneously as fast as they could go. Needless to say, when this happened it blew out all of the caches across the board and brought things to a grinding halt for some time (typically 30-60 seconds). I would see the behavior on the NAS system, log in to my monitoring tool and just see it hang while it tried to query the database (which was on the storage). I would cringe, and wait for the system to catch up. We tried to get them to re-design the application so it was more thoughtful of the storage but they weren’t able to. Well, they did re-design it one time (for the worse). I tried to convince them to put it on Fusion IO on local storage in the servers but they would have no part of it. Ironically, not long after I left the company they went out and bought some Fusion IO for another project. I guess as long as the idea was not mine it was a good one.. The storage system was entirely a back office thing, no real time end user transactions ever touched it, which meant we could live with the higher latency that came from pushing the SATA drives 30-50% beyond engineering specifications.

At the end of the first full year of operation we finally got budget to add capacity to the system. We had shrunk the overall theoretical I/O capacity by probably two thirds vs the previous array, and had absorbed what seemed like almost 200% growth on top of that during the first year, and the system held up. I probably wouldn’t have believed it if I hadn’t seen it (and lived it) personally. I hammered 3PAR as often as I could to increase the addressable capacity of their systems, which was limited by the operating system architecture. It doesn’t take a rocket scientist to see that their systems had 4GB of control cache (per controller), which is a common limit for 32-bit software. But the software enhancement never came, while I was there at least. It is there in some respect in the new V-class, though as mentioned the V-class seems to have had an arbitrary raw capacity limit placed on it that does not align with the amount of control cache it can have (up to 32GB per controller). With 64-bit software and more control cache I could have doubled or tripled the capacity of the system without adding controllers.

Adding the two extra controllers did give us one thing I wanted – Persistent cache, that’s just an awesome technology to have and you simply can’t do that kind of thing on a 2-controller system. Also gave us more ports than I knew what to do with.

What happened to the BlueArc? Well, after about 10 months of trying to find someone to sell it to, or give it to, we ended up paying someone to haul it away. When HDS/BlueArc was negotiating with us on their solution they tried to harp on how we could leverage our existing disk from BlueArc in the new solution as another tier. I didn’t have to say it; my boss did, which made me sort of giggle. He said the operational costs of running the old BlueArc disk (support was really high, plus power and co-lo space) were more than the disks were worth. BlueArc/HDS didn’t have any real response to that, other than perhaps to nod their heads acknowledging that we’re smart enough to realize that fact.

I still would like to use BlueArc again, I think it’s a fine platform, I just want to use my own storage on it 🙂

This ended up being a lot longer than I expected! Hope you didn’t fall asleep. Just got right to 2600 words.. there.