I stumbled upon this ZDNet debate a few days ago and only got around to reading it now. It came out about a month ago; I forget where I saw it, I think on Planet V12n or something.

Anyway, it’s two people who sound experienced (I don’t see information on their particular backgrounds), each talking up their respective solution. Two things really stood out to me:

- The guy pimping Microsoft was all about talking about a solution that doesn’t exist yet (“it’ll be fixed in the next version, just wait!”)

- The guy pimping VMware was all about talking about how cost doesn’t matter because VMware is the best.

I think they are both right – and both wrong.

It’s good enough

I really believe that, in Hyper-V’s case and also in KVM/RHEV’s case, these products will be “good enough” for the next generation of projects (in Hyper-V’s case, whenever Windows 8 comes out) for a large number (a majority) of use cases out there. I don’t see Linux-centric shops considering Hyper-V, or Windows-centric shops considering KVM/RHEV/etc., so VMware will still obviously have a horse in the race (as the pro-VMware person harps on in the debate).

Cost is becoming a very important issue

One thing that really rubbed me the wrong way was when the pro-VMware person said this:

Some people complain about VMware’s pricing but those are not the decision makers, they are the techies. People who have the financial responsibility for SLAs and customers aren’t going to bank on an unproven technology.

I’m sorry but that is just absurd. If cost weren’t an issue, the techies wouldn’t be complaining about it; they complain because they know first hand that it is an issue in their organizations. They know first hand that they have to justify the purchase to those decision makers. The company I’m at now was in that same situation – the internal IT group could only get the most basic level of vSphere approved for purchase for their internal IT assets (this was a year or two or three ago). I hear them constantly complaining about the lack of things like vMotion, shared storage, etc. Cost was a major issue, so the environment was built with disparate systems and storage and the cheap version of vSphere.

Give me an unlimited budget and I promise, PROMISE you will NEVER hear me complain about cost. I think the same is true of most people.

I’ve been there, more than once! I’ve done that exact same thing (Well in my case I managed to have good storage in most of the cases).

Those decision makers weigh the cost of maintaining that SLA against whatever solution they’re going to provide. Breaking SLAs can be more cost effective than achieving them, especially if they are absurdly high SLAs. I remember at one company I was at they signed all these high-level SLAs with their new customers, so I turned around and said – hey, in order to achieve those SLAs we need to do this laundry list of things. I think maybe 5-10% of the list got done before the budget ran out. You can continue to meet those high SLAs if you’re lucky, even when you don’t actually have the ability to sustain a failure and maintain uptime. More often than not, such smaller companies prefer to rely on luck rather than do things right.

Another company I was at had what could have been called a disaster in itself, during the same time I was working on a so-called disaster recovery project (no coincidence). Despite the disaster, at the end of the day management canned the disaster recovery project (which everyone agreed would have saved a lot of everything had it been in place at the time of the disaster). It’s not that the budget wasn’t approved – it was approved. The problem was that management wanted to do another project that they had massively under-budgeted for, and decided to cannibalize the DR budget to give to this other pet project.

Yet another company I was at signed a disaster recovery contract with SunGard just to tick the check box to say they had DR. The catch was – the entire company knew up front, before they signed, that they would never be able to utilize the service. IT WOULD NEVER WORK. But they signed it anyway because they needed a plan, and they didn’t want to pay for a plan that would have worked.

VMware touting VM density as king

I’ve always found it interesting how VMware touts VM density: they show an automatic density advantage for VMware, which automatically reduces VMware’s costs regardless of the competition. This example, comparing VMware against Oracle VM, was posted to one of their blogs a few days ago.

They tout their memory sharing, their memory ballooning, and their memory compression all as things that can increase density vs the competition.

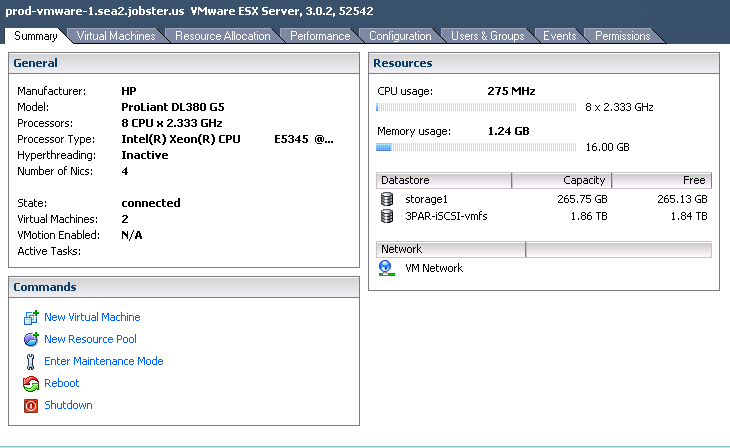

My own experience with memory sharing on VMware, at least with Linux, is pretty simple – it doesn’t work. It doesn’t give results. Looking at one of my ESX 4.1 servers (yes, no ESXi here), which has 18 VMs on it and 101GB of memory in use, how much memory am I saving with transparent page sharing?

3,161 MB – or about 3%. Nothing to write home about.
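For what it’s worth, here is that percentage worked out as a quick Python sketch. The variable names are mine, and it assumes 1 GB = 1024 MB.

```python
# Transparent page sharing savings on the ESX 4.1 host described above.
shared_mb = 3161   # memory reclaimed by page sharing, in MB
in_use_gb = 101    # memory in use on the host, in GB

in_use_mb = in_use_gb * 1024               # assumption: 1 GB = 1024 MB
savings_pct = shared_mb / in_use_mb * 100  # share of in-use memory saved

print(f"{savings_pct:.1f}% of in-use memory saved by page sharing")
```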

For production loads, I don’t want to be in a situation where memory ballooning or memory compression kicks in. I want to keep performance high, and that means no swapping of any kind from any system. The last thing I want is for my VMs to start thrashing my storage with active swapping. Don’t even think about swapping if you’re running Java apps either: once garbage collection kicks in, your VM will grind to a halt while it performs that operation.

I would, however, like a method to keep the Linux buffer cache under control. Whether it is ballooning that specifically targets file system cache or some other method, that would be a welcome addition to my systems.

Another welcome addition would be the ability to flag VMs and/or resource pools to pro-actively utilize memory compression (regardless of memory usage on the host itself). Good candidates would be low priority VMs, VMs that sit at 1% CPU usage most of the time, and VMs where the added latency of compression on otherwise idle CPU cores isn’t that important (again – stay away from actively swapping!). As a bonus, provide the ability to limit the CPU capacity consumed by compression activities, such as limiting it to the resource pool that the VM is in, and/or having a per-host setting where you could say – set aside up to 1 CPU core or whatever for compression; if you need more than that, don’t compress unless it’s an emergency.

Yet another welcome addition (YAWA) with regards to compression would be to provide me with compression ratios – how effective is the compression when it’s in use? Recommend to me VMs with low utilization whose memory I could pro-actively reclaim by compressing it, or maybe only portions of the memory are worth compressing? The hypervisor, with the assistance of VMware Tools, has the ability to see what is really going on in the guest by nature of having an agent there. The actual capability doesn’t appear to exist now, but I can’t imagine it being too difficult to implement. It would be sort of along the lines of pro-actively inflating the memory balloon.

So, for what it’s worth, for me you can take any VM density advantage for VMware off the table when it comes to memory. For me, VM density is more about the efficiency of the code and how well it handles all of those virtual processors running at the same time.

Taking the Oracle VM blog post above, VMware points out that Oracle supports only 128 VMs per host vs VMware at 512. Good example – but they really need to show how well all those VMs can work on the same host, and how much overhead there is. If my average VM CPU utilization is 2-4%, does that mean I can squeeze 512 VMs on a 32-core system (memory permitting, of course), when in theory I should be able to get around 640 – memory permitting again?
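A rough sketch of that back-of-the-envelope math in Python. It assumes single-vCPU VMs, utilization measured as a percentage of one core, and zero scheduling overhead, none of which holds on a real host; the function name is made up for illustration. Under those assumptions, a ceiling of around 640 on 32 cores works out to budgeting 5% of a core per VM, a little above the 2-4% average.

```python
def cpu_bound_vm_ceiling(cores: int, pct_of_core_per_vm: int) -> int:
    """Theoretical VM count if CPU were the only limit (no overhead, 1 vCPU each)."""
    # Integer math: each core offers 100 "percent units" of CPU time.
    return cores * 100 // pct_of_core_per_vm

for pct in (2, 4, 5):
    ceiling = cpu_bound_vm_ceiling(32, pct)
    print(f"{pct}% of a core per VM -> up to {ceiling} VMs on 32 cores")
```

The point of the exercise is only that a supported maximum of 512 VMs says nothing about how close a host can get to these ceilings once real overhead is counted.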

Oh, the number of times I was logged into an Amazon virtual machine that was suffering from CPU problems, only to see that 20-30% of the CPU usage was being stolen from the VM by the hypervisor. From the sar man page:

%steal

Percentage of time spent in involuntary wait by the virtual CPU or CPUs while the hypervisor was servicing another virtual processor.

Not sure if Windows has something similar.
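On Linux, the steal counter is also exposed in the aggregate `cpu` line of `/proc/stat`, so a steal percentage can be computed from two samples. A minimal Python sketch follows, assuming a kernel that reports the steal column; the helper names are mine, not part of any tool.

```python
from typing import Tuple

def cpu_totals(stat_line: str) -> Tuple[int, int]:
    """Return (total_jiffies, steal_jiffies) from the aggregate 'cpu' line."""
    fields = stat_line.split()
    if fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    # Field order: user nice system idle iowait irq softirq steal guest guest_nice.
    # Only the first eight are summed; guest time is already counted in user/nice.
    values = [int(v) for v in fields[1:9]]
    return sum(values), values[7]

def steal_percent(before: str, after: str) -> float:
    """Percentage of CPU time stolen between two samples of the 'cpu' line."""
    total_before, steal_before = cpu_totals(before)
    total_after, steal_after = cpu_totals(after)
    elapsed = total_after - total_before
    if elapsed <= 0:
        return 0.0
    return 100.0 * (steal_after - steal_before) / elapsed

def read_cpu_line(path: str = "/proc/stat") -> str:
    """Read the first line of /proc/stat (Linux only)."""
    with open(path) as f:
        return f.readline()
```

To use it, call `read_cpu_line()` twice a few seconds apart and pass both results to `steal_percent()`.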

Back to costs vs Maturity

I was firmly in the VMware camp for many years. I remember purchasing ESX 3.1 (Standard edition – no budget for higher versions) for something like $3,500 for a two-socket license. I remember how cheap it felt at the time given the power it gave us to consolidate workloads. I would have been more than happy (myself at least) to pay double for what we got at the time. I remember the arguments I got into over VMware vs Xen with my new boss at the time, and the stories of the failed attempts to migrate to Xen after I left the company.

The pro-VMware guy in the original ZDNet debate doesn’t see the damage VMware is doing to itself when it comes to licensing. VMware can do no wrong in his eyes. I’m sure there are plenty of other die-hards out there in the same boat. It’s the old motto that nobody ever got fired for buying IBM, right? I can certainly respect the angle, though as much as it pains my own history to admit it, I think the tides have changed and VMware will have a harder and harder time pitching its wares in the future, especially if it keeps playing games with licensing on a technology which its own founders (I think; I wish I could find the article) predicted would become a commodity by about now. With the perceived slow uptake of vSphere 5 amongst users, I think the trend is already starting to form. The problem with the uptake isn’t just the licensing, of course; it’s that for many situations there isn’t a compelling reason to upgrade – “it’s good enough” has set in.

I can certainly, positively understand VMware charging premium pricing for premium services, an Enterprise Plus Plus or whatever. But don’t vary the price based on provisioned utilization; that’s just plain shooting yourself (and your loyal customers) in the feet. The provisioned part is another sticking point for me – the hypervisor has the ability to measure actual usage, yet they tie their model to provisioned capacity, whether or not the VM is actually using the resource. It is a simpler model, but it makes planning more complicated.

The biggest scam in this whole cloud computing era so many people think we’re getting into is the vast chasm between provisioned and utilized capacity: companies want to charge you for provisioned capacity, while customers want to over-provision so they don’t have to make constant changes to manage resources, knowing that they won’t be using all that capacity up front.
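To put the chasm in concrete terms, here is a small Python sketch comparing the two billing models. Every number in it (the rate and both memory figures) is made up for illustration and does not come from any provider’s price list.

```python
RATE_CENTS_PER_GB = 500  # hypothetical monthly price per GB of RAM, in cents

def monthly_bill_cents(gb: int, rate_cents_per_gb: int = RATE_CENTS_PER_GB) -> int:
    """Flat per-GB monthly charge; the same formula serves both billing models."""
    return gb * rate_cents_per_gb

provisioned_gb = 16  # what the customer asked for (made-up figure)
utilized_gb = 4      # what the VM actually uses on average (made-up figure)

provisioned_bill = monthly_bill_cents(provisioned_gb)
utilized_bill = monthly_bill_cents(utilized_gb)
gap = provisioned_bill - utilized_bill

print(f"billed on provisioned: ${provisioned_bill / 100:.2f}")
print(f"billed on utilized:    ${utilized_bill / 100:.2f}")
print(f"paid for idle capacity: ${gap / 100:.2f}")
```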

The technology exists, it’s just that few people are taking advantage of it and fewer yet have figured out how to leverage it (at least in the service provider space from what I have seen).

Take Terremark (now Verizon), a VMware-based public cloud provider (and one of only two partners listed on VMware’s site for this now old program). They built their systems on VMware and their storage on 3PAR. Yet for this vCloud Express offering there is no ability to leverage resource pools and no ability to utilize thin provisioning (from a customer standpoint). I have to pay attention to exactly how much space I provision up front, and I don’t have the option to manage it like I would on my own 3PAR array.

Now Terremark has an enterprise offering that is more flexible and does offer resource pools, but this isn’t available on their on-demand offering. I still have the original quote Terremark sent me for the disaster recovery project I was working on at the time; it makes me want to either laugh or cry to this day. I have to give Terremark credit, though: at least they have an offering that can utilize resource pools, while most others (well, I haven’t heard of even one – though I haven’t looked recently) do not. (Side note: I hosted my own personal stuff on their vCloud Express platform for a year, so I know it first hand. It was a pretty good experience; what drove me away primarily was their billing for each and every TCP and UDP port I had open at an hourly rate. It’s also good to not be on their platform anymore, so I don’t risk them killing my system if they see something I say and take it badly.)

Obviously the trend in system design over recent years has bitten into the number of licenses that VMware is able to sell – and if their claims are remotely true (that 50% of the world’s workloads are virtualized, and of that they have 80% market share), it’s going to be harder and harder to maintain a decent growth rate. It’s quite a pickle they are in: customers in large part apparently haven’t bought into the more premium products VMware has provided (the ones that are not part of the hypervisor), so they felt the pressure to increase the cost of the hypervisor itself to drive that growth in revenue.

Bottom Line

VMware is in trouble.

Simple as that.