The most recent incarnation of this debate seemed to start with a somewhat interesting article over at Wired, which talked to Miguel de Icaza, a pretty famous Linux desktop developer who is mostly known for what seemed to be a controversial stance: implementing Microsoft .NET on Linux in the form of Mono.

And he thinks the real reason Linux lost is that developers started defecting to OS X because the developers behind the toolkits used to build graphical Linux applications didn’t do a good enough job ensuring backward compatibility between different versions of their APIs. “For many years, we broke people’s code,” he says. “OS X did a much better job of ensuring backward compatibility.”

It has since blown up a bit more, with lots more people giving their two cents. As a Linux desktop user (who is not a developer) for roughly the past 14 years, I think I can speak with some authority based on my own experience. As I think back, I really can’t think of anyone I know personally who has run Linux on the desktop for as long as I have, or, more to the point, who tried it and didn’t give up on it before much time had passed. For the most part I can understand why.

For the longest time Linux advocates (myself included) hoped Linux could establish a foothold as something that was good enough for basic computing tasks, whether it’s web browsing, checking email, basic document writing etc. There are a lot of tools and toys on Linux desktops, but most seem to have less function than form, at least compared to their commercial counterparts. The iPad took this market opportunity away from Linux, though even without the iPad there were no signs that Linux was on the verge of being able to capitalize on that market.

Miguel’s main argument seems to be around backwards compatibility, an argument I raised somewhat recently. Backwards compatibility has really been the bane of Linux on the desktop, and for me at least it has had just as much to do with the kernel and other user space stuff as with the various desktop environments.

Linux on the desktop can work fine if:

- Your hardware is well supported by your distribution – if it isn’t, this will stop you before you get very far at all

- You can live within the confines of the distribution – if you have any needs that aren’t provided as part of the stock system you are probably in for a world of hurt.

Distributions like Ubuntu, and SuSE before it (honestly not sure what, if anything, has replaced Ubuntu today), have made tremendous strides in improving Linux usability from a desktop perspective. Live CDs have helped a lot too; being able to give the system a test run without ever installing it to your HD is nice.

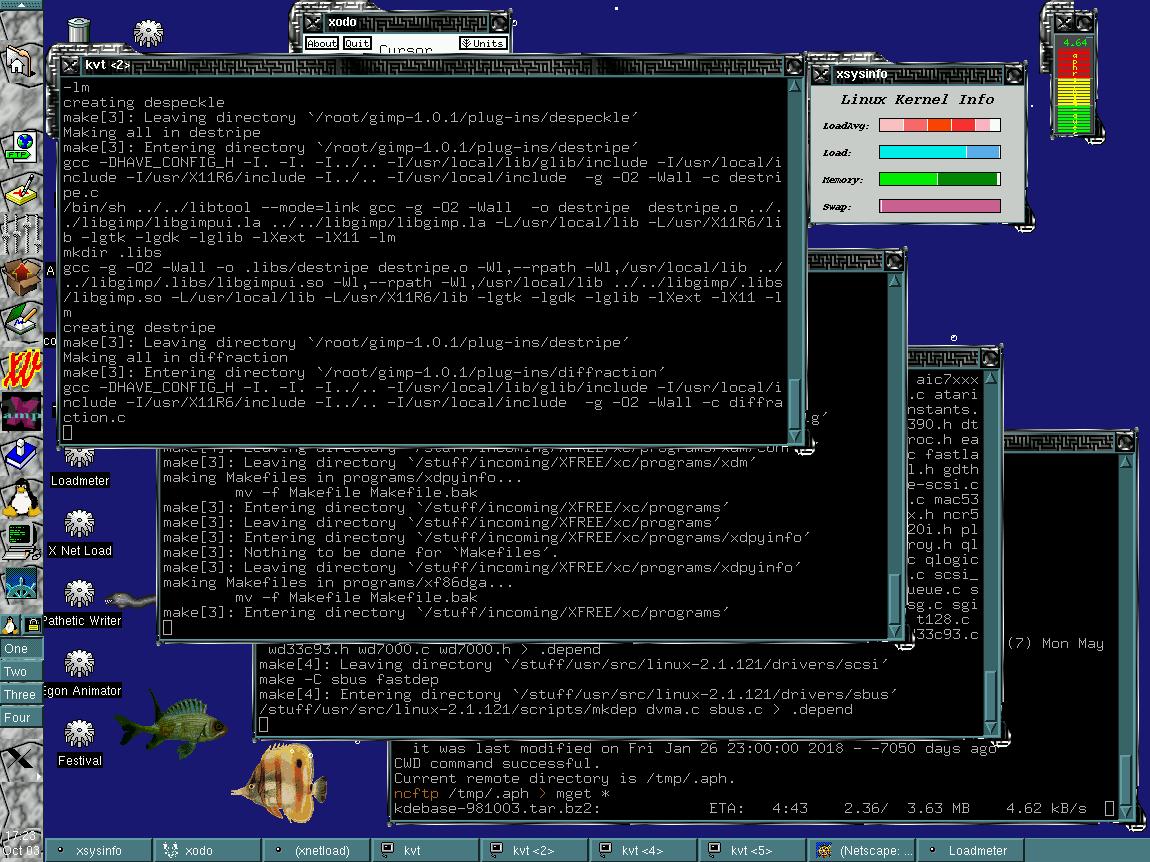

I suspect most people today don’t remember the days when the installer was entirely text based and you had to fight with XFree86 to figure out the right mode lines for your monitor before X11 would work. Fortunately I don’t think anyone really uses dial-up modems anymore, so the problems we had back when modems went almost entirely to software in the form of winmodems are no longer an issue. For a while I forked out the cash for Accelerated X, a commercial X11 server that had nice tools and was easy to configure.

The creation of the Common Unix Printing System, or CUPS, was also a great innovation. Printing on Linux before that was honestly almost futile with basic printers; I can’t imagine what it would have been like with more complex printers.

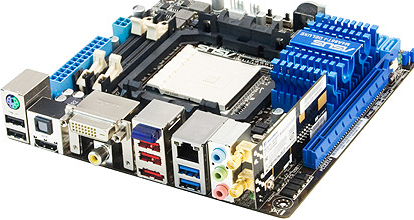

Start at the beginning though – the kernel. The kernel does not maintain, and never really has maintained, a stable binary interface for drivers, at least not to the point where I can take a generic driver for, say, a 2.6.x series kernel and use that same single driver on Ubuntu, Red Hat, Gentoo or whatever. You don’t have to look further than how many binary kernel drivers VMware includes with their VMware Tools package to see how bad this is. On the version of VMware Tools I have on the server that runs this blog there are 197 – yes, 197 – different kernels supported in there:

- 47 for Ubuntu

- 55 for Red Hat Enterprise

- 57 for SuSE Linux Enterprise

- 39 for various other kernels

In an ideal world I would expect maybe 10 kernels for everything, including both 64-bit and 32-bit kernels.
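To put those numbers side by side, here is a quick tally in Python. The counts are copied straight from the list above; the only thing I have added is the arithmetic. Worth noting that the four figures as listed add up to 198, one more than the 197 total, so one of them is probably off by one.

```python
# Prebuilt kernel counts per distribution family, as quoted in the list above.
prebuilt = {
    "Ubuntu": 47,
    "Red Hat Enterprise": 55,
    "SuSE Linux Enterprise": 57,
    "various other kernels": 39,
}

total = sum(prebuilt.values())
ideal = 10  # the "maybe 10 kernels for everything" figure from the text

# Print each family with its share of the total, largest first.
for name, count in sorted(prebuilt.items(), key=lambda kv: -kv[1]):
    print(f"{name:<24}{count:>4}  {count / total:6.1%}")

print(f"{'total':<24}{total:>4}")
print(f"ratio to the ideal of {ideal}: {total / ideal:.1f}x")
```

Either way, that is roughly twenty times as many kernels as I would hope to see.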

If none of those kernels work, then yes, VMware does include the source for the drivers and you can build them yourself (provided you have the right development packages installed, the process is very easy and fast). But watch out: the next time you upgrade your kernel you may have to repeat the process.
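A rough way to see whether you are about to repeat that process is to check if a module file exists for the kernel you are running. This is only a sketch, assuming the conventional `/lib/modules/<kernel release>/` layout; the function name `find_module` and the module name `vmxnet` are just illustrative, and module files that use hyphens where the loaded module name uses underscores would need extra handling.

```python
import os
import platform


def find_module(name, release=None, root="/lib/modules"):
    """Return paths of module files called `name` built for kernel `release`.

    Assumes modules live under <root>/<release>/ and end in .ko,
    optionally compressed. An empty list means nothing was found.
    """
    release = release or platform.release()
    base = os.path.join(root, release)
    wanted = {name + ext for ext in (".ko", ".ko.gz", ".ko.xz", ".ko.zst")}
    hits = []
    # os.walk yields nothing if the directory does not exist.
    for dirpath, _dirs, files in os.walk(base):
        hits.extend(os.path.join(dirpath, f) for f in files if f in wanted)
    return sorted(hits)


# Example module name only; substitute whichever driver you care about.
module = "vmxnet"
found = find_module(module)
if found:
    print(f"{module}: found for kernel {platform.release()}: {found[0]}")
else:
    print(f"{module}: nothing for kernel {platform.release()}, rebuild needed?")
```

Run it after a kernel upgrade and before rebooting, passing the new release string as the second argument, and you at least find out about the missing driver before the machine comes back up without it.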

I’ve read in the most recent Slashdot discussion where the likes of Alan Cox (haven’t heard his name in years!) said the Linux kernel does have a stable interface, as he can run the same code from 1992 on his current system. My response to that is: then why do we have all these issues with drivers?

One of the things that has improved the state of Linux drivers is virtualization – it slashes the amount of driver code needed by probably 99% running the same virtual hardware regardless of the underlying physical hardware. It’s really been nice not to have to fight hardware compatibility recently as a result of this.

There have been times where device makers have released driver disks for Linux, usually for Red Hat based systems, however these often become obsolete fairly quickly. For some things, like video drivers perhaps, it’s not the end of the world; an experienced user can still install the system, get online and get new drivers.

But if the driver that’s missing is for the storage controller, or perhaps the network card, things get more painful.

I’m not trying to complain, I have dealt with these issues for many years and it hasn’t driven me away — but I can totally see how it would drive others away very quickly, and it’s too bad that the folks making the software haven’t put more of an effort into solving this problem.

The usual answer is “make it open source”, in the form of drivers at least. If the piece of software is widely used then making it open source may be a solution, but I’ve seen time and time again source released that just rots on the vine because nobody has any interest in messing with it (can’t blame them if they don’t need it). If the interface was really stable the driver could probably go unmaintained for several years without needing anyone to look at it (at least through the life of, say, the 2.6.x kernel).

When it comes to drivers and stuff – for the most part they won’t be released as open source, so don’t get your hopes up. I saw one person say that their company didn’t want to release open source drivers because they feared that they might be in violation of someone else’s patents and releasing the source would make it easier for their competition to be able to determine this.

The kernel driver situation is so bad, in my opinion, that distributions for the most part don’t backport drivers into their previous releases. Take Ubuntu for example: I run 10.04 LTS on the laptop I am using now as well as on my desktop at work. I can totally understand if the originally released version doesn’t have the latest e1000e driver (which is totally open source!) for my network card at work. But I do not understand why, more than a year after its release, it still doesn’t have this driver. Instead you either have to manage the driver yourself (which I do – nothing new for me), or run a newer version of the distribution (all that for one simple network driver?!). This version of the distribution is supported until April 2013. Please note I am not complaining, I deal with the situation – I’m just stating a fact. This isn’t limited to Ubuntu either; it has applied to pretty much every Linux distribution I’ve ever used. I saw that Ubuntu recently updated Skype to the latest Linux version on 10.04 LTS, but they still haven’t budged on that driver (no, I haven’t filed a bug/support request, I don’t care enough to do it – I’m simply illustrating a problem that is caused by the lack of a good driver interface in the kernel – I’m sure this applies to FAR more than just my little e1000e).

People rail on the manufacturers for not releasing source, or not releasing specs. Releasing them apparently was pretty common back in the 70s and early 80s. It hasn’t been common in my experience since I have been using computers (going back to about 1990). As more and more things are moved from hardware to software, I’m not surprised that companies want to protect this by not releasing source/specs. Many manufacturers have shown they want to support Linux, but if you force them to do so by making them build a hundred different kernel modules for the various systems, they aren’t going to put the effort into doing it. The barrier to entry needs to come down to get more support.

I can understand where the developers are coming from though. They don’t have an incentive to make the interfaces backwards and forwards compatible, since that involves quite a bit more work (much of it boring), and instead prefer to just break things as the software evolves. I had been hoping that as the systems matured this would become less commonplace, but it seems that hasn’t been the case.

So I don’t blame the developers…

But I also don’t blame people for not using Linux on the desktop.

Linux would have come quite a bit further if there were a common way to install drivers for everything from network cards to storage controllers, printers, video cards, whatever, and have these drivers work across kernel versions, even across minor distribution upgrades. This has never been the case though (I also don’t see anything on the horizon; I don’t see this changing in the next 5 years, if it changes ever).

The other issue with Linux is working within the confines of the distribution, which is similar to the kernel driver problem. Different distros are almost always more than just different packaging of the same software: the underlying libraries are often incompatible between distributions, so a binary built on one, especially a complex one like a KDE or Gnome application, won’t work on another. There are exceptions like Firefox, Chrome etc., though other than perhaps static linking in some cases I’m not sure what they do that other folks can’t. So the amount of work to support Linux from a desktop perspective is really high. I’ve never minded static linking; to me it’s a small price to pay to improve compatibility given the current situation. Sure, you may end up loading multiple copies of the libraries into memory (maybe you haven’t heard, but it’s not uncommon to get 4-8GB on a computer these days), and sure, if there is a security update you have to update the applications that carry the older libraries as well. It sucks I suppose, but from my perspective it sucks a lot less than what we have now. Servers are an entirely different beast, run by (hopefully) experienced people who can handle this situation better.
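If you want to see the library problem for yourself, `ldd` lists the shared libraries a binary needs and flags the ones the system can’t resolve. Below is a small sketch that parses that output in Python. The function names are my own, and the sample text is made up to mimic the usual `ldd` layout, not captured from a real system.

```python
import subprocess


def parse_ldd(output):
    """Map each shared library named in `ldd` output to its resolved path.

    Libraries reported as "not found" map to None. Lines without "=>"
    (the dynamic loader) and entries with no path (the vDSO) are skipped.
    """
    libs = {}
    for line in output.splitlines():
        if "=>" not in line:
            continue
        name, _, target = line.partition("=>")
        # Drop the trailing load address, e.g. "(0x00007f...)".
        path = target.split("(")[0].strip()
        if path == "not found":
            libs[name.strip()] = None
        elif path:
            libs[name.strip()] = path
    return libs


def missing_libraries(binary):
    """Run `ldd` on a binary and return the libraries it cannot resolve."""
    result = subprocess.run(["ldd", binary], capture_output=True, text=True, check=False)
    return sorted(n for n, p in parse_ldd(result.stdout).items() if p is None)


# Made-up sample in the usual ldd layout, for illustration only.
sample = (
    "\tlinux-vdso.so.1 =>  (0x00007fff5a1ff000)\n"
    "\tlibexample.so.4 => not found\n"
    "\tlibc.so.6 => /lib/libc.so.6 (0x00007f2c1a000000)\n"
    "\t/lib64/ld-linux-x86-64.so.2 (0x00007f2c1a400000)\n"
)
for lib, path in parse_ldd(sample).items():
    print(f"{lib}: {path or 'MISSING'}")
```

A statically linked binary has no such list to resolve, which is the whole appeal. Only run `ldd` on binaries you trust, since it may execute code from the file it inspects.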

BSD folks like to tout their compatibility, though I don’t think that is a fair comparison. Comparing two different versions of FreeBSD against Red Hat vs Debian is not fair; comparing two different versions of Red Hat against two different versions of FreeBSD (or NetBSD or OpenBSD or DragonFly BSD, etc.) is more fair. I haven’t tried BSD on the desktop since FreeBSD 4.x; for various reasons it did not give me any reason to continue using it as a desktop and I haven’t had any reason to consider it since.

I do like Linux on my desktop. I ran early versions of KDE (pre 1.0) up until around KDE 2, then switched to AfterStep for a while, eventually switching over to GNOME with Ubuntu 7 or 8, I forget. With the addition of an application called Brightside, GNOME 2.x works really well for me. Though for whatever reason I have to launch Brightside manually each time I log in; setting it to run automatically on login results in it not working.

I also do like Linux on my servers, I haven’t compiled a kernel from scratch since the 2.2 days, but have been quite comfortable working with the issues operating Linux on the server end, the biggest headaches were always drivers with new hardware, though thankfully with virtualization things are much better now.

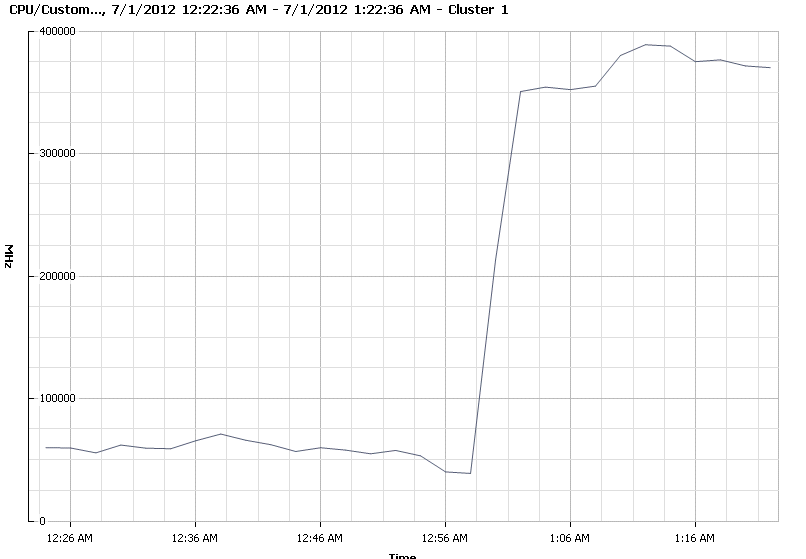

The most recent issue I’ve had with Linux on servers has been some combination of Ubuntu 10.04 with LVM and ext4, along with enterprise storage. Under heavy I/O I have many times seen ext4 come to a grinding halt. I have read that Red Hat explicitly requires that barriers be disabled with ext4 on enterprise storage, though that hasn’t helped me. My only working solution has been to switch back to ext3 (which for me is not an issue). The symptoms are very high system CPU usage and little to no I/O (really, any attempt to do I/O freezes up), and when I turn on kernel debugging the system seems to be flooded with ext4 messages. Nothing short of a complete power cycle can recover the system from that state. Fortunately all of my root volumes are ext3, so it doesn’t prevent someone from logging in and poking around. I’ve looked high and low and have not found any answers. I had never seen this issue on ext3, and the past 9 months have been the first time I have run ext4 on enterprise storage. Maybe it’s a bug specific to Ubuntu, I’m not sure. LVM is vital when maximizing utilization using thin provisioning in my experience, so I’m not about to stop using LVM. As much as 3PAR’s marketing material may say you can get rid of your volume managers – don’t.
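For anyone wanting to check how barriers are set on their own ext4 volumes, the mount options show up in `/proc/mounts`. Here is a small Python sketch, with a function name of my own choosing, that pulls out any explicit barrier option (`barrier=0`, `barrier=1`, `barrier` or `nobarrier`) for each ext4 mount. Whether the kernel lists the option at all when it is left at the default varies by kernel version, so treat `None` as “nothing explicit”, not as proof either way.

```python
def ext4_barrier_settings(mounts_text):
    """Return {mount point: explicit barrier option or None} for ext4 mounts.

    Expects text in /proc/mounts format:
    device, mount point, filesystem type, options, dump, pass.
    """
    settings = {}
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 4 or fields[2] != "ext4":
            continue
        options = fields[3].split(",")
        explicit = [
            o for o in options
            if o in ("barrier", "nobarrier") or o.startswith("barrier=")
        ]
        # If an option somehow appears more than once, report the last one.
        settings[fields[1]] = explicit[-1] if explicit else None
    return settings


try:
    with open("/proc/mounts") as fh:
        current = ext4_barrier_settings(fh.read())
except OSError:
    current = {}  # not on Linux, or /proc is unavailable

for mount_point, option in current.items():
    print(f"{mount_point}: {option or 'no explicit barrier option listed'}")
```

This only tells you what the filesystem was mounted with; it says nothing about whether the storage underneath honors the setting.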