UPDATED I’ve been waiting for this for quite some time: the 12-core AMD Opteron 6100s have finally arrived. AMD did the right thing this time by not waiting to develop a “true” 12-core chip and instead bolting a pair of CPUs together into a single package. You may recall that AMD lambasted Intel when it released its first four-core CPUs a few years ago (composed of a pair of two-core chips bolted together). That strategy paid off well for Intel, and AMD’s market share was hurt badly as a result. It was a painful lesson, and AMD learned from it.

Of course I’d rather have a “true” 12-core processor, but I’m very happy to make do with these Opteron 6100s in the meantime; I don’t want to have to wait another 2-3 years to get 12 cores in a socket.

Some highlights of the processor:

- Clock speeds ranging from 1.7GHz (65W) to 2.2GHz (80W), with a turbo boost 2.3GHz model coming in at 105W

- Prices ranging from $744 to $1,396 in 1,000-unit quantities

- Twelve-core and eight-core models; L2: 512K per core; L3: 12MB shared

- Quad-Channel LV & U/RDDR3, ECC, support for on-line spare memory

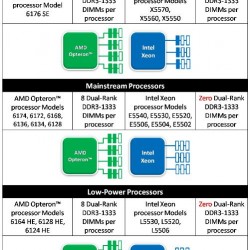

- Supports up to 3 DIMMs/channel, up to 12 DIMMs per CPU

- Quad 16-bit HyperTransport™ 3 technology (HT3) links, up to 6.4 GT/s per link (more than triple HT1 performance)

- AMD SR56x0 chipset with I/O Virtualization and PCIe® 2.0

- Socket compatibility with the planned AMD Opteron™ 6200 Series processor (16 cores?)

- New advanced idle states allowing the processor to idle with less power usage than the previous six core systems (AMD seems to have long had the lead in idle power conservation).
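The memory and HyperTransport figures in the list above follow from simple arithmetic. Here is a rough sketch that derives the DIMM count per CPU and the peak one-direction bandwidth of a single HT3 link from the numbers quoted in the list. The function names are my own, and the bandwidth is a theoretical peak that ignores protocol overhead.

```python
# Figures taken from the highlights list; function names are illustrative.
MEMORY_CHANNELS = 4            # quad-channel DDR3
DIMMS_PER_CHANNEL = 3          # up to 3 DIMMs per channel
HT_LINK_WIDTH_BITS = 16        # each HT3 link is 16 bits wide
HT_TRANSFERS_PER_SEC = 6.4e9   # up to 6.4 GT/s per link


def dimms_per_cpu(channels: int = MEMORY_CHANNELS,
                  per_channel: int = DIMMS_PER_CHANNEL) -> int:
    """Maximum DIMM slots one socket can drive."""
    return channels * per_channel


def ht_link_gbytes_per_sec(width_bits: int = HT_LINK_WIDTH_BITS,
                           transfers_per_sec: float = HT_TRANSFERS_PER_SEC) -> float:
    """Theoretical peak bandwidth of one link, in one direction, in GB/s."""
    bytes_per_transfer = width_bits / 8
    return bytes_per_transfer * transfers_per_sec / 1e9


if __name__ == "__main__":
    print(f"DIMMs per CPU: {dimms_per_cpu()}")
    print(f"HT3 link peak: {ht_link_gbytes_per_sec():.1f} GB/s per direction")
```

That works out to 12 DIMMs per socket and 12.8 GB/s per direction per link.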

The new I/O virtualization looks quite nice as well – AMD-V 2.0, from their site:

Hardware features that enhance virtualization:

- Unmatched Memory Bandwidth and Scalability – Direct Connect Architecture 2.0 supports a larger number of cores and memory channels so you can configure robust virtual machines, allowing your virtual servers to run as close as possible to physical servers.

- Greater I/O virtualization efficiencies –I/O virtualization to help increase I/O efficiency by supporting direct device assignment, while improving address translation to help improve the levels of hypervisor intervention.

- Improved virtual machine integrity and security –With better isolation of virtual machines through I/O virtualization, helps increase the integrity and security of each VM instance.

- Efficient Power Management – AMD-P technology is a suite of power management features that are designed to drive lower power consumption without compromising performance.

- Hardware-assisted Virtualization – AMD-V technology to enhance and accelerate software-based virtualization so you can run more virtual machines, support more users and transactions per virtual machine with less overhead. This includes Rapid Virtualization Indexing (RVI) to help accelerate the performance of many virtualized applications by enabling hardware-based VM memory management. AMD-V technology is supported by leading providers of hypervisor and virtualization software, including Citrix, Microsoft, Red Hat, and VMware.

- Extended Migration – a hardware feature that helps virtualization software enable live migration of virtual machines between all available AMD Opteron™ processor generations.

I’m also happy to see AMD returning to the chipset design business; I was never comfortable with Nvidia as a server chipset maker.

The Register has a pair of great articles on the launch as well. I was kind of annoyed that in the main one I had to scroll so much to get past the Xeon news, which I don’t think they needed to recap in such detail in an article about the Opterons, but oh well.

I thought this was an interesting note on the recent Intel announcement of integrated silicon for encryption –

While Intel was talking up the fact that it had embedded cryptographic instructions in the new Xeon 5600s to implement the Advanced Encryption Standard (AES) algorithm for encrypting and decrypting data, Opterons have had this feature since the quad-core “Barcelona” Opterons came out in late 2007, er, early 2008.

And as for performance –

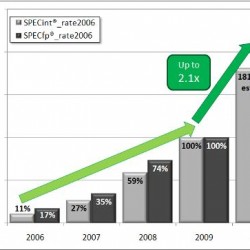

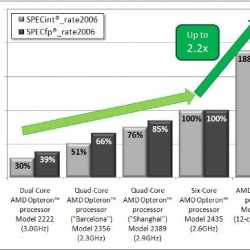

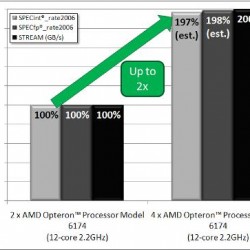

Generally speaking, bin for bin, the twelve-core Magny-Cours chips provide about 88 per cent more integer performance and 119 per cent more floating point performance than the six-core “Istanbul” Opteron 2400 and 8400 chips they replace.

AMD seems geared towards reducing costs and prices as well –

The Opteron 6100s will compete with the high-end of the Xeon 5600s in the 2P space and also take the fight on up to the 4P space. But, AMD’s chipsets and the chips themselves are really all the same. It is really a game of packaging some components in the stack up in different ways to target different markets.

Sounds like a great way to keep costs down by limiting the amount of development required to support the various configurations.

AMD themselves also blogged on the topic with some interesting tidbits of information –

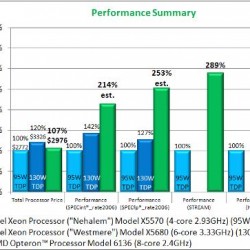

You’re probably wondering why we wouldn’t put our highest speed processor up in this comparison. It’s because we realize that while performance is important, it is not the most important factor in server decisions. In most cases, we believe price and power consumption play a far larger role.

[..]

Power consumption – Note that to get to the performance levels that our competitor has, they had to utilize a 130W processor that is not targeted at the mainstream server market, but is more likely to be used in workstations. Intel isn’t forthcoming on their power numbers so we don’t really have a good measurement of their maximum power, but their 130W TDP part is being beaten in performance by our 80W ACP part. It feels like the power efficiency is clearly in our court. The fact that we have doubled cores and stayed in the same power/thermal range compared to our previous generation is a testament to our power efficiency.

Price – This is an area that I don’t understand. Coming out of one of the worst economic times in recent history, why Intel pushed up the top Xeon X series price from $1386 to $1663 is beyond me. Customers are looking for more, not less for their IT dollar. In the comparison above, while they still can’t match our performance, they really fall short in pricing. At $1663 versus our $1165, their customers are paying 42% more money for the luxury of purchasing a slower processor. This makes no sense. Shouldn’t we all be offering customers more for their money, not less?

In addition to our aggressive 2P pricing, we have also stripped away the “4P tax.” No longer do customers have to pay a premium to buy a processor capable of scaling up to 4 CPUs in a single platform. As of today, the 4P tax is effectively $0. Well, of course, that depends on you making the right processor choice, as I am fairly sure that our competitor will still want to charge you a premium for that feature. I recommend you don’t pay it.

As a matter of fact, a customer will probably find that a 4P server, with 32 total cores (4 x 8-core) based on our new pricing, will not only perform better than our competitor’s highest end 2P system, but it will also do it for a lower price. Suddenly, it is 4P for the masses!
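The pricing percentages in AMD’s post are easy to check. A quick sketch, using only the three list prices quoted above (the helper name is my own):

```python
def percent_more(higher_price: float, lower_price: float) -> float:
    """How much more, in percent, higher_price is than lower_price."""
    return (higher_price / lower_price - 1.0) * 100.0


# List prices quoted in AMD's blog post (USD).
XEON_TOP_PRICE_OLD = 1386
XEON_TOP_PRICE_NEW = 1663
OPTERON_COMPARED_PRICE = 1165

if __name__ == "__main__":
    premium = percent_more(XEON_TOP_PRICE_NEW, OPTERON_COMPARED_PRICE)
    hike = percent_more(XEON_TOP_PRICE_NEW, XEON_TOP_PRICE_OLD)
    print(f"Xeon premium over the compared Opteron: {premium:.1f}%")
    print(f"Increase in the top Xeon X series price: {hike:.1f}%")
```

The premium comes out just under 43%, in line with the 42% AMD quotes, and the top Xeon price rose by about 20%.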

While I am mainly interested in the 12-core chips, I also see significant value in the 8-core chips. Being able to replace a pair of 4-core chips with a single-socket 8-core system is very appealing in certain situations, since there is a decent premium on motherboards that need to support more than one socket. Being able to get 8 (and maybe even 12) cores on a single-socket system is just outstanding.

I also found this interesting –

Each one is capable of 105.6 Gigaflops (12 cores x 4 32-bit FPU instructions x 2.2GHz). And that score is for the 2.2GHz model, which isn’t even the fastest one!
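The formula in that quote is cores times floating point operations per cycle times clock speed. A minimal sketch of it, assuming the same 4 operations per core per cycle for the 2.3GHz model listed in the highlights (the function name is my own, and these are theoretical peaks, not measured results):

```python
def peak_gflops(cores: int, flops_per_cycle: int, clock_ghz: float) -> float:
    """Theoretical peak in GFLOPS: cores x FLOPs per cycle x clock in GHz."""
    return cores * flops_per_cycle * clock_ghz


if __name__ == "__main__":
    # 12 cores x 4 x 2.2GHz, as in the quote.
    print(f"2.2GHz model: {peak_gflops(12, 4, 2.2):.1f} GFLOPS")
    # Same formula applied to the 2.3GHz model.
    print(f"2.3GHz model: {peak_gflops(12, 4, 2.3):.1f} GFLOPS")
```

This reproduces the quoted 105.6 Gigaflops for the 2.2GHz part and gives 110.4 for the 2.3GHz part.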

I still have a poster up on one of my walls from the 1995-1996 era about the world’s first teraflop machine –

The one-teraflops demonstration was achieved using 7,264 Pentium Pro processors in 57 cabinets.

With the same number of these new Opterons you could get 3/4ths of the way to a Petaflop.
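Here is the back-of-the-envelope math behind that claim, as a small sketch. It takes the 105.6 Gigaflops per-chip peak and the 7,264 processor count from the quotes above; the names are my own. Note that it compares a theoretical peak against a demonstrated result, so treat it as a rough upper bound.

```python
PROCESSOR_COUNT = 7264         # processors in the one-teraflops demonstration
OPTERON_PEAK_GFLOPS = 105.6    # quoted peak for the 2.2GHz 12-core part


def aggregate_pflops(processors: int, gflops_each: float) -> float:
    """Combined theoretical peak in petaflops (1 PFLOPS = 1,000,000 GFLOPS)."""
    return processors * gflops_each / 1_000_000


if __name__ == "__main__":
    total = aggregate_pflops(PROCESSOR_COUNT, OPTERON_PEAK_GFLOPS)
    print(f"{PROCESSOR_COUNT:,} Opterons: {total:.3f} PFLOPS peak")
```

That is roughly 0.767 petaflops, or a bit over three quarters of the way there.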

SGI is raising the bar as well –

This means as many as 2,208 cores in a single rack of our Rackable™ rackmount servers. And in the SGI ICE Cube modular data center, our containerized data center environment, you can now scale within a single container to 41,760 cores! Of course, density is only part of the picture. There’s as much to be excited about when it comes to power efficiency and the memory performance of SGI servers using AMD Opteron 6100 Series processor technology

Other systems announced today include:

- HP DL165 G7

- HP SL165z G7

- HP DL385 G7

- Cray XT6 supercomputer

- There is mention of a Dell R815, though it doesn’t seem to be officially announced yet. The R815 specs seem kind of underwhelming in the memory department, with support for only 32 DIMMs (the HP systems above support the full 12 DIMMs/socket). It is only 2U, however. Sun has had 2U quad-socket Opteron systems with 32 DIMMs for a couple of years now in the form of the X4440, so it is strange that Dell did not step up and max out the system with 48 DIMMs.

I can’t put into words how happy and proud I am of AMD for this new product launch. Not only is it an amazing technological achievement, but the fact that they managed to pull it off on schedule is just as impressive.

Congratulations AMD!!!