I came across this article on LinkedIn, which I found very interesting. The scenario in the article was that a professional photographer had 500GB of data to back up and decided to try Carbonite to do it.

The problem was Carbonite apparently imposes significant throttling on the users uploading large amounts of data –

[..]At that rate, it takes nearly two months just to upload the first 200GB of data, and then another 300 days to finish uploading the remaining 300GB.
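To put those numbers in perspective, here is a rough back-of-the-envelope sketch of the average upload rate they imply. It assumes decimal gigabytes and treats "nearly two months" as 60 days; both are my assumptions, not figures from the article.

```python
GB = 10**9               # decimal gigabyte in bytes (assumption)
SECONDS_PER_DAY = 86_400


def implied_kbps(gigabytes: float, days: float) -> float:
    """Average rate in kilobits per second for a transfer of this size and duration."""
    bits = gigabytes * GB * 8
    return bits / (days * SECONDS_PER_DAY) / 1000


# "Nearly two months" for the first 200GB, taken here as 60 days (assumption).
first_stage = implied_kbps(200, 60)
# 300GB over 300 days, as quoted.
second_stage = implied_kbps(300, 300)

print(f"first 200GB:     ~{first_stage:.0f} kbit/s")
print(f"remaining 300GB: ~{second_stage:.0f} kbit/s")
```

Under those assumptions the throttled stage works out to roughly 1GB per day, which is in the same ballpark as an old dial-up era connection.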

Which takes me back to a conversation I was having with my boss earlier in the week about why I decided to buy my own server and put it in a co-location facility, instead of using some sort of hosted thing.

I have been hosting my own websites, email etc since about 1996. At one point I was hosted on T1s at an office building, then I moved things to my business class DSL at home for a few years, then when that was no longer feasible I got a used server and put it up at a local colo in Seattle. Then I decided to retire that old server (built in 2004) and spent about a year in the Terremark vCloud, before buying a new server and putting it up at a colo in the Bay area where I live now.

My time in the Terremark cloud was OK, my needs were pretty minimal, but I didn’t have a lot of flexibility(due to the costs). My bill was around $120/mo or something like that for a pair of VMs. Terremark operates in a Tier 4 facility and doesn’t use the built to fail model I hate so much, so I had confidence things would get fixed if they ever broke, so I was willing to pay some premium for that.

Cloud or self hosting for my needs?

I thought hard about whether or not to invest in a server+colo again or stay on some sort of hosted service. The server I am on today was $2,900 when I bought it, which is a decent amount of money for me to toss around in one transaction.

Then I had the idea of storing data off site, I don’t have much that is critical, mostly media files and stuff that would take a long time to re-build in case of major failure or something. But I wanted something that could do at least 2-3TB of storage.

So I started looking into what this would cost in the cloud. I was sort of shocked I guess you could say. The cost for regular, protected cloud storage was going to easily be more than $200/mo for 3TB of usable space.

Then there are backup providers like Carbonite, Mozy, Backblaze etc.. I read a comment on Slashdot I think it was about Backblaze and was pretty surprised to then read their fine print –

Your external hard drives need to be connected to your computer and scanned by Backblaze at least once every 30 days in order to keep them backed up.

So the data must be scanned at least once every 30 days or it gets nuked.

They also don’t support backing up network drives. Most of the providers of course don’t support Linux either.

The terms do make sense to me, I mean it costs $$ to run, and they advertise unlimited. So I don’t expect them to be storing TBs of data for only $4/mo. It just would be nice if they (and others) would be more clear on their limitations up front, at least unlike the person in the article above I was able to make a more informed decision.

The only real choice: Host it myself

So the decision was really simple at that point. Go invest and do it myself. It’s sort of ironic if you think about it, all this talk about cloud saving people money. Here I am, just one person, with no purchasing power whatsoever, and I am saving more money doing it myself than some massive scale service provider can offer.

The point wasn’t just the storage though. I wanted something to host:

- This blog

- My email

- DNS

- my other websites / data

- would be nice if there was a place to experiment/play as well

So I bought this server which is a single socket quad core Intel chip, originally with 8GB, now it has 16GB of memory, and 4x2TB SAS disks in RAID 1+0(~3.6TB usable) w/3Ware hardware RAID controller(I’ve been using 3Ware since 2001). It has dual power supplies(though both are connected to the same source, my colo config doesn’t offer redundant power). It even has out of band management with full video KVM and virtual media options. Nothing like the quality of HP iLO, but far better than what a system of this price point could offer going back a few years ago.
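For anyone wondering how 4x2TB turns into ~3.6TB usable, here is a minimal sketch of the arithmetic. It assumes two-way mirrored pairs, drive sizes quoted in decimal terabytes, and an OS that reports in binary units; it ignores controller metadata and file system overhead.

```python
TB = 10**12   # decimal terabyte, as printed on drive labels
TIB = 2**40   # binary tebibyte, as many operating systems report sizes


def raid10_usable_bytes(disk_count: int, disk_bytes: int) -> int:
    """Usable capacity of a RAID 1+0 array built from two-way mirrored pairs."""
    if disk_count < 4 or disk_count % 2:
        raise ValueError("RAID 1+0 needs an even number of disks, at least 4")
    # Every pair stores one disk's worth of data; the pairs are striped together.
    return (disk_count // 2) * disk_bytes


usable = raid10_usable_bytes(4, 2 * TB)
print(f"{usable / TB:.1f} TB decimal, {usable / TIB:.2f} TiB binary")
```

Half of the raw capacity goes to mirroring, and the rest of the gap is just the decimal versus binary unit difference.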

On top of that I am currently running 5 active VMs, plus a retired one I’m keeping around for now

- VM #1 runs my personal email, DNS,websites, this blog etc

- VM #2 runs email for a few friends, and former paying customers(not sure how many are left) from an ISP that we used to run many years ago, DNS, websites etc

- VM #3 is an OpenBSD firewall running in layer 3 mode; it also provides a site to site VPN to my home, as well as an end-user VPN for my laptop when I’m on the road

- VM #4 acts as a storage/backup server for my home data with a ~2TB file system

- VM #5 is a windows VM in case I need one of those remotely. It doesn’t get much use.

- VM #6 is the former personal email/dns/website server that ran a 32-bit OS. Keeping it around on an internal IP for a while in case I come across more files that I forgot to transfer.

There is an internal and an external network on the server, the site to site VPN of course provides unrestricted access to the internal network from remote which is handy since I don’t have to rely on external IPs to run additional things. The firewall also does NAT for devices that are not on external IPs.

Obviously as you might expect the server sits at low CPU usage 99% of the time and it’s running at around 9GB of used memory, so I can toss on more VMs if needed. It’s obviously a very flexible configuration.

When I got the server originally I decided to host it with the company I bought it from, and they charged me $100/mo to do it. Unlimited bandwidth etc.. good deal(also free on site support)! First thing I did was take the server home and copy 2TB of data onto it. Then I gave it back to them and they hosted it for a year for me.

Then they gave me the news they were going to terminate their hosting and I had only two weeks to get out. I evaluated my options and decided to stay at the same facility but started doing business with the facility itself (Hurricane Electric). The down side was the cost was doubling to $200/mo for the same service (100Mbit unlimited w/5 static IPs), since I was no longer sharing the service with anyone else. I did get a 3rd of a rack though, not that I can use much of it due to power constraints(I think I only get something like 200W). But in the grand scheme of things it is a good deal, I mean it’s a bit more than double what I was paying in the Seattle area but I am getting literally 100 times the bandwidth. That gives me a lot of opportunities to do things. I’ve yet to do much with it beyond my original needs, that may change soon though.
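To give a feel for what 100 times the bandwidth means in practice, here is a rough sketch of how long it would take to move the 2TB I mentioned earlier over each link. It assumes the link runs at full line rate with no protocol overhead, and it takes the older Seattle connection to be 1Mbit (implied by the 100x comparison, so treat it as an assumption).

```python
def transfer_hours(terabytes: float, megabits_per_second: float) -> float:
    """Ideal transfer time in hours, ignoring protocol overhead and contention."""
    bits = terabytes * 10**12 * 8
    seconds = bits / (megabits_per_second * 10**6)
    return seconds / 3600


colo_hours = transfer_hours(2, 100)  # current 100Mbit colo link
old_hours = transfer_hours(2, 1)     # assumed 1Mbit link (100x slower)

print(f"100Mbit: ~{colo_hours:.0f} hours")
print(f"1Mbit:   ~{old_hours / 24:.0f} days")
```

Even at full speed 2TB is the better part of two days on the fast link, which is part of why carrying the server home for the initial copy made sense.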

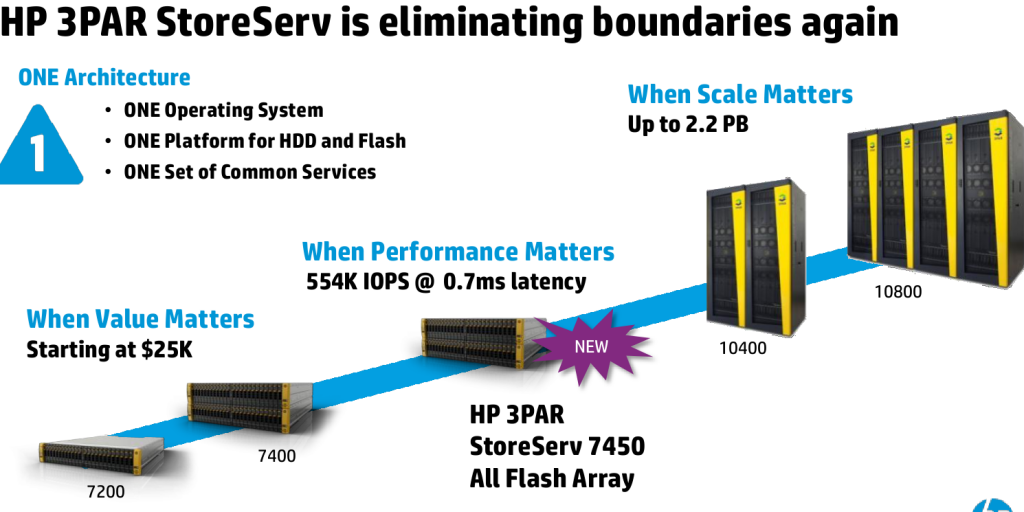

Now granted it’s not high availability, I don’t have 3PAR storage like Terremark did when I was a customer, I have only 1 server so if it’s down everything is down. It’s been reliable though, providing really good uptime over the past couple of years. I have had to replace at least two disks, and I also had to replace the USB stick that runs vSphere; the previous one seemed to have run out of flash blocks, as I could no longer write much to the file system. That was a sizable outage for me as I took the time to install vSphere 5.1 (from 4.x) on the new USB stick, re-configure things, and upgrade the memory all in one day, which took probably 4-5 hours I think. I’m connected to a really fast backbone and the network has been very reliable (not perfect, but easily good enough).

So my server was $2,900, and I pay currently $2,400/year for service. It’s certainly not cheap, but I think it’s a good deal still relative to other options. I maintain a very high level of control, I can store a lot of data, I can repair the system if it breaks down, and the solution is very flexible, I can do a lot of things with the virtualization as well as the underlying storage and the high bandwidth I have available to me.
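Here is a hedged sketch of that comparison using the figures from this post. The cloud side simply adds the $200/mo storage floor to the $120/mo I was paying Terremark for a pair of VMs; combining those two is my own assumption about what an equivalent hosted setup would cost. It also ignores disk replacements, the memory upgrade, the cheaper first year of hosting, and my time.

```python
import math

SERVER_COST = 2900              # one-time purchase
COLO_PER_MONTH = 200            # current colo bill
CLOUD_STORAGE_PER_MONTH = 200   # quoted floor for 3TB of protected cloud storage
CLOUD_VMS_PER_MONTH = 120       # earlier bill for a pair of hosted VMs


def cumulative_self_hosted(months: int) -> int:
    """Total spend after the given number of months with my own server."""
    return SERVER_COST + COLO_PER_MONTH * months


def cumulative_cloud(months: int) -> int:
    """Total spend after the given number of months on the assumed hosted setup."""
    return (CLOUD_STORAGE_PER_MONTH + CLOUD_VMS_PER_MONTH) * months


def break_even_month() -> int:
    """First whole month at which self hosting is no more expensive than cloud."""
    monthly_saving = CLOUD_STORAGE_PER_MONTH + CLOUD_VMS_PER_MONTH - COLO_PER_MONTH
    return math.ceil(SERVER_COST / monthly_saving)


month = break_even_month()
print(f"break-even at month {month}: "
      f"${cumulative_self_hosted(month)} self hosted vs ${cumulative_cloud(month)} cloud")
```

With these inputs the server pays for itself a little after the two year mark, and every month after that is roughly $120 in my favor.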

Which brings me to next steps, something I’ve always wanted to do is make the data more mobile, that is one area which it was difficult(or impossible) to compete with cloud services, especially on things like phones and tablets. Since they have the software R&D to make those “apps” and other things.

I have been using WebOS for several years now, which of course runs on top of Linux. Though the underlying Linux OS is really too minimal to be of any use to me. It’s especially useless on the phone where I am just saddened that there has never been a decent terminal emulation app released for WebOS. Of all the things that could be done, that one seems really trivial. But it never happened(that I could see, there were a few attempts but nothing usable as far as I could tell). On the touchpad things were a little different, you could get an Xterm and it was kind of usable, significantly more so than the phone. But still the large overhead of X11 just to get a terminal seemed quite wasteful. I never really used it very much.

So I have this server, and all this data sitting on a fast connection but I didn’t have a good way to get to it remotely unless I was on my laptop (except for the obvious like the blog etc are web accessible).

Time to switch to a new mobile platform

WebOS is obviously dead (RIP). In the early days after HP terminated the hardware unit I was holding out some hope for the software end of things, but that hope has more or less dropped to 0 now; nothing remains but disappointment over what could have been. I think LG acquiring the WebOS team was a mistake and even though they’ve announced a WebOS-powered TV to come out early next year, honestly I’ll be shocked if it hits the market. It just doesn’t make any sense to me to run WebOS on a TV outside of having a strong ecosystem of other WebOS devices that you can integrate with.

So as reality continued to set in, I decided to think about alternatives, what was going to be my next mobile platform. I don’t trust Google, don’t like Apple. There’s Blackberry and Windows Phone as the other major brands in the market. I really haven’t spent any time on any of those devices. So I suppose I won’t know for sure but I did feel that Samsung had been releasing some pretty decent hardware + software (based on stuff I have read only), and they obviously have good market presence. Some folks complain etc.. If I were to go to a Samsung Android platform I probably wouldn’t have an issue. Those complaining about their platform probably don’t understand the depression that WebOS has been in since about 6 months after it was released – so really anything relative to that is a step up.

I mean I can’t even read my personal email on my WebOS device without using the browser. Using webmail via the browser on WebOS for me at least is a last resort thing, I don’t do it often(because it’s really painful – I bought some skins for the webmail app I use that are mobile optimized only to find they are not compatible with WebOS so when on WebOS I use a basic html web mail app, it gets the job done but..). The reason I can’t use the native email client is I suppose in part my fault, the way I have my personal email configured is I have probably 200 email addresses and many of them go directly to different inboxes. I use Cyrus IMAP and my main account subscribes to these inboxes on demand. If I don’t care about that email address I unsubscribe and it continues to get email in the background. WebOS doesn’t support accessing folders via IMAP outside of the INBOX structure of a single account. So I’m basically SOL for accessing the bulk of my email (which doesn’t go to my main INBOX). I have no idea if Samsung or Android works any different.

The browser on the touchpad is old and slow enough that I keep javascript disabled on it, I mean it’s just a sad decrepit state for WebOS these days (and has been for almost two years now). My patience really started running out recently when loading a 2-page PDF on my HP Pre3, then having the PDF reader constantly freeze (unable to flip between pages, though the page it was on was still very usable) if I let it sit idle for more than a couple of minutes (have to restart the app). This was nothing big, just a 2-page PDF, and the phone couldn’t even handle that.

I suppose my personal favorite problem is not being able to use bluetooth and 2.4GHz wifi at the same time on my phone. The radios conflict, resulting in really poor quality over bluetooth or wifi or both. So wifi stays disabled the bulk of the time on my phone since most hotspots seem only to do 2.4GHz, and I use bluetooth almost exclusively when I make voice calls.

There are tons of other pain points for me on WebOS, and I know they will never get fixed, those are just a couple of examples. WebOS is nice in other ways of course, I love the Touchstone (inductive charging) technology for example, the cards multitasking interface is great too(though I don’t do heavy multi tasking).

So I decided to move on. I was thinking Android, I don’t trust Google but, ugh, it is Linux based and I am a Linux user (I do have some Windows too but my main systems, desktops and laptops, are all Linux) and I believe Windows Phone and BlackBerry would likely (no, certainly) not play as well with Linux as Android. (WebOS plays very well with Linux, just plug it in and it becomes a USB drive, no restrictions – rooting WebOS is as simple as typing a code into the device). There are a few other mobile Linux platforms out there, I think Meego(?) might be the biggest trying to make a comeback, then there is FirefoxOS and Ubuntu phone.. all of which feel less viable (in today’s market) than WebOS did back in 2009 to me.

So I started thinking more about leaving WebOS, and I think the platform I will go to will be the Samsung Galaxy Note 3, some point after it comes out(I have read ~9/4 for the announcement or something like that). It’s much bigger than the Pre3, not too much heavier(Note 2 is ~30g heavier). Obviously no dedicated keyboard, I think the larger screen will do well for typing with my big hands. The Samsung multimedia / multi tasking stuff sounds interesting(ability to run two apps at once, at least Samsung apps).

I do trust Samsung more than Google, mainly because Samsung wants my $$ for their hardware. Google wants my information for whatever it is they do..

I’m more than willing to trade money in a vain attempt to maintain some sort of privacy. In fact I do it all the time, I suppose that could be why I don’t get much spam to my home address (snail mail). I also very rarely get phone calls from marketers (low single digits per year I think), even though I have never signed up to any do not call lists (I don’t trust those lists).

Then I came across this comment on Slashdot –

Well I can counter your anecdote with one of my own. I bought my Galaxy S3 because of the Samsung features. I love multi-window, local SyncML over USB or WiFi so my contacts and calendar don’t go through the “cloud”, Kies Air for accessing phone data through the browser, the Samsung image gallery application, the ability to easily upgrade/downgrade/crossgrade and even load “frankenfirmware” using Odin3, etc. I never sign in to any Google services from my phone – I’ve made a point of not entering a Google login or password once.

So, obviously, I was very excited to read that.

Next up, and this is where the story comes back around to online backup, cloud, my co-lo, etc.. I didn’t expect the post to be this long but it sort of got away from me again..

I think it was on another Slashdot comment thread actually (I read slashdot every day but never have had an account and I think I’ve only commented maybe 3 times since the late 90s), where someone mentioned the software package Owncloud.

Just looking at the features once again got me excited. They also have Android and iOS apps. So this would, in theory, from a mobile perspective allow me to access files, sync contacts, music, video, perhaps even calendar (not that I use one outside of work, which is Exchange) and keep control over all of it myself. Also there are desktop sync clients (à la Dropbox or something like that??) for Linux, Mac, and Windows.

So I installed it on my server, it was pretty easy to set up, I pointed it to my 2TB of data and off I went. I installed the desktop sync client on several systems (Ubuntu 10.04 was the most painful to install to, had to compile several packages from source but it’s nothing I haven’t done a million times before on Linux). The sync works well (had to remove the default sync which was to sync everything, at first it was trying to sync the full 2TB of data, and it kept failing, not that I wanted to sync that much…I configured new sync directives for specific folders).

So that’s where I’m at now. Still on WebOS, waiting to see what comes of the new Note 3 phone, I believe I saw for the Note 2 there was even a custom back cover which allowed for inductive charging as well.

It’s sad to think of the $$ I dumped on WebOS hardware in the period of panic following the termination of the hardware division, I try not to think about it … The touchpads do make excellent digital picture frames, especially when combined with a touchstone charger. I still use one of my touchpads daily (I have 3), and my phone of course daily as well. Though my data usage is quite small on the phone since there really isn’t a whole lot I can do on it, unless I’m traveling and using it as a mobile hot spot.

whew, that was a lot of writing.