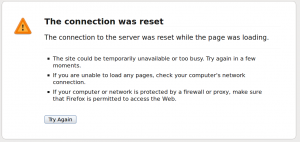

While I’m on the subject of that damn status site I’ll tell you what – I filed a support ticket with them back in AUGUST 2012 – yes people that is EIGHT MONTHS ago, to report to them that their status site doesn’t #@$@ work. Well at least most of the time it doesn’t #@$@! work. You see a lot of times the site returns an invalid Location: header which is relative instead of absolute, and standards based browsers(e.g. Firefox), don’t like that so I get a pretty error message that says the site is down, basically. I can usually get it to load after forcing a refresh 5-25 times.

I first came across this site when Opscode was in the midst of a fairly major outage. So naturally I feel it’s important that the web site that hosts your status page work properly. So I filed the ticket, after going back and forth with support, I determined the reason for the browser errors and they said they’d look into it. There wasn’t a lot they claimed they could do because the site was hosted with another provider (Tumbler or something??).

That’s no excuse.

So time passes, and nothing gets done. I mentioned a while back I met some of the senior opscode staff a few years ago, so I directly reached out to the Chief Operating Officer of Opscode (who is a very technical guy himself) to plead with him FIX THE DAMN SITE. If Tumbler is not working then host it elsewhere, it is trivial to setup that sort of site, I mean just look at the content on the site! I was polite in my email to him. He responded and thanked me.

So more time passes, and nothing happens. So in early January I filed another support ticket outlining the reason behind their web site errors and asked that they fix their site. This time I got no reply.

More time passes. I was bored tonight so I decided to hit the site again, guess what? Yeah, they haven’t done squat.

How incompetent are these people? Sorry maybe it is not incompetence but laziness. If you can’t be bothered to properly operate the site take the site down.

I mean it doesn’t take a rocket scientist to read that and not think immediately how absurd that is. It’s one thing to say you are going to stop supporting something that is fine. But to say OH WE DECIDED TO STOP SUPPORT, TODAY IS YOUR LAST DAY.

So I go to the page they reference above and it says

On or after June 11th, we’ll deploy a change to Hosted Chef that will disable all access to Hosted Chef for 0.9 clients, so you will want to make sure you’ve upgraded before then.

Last I checked, it is nowhere near June 11th. (now that I think of it maybe they meant last year, they don’t say for sure). In any case there was extremely poor notification on this – and how much work does it take to maintain servers running chef 0.9 ? So you can stop development on it, no new patches. Big deal.

This has absolutely no impact on anything I do because we have been on Chef 0.10 forever. But the fact they would even consider doing something like this just shows how poorly run things are over there.

How can they expect customers to take them seriously by doing stuff like this? This is BASIC STUFF. REAL BASIC.

Something else that caught my eye recently as I was doing some stuff in Chef, was their APIs seemed to be down completely. So I hopped on over to the status site after forcing a refresh a dozen or more times to get it to load and saw

Hosted Chef Maintenance Underway

The following systems are unavailable while Hosted Chef is migrated from MySQL to PostgreSQL.

– The Hosted Chef Platform including the API and Management Console

– Opscode Support Ticketing System

– Chef Community Site

Apparently they had announced it on the site one or more days prior(can’t tell for sure now since both posts say posted 1 week ago). But they took the APIs down at 2:00 PM Pacific time! (they are based in Seattle so that’s local time for them). Who in their right mind takes their stuff down in the middle of the afternoon intentionally for a data migration? BASIC STUFF PEOPLE. And their method of notification was poor as well, nobody at my company(we are a paying customer) had any idea it was happening. Fortunately it had only a minor impact on us. I just got lucky when I happened to try to use their API at the exact moment they took it down.

Believe me there are plenty of times when one of our developers comes up to me and says OH #@$ WE NEED THIS CONFIGURATION SETTING IN PRODUCTION NOW! As you might imagine most of that is in Chef, so we rely on that functioning for us at all times. Unscheduled down time is one thing, but this is not excusable. At the very least you could migrate customers in smaller batches(with downtime for any given customer measured in seconds – maybe the really big customers take longer but they can work with those individually to schedule a good time). If they didn’t build the product to do that they should go back to the drawing board.

My co-worker was recently playing around with a slightly newer build of Chef 0.10.x that he thinks we should upgrade to (ours is fairly out of date – primarily because we had some major issues on a newer build at the time). He ran into a bunch of problems including Opscode changing some major things around within a minor release breaking a bunch of stuff. Just more signs of how cavalier they are, typical modern “web 2.0” developer types, that don’t know anything about stability.

Maybe I was lucky I don’t know. But I basically ran the same version of CFengine v2 for nearly 7 years without any breakage (hell I can’t remember encountering a single issue I considered a bug!), across three different companies. I want my configuration system to be stable, fast and simple to manage. Chef is none of those, the more I use it the more I dislike it. I still believe it is a good product and has it’s niche, but it’s got a looooooooong way to go to win over people like me.

As a CFengine employee put it in my last post, Chef views things as configuration as code, and CFengine views them as configuration as documentation. I’m far in the documentation camp. I believe in proper naming conventions whether it is servers, or load balancer addresses, or storage volumes, mount points on servers etc. Also I believe strongly in a good descriptive domain name (have always used the airport codes like most other folks). None of this randomly generated crap(here’s looking at you Amazon). If you are deploying 10,000 servers that are doing the same thing you can still number them in some sort of sane manor. I’ve always been good at documentation, it does take work, and I find more often than not most people are overwhelmed by what I write (you may get the idea with what I have written here) so they often don’t read it — but it is there and I can direct them to it. I take lots of screen shots and do a lot of step by step guides.

On a side note, this configuration as documentation is a big reason why I do not look forward to IPv6.

Chef folks will say go read the code! That can be a pretty dangerous thing to say, really, it is. I mean just yesterday or was it the day before, I was trying to figure out how a template on a server was getting a particular value. Was it coming from the cookbook attributes? from the role? from the environment? I looked everywhere and I could not find the values that were being populated — and the values I specified were being ignored. So I passed this task to my co-worker who I have to acknowledge has been a master in Chef, he has written most of what we have, and while I can manage to tweak stuff here and there, the difficult stuff I give him because if I don’t my fist will go through the desk or perhaps the monitor (desk is closer), after a couple hours working with Chef. A tool is not supposed to make you get so frustrated.

So I ask him to look into it, and quickly I find HIM FIGHTING CHEF! OH MY THE IRONY. He was digging up and down and trying to set things but Chef was undoing them and he was cursing and everything. I loved it. It’s what I go through all the time. After some time he eventually found the issue, the values were being set in another cookbook and they conflicted.

So he worked on it for a bit, and decided to hard code the values for a time while he looked into a better solution. So he deployed this better solution and it had more problems. The most recent thing is for some reason Chef was no longer able to successfully complete a run on certain types of servers(other types were fine though). He’s working on fixing it.

I know he can do it, he’s a really smart guy I just wanted to write about that story – I’m not the only one that has these problems.

Sure I’d love to replace Chef with something else. But it’s not a priority I want to try to shove in my boss’ face (who likes the concept of Chef). I have other fish to fry, and as long as I have this guy doing the dirty work well it’s not as much of a pain for me.

Tracking down conflicting things in CFengine was really simple for me – probably because I wasn’t trying anything too over the top with configuration. Opscode guys liked to say, oh wouldn’t it be great if you could have one configuration stanza that could adapt to ANY SITUATION.

I SAY NO. —-Â IT! IS! NOT! GREAT!

It might be nice in some situations but in many others it just gives me a headache. I like to be able to look at a config and say THAT IS GOING TO SERVER X, EXACTLY HOW IT SITS NOW. Sure I have to duplicate configs and files for different environments and such but really at the end of the day – at all of the companies I have worked at — IT’S NOT A BIG DEAL. In the grand scheme of things. If your configuration is so complex that you need all of this maybe you should step back and consider if you are doing something wrong – does it really need to be that complex? Why?

Oh and don’t get me started on that #$@ damn ruby syntax in the Chef configuration files. Oh you need quotes around a string that is nothing more than a word? You puke with a cryptic stack trace if you don’t have that? Oh you puke with a cryptic stack trace unless these two configuration settings are on their own lines? Come on, this is stupid. I go back to this post on Ruby, how I am reminded of it almost every time I use Chef. I had to support Ruby+Rails apps back from 2006-2008 and it was a bad experience. Left a bad taste in my mouth for Ruby. Chef just keeps on piling on the crap. I’ll fully admit I am very jaded against Ruby (and Chef for that matter). I think for good reason. How’s that saying go? Burn me once shame on you, burn me 500 times shame on me?

With the background that some of these folks have at Opscode it’s absolutely stunning to me the number of times they have shot themselves in the feet over the past few years, on such BASIC THINGS. Maybe that’s how things are done at the likes of Amazon I don’t know, never worked there(knew many that did and do though, general consensus is stay away).

In my neck of the woods people take more care in what they do.

I’ll end this again by mentioning I could train someone on CFEngine in an afternoon, Chef – here I am 2 and a half years later and still struggling.

(In case your wondering YES I run Ubuntu 10.04 LTS on my laptop and desktop (guess what – it is about to go EOL too) – I have no plans to change, because it’s stable, and it does the job for me. I run Debian STABLE on my servers because – IT’S STABLE. No testing, no unstable, no experimental. Tried and true. The new UI stuff in the newer Ubuntu is just scary for me, I have no interest in trying it.)

Ok that’s enough for this rant I guess. Thanks for listening.