HP made a few headlines recently when it officially unveiled its first set of ultra-dense micro servers, under the product name Moonshot. Moonshot was widely expected to be an ARM platform, so HP surprised many by making this first round of products Intel Atom based.

They are calling it the world’s first software defined server. Ugh. I can’t tell you how sick I feel whenever I hear the term software defined <insert anything here>.

In any case I think AMD might take issue with that, given SeaMicro, which they acquired a while back. I was talking with SeaMicro as far back as 2009, I believe, and they already had their high-density 10U virtualized Intel Atom-based platform (I have never used SeaMicro, though I knew a couple of folks who worked there), complete with integrated switching, load balancing and virtualized storage (the latter two HP is lacking).

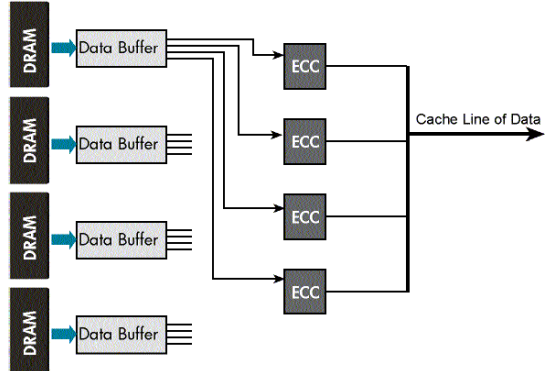

Unlike legacy servers, in which a disk is unalterably bound to a CPU, the SeaMicro storage architecture is far more flexible, allowing for much more efficient disk use. Any disk can mount any CPU; in fact, SeaMicro allows disks to be carved into slices called virtual disks. A virtual disk can be as large as a physical disk or it can be a slice of a physical disk. A single physical disk can be partitioned into multiple virtual disks, and each virtual disk can be allocated to a different CPU. Conversely, a single virtual disk can be shared across multiple CPUs in read-only mode, providing a large shared data cache. Sharing of a virtual disk enables users to store or update common data, such as operating systems, application software, and data cache, once for an entire system.
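To make that description a bit more concrete, here is a toy model of the slicing and sharing rules it describes. This is only a sketch: the class names, sizes and CPU labels are made up for illustration, nothing here reflects SeaMicro's actual software, and the rule that a writable slice belongs to exactly one CPU is my own assumption (the description only says shared slices are read-only).

```python
from dataclasses import dataclass, field


@dataclass
class VirtualDisk:
    """A slice of a physical disk (hypothetical model, not a SeaMicro API)."""
    name: str
    size_gb: int
    read_only: bool = False
    cpus: set = field(default_factory=set)

    def attach(self, cpu: str) -> None:
        # Assumption: a writable slice is exclusive to one CPU;
        # only read-only slices may be shared across CPUs.
        if self.cpus and not self.read_only and cpu not in self.cpus:
            raise ValueError(f"{self.name} is writable and already attached")
        self.cpus.add(cpu)


@dataclass
class PhysicalDisk:
    size_gb: int
    slices: list = field(default_factory=list)

    def carve(self, name: str, size_gb: int, read_only: bool = False) -> VirtualDisk:
        # Refuse to hand out more space than the physical disk has.
        used = sum(v.size_gb for v in self.slices)
        if used + size_gb > self.size_gb:
            raise ValueError("not enough free space on physical disk")
        vd = VirtualDisk(name, size_gb, read_only)
        self.slices.append(vd)
        return vd


disk = PhysicalDisk(size_gb=500)
os_image = disk.carve("os-image", 100, read_only=True)  # shared by many CPUs
scratch = disk.carve("scratch-0", 200)                  # private to one CPU

for cpu in ("cpu0", "cpu1", "cpu2"):
    os_image.attach(cpu)
scratch.attach("cpu0")
```

The point is simply that one read-only OS image can back many servers while each server still gets its own writable slice, which is the "store once for an entire system" idea in the description.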

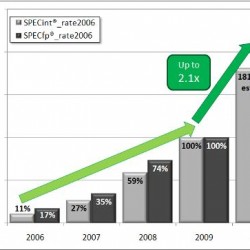

Really, the technology that SeaMicro has puts the Moonshot Atom systems to shame. SeaMicro has the advantage that this is their 2nd or 3rd (or perhaps later) generation product. Moonshot is on its first gen.

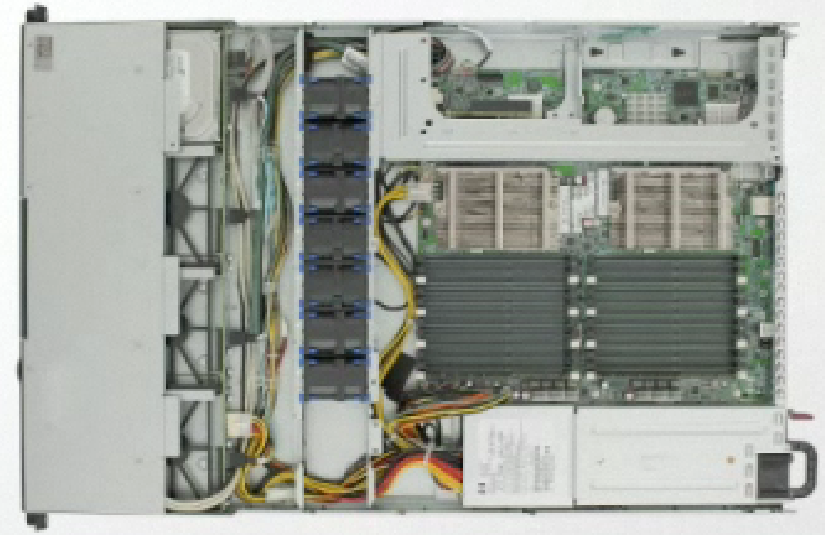

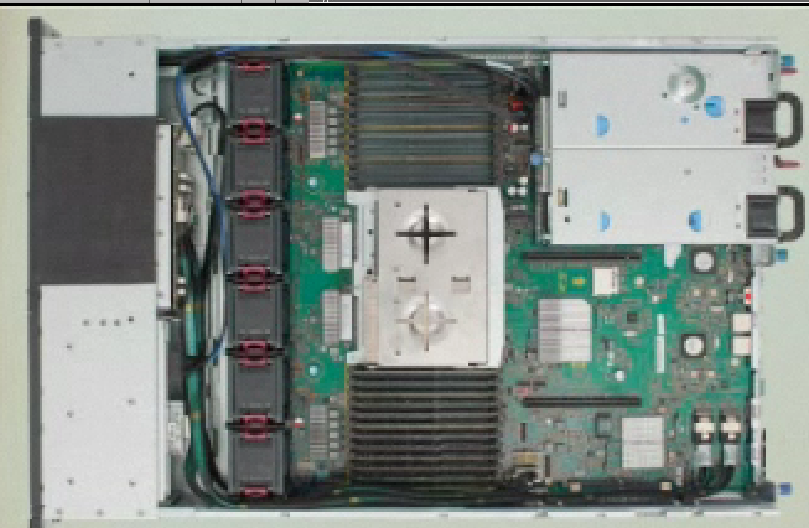

Moonshot provides 45 hot pluggable single socket dual core Atom processors, each with 8GB of memory and a single local disk in a 4.5U package.

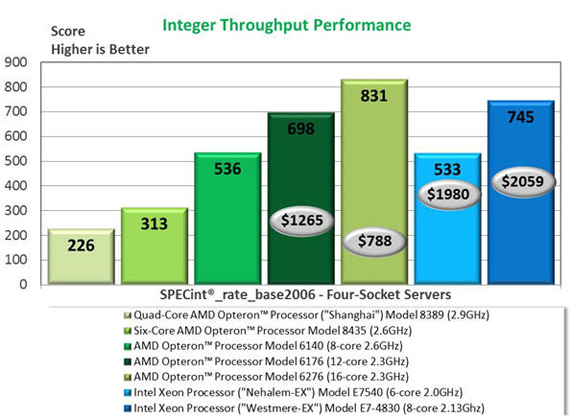

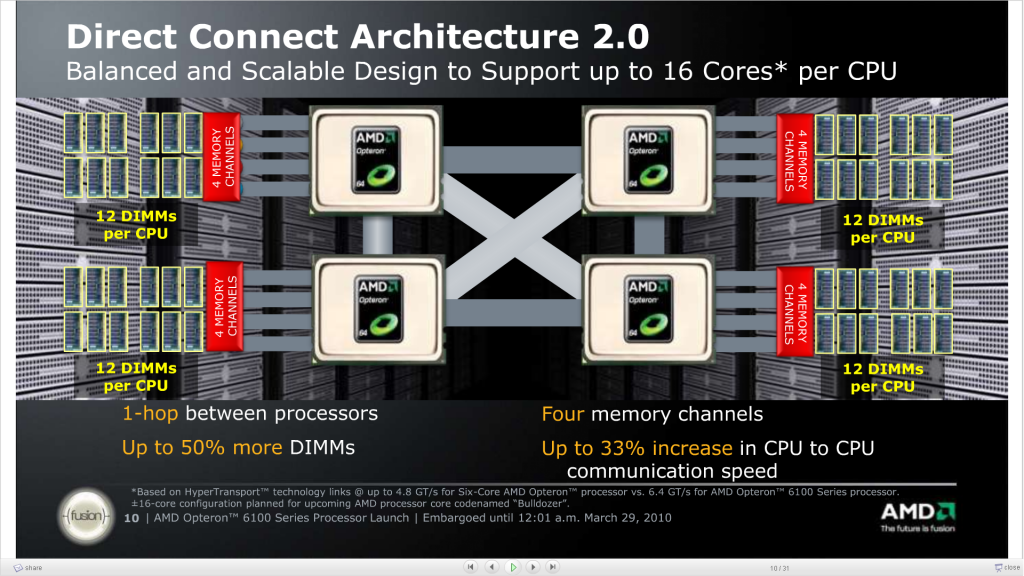

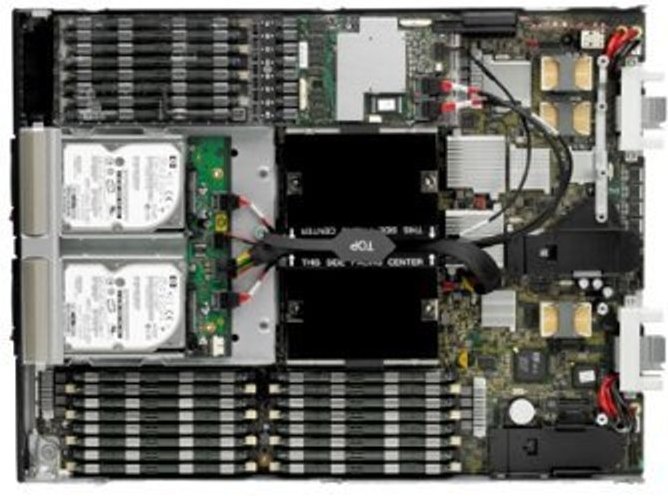

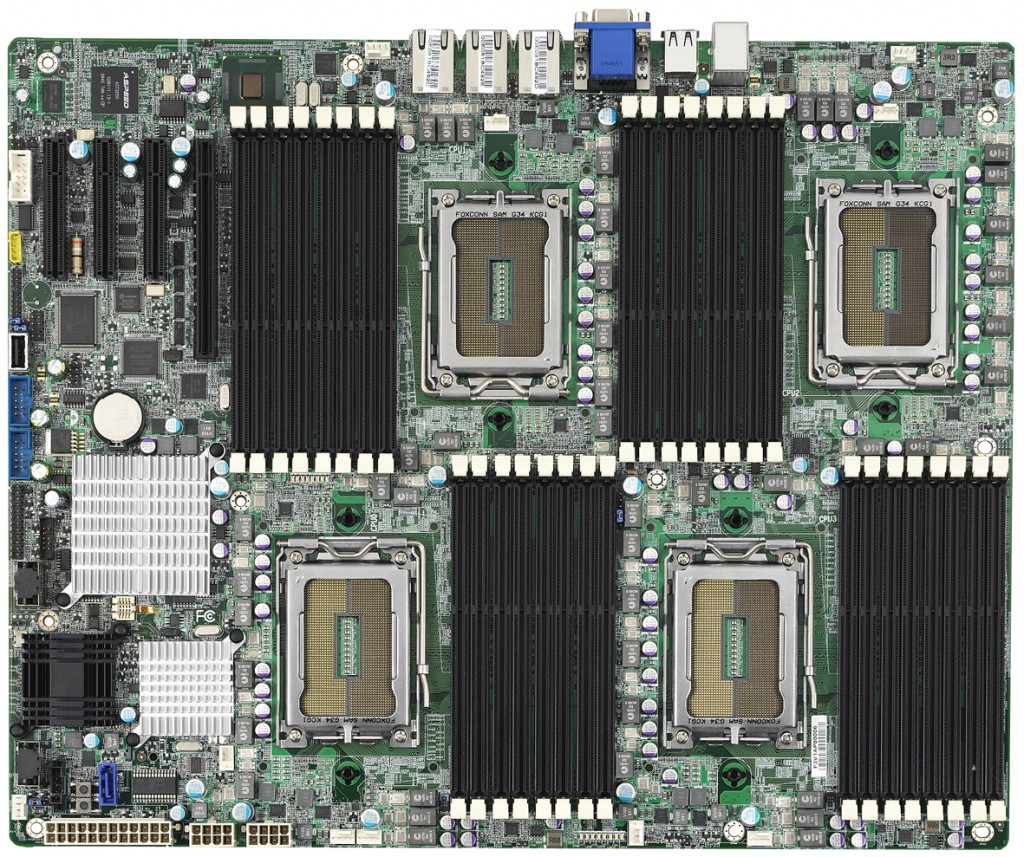

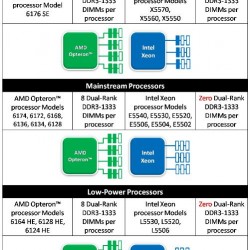

SeaMicro provides up to 256 sockets of dual core Atom processors, each with 4GB of memory and virtualized storage. Or you can opt for up to 64 sockets of either quad core Intel Xeon or eight core AMD Opteron, with up to 64GB/system (32GB max for Xeon). All of this in a 10U package.

Let’s expand a bit more. Moonshot can get 450 servers (900 cores) and 3.6TB of memory in a 47U rack. SeaMicro can get 1,024 servers (2,048 cores) and 4TB of memory in a 47U rack. If that is not enough memory you could switch to Xeon or Opteron with a similar power profile, at the high end 2,048 Opteron cores with 16TB of memory (AMD uses a custom Opteron 4300 chip in the SeaMicro system, a chip not available for any other purpose). Or maybe you mix and match. There are also fewer systems to manage: HP has 10 systems per rack and SeaMicro has 4. I harped on HP’s SL-series a while back for similar reasons.
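For anyone who wants to check the rack math, here is a quick sketch that reproduces it from the per-chassis figures quoted earlier. The class and field names are my own, and it assumes you simply stack as many whole chassis as fit in 47U with nothing else in the rack.

```python
from dataclasses import dataclass

RACK_UNITS = 47  # rack height used in the comparison above


@dataclass(frozen=True)
class Chassis:
    name: str
    height_u: float        # chassis height in rack units
    servers: int           # single-socket servers per chassis
    cores_per_server: int
    gb_per_server: int

    def per_rack(self, rack_units: float = RACK_UNITS) -> dict:
        # Only whole chassis count; leftover rack units go unused.
        chassis = int(rack_units // self.height_u)
        servers = chassis * self.servers
        return {
            "chassis": chassis,
            "servers": servers,
            "cores": servers * self.cores_per_server,
            "memory_gb": servers * self.gb_per_server,
        }


CONFIGS = [
    Chassis("Moonshot (Atom)", 4.5, 45, 2, 8),
    Chassis("SeaMicro (Atom)", 10, 256, 2, 4),
    Chassis("SeaMicro (Opteron)", 10, 64, 8, 64),
]

for c in CONFIGS:
    r = c.per_rack()
    print(f"{c.name}: {r['chassis']} chassis, {r['servers']} servers, "
          f"{r['cores']} cores, {r['memory_gb']} GB")
```

That works out to 3,600 GB for Moonshot, 4,096 GB for the Atom SeaMicro and 16,384 GB for the Opteron SeaMicro, which is where the 3.6TB, 4TB and 16TB figures come from. Note the Opteron configuration gets its 2,048 cores from only 256 servers per rack.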

SeaMicro also has dedicated external storage, which I believe extends the virtualization layer within the chassis, but I am not certain.

All in all it appears SeaMicro was years ahead of Moonshot before Moonshot ever hit the market. Maybe HP should have scrapped Moonshot and acquired SeaMicro when they had the chance.

At the end of the day I don’t see anything to get excited about with Moonshot, unless perhaps it’s really cheap (relative to SeaMicro anyway). The micro server concept is somewhat risky in my opinion. I mean, if you’ve really got your workload nailed down to something specific and you can fit it into one of these designs, then great. Obviously the flexibility of such micro servers is very limited. SeaMicro of course wins here too, given that an 8 core Opteron with 64GB of memory is quite flexible compared to the tiny Atom with tiny memory.

I have seen time and time again people get excited about this and say oh how they can get so many more servers per watt vs the higher end chips. Most of the time they forget how few workloads are CPU bound, and that simply slapping a hypervisor on top of a system with a boatload of memory can get you significantly more servers per watt than a micro server could hope to achieve. HOWEVER, if your workload can effectively exploit the micro servers, drive utilization up etc, then it can be a really good solution. In my experience those sorts of workloads are the exception rather than the rule, I’ll put it that way.

It seems that HP is still evaluating whether or not to deploy ARM processors in Moonshot. In the end I think they will, but they won’t have a lot of success because the market is too niche. You really have to go to fairly extreme lengths to have a true need for something specialized like ARM. The complexities in software compatibility are not trivial.

I think HP will not have an easy time competing in this space. The hyperscale folks like Rackspace, Facebook, Google, Microsoft etc all seem to be doing their own thing, and are unlikely to purchase much from HP. At the same time there is of course SeaMicro, amongst other competitors (Dell DCS etc) who are making similar systems. I really don’t see anything that makes Moonshot stand out, at least not at this point. Maybe I am missing something.