Reading an article on The Register (yeah, you probably realize by now I spend more time on that site than pretty much any other) got me thinking about a topic that bugs me.

The article is from last week and is written by the CEO of the organization behind Ubuntu. It basically talks about how using open source software is a good way to save costs in a down (or up) economy, and gives a bunch of examples of companies basing their stuff on open source.

That’s great. I like open source myself, and fired up my first Slackware Linux box in 1996, I think it was (Slackware 3.0). I remember picking Slackware over Red Hat at the time specifically because Slackware was known to be more difficult to use and it would force me to learn Linux the hard way, and believe me I learned a lot. To this day people ask me what they should study or do to learn Linux and I don’t have a good answer. I don’t have a quick and easy way to learn Linux the way I learned it. It takes time: months, years of just playing around with it. With so many “easy” distributions these days I’m not sure how practical my approach is now, but I’m getting off topic here.

So back to what bugs me. What bugs me is people out there, or more specifically organizations out there, that do nothing but leech off of the open source community. Companies that may make millions (or billions!) in revenue in large part because they are leveraging free stuff. But it’s not the usage of the free stuff that I have a problem with, more power to them. I get annoyed when those same organizations feel absolutely no moral obligation to contribute back to those that have given them so much.

You don’t have to do much. Over the years the most that I have contributed back has been participating in mailing lists, whether it is the Debian users list (been many years since I was active there), the Red Hat mailing list (a few years), or the CentOS mailing list (several months). I try to help where I can. I have a good deal of Linux experience, which often means nobody else on the list has answers to the questions I have. But I do (well, did) answer a ton of questions. I’m happy to help. I’m sure at some point I will re-join one of those lists (or maybe another one) and help out again, but I’ve been really busy these past few months. I remember even buying a bunch of Loki games to try to do my part in helping them (despite the games not being open source, they were supporting Linux indirectly), several of which I never ended up playing (not much of a gamer). VMware of course was also a really early Linux supporter (I still have my VMware 1.0.2 Linux CD; I believe that was the first version they released on CD, previous versions were download only), though I have gotten tired of waiting for vCenter for Linux.

The easiest way for a corporation to contribute back is to, say, use and pay for Red Hat Enterprise, or SuSE or whatever. Pay the companies that hire the developers to make the open source software go. I’m partial to Red Hat myself, at least in a business environment, though I use Debian-based distributions in my personal life.

There are a lot of big companies that do contribute code back, and that is great too, if you have the expertise in house. Opscode is one such company I have been working with recently on their Chef product. They leverage all sorts of open source stuff in their product (which is itself open source). I asked them what their policy is for getting things fixed in the open source code they depend on: do they just file bugs and wait, or do they contribute code? They said they contribute a bunch of code, constantly. That’s great, I have enormous respect for organizations that are like that.

Then there are the companies that leech off open source and not only don’t officially contribute in any way whatsoever but actively prevent their own employees from doing so. That’s really frustrating & stupid.

Imagine where Linux and everything else would be if more companies contributed back. It’s not hard: go get a subscription to Red Hat, or Ubuntu or whatever for your servers (or desktops!). You don’t have to contribute code, and if you can’t contribute back by supporting the community on mailing lists, or helping out with documentation, or the wikis or whatever, write a check. You actually get something in return, it’s not like it’s a donation. But donations are certainly accepted by the vast numbers of open source non-profits.

HP has been a pretty big backer of open source for a long time. They’ve donated a lot of hardware to support kernel.org and have been longtime Debian supporters.

Another way to give back is to leverage your infrastructure. If you have a lot of bandwidth or excess server capacity or disk space or whatever, set up a mirror or sponsor a project. Looking at the Debian page as an example, it seems AboveNet is one such company.

I don’t use open source everywhere, I’m not one of those folks who has to make sure everything is GPL or whatever.

So all I ask is that the next time you build or deploy some project that is made possible by who knows how many layers of open source products, you ask yourself how you can contribute back to support the greater good. If you have already, then I thank you 🙂

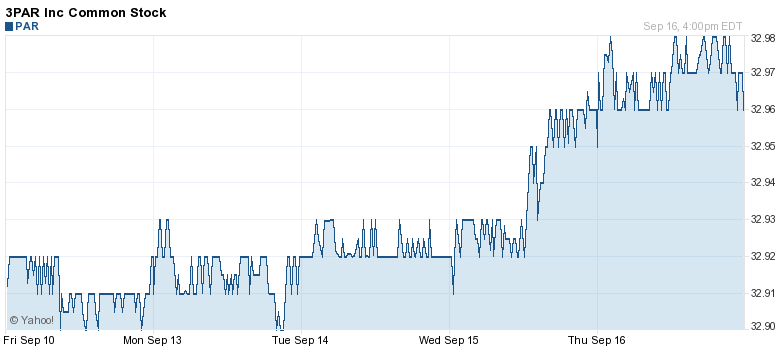

Speaking of Debian, did you know that Debian powers 3PAR storage systems? Well, it did at one point; I haven’t checked recently. I do recall telnetting to my arrays on port 22 and seeing a Debian SSH banner. The underlying Linux OS was never exposed to the user. And it seems 3PAR reports bugs, which is another important way to contribute back. And, as of 3PAR’s 2.3.1 release (I believe), they finally started officially supporting Debian as a platform to connect to their storage systems. By contrast they do not support CentOS.
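If you want to try the same port 22 trick without telnet, here is a minimal Python sketch. It assumes the device sends its SSH identification string as the first line (servers are allowed to send other lines first), and the function names and the sample banner in the usage note are made up for illustration; they are not what a 3PAR array actually returns.

```python
import socket


def read_ssh_banner(host, port=22, timeout=5.0):
    """Connect to host:port and return the first line the server sends.

    Assumption: the first line is the SSH identification string.
    """
    with socket.create_connection((host, port), timeout=timeout) as sock:
        data = b""
        # Read one byte at a time until end of line (identification
        # strings are limited to 255 characters).
        while not data.endswith(b"\n") and len(data) < 255:
            chunk = sock.recv(1)
            if not chunk:
                break
            data += chunk
    return data.decode("ascii", errors="replace").strip()


def parse_ssh_banner(banner):
    """Split 'SSH-protoversion-softwareversion comments' into its parts."""
    ident, _, comments = banner.strip().partition(" ")
    prefix, proto, software = ident.split("-", 2)
    if prefix != "SSH":
        raise ValueError("not an SSH identification string")
    return {"protocol": proto, "software": software, "comments": comments}
```

For example, `parse_ssh_banner("SSH-2.0-OpenSSH_5.1p1 Debian-5")` (a hypothetical banner) puts `Debian-5` in the `comments` field, which is the kind of hint that gives the underlying distribution away. `read_ssh_banner("array.example.com")` would fetch the line from a real host; the hostname is a placeholder.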

Extreme Networks’s ExtremeWare XOS is also based on Linux, though I think it’s a special embedded version. I remember in the early days they didn’t want to admit it was Linux; they said “Unix based”. I just dug this up from a backup from back in 2005. Once I saw this on my core switch booting up, I was pretty sure it was Linux!

Extreme Networks Inc. BD 10808 MSM-R3 Boot Monitor

Version 1.0.1.5 Branch mariner_101b5 by release-manager on Mon 06/14/04

Copyright 2003, Extreme Networks, Inc.

Watchdog disabled.

Press and hold the <spacebar> to enter the bootrom.

Boot path is /dev/fat/wd0a/vmlinux

(elf)

0x85000000/18368 + 0x85006000/6377472 + 0x8561b000/12752(z) + 91 syms/

Running image boot…

Starting Extremeware XOS 11.1.2b3

Copyright (C) 1996-2004 Extreme Networks. All rights reserved.

Protected by U.S. Patents 6,678,248; 6,104,700; 6,766,482; 6,618,388; 6,034,957

Then there’s my TiVo that runs Linux, my TV runs Linux (Philips TV), my QLogic FC switches run Linux, I know F5 equipment runs on Linux, and my phone runs Linux (Palm Pre). It really is pretty crazy how far Linux has come in the past 10 years. And I’m pretty convinced the GPL played a big part, making it more difficult to fork it off and keep the changes for yourself. A lot of momentum built up in Linux, and companies and everyone just flocked to it. I do recall early F5 load balancers used BSDI, but switched over to Linux (didn’t the company behind BSDI go out of business earlier this decade? Or maybe they got bought, I forget). Seems Linux is everywhere and in most cases you never notice it. The only way I knew it was in my TV is because the instructions came with all sorts of GPL disclosures.

In theory the BSD licensing scheme should make the *BSDs much more attractive, but for the most part *BSD has not been able to keep pace with Linux (outside some specific niches; I do love OpenBSD’s pf), so it never really got anywhere close to the critical mass Linux has.

Of course now someone will tell me about some big fancy device that runs BSD that is in every data center and every household, and I don’t know it’s there! If I recall right, Juniper’s JunOS is based on FreeBSD? And I think Force10 uses NetBSD.

I also recall being told by some EMC consultants back in 2004/2005 that the EMC Symmetrix ran Linux too. I do remember the CLARiiONs of the time (at least, maybe still) ran Windows (probably because EMC bought the company that made that product rather than creating it themselves).