Sorry, I’ve been really hammered recently. I just spent the last two weeks in Atlanta doing a bunch of data center work (and the previous week or two planning for that trip), and many nights I didn’t get back to the hotel until after 7AM. But I got most of it done; I still have a lot more to do remotely, though.

I know there have been some neat 3PAR announcements recently; I plan to try to cover them soon.

In the meantime onto a new thing to me: two factor authentication. I recently went through preparations for PCI compliance and among those things we needed two factor authentication on remote access. I had never set up nor used two factor before. I am aware of the common approach of using a keyfob or mobile app or something to generate random codes etc. Seemed kind of, I don’t know, not user friendly.

In advance of this I was reading a random thread on Slashdot, something related to two factor, and someone pointed out the company Duo Security as one option. The PCI consultants I was working with had not used it and had proposed another (self hosted) option which involved integrating our OpenLDAP with it, along with RADIUS and MySQL and a mobile app or keyfob with codes, and well, it all just seemed really complicated (compounded by the fact that we needed to get something deployed in about a week). I especially did not like the bit about having to type in a code. I mean, it wasn’t too long before that I got a support request from a non technical user trying to log in to our VPN – she would log in and the website would prompt her to download & install the software. She would download the software (but not install it) and think it wasn’t working – then try again (download and not install). I wanted something simpler.

So enter Duo Security, a SaaS platform for two factor authentication that integrates with quite a few back end things, including lots of SSL and IPSec VPNs (and pretty much anything that speaks RADIUS, which seems to be standard with two factor).

They tie it all up into a mobile app that runs on several different major mobile platforms both phone and tablet. The kicker for them is there are no codes. I haven’t seen any other two factor systems personally that are like this (have only observed maybe a dozen or so, by no means am I an expert at this). The ease of use comes in two forms:

Inline self enrollment for our SSL VPN

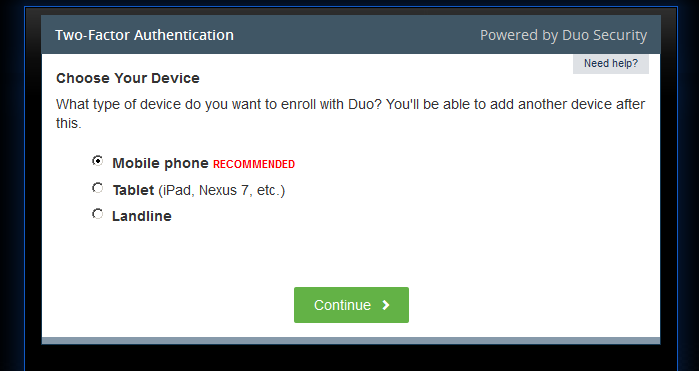

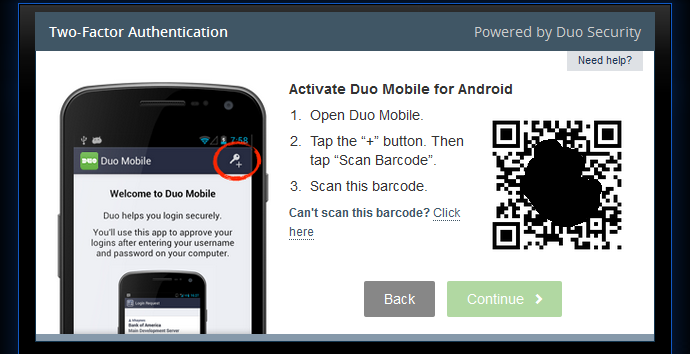

Initial setup is very simple: once the user types their username and password to log in to the SSL VPN (which is browser based of course), an iframe kicks in (how this magic works I do not know) and they are taken through a wizard that starts off looking something like this:

No separate app, no out of band registration process.

By comparison (and this is what prompted me to write this now), I just went through a two factor registration process for another company (which requires it now) that uses something called Symantec Validation & ID Protection, which is also a mobile app. Someone had to call me on the phone; I told them my Credential ID and a security code, then I had to wait for the 2nd security code and tell them that too, and that registered my device with whatever they use. Compared to Duo this is a positively archaic solution.

Yet another service provider I interact with regularly recently launched (and is pestering me to sign up for) two factor authentication – they too use these old fashioned codes. I’ve been hit with more two factor related things in the past month than in the past probably 5 years or something.

Sync your phone with Duo security by scanning a QR code with your phone (obscured the QR code a bit just in case that has sensitive info in it)

By contrast, the self enrollment in Duo is simple and requires no interaction on my part; users can enroll whenever they want. They can even register multiple devices on their own, and add/delete devices if they wish.

One of the times during testing I did have an issue scanning the QR code, which normally takes about 2 seconds on my phone. I was struggling with it for a minute or two, until I realized my mouse cursor was on top of it, which was blocking the scan from working. Maybe they could improve it by somehow cloaking the mouse cursor with javascript or something if it goes over the code, I don’t know.

Don’t have a mobile app? Duo can use those same old fashioned key codes too (via their own or a 3rd party keyfob or mobile app), or they can send you an SMS message, or make a voice call to you (the prompt basically says hit any button on the touch tone phone to acknowledge the 2nd factor; of course that phone# has to be registered with them).

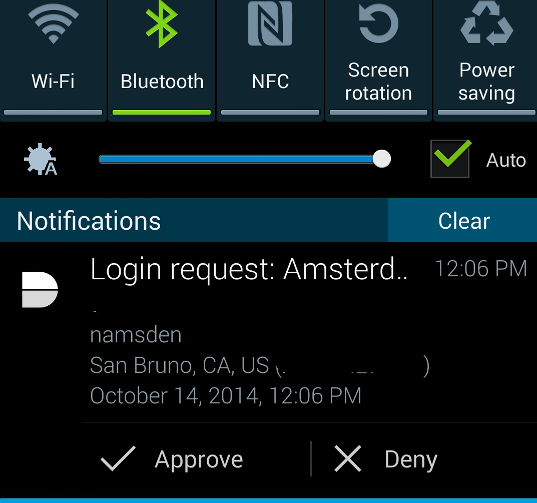

Simply press a button to acknowledge 2nd factor

The other easy part is that there are of course no codes to transcribe from a device to the computer. If you are using the mobile app, upon login you get a push notification from the app (in my experience, more often than not this comes in less than 2 seconds after I try to log in). The app doesn’t have to be running (it runs in the background even if you reboot your phone). I get a notification in Android (in my case) that looks like this:

Duo integrated nicely into Android

I obscured the IP address and the company name just to try to keep this not associated with the company I work for. If you have the app running in the foreground you can see a full screen login request similar to the smaller one above. If for some reason you are not getting the push notification you can tell the app to poll the Duo service for any pending notifications (I have only had to do that once so far).

The mobile app also has one of those number generator things, so you can use that in the event you don’t have a data connection on the phone. In the event the Duo service is offline you have the option of disabling the 2nd factor automatically (the default), so their being down doesn’t stop you from getting access; or, if you prefer ultra security, you can tell the system to prevent any users from logging in if the 2nd factor is not available.
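That choice between letting people in and locking everyone out when the service is down is usually called fail open versus fail closed. Here is a rough Python sketch of the idea. It is not Duo's code or API; `FailMode`, `ServiceUnavailable` and `second_factor_decision` are names made up for illustration.

```python
from enum import Enum


class FailMode(Enum):
    OPEN = "open"      # service unreachable: allow login on the first factor alone
    CLOSED = "closed"  # service unreachable: deny every login


class ServiceUnavailable(Exception):
    """Raised by the (hypothetical) second factor check when the service cannot be reached."""


def second_factor_decision(check, fail_mode=FailMode.OPEN):
    """Return True to allow the login, False to deny it.

    `check` is any zero-argument callable that returns True or False for an
    approved or denied request, or raises ServiceUnavailable.
    """
    try:
        return bool(check())
    except ServiceUnavailable:
        # Only an outage is treated specially; an explicit denial is still a denial.
        return fail_mode is FailMode.OPEN
```

Note that failing open only applies when the service cannot be reached at all. A user who rejects the request on their phone is still denied in either mode.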

Normally I am not one for SaaS type stuff – really the only exception is if the SaaS provides something that I can’t provide myself. In this case the simple two factor stuff, the self enrollment, and the ability to support SMS and phone voice calls (which about a half dozen of my users have opted to use) are not anything I could have set up in a short time frame anyway (our PCI consultants were not aware of any comparable solution – and they had not worked with Duo before).

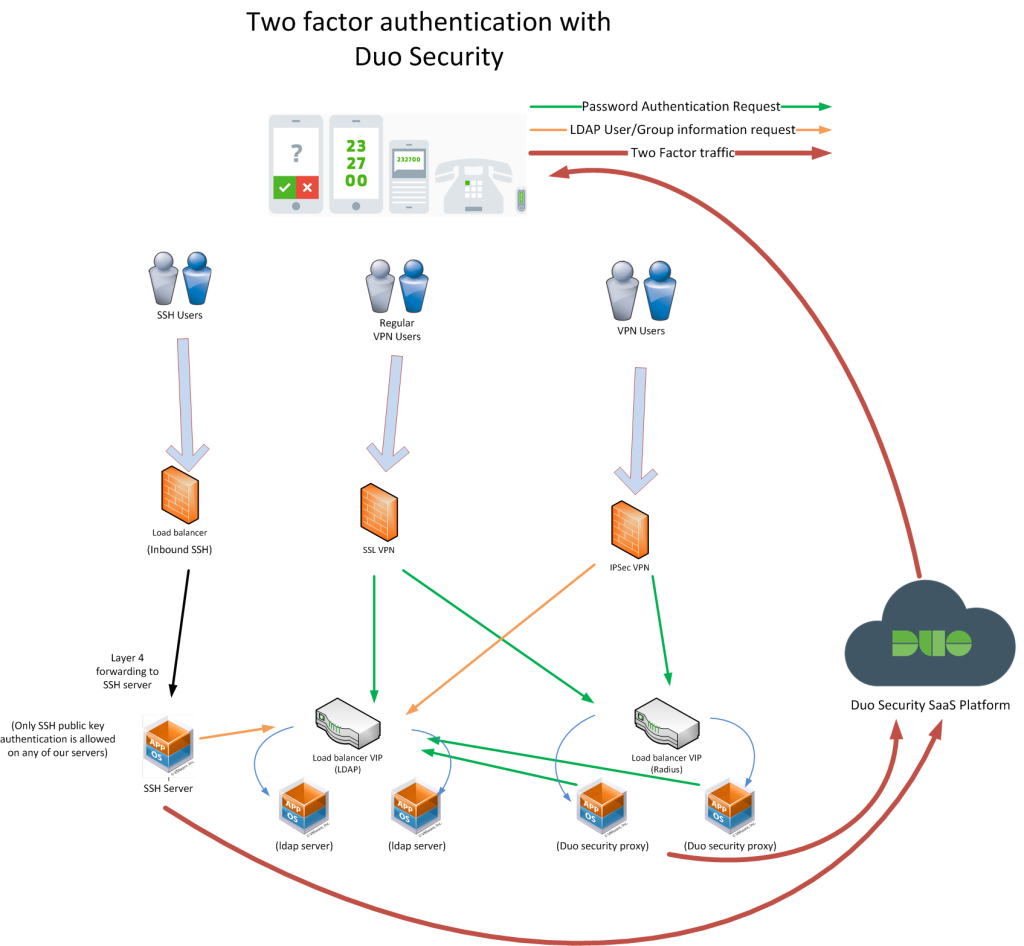

Duo claims you can get set up in just a few minutes – for me the reality was a little different. The instructions they had were only half of what I needed for our main SSL VPN, so I had to resort to instructions from our VPN appliance maker to make up the difference (and even then I was really confused, until support explained it to me. Their instructions were specifically for two factor on iOS devices, though they applied to my scenario as well). For us the requirement is that the VPN device talk to BOTH LDAP and RADIUS. LDAP stores the groups that users belong to, and those groups determine what level of network access they get. RADIUS is the 2nd factor (or in the case of our IPSec VPN the first factor too; more on that in a moment). In the end it took me probably 2-3 hours to figure it out, and about half of that was wondering why I couldn’t log in (because I hadn’t set up the VPN->LDAP link, the authentication wasn’t getting my group info, so I was not getting any network permissions).
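To make that failure mode concrete, here is a small Python sketch of how group membership can drive network access. It is only an illustration of the idea, not how the VPN appliance works internally; the group names, network labels and function names are all invented.

```python
# Hypothetical group names and network labels, purely for illustration.
GROUP_NETWORKS = {
    "vpn-ops": {"production", "staging", "office"},
    "vpn-dev": {"staging", "office"},
    "vpn-staff": {"office"},
}


def allowed_networks(ldap_groups):
    """Union of the networks granted by each group the user belongs to."""
    networks = set()
    for group in ldap_groups:
        networks |= GROUP_NETWORKS.get(group, set())
    return networks


def login(second_factor_ok, ldap_groups):
    """Return the set of networks a session gets; an empty set means no access."""
    if not second_factor_ok:
        return set()
    return allowed_networks(ldap_groups)
```

With no LDAP lookup configured, the group list comes back empty, so `login(True, [])` returns an empty set: the user authenticates fine but cannot reach anything, which matches the symptom described above.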

So for our main SSL VPN, I had to configure a primary and a secondary authentication, and initially with Duo I just kept it in pass through mode (only talking to them and not any other authentication source) because the SSL VPN was doing the password auth via LDAP.

When I went to hook up our IPSec VPN, that was a different configuration. It did not support dual auth of both LDAP and RADIUS, though it could do LDAP group lookups and password auth with RADIUS. So I put the Duo proxy in a more normal configuration, which meant I needed another RADIUS server, integrated with our LDAP, that the Duo proxy could talk to in order to authenticate passwords (it runs on the same VM as the Duo proxy on a different port, so the proxy talks to localhost). So the IPSec VPN sends a RADIUS request to the Duo proxy, which then sends that information to the other RADIUS server (integrated with LDAP) and to their SaaS platform, and gives a single response back to allow or deny the user.
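The flow through the proxy in that setup boils down to two checks and one answer. Here is a minimal Python sketch of that logic, assuming the password is checked first and the second factor is only requested once the password is good. The function and the two callables are stand-ins I made up; this is not the Duo proxy's actual code.

```python
def proxy_authenticate(username, password, check_password, check_second_factor):
    """Return "accept" or "reject" for one login attempt.

    check_password(username, password) stands in for the RADIUS server that
    is backed by LDAP; check_second_factor(username) stands in for the call
    out to the hosted two factor service. Both return True or False.
    """
    # First factor: a bad password is rejected without bothering the user's phone.
    if not check_password(username, password):
        return "reject"
    # Second factor: only requested once the password is known to be good.
    if not check_second_factor(username):
        return "reject"
    # The VPN only ever sees this single combined answer.
    return "accept"
```

From the VPN's point of view there is just one RADIUS server giving one answer, which is why this works even on a device that cannot do dual authentication itself.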

At the end of the day the SSL VPN ends up authenticating the user’s password twice (once via LDAP once via RADIUS), but other than being redundant there is no harm.

Here is what the basic architecture looks like. This graphic is uglier than my official one since I wanted to hide some of the details, but you can get the gist of it.

The SSL VPN supported redundant authentication schemes, so if one Duo proxy was down it would fail over to another one. The problem was that the timeout was too long: it would take upwards of 3 minutes to log in (and you are in danger of the login timing out). So I set up a pair of Duo proxies and am load balancing between them with a layer 7 health check. If a failure occurs there is no delay in login and it just works better.
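The reason the load balancer avoids the long timeout is that it already knows which proxy is healthy before a login arrives. A rough Python sketch of that idea follows; `ProxyPool` and the `is_healthy` callable are hypothetical, and a real load balancer would run its health checks in the background on a schedule rather than at pick time.

```python
import itertools


class ProxyPool:
    """Round-robin over whichever proxies currently pass a health check."""

    def __init__(self, proxies, is_healthy):
        self._proxies = list(proxies)
        self._is_healthy = is_healthy      # callable: proxy name -> True/False
        self._counter = itertools.count()  # drives the round-robin rotation

    def pick(self):
        """Return the next healthy proxy, or None if every proxy is down."""
        healthy = [p for p in self._proxies if self._is_healthy(p)]
        if not healthy:
            return None
        return healthy[next(self._counter) % len(healthy)]
```

Because an unhealthy proxy is never handed a request, a login does not have to sit through a timeout before being retried against the second proxy.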

As the image shows, I have integrated SSH logins with Duo as well in a couple of cases. There is no pretty inline self enrollment, but if you happen to not be enrolled, the two factor process will spit out a URL upon first login to the SSH host, which you put into your browser to enroll in two factor.

I deployed the setup to roughly 120 users a few weeks ago, and within a few days roughly 50-60 users had signed up. Internal IT said there were zero – count ’em zero – help desk tickets related to the new system; it was that easy and functional to use. My biggest concern going into this whole project was tight timelines and really no time for any sort of training. Duo Security made that possible (even without those timelines I still would have preferred this solution, or at least this type of solution, assuming there is something else similar on the market; I am not aware of any).

My only support tickets to date were from two users who needed to re-register their devices (because they got new devices). Currently we are on the cheaper of the two plans, which does not allow self management of devices. So I just log in to the Duo admin portal and delete their phone, and they can re-enroll at their leisure.

Duo’s plans start as low as $1/user/month. They have a $3/user/month enterprise package which gives more features. They also have an API package for service providers and stuff which I think is $3/user/year (with a minimum number of users).

I am not affiliated with Duo in any way, not compensated by them, not bribed, not given any fancy discounts. But given that I have written brief emails to the two companies that have recently deployed two factor, I thought I would write this so I could point them and others to my story here to get more insight on a better way to do two factor authentication.