Looks like Facebook released a pretty cool tool that apparently provides the ability to perform MySQL schema changes online, something most real databases take for granted.

Another thing noted by our friends at The Register was how extensively Facebook leverages MySQL. I was working on a project revolving around Apache Hadoop, and someone involved with it was under the incorrect assumption that Facebook stores most of its data on Hadoop.

At Facebook, MySQL is the primary repository for user data, with InnoDB the accompanying storage engine.

[..]

All Callaghan will say is that the company runs “X thousands” of MySQL servers. “X” is such a large number, the company needed a way of making index changes on live machines.

I wouldn’t be surprised if they had about as many servers running MySQL as servers running Hadoop. After all, Yahoo! is the biggest Hadoop user, and at my last count it had “only” about 25,000 servers running the software.

It certainly is unfortunate that so many people out there latch onto some sort of solution and think they can get it to solve all of their problems.

Hadoop is a good example; lots of poor assumptions are made around it. It’s designed to do one thing really well, and it does that fairly well. But when you think you can adapt it into a more general purpose storage system, it starts falling apart. That is completely understandable, since it wasn’t designed for that purpose. Many people don’t understand that simple concept though.

Another poor use of Hadoop is trying to shoehorn a real time application on top of it; it just doesn’t work. Yet there are people out there (I’ve talked to some of them in person) who have devoted significant developer resources to attacking that angle. Spend thirty minutes researching the topic and you will realize pretty quickly that it is a wasted effort. Google couldn’t even do it!

Speaking of Hadoop, and Oracle for that matter, it seems Oracle announced a Hadoop-style system yesterday at OpenWorld, only Oracle’s version seems to be orders of magnitude faster (and orders of magnitude more expensive, given the amount of flash it is using).

Using the skinnier and faster SAS disks, Oracle says that the Exadata X2-8 appliance can deliver up to 25GB/sec of raw disk bandwidth on uncompressed data and 50GB/sec across the flash drives. The disks deliver 50,000 I/O operations per second (IOPs), while the flash delivers 1 million IOPs. The machine has 100TB of raw disk capacity per rack and up to 28TB of uncompressed user data. The rack can load data at a rate of 5TB per hour. Using the fatter disks, the aggregate disk bandwidth drops to 14GB/sec, but the capacity goes up to 336TB and the user data space grows to 100TB.

The system is backed by an InfiniBand-based network. I didn’t notice specifics, but I assume 40Gbps per system.

Quite impressive indeed. Like Hadoop, this Exadata system is optimized for throughput; it can do IOPS pretty well too, but it’s clear that throughput is the goal. By contrast, a more traditional SAN gets single digit gigabytes per second for data transfers even on the ultra high end, at least on the industry standard SPC-2 benchmark.

- IBM DS8700 rated at around 7.2 Gigabytes/second with 256 drives and 256GB cache costing a cool $2 million

- Hitachi USP-V rated at around 8.7 Gigabytes/second with 265 drives and 128GB cache costing a cool $1.6 million

Now it’s not really an apples to apples comparison of course, but it can give some frame of reference.
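To put rough numbers on that frame of reference, here is a quick back-of-the-envelope sketch in Python. It only divides a data size by the peak bandwidth figures quoted above, so it is an idealized best case and not a benchmark. The 28TB workload size, the decimal unit conversion, and the `scan_seconds` helper are my own assumptions for illustration.

```python
# Back-of-the-envelope only: these are the peak bandwidth figures quoted
# in this post, not measured results from any real workload.
TB_IN_GB = 1000  # assumption: decimal units, 1 TB = 1000 GB

# Peak sequential bandwidth in GB/sec, as quoted above.
BANDWIDTH_GB_PER_SEC = {
    "Exadata X2-8 (disk)": 25.0,
    "Exadata X2-8 (flash)": 50.0,
    "IBM DS8700 (SPC-2)": 7.2,
    "Hitachi USP-V (SPC-2)": 8.7,
}


def scan_seconds(data_tb, gb_per_sec):
    """Idealized time to stream data_tb terabytes at a sustained gb_per_sec."""
    return data_tb * TB_IN_GB / gb_per_sec


if __name__ == "__main__":
    # Hypothetical workload: one full pass over 28TB, the uncompressed
    # user data figure quoted for the faster-disk configuration.
    data_tb = 28
    for name, bandwidth in BANDWIDTH_GB_PER_SEC.items():
        minutes = scan_seconds(data_tb, bandwidth) / 60
        print(f"{name:24s} {minutes:6.1f} min")
```

Even as a crude estimate it shows the gap: a full pass that takes the SANs around an hour would take the Exadata disks under twenty minutes, assuming every system could actually sustain its quoted peak.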

It seems to scale really well according to Oracle:

Ellison is taking heart from the Exadata V2 data warehousing and online transaction processing appliance, which he said now has a $1.5bn pipeline for fiscal 2011. He also bragged that at Softbank, Teradata’s largest customer in Japan, Oracle won a deal to replace 60 racks of Teradata gear with three racks of Exadata gear, which he said provided better performance and which had revenues that were split half-and-half on the hardware/software divide.

From 60 to 3? Hard to ignore those sorts of numbers!

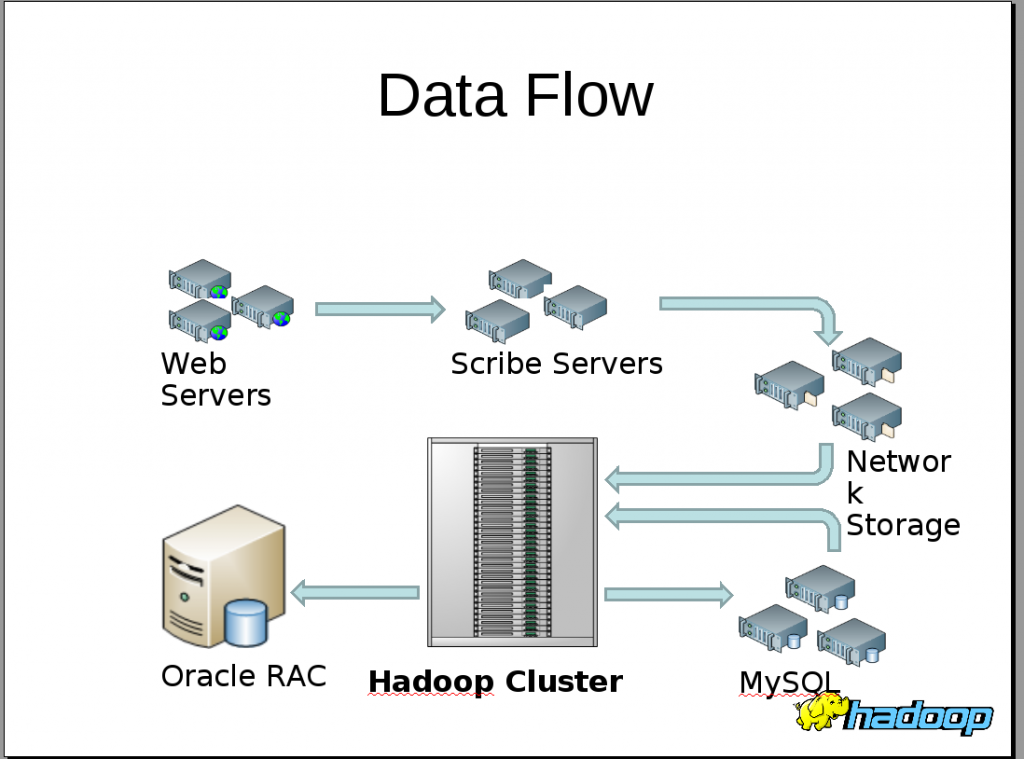

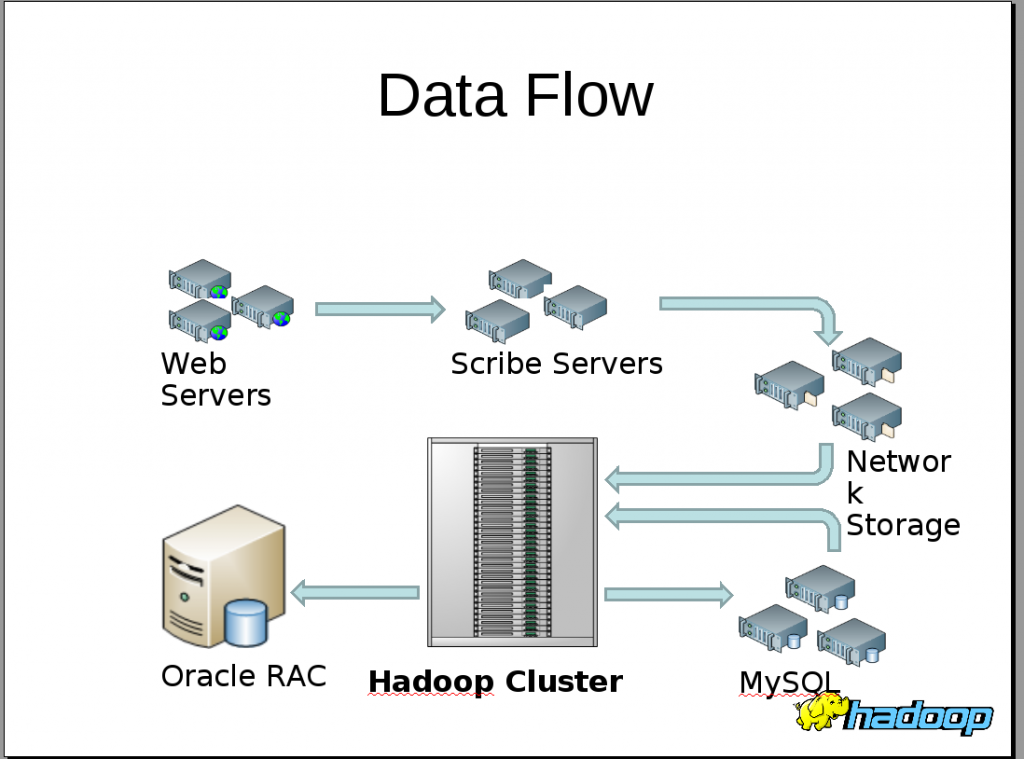

Oh, and speaking of Facebook, and Hadoop, and Oracle: as part of my research into the topic of Hadoop I came across this. I don’t know how up to date it is, but I thought it was neat. Oracle DB is one product I do miss using. The company is filled with scumbags to be sure; I had to educate their own sales people on their licensing the last time I dealt with them. But it is a nice product, works really well, and IMO at least it’s pretty easy to use, especially with Enterprise Manager (cursed by DBAs from coast to coast, I know!). Of course, it makes MySQL look like a text file based key-value pair database by comparison.

Anyways, on to the picture!

Oh my god! Facebook is not only using Hadoop, but they are using MySQL, normal NAS storage, and even Oracle RAC! Who’da thunk it?

Find a tool or a solution that does everything well? The more generic the approach, the more difficult it is to pull off, which is why solutions like that typically cost a significant amount of money: there is significant value in what the product provides. If perhaps the largest open source platform in the world (Linux) has not been able to do it (how many big time open source advocates do you see running OS X, and how many run OS X on their servers?), who can?

That’s what I thought.

(posted from my Debian Lenny workstation with updates from my Ubuntu Lucid Lynx laptop)