I was at a little event thrown for the Vertica column-based database, as well as Tableau Software, a Seattle-based data visualization company. Vertica was recently acquired by HP for an undisclosed sum. I had not heard of Tableau until today.

I went in not really knowing what to expect. I have heard good things about Vertica from my friend over there, but it’s really not an area I have much expertise in.

I left with my mouth on the floor. I mean holy crap, that combination looks wicked: the world’s fastest column-based data warehouse combined with a data visualization tool that is so easy some of my past managers could even run it. I really don’t have words to describe it.

I never really considered Vertica for storing IT-related data, but they brought up a case study with one of their bigger customers, Comcast, which sends more than 65,000 events a second into a Vertica database (including logs, SNMP traps and other data). That is hundreds of terabytes of data with sub-second query response times. I don’t know if they use Tableau Software’s products or not, but it was a good use case for storing IT data in Vertica.

(from Comcast case study)

The test included a snapshot of their application running on a five-node cluster of inexpensive servers with 4 CPU AMD 2.6 GHz core processors with 64-bit 1 MB cache; 8 GB RAM; and ~750 GBs of usable space in a RAID-5 configuration.

To stress-test Vertica, the team pushed the average insert rate to 65K samples per second; Vertica delivered millisecond-level performance for several different query types, including search, resolve and accessing two days’ worth of data. CPU usage was about 9%, with a fluctuation of +/- 3%, and disk utilization was 12% with spikes up to 25%.

That configuration could of course easily fit on a single server. How about a 48-core Opteron with 256GB of memory and some 3PAR storage or something? Or maybe a DL385G7 with 24 cores, 192GB memory (24x8GB), and 16x500GB 10k RPM SAS disks with RAID 5 and dual SAS controllers with 1GB of flash-backed cache (1 controller per 8 disks). Maybe throw some Fusion IO in there too?
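Here is a quick back-of-the-envelope check of that claim. The cluster numbers come straight from the case study. The single-server numbers are my assumptions: I am treating the 16 disks as two separate 8-disk RAID 5 sets (one per controller), each losing one disk's worth of space to parity, and ignoring filesystem overhead.

```python
# Comcast test cluster, figures taken from the case study quoted above.
nodes = 5
ram_per_node_gb = 8
usable_disk_per_node_gb = 750

cluster_ram_gb = nodes * ram_per_node_gb            # 40 GB in total
cluster_disk_gb = nodes * usable_disk_per_node_gb   # 3750 GB in total

# Hypothetical single server (the DL385G7 idea).
# Assumption: two RAID 5 sets of 8 disks, one set per controller,
# with one disk's worth of capacity per set going to parity.
disks = 16
disk_size_gb = 500
controllers = 2
disks_per_set = disks // controllers                          # 8
usable_per_set_gb = (disks_per_set - 1) * disk_size_gb        # 3500 GB
server_disk_gb = controllers * usable_per_set_gb              # 7000 GB
server_ram_gb = 24 * 8                                        # 192 GB

print(f"cluster: {cluster_ram_gb} GB RAM, {cluster_disk_gb} GB usable disk")
print(f"server:  {server_ram_gb} GB RAM, {server_disk_gb} GB usable disk")
print("fits on one box:",
      server_ram_gb >= cluster_ram_gb and server_disk_gb >= cluster_disk_gb)
```

On raw capacity alone the one box has room to spare. This says nothing about whether a single server would deliver the same query performance.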

Now I suspect that there will be additional overhead with trying to feed IT data into a Vertica database since you probably have to format it in some way.
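To give a rough idea of what that formatting step might look like, here is a minimal sketch that turns syslog-style lines into CSV rows, which is the sort of thing a bulk loader can usually ingest. The log layout, the regular expression, and the column names are all my own assumptions for illustration. Nothing here comes from the Vertica presentation.

```python
import csv
import io
import re

# Assumed syslog-style layout: "Mon DD HH:MM:SS host program[pid]: message".
# Real feeds (SNMP traps, application logs) would each need their own pattern.
LINE_RE = re.compile(
    r"^(?P<timestamp>\w{3}\s+\d{1,2} \d{2}:\d{2}:\d{2}) "
    r"(?P<host>\S+) "
    r"(?P<program>[^:\[\s]+)(?:\[(?P<pid>\d+)\])?: "
    r"(?P<message>.*)$"
)

# Hypothetical column names for the target table.
FIELDS = ["timestamp", "host", "program", "pid", "message"]


def parse_line(line):
    """Return a dict of fields, or None if the line does not match."""
    match = LINE_RE.match(line.rstrip("\n"))
    if match is None:
        return None
    # A missing optional group (such as pid) becomes an empty string.
    return {name: match.group(name) or "" for name in FIELDS}


def to_csv(lines):
    """Convert matching log lines to CSV text, skipping unparseable ones."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    for line in lines:
        row = parse_line(line)
        if row is not None:
            writer.writerow(row)
    return out.getvalue()


sample = [
    "Mar  3 14:02:11 web01 sshd[2211]: Failed password for root from 10.0.0.5",
    "Mar  3 14:02:12 db01 kernel: eth0: link down",
    "not a log line",
]
print(to_csv(sample))
```

The parsing itself is cheap. The real work is probably agreeing on a schema for all the different kinds of events.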

Another really cool feature of Vertica: all of its data is mirrored at least once to another server. Nothing special about that, right? Well, they go one step further and give you the ability to store the data pre-sorted in two different ways. Mirror #1 may be sorted by one field and mirror #2 by another, maximizing use of every copy of the data while maintaining data integrity.

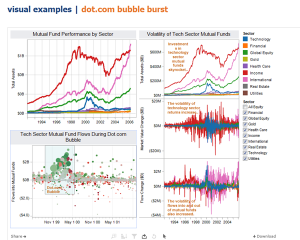
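To make the two-sort-order idea concrete, here is a toy model in Python. It is only a sketch of the concept as I understood it, not how Vertica actually implements it. The row layout, the `SortedCopy` class and the `query` function are all made up for illustration. Each copy holds every row, so either one can stand in for the other, but each is sorted on a different column so a lookup can go to whichever copy answers it with a binary search.

```python
from bisect import bisect_left, bisect_right

# Hypothetical event rows: (timestamp, host, message).
TIMESTAMP, HOST = 0, 1
rows = [
    (1003, "web02", "link down"),
    (1001, "db01", "disk warning"),
    (1002, "web01", "login failed"),
    (1004, "db01", "disk ok"),
]


class SortedCopy:
    """One full copy of the rows, kept sorted by a single column."""

    def __init__(self, rows, column):
        self.rows = sorted(rows, key=lambda r: r[column])
        # Parallel list of sort keys so bisect can search it directly.
        self.keys = [r[column] for r in self.rows]

    def lookup(self, value):
        """Binary-search for all rows whose sort column equals value."""
        lo = bisect_left(self.keys, value)
        hi = bisect_right(self.keys, value)
        return self.rows[lo:hi]


# Two mirrors of the same data, each sorted on a different field.
copies = {
    TIMESTAMP: SortedCopy(rows, TIMESTAMP),
    HOST: SortedCopy(rows, HOST),
}


def query(column, value):
    """Send the lookup to the copy that is sorted on the filtered column."""
    return copies[column].lookup(value)


print(query(HOST, "db01"))
print(query(TIMESTAMP, 1002))
```

Both copies contain the same rows, so losing one still leaves a complete data set. You just lose the fast path for one of the two sort orders.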

Something that Tableau did really well: you don’t need to know in advance how you want to present your data. You just drag stuff around and it will try to make intelligent decisions on how to represent it. It’s amazingly flexible.

Tableau does something else well too: there is no language to learn. You don’t need to know SQL and you don’t need to know custom commands to do things. The guy giving the presentation basically never touched his keyboard, and he published some really kick-ass reports to the web in a matter of seconds. They were fully interactive, so users could click on something and drill down really easily and quickly.

This is all with the caveat that I don’t know how complicated it might be to get the data into the database in the first place.

Maybe there are other products out there that are as easy to use as Tableau. I don’t know, as it’s not a space I spend much time looking at. But this combination looks incredibly exciting.

Both products have fully functional free evaluation versions available to download from their respective sites.

Vertica licensing is based on the amount of data that is stored (I assume regardless of the number of copies stored, but I haven’t investigated too much). There is no per-user, per-node, or per-CPU licensing. If you want more performance, add more servers or whatever and you don’t pay anything more. Vertica automatically re-balances the cluster as you add more servers.

Tableau is licensed, as far as I know, on a named-user basis or a per-server basis.

Both products are happily supported in VMware environments.

This blog entry really does not do the presentation justice. I don’t have the words for how cool this stuff was to see in action. There aren’t a lot of products or technologies that I get this excited about, but these have shot to near the top of my list.

Time to throw your Hadoop out the window and go with Vertica.