(Standard disclaimer HP covered my hotel and stuff while in Vegas etc etc…)

I have tried to be a vocal critic of the whole software defined movement, in that much of it is hype today, has been for a while, and will likely continue to be for a while yet. My gripe is not so much about the approach (the world of “software defined” sounds pretty neat); my gripe is about the marketing behind it that tries to claim we’re already there, and we are not, not even close.

I was able to vent a bit with the HP team(s) on the topic and they acknowledged that we are not there yet either. There is a vision, and there is a technology. But there aren’t a lot of products yet, at least not a lot of promising products.

Software defined networking is perhaps one of the more (if not the most) mature platforms to look at. Last year I ripped pretty good into the whole idea, with good points I thought: basically, the technology solves a problem I do not have and have never had. I believe most organizations do not have a need for it either (outside of very large enterprises and service providers). See the link for a very in depth 4,000+ word argument on SDN.

More recently HP tried to hop on the bandwagon of Software Defined Storage, which in their view is basically the StoreVirtual VSA. To me that product doesn’t fit the scope of software defined; it is just a brand propped up onto a product that was already pretty old and already running in a VM.

Speaking of which, HP considers this VSA along with their ConvergedSystem 300 to be “hyper converged”, and at least the people we spoke to do not see a reason to acquire the likes of Simplivity or Nutanix (why are those names so hard to remember the spelling..). HP says most of the deals Nutanix wins are small VDI installations and aren’t seen as a threat; HP would rather go after the VCEs of the world. I believe Simplivity is significantly smaller.

I’ve never been a big fan of StoreVirtual myself. It seems like a decent product, but not something I get too excited about. The solutions that these new hyper converged startups offer sound compelling on paper, at least for the lower end of the market.

The future is software defined

The future is not here yet.

It’s going to be another 3-5 years (perhaps more). In the meantime customers will get drip fed the technology in products from various vendors that can do software defined in a fairly limited way (relative to the grand vision anyway).

“When hiring a network engineer, many customers would rather hire someone who has a few years of Python experience than someone with more years of networking experience, because that is where they see the future in 3-5 years’ time.”

My pushback to HP on that particular quote (not quoted precisely) is that that level of sophistication is very hard (and expensive) to hire for. A good comparison is hiring for something like Hadoop. It is very difficult to compete with the compensation packages of the largest companies, which offer $30-50k+ more than smaller (even billion $) companies.

So my point is the industry needs to move beyond the technology and into products. Having a requirement of knowing how to code is a sign of an immature product. Coding is great for extending functionality, but need not be a requirement for the basics.

HP seemed to agree with this, and believes we are on that track but it will take a few more years at least for the products to (fully) materialize.

HP Oneview

(here is the quick video they showed at Discover)

I’ll start off by saying I’ve never really seriously used any of HP’s management platforms (or anyone else’s for that matter). All I know is that they (in general, not HP specific) seem to be continuing to proliferate and fragment.

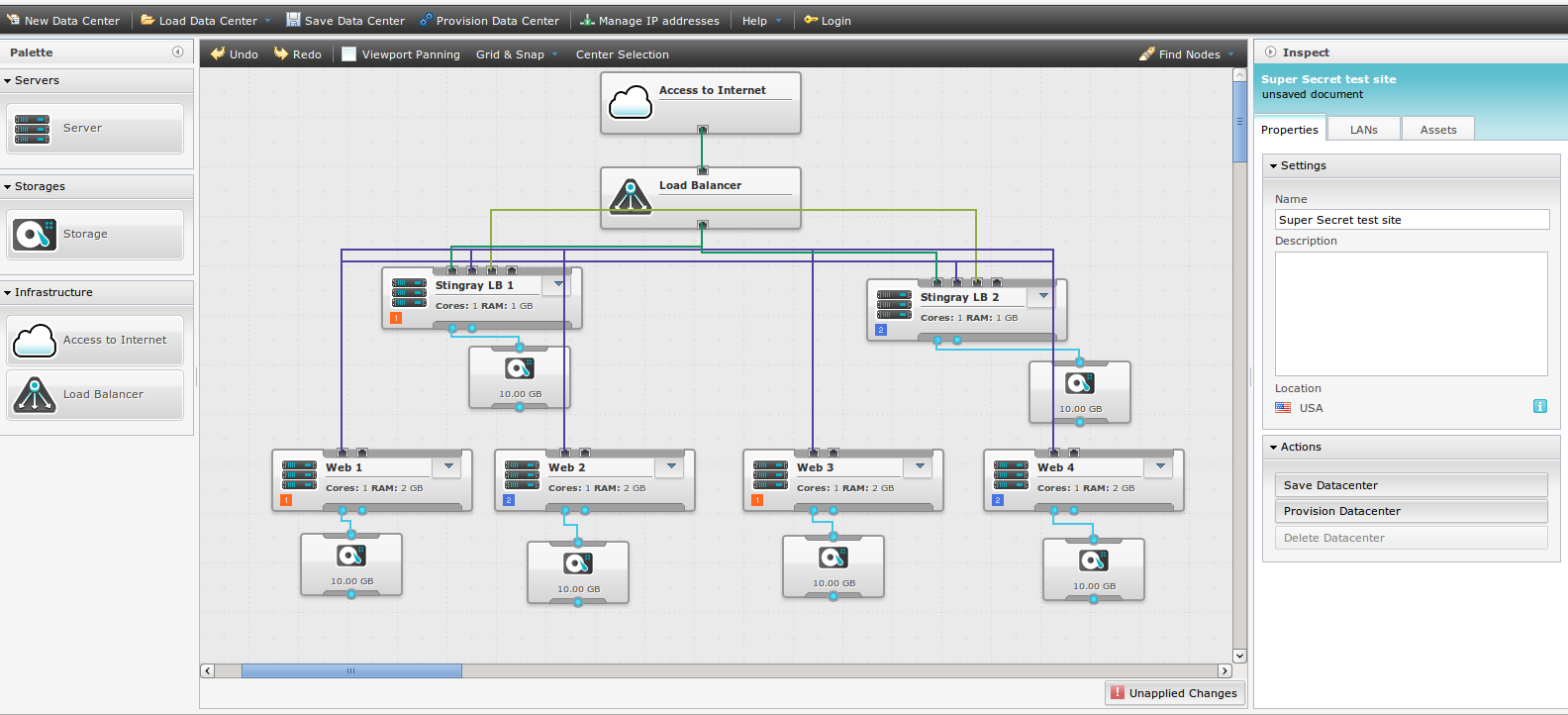

HP Oneview 1.10 is a product that builds on this promise of software defined. In the past five years of HP pitching converged systems, seeing the demo for Oneview was the first time I’ve ever shown just a little bit of interest in converged.

HP Oneview was released last October, I believe, and HP claims something along the lines of 15,000 downloads or installations. Version 1.10, announced at Discover, offers some new integration points including:

- Automated storage provisioning and attachment to server profiles for 3PAR StoreServ Storage in traditional Fibre Channel SAN fabrics, and Direct Connect (FlatSAN) architectures.

- Automated carving of 3PAR StoreServ volumes and zoning the SAN fabric on the fly, and attaching of volumes to server profiles.

- Improved support for Flexfabric modules

- Hyper-V appliance support

- Integration with MS System Center

- Integration with VMware vCenter Ops manager

- Integration with Red Hat RHEV

- Similar APIs to HP CloudSystem

Oneview is meant to be lightweight, and to act as a sort of proxy into other tools, such as Brocade’s SAN manager in the case of Fibre Channel (myself I prefer Qlogic management, but I know Qlogic is getting out of the switch business). For several HP products such as 3PAR and Bladesystem, though, Oneview seems to talk to them directly.

Oneview aims to provide a view that starts at the data center level and can drill all the way down to individual servers, chassis, and network ports.

However the product is obviously still in its early stages. It currently only supports HP’s Gen8 DL systems (G7 and Gen8 BL). HP is thinking about adding support for older generations, but their tone made me think they will drag their feet long enough that it’s no longer demanded by customers. Myself, the bulk of what I have in my environment today is G7; I only deployed a few Gen8 systems two months ago. Also all of my SAN switches are Qlogic (and I don’t use HP networking now), so Oneview functionality would be severely crippled if I were to try to use it today.
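To put that support limitation in concrete terms, here is a minimal sketch of the kind of check I’d run against my own inventory. The support table only encodes what I wrote above (DL: Gen8 only; BL: G7 and Gen8); it is not taken from an HP compatibility matrix, and the server names, the `SUPPORTED` table and both functions are made up for illustration. None of this is a Oneview API.

```python
# Hypothetical inventory check. The rules below only mirror what this post
# says about Oneview support; they are not from an official HP support matrix.
SUPPORTED = {
    "DL": {"Gen8"},        # rack servers: Gen8 only
    "BL": {"G7", "Gen8"},  # blades: G7 and Gen8
}


def is_supported(family, generation):
    """Return True if the (family, generation) pair is in the table above."""
    return generation in SUPPORTED.get(family, set())


def split_inventory(servers):
    """Split (name, family, generation) tuples into supported/unsupported names."""
    supported, unsupported = [], []
    for name, family, generation in servers:
        target = supported if is_supported(family, generation) else unsupported
        target.append(name)
    return supported, unsupported


if __name__ == "__main__":
    # Made-up servers, roughly shaped like a mostly-G7 environment.
    inventory = [
        ("web01", "DL", "G7"),
        ("web02", "DL", "Gen8"),
        ("blade01", "BL", "G7"),
    ]
    manageable, left_out = split_inventory(inventory)
    print("manageable:", manageable)
    print("left out:", left_out)
```

In an environment like mine, most of the rack servers would land in the "left out" list, which is the point.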

The product on the surface does show a lot of promise though. There is a 3 minute video introduction here.

HP pointed out you would not manage your cloud from this, but instead the other way around, cloud management platforms would leverage Oneview APIs to bring that functionality to the management platform higher up in the stack.

HP has renamed their Insight Control systems for vCenter and MS System Center to Oneview.

The goal of Oneview is automation that is reliable and repeatable. As with any such tool, it seems like you’ll have to work within its constraints and go around it when it doesn’t do the job.

“If you fancy being able to deploy an ESX cluster in 30 minutes or less on HP Proliant Gen8 systems, HP networking and 3PAR storage, then this may be the tool for you.” – me

The user interface seems quite modern and slick.

They expose a lot of functionality in an easy to use way but one thing that struck me watching a couple of their videos is it can still be made a lot simpler – there is a lot of jumping around to do different tasks. I suppose one way to address this might be broader wizards that cover multiple tasks in the order they should be done in or something.