I was out of town for most of last week, so I didn’t catch this bit of news when it came out.

It seems that shortly after Facebook released their server/data center designs, Microsoft has done the same.

I have to admit when I first heard of the Facebook design I was interested, but once I saw the design I felt let down. I mean, is that the best they could come up with? It seems there are market-based solutions that are vastly superior to what Facebook designed themselves. Facebook did well by releasing in-depth technical information, but the reality is only a tiny number of organizations would ever think about attempting to replicate this kind of setup. So it’s more for the press/geek factor than being something practical.

I attended a Datacenter Dynamics conference about a year ago, and the most interesting thing I saw there was a talk by a Microsoft guy who spoke about their data center designs and focused a lot on their new(ish) “IT PAC”. I was really blown away. Not much Microsoft does has blown me away, but consider me blown away by this. It was (and still is) by far the most innovative data center design I have ever seen, at least. Assuming it works, of course; at the time the guy said there were still some kinks they were working out, and it wasn’t in wide-scale deployment at all at that point. I’ve heard on the grapevine that Microsoft has been deploying them here and there in a couple of facilities in the Seattle area. No idea how many though.

Anyways, back to the Microsoft server design. I commented last year on the concept of using rack-level batteries and DC power distribution as another approach to server power requirements, rather than the approach that Google and some others have taken, which involves server-based UPSs and server-based power supplies (which seem much less efficient).

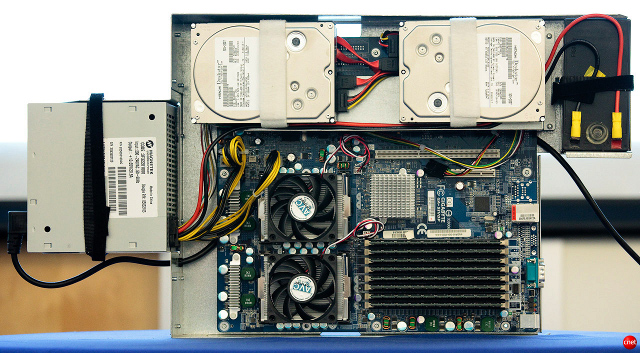

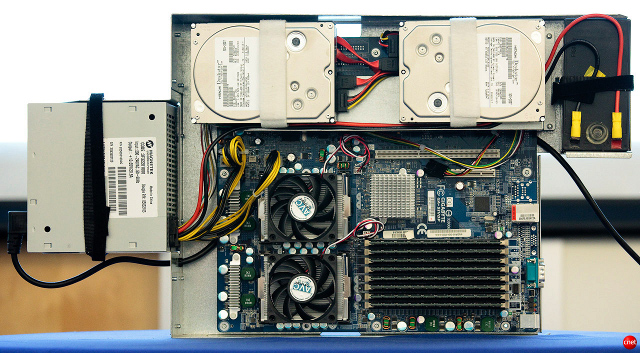

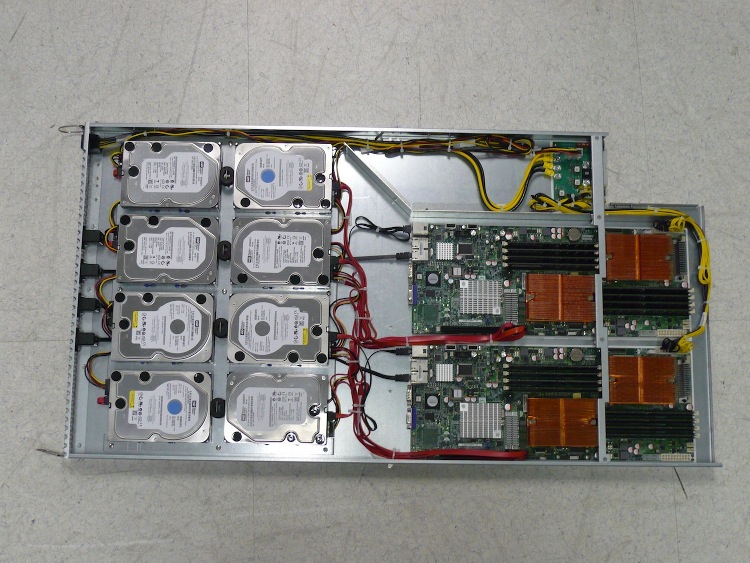

Google Server Design with server-based batteries and power supplies

Add to that rack-based cooling (or in Microsoft’s case – container-based cooling), à la SGI CloudRack C2/X2, and Microsoft’s extremely innovative IT PAC containers, and you’ve got yourself a really bad ass data center. Microsoft seems to borrow heavily from the CloudRack design, enhancing it even further. The biggest update would be the power system with the rack-level UPS and 480V distribution. I don’t know of any commercial co-location data centers that offer 480V to the cabinets, but when you’re building your own facilities you can go to the ends of the earth to improve efficiency.

Microsoft’s design permits up to 96 dual-socket servers (2 per rack unit), each with 8 memory slots, in a single 57U rack (the super tall rack is due to the height of the container). This compares to the CloudRack C2, which fits 76 dual-socket servers in a 42U rack (38U of it used for servers).
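As a rough back-of-the-envelope check on those density figures, here is a small Python sketch. The function name is my own, and it only uses the numbers quoted above; it ignores switches, power shelves and anything else that takes up rack space.

```python
def servers_per_rack_unit(servers: int, rack_units: int) -> float:
    """Servers per rack unit (U) of total rack height."""
    return servers / rack_units


# Figures quoted in the post
microsoft = servers_per_rack_unit(96, 57)   # 57U rack
cloudrack = servers_per_rack_unit(76, 42)   # 42U rack, 38U used for servers

print(f"Microsoft:    {microsoft:.2f} servers/U")
print(f"CloudRack C2: {cloudrack:.2f} servers/U")

# Rack units occupied by servers at 2 servers per U
microsoft_server_units = 96 // 2
print(f"Microsoft server space: {microsoft_server_units}U of 57U")
```

Measured per rack unit the two land in the same ballpark; the Microsoft rack's higher total comes from its extra height.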

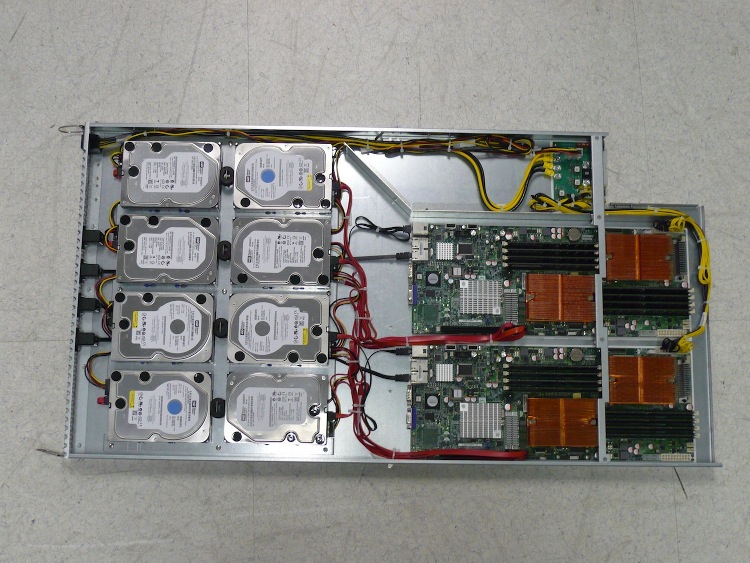

SGI CloudRack C2 tray with 2 servers, 8 disks (note no power supplies or fans, those are provided at the rack level)

My only question on Microsoft’s design is their mention of “top of rack switches”. I’ve never been a fan of top of rack switches myself. I have always preferred to have switches in the middle of the rack, which is better for cable management (half of the cables go up, the other half go down), especially when we are talking about 96 servers in one rack. Maybe it’s just a term they are using to describe the kind of switch, though there is a diagram which shows the switches positioned at the top of the rack.

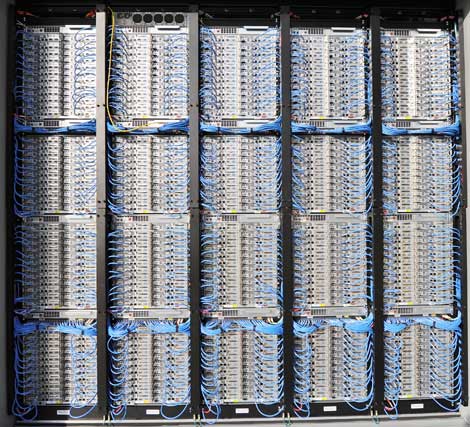

SGI CloudRack C2 with top of rack switches positioned in the middle of the rack

I am also curious about their power usage. They say they aim for 40-60 watts/server, which seems impossibly low for a dual-socket system, so they have likely done some work to figure out optimal performance based on system load and probably never have the systems run at anywhere near peak capacity.

Having 96 servers consume only 16kW of power is incredibly impressive though.
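To put the two power numbers side by side, here is a quick Python sketch. It assumes the 16kW figure is the total for one rack of 96 servers, and it says nothing about what else (switches, fans, UPS losses) might be counted in that total. The variable names are mine.

```python
RACK_POWER_WATTS = 16_000   # 16kW, as quoted above
SERVERS_PER_RACK = 96

# Average budget per server if the whole 16kW were split evenly
per_server_budget = RACK_POWER_WATTS / SERVERS_PER_RACK

# Total draw for 96 servers at the stated 40-60 watt target
target_low = 40 * SERVERS_PER_RACK
target_high = 60 * SERVERS_PER_RACK

print(f"Even split of 16kW: {per_server_budget:.0f} W/server")
print(f"96 servers at 40-60 W: {target_low}-{target_high} W total")
```

The even split comes out well above the 40-60 watt target, so the two figures are presumably measuring different things (say, a rack-level budget versus typical per-server draw). That reading is my assumption, not something taken from Microsoft's documents.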

I have to give mad, mad, absolutely insanely mad props to Microsoft. Something I’ve never done before.

Facebook – 180 servers in 7 racks (6 server racks + 1 UPS rack)

Microsoft – 630 servers in 7 racks
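For what it's worth, here is the same comparison as servers per rack, in a minimal Python sketch. It uses only the two counts above and treats all 7 racks in each case as equal, which is a simplification (Facebook's seventh rack holds the UPS, not servers). The helper name is made up for illustration.

```python
def servers_per_rack(servers: int, racks: int) -> float:
    """Average servers per rack across the whole row."""
    return servers / racks


facebook = servers_per_rack(180, 7)    # 6 server racks + 1 UPS rack
microsoft = servers_per_rack(630, 7)

print(f"Facebook:  {facebook:.1f} servers/rack")
print(f"Microsoft: {microsoft:.1f} servers/rack")
print(f"Ratio:     {microsoft / facebook:.1f}x")
```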

Density is critical to any large-scale deployment, but there are limits to how dense you can practically go before the costs are too high to justify it. Microsoft has gone about as far as is achievable given current technology.

Here is another link where Microsoft provides a couple of interesting PDFs; the first one, I believe, is written by the same guy who gave the Microsoft briefing at the conference I was at last year.

(As a side note I have removed Scott from the blog since he doesn’t have time to contribute any more)