I came across this report on Extreme’s site which seems to be from somewhat of an “independent 3rd party”, but I’ve not heard of them so I can’t vouch for them.

I’d like to consider myself at least somewhat up to date on what is out there so when things like this come out I do find it interesting to see what they say.

The thing that stands out to me the most: Arista Networks has only 75 employees ?!? Wow, they’ve been able to do all of that work with only 75 employees? Really? Good job.. that is very surprising to me. Most of the companies I have worked at have had more than 75 employees and they’ve accomplished (in my opinion) a fraction of what Arista seems to have, at least from a technology standpoint (revenue-wise is a different story, assuming again the report is accurate).

The thing that made me scratch my head the most: Cisco allows you to run virtual machines on their top of rack switches? Huh? Sounds like EMC and them wanting you to run VMs on their VMAX controllers? I recall at one point Citrix and Arista teamed up to allow some sort of VM to run Netscaler embedded in the Arista switches, though never heard of anyone using it and never heard Citrix promoting it over their own stuff. Seemed like an interesting concept, though no real advantage to doing it I don’t think (main advantage I can think of is non blocking access to the switch fabric which really isn’t a factor with lower end load balancers since they are CPU bound not network bound).

The report seems to take a hypothetical situation where a fairly large organization is upgrading their global network, and then goes to each of the vendors and asks for a proposal. They leave out what each of the solutions specifically was, which is disappointing.

They said HP was good because it was cheap, which is pretty much what I’ve heard in the field, it seems nobody that is serious runs HP Procurve.

They reported that Juniper and Brocade were the most “affordable” (having Juniper and affordable together makes no sense), and Arista and Force10 being least affordable (which seems backwards too – they are not clear on what they used to judge costs, because I can’t imagine a Force10 solution costing more than a Juniper one).

They placed some value on line cards that offered both copper and fiber at the same time, which again doesn’t make a lot of sense to me since you can get copper modules to plug into SFP/SFP+ slots fairly easily. The ability to “Run VMs on your switch” also seemed iffy at best, they say you can run “WAN optimization” VMs on the switches, which for a report titled “Data center networking” really should be a non issue as far as features go.

The report predicts Brocade will suffer quite a bit since Dell now has Force10, and says Brocade doesn’t have products as competitive as they otherwise could have.

They tout Juniper’s ability to have multiple power supplies, switch fabrics, routing modules as if it was unique to Juniper, which makes no sense to me either. They do call out Juniper for saying their 640-port 10GbE switch is line rate only to 128 ports.

They believe Force10 will be forced into developing lower end solutions to fill out Dell’s portfolio rather than staying competitive on the high end, time will tell.

Avaya? Why bother? They say you should consider them if you’ve previously used Nortel stuff.

They did include the sample scenario that they sent to the vendors and asked for solutions for. I really would have liked to have seen the proposals that came back.

A four-site organization with 7850 employees located at a Canadian head office facility, and three branch offices located in the US, Europe, and Canada. The IT department consists of 100 FTE, and are located primarily at the Canadian head office, with a small proportion of IT staff and systems located at the branch offices.

The organization is looking at completing a data center refurbish/refresh:

The organization has 1000 servers, 50% of which are virtualized (500 physical). The data center currently contains 40 racks with end-of-row switches. None of the switching/routing layers have any redundancy/high availability built in, leaving many potential single points of failure in the network (looking for 30% H/A).

A requirements gathering process has yielded the need for:

- A redundant core network, with capacity for 120 x 10Gbps SFP+ ports

- Redundant top of rack switches, with capacity for 48 x 1Gbps ports in each rack

- 1 ready spare ToR switch and 1 ready spare 10Gbps chassis card

- 8x5xNBD support & maintenance

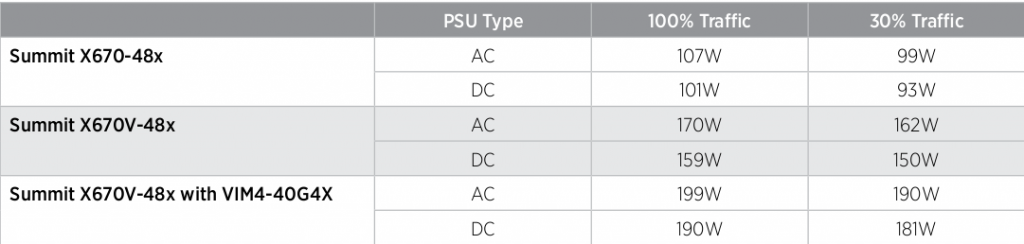

- Nameplate data – power consumption – watts/amps

- 30% of the servers to be highly available

It is unclear how redundant they expect the network to be, would a single chassis with redundant fabrics and power supplies be enough or would you want two? They are also not clear as to what capabilities their ToR switches need other than the implied 10Gbps uplinks.

If I were building this network with Extreme gear I would start out with two pairs of stacked X670Vs at the core (each stack having 96x10GbE). Within each stack the two switches would be connected by 2x40GbE connections with passive copper cabling. The two stacks would be linked together with 4x40GbE connections with passive copper cabling, running (of course) ESRP as the fault tolerance protocol of choice between the two. This would provide 192x10GbE ports between the two stacks, with half being active and half being passive.
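To make the port math above easy to check, here is a minimal sketch that just tallies the figures from that paragraph. The 48 ports per switch is simply the stated 96 divided across the two switches in a stack, and treating "half active, half passive" as one whole stack forwarding while the other stands by is my reading of the ESRP setup, not something taken from vendor documentation.

```python
# Figures come from the paragraph above; nothing here is vendor data.
SWITCHES_PER_STACK = 2   # each core stack is a pair of X670Vs
PORTS_PER_STACK = 96     # 10GbE ports per stack, as stated in the post
STACKS = 2               # two stacks, one on each side of the core
INTRA_STACK_LINKS = 2    # 40GbE links joining the two switches in a stack
INTER_STACK_LINKS = 4    # 40GbE links joining the two stacks
LINK_GBPS = 40


def core_summary() -> dict:
    """Tally core ports and interconnect bandwidth for the two-stack design."""
    total = PORTS_PER_STACK * STACKS
    # Assumption: active/passive means one stack forwards, the other stands by.
    active = total // 2
    return {
        "ports_per_switch": PORTS_PER_STACK // SWITCHES_PER_STACK,
        "total_10gbe_ports": total,
        "active_ports": active,
        "standby_ports": total - active,
        "intra_stack_gbps": INTRA_STACK_LINKS * LINK_GBPS,
        "inter_stack_gbps": INTER_STACK_LINKS * LINK_GBPS,
    }


summary = core_summary()
for name, value in summary.items():
    print(f"{name}: {value}")
```

The only point of writing it down this way is that you can change the constants (say, a different number of inter-stack links) and see what moves.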

Another, simpler approach would be to just stack three of the X670V switches together for 168x10GbE active-active ports. Though you know I’m not a big fan of stacking (any more than I am of running off a single chassis). If I am connecting 1000 servers I want a higher degree of fault tolerance.

Now if you really could not tolerate an active/passive network, if you really needed that extra throughput, then you can use M-LAG to go active-active at layer 2, but I wouldn’t do that myself unless you were really sure you needed that ability. I prefer the reduced complexity with active/passive.

As for the edge switches, they call for redundant 48-port 1GbE switches. Again they are not clear as to their real requirements, but what I would do (what I’ve done in the past) is two stand-alone 48-port 1GbE switches, each with 2x10GbE (Summit X460) or 4x10GbE (Summit X480) connections to the core. These edge switches would NOT be stacked, they would be stand-alone devices. You could go lower cost with Summit X450e, or even Summit X350, though I would not go with the X350 for this sort of data center configuration. Again I assume you’re using these switches in an active-passive way for the most part (as in 1 server is using 1 switch at any given time), though if you needed a single server to utilize both switches then you could go the stacking approach at the edge. It all depends on what your own needs are, which is why I would have liked to have seen more detail in the report. Or you could do M-LAG at the edge as well, but ESRP’s ability to eliminate loops is hindered if you link the edge switches together since there is a path in the network that ESRP cannot deal with directly (see this post with more in depth info on the how, what, and why for ESRP).
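For a rough feel of what the 2x10GbE versus 4x10GbE uplink choice means per edge switch, here is a small sketch that computes the oversubscription ratio (access bandwidth divided by uplink bandwidth). The helper name is made up for illustration, and the "half the uplinks forwarding" case is purely my assumption that each edge switch splits its uplinks evenly between the active and standby core stacks. The report and the design above don't spell that out.

```python
def oversubscription(access_ports: int, access_gbps: float,
                     uplinks: int, uplink_gbps: float) -> float:
    """Ratio of total access bandwidth to total uplink bandwidth on one switch."""
    if uplinks <= 0:
        raise ValueError("need at least one uplink")
    return (access_ports * access_gbps) / (uplinks * uplink_gbps)


# Uplink counts per edge switch as given in the post.
UPLINK_OPTIONS = {"Summit X460": 2, "Summit X480": 4}

ratios = {}
for model, uplinks in UPLINK_OPTIONS.items():
    all_forwarding = oversubscription(48, 1, uplinks, 10)
    # Assumption: half the uplinks land on the standby core stack and sit idle.
    half_forwarding = oversubscription(48, 1, uplinks // 2, 10)
    ratios[model] = (all_forwarding, half_forwarding)
    print(f"{model}: {all_forwarding:.1f}:1 with all uplinks forwarding, "
          f"{half_forwarding:.1f}:1 with half forwarding")
```

Whether any of these ratios matter depends entirely on the traffic the servers actually push, which is one more detail the report doesn't give.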

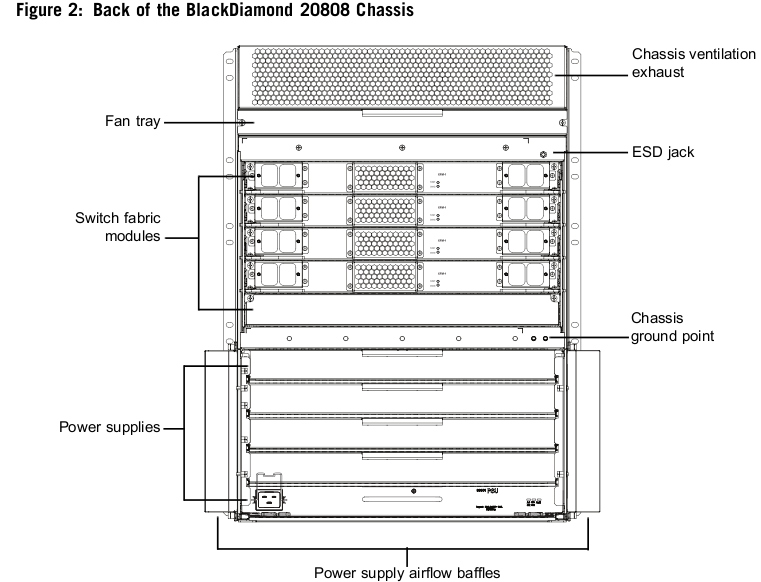

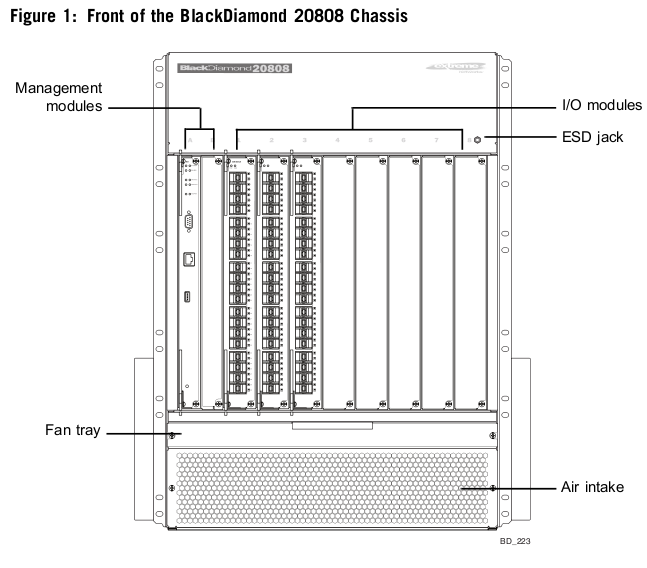

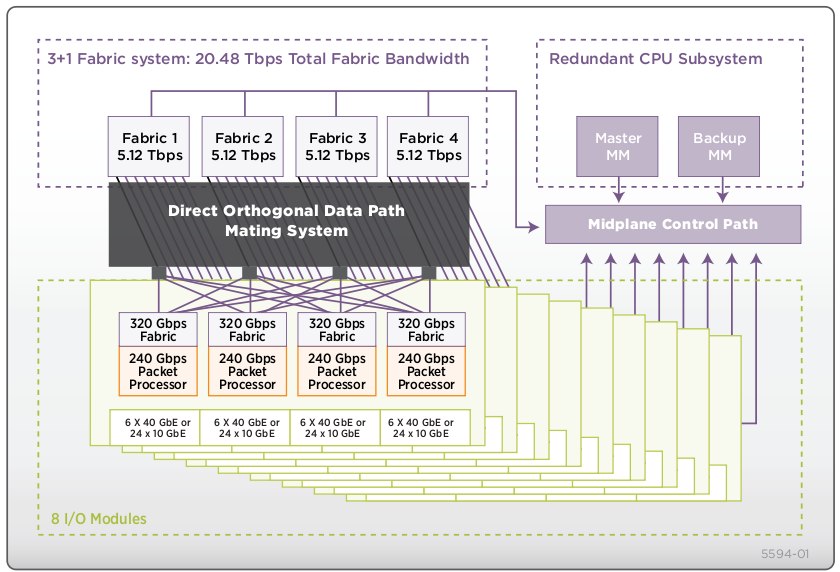

I would NOT go with a Black Diamond solution (or any chassis-based approach) unless cost was really not an issue at all. Despite this example organization having 1,000 servers, it’s still a small network they propose building, and the above approach would scale seamlessly to, say, 4 times that number non-disruptively, providing sub-second layer 2 and layer 3 fail over. It is also seamlessly upgradeable to a chassis approach with zero downtime (well, sub-second), should the organization’s needs grow beyond 4,000 hosts. The number of organizations in the world that have more than 4,000 hosts I think is pretty small in the grand scheme of things. If I had to take a stab at a guess I would say easily less than 10%, maybe less than 5%.

So in all, an interesting report. It was not very consistent in its analysis and lacked some detail that would have been nice to see, but it was still interesting to see someone else’s thought patterns.