Dell’s Force10 unit must be feeling the heat from the competition. I came across this report which the industry body Tolly did on behalf of Dell/Force10.

Normally I think Tolly reports are halfway decent although they are usually heavily biased towards the sponsor (not surprisingly). This one though felt light on details. It felt like they rushed this to market.

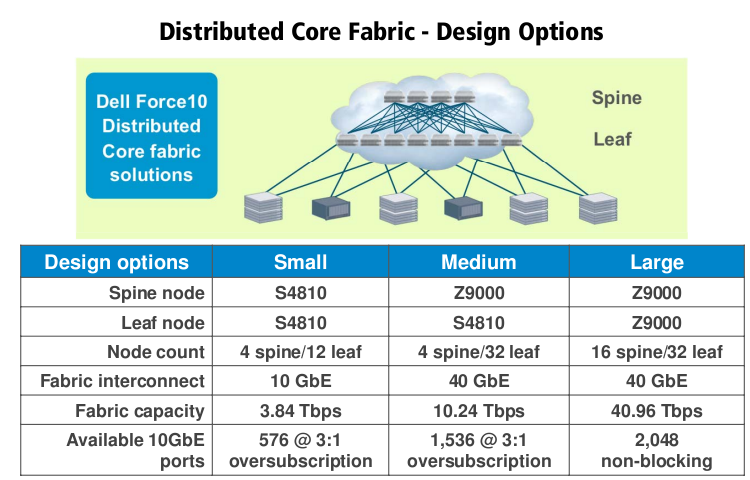

Basically what Force10 is talking about is a distributed core architecture with their 32-port 40GbE Z9000 switches as what they call the spine (though sometimes they are used as the leaf), and their 48-port 10GbE S4810 switches as what they call the leaf (though sometimes they are used as the spine).

They present 3 design options:

I find three things interesting about these options they propose:

- The minimum node count for spine is 4 nodes

- They don’t propose an entirely non-blocking fabric until you get to “large”

- The “large” design is composed entirely of Z9000s, yet they keep the same spine/leaf configuration. What’s keeping them from being entirely spine?
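To make the all-Z9000 question a bit more concrete, here is a rough sketch of the port arithmetic for a non-blocking leaf/spine fabric. The assumptions are mine, not the report's: every switch is a 32-port 40GbE Z9000, each leaf dedicates half its ports to uplinks and half to hosts (1:1, so non-blocking), each host-facing 40GbE port is broken out into four 10GbE ports, and the spine count is the 4-node minimum. The function name is made up for illustration.

```python
def non_blocking_fabric(spines, ports_per_switch=32, breakout=4):
    """Port math for a 1:1 leaf/spine fabric built from identical switches.

    Assumes (not from the report) that each leaf splits its ports evenly
    between uplinks and host-facing ports, and that every spine port is
    used for exactly one leaf uplink.
    """
    uplinks_per_leaf = ports_per_switch // 2
    downlinks_per_leaf = ports_per_switch - uplinks_per_leaf
    # Total spine ports divided by uplinks per leaf gives the leaf count.
    leaves = (spines * ports_per_switch) // uplinks_per_leaf
    return {
        "spines": spines,
        "leaves": leaves,
        "switches": spines + leaves,
        "host_ports_40g": leaves * downlinks_per_leaf,
        "host_ports_10g": leaves * downlinks_per_leaf * breakout,
    }


fabric = non_blocking_fabric(spines=4)
print(fabric)
```

Under those assumptions, 4 spines support 8 leaves, for 12 switches in total and 512 host-facing 10GbE ports. Only half of each leaf's ports face hosts, which is the price of keeping the spine/leaf split.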

The distributed design is very interesting, though it would be a conceptual hurdle I’d have a hard time getting over if I were in the market for this sort of setup. It’s nothing against Force10 specifically; I just feel safer with a less complex design (I mentioned before I’m not a fan of stacking for this same reason), with fewer things talking to each other in such a tightly integrated fashion.

That aside, I have a couple of other issues with the report. While they do provide the configuration of the switches (that IOS-like interface makes me want to stab my eyes with an ice pick), I’m by no means familiar with Force10 configuration, and they don’t talk about how the devices are managed. Are the spine switches all stacked together? Are the spine and leaf switches stacked together? Are they using something along the lines of Brocade’s VCS technology? Are the devices managed independently and they are relying on other protocols like MLAG? The web site mentions using TRILL at layer 2, which would be similar to Brocade.

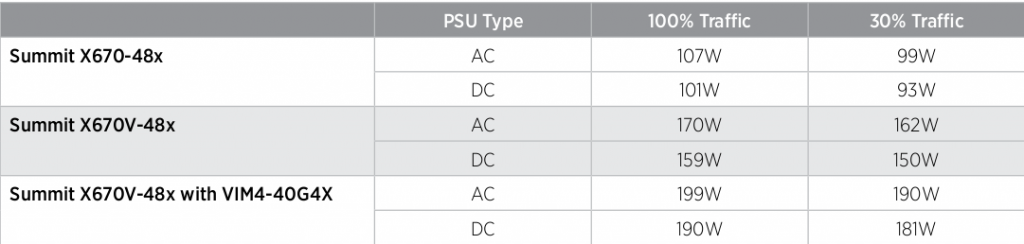

The other issue I have with the report is the lack of power information. Specifically, I would be interested (slightly; in the grand scheme of things I really don’t think this matters all that much) in the power per usable port (ports that aren’t being used for uplinks or cross connects). They do rightly point out that power usage can vary depending on the workload, so it would be nice to get power usage based on the same workload. Though conversely it may not matter as much: the specs for the Extreme X670V (48x10GbE + 4x40GbE) say there is only 8 watts of difference between 30% traffic load and 100% traffic load for that particular switch, which seems like a trivial amount.

As far as I know the Force10 S4810 switch uses the same Broadcom chipset as the X670V.

On their web site they have a nifty little calculator where you input your switch fabric capacity and it spits out power/space/unit numbers. The numbers there don’t sound as impressive:

- 10Tbps fabric = 9.6kW / 12 systems / 24RU

- 15Tbps fabric = 14.4kW / 18 systems / 36RU

- 20Tbps fabric = 19.2kW / 24 systems / 48RU
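A quick way to see why these numbers are underwhelming is to normalize them per system and per Tbps. This is just arithmetic on the three calculator results listed above; the helper name and the dictionary keys are my own.

```python
# Figures copied from the calculator output listed above:
# (fabric Tbps, power in kW, number of systems, rack units)
calculator = [
    (10, 9.6, 12, 24),
    (15, 14.4, 18, 36),
    (20, 19.2, 24, 48),
]


def per_unit(tbps, kw, systems, ru):
    """Normalize one calculator result per system and per Tbps."""
    return {
        "watts_per_system": round(kw * 1000 / systems),
        "ru_per_system": ru / systems,
        "kw_per_tbps": round(kw / tbps, 2),
    }


rows = [per_unit(*row) for row in calculator]
for row_in, row_out in zip(calculator, rows):
    print(f"{row_in[0]} Tbps: {row_out}")
```

Every tier works out to about 800 W and 2RU per system, and 0.96 kW per Tbps, so the calculator scales linearly with no efficiency gain at the larger sizes.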

The Black Diamond X-Series, which I have mentioned many times, comes in at somewhere around 4kW (well, if you want to be really conservative you could say 6.1kW assuming 8.1W/port, a figure that was likely high in their report considering the system configuration) and needs only a single system to get up to 20Tbps of fabric (you could perhaps technically say it has 15Tbps of fabric since the last 5Tbps is there for redundancy; 192 x 80Gbps ≈ 15Tbps). It takes 14.5RU worth of rack space too.
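Putting the two approaches side by side at the 20Tbps mark, using only the numbers quoted in this post (the chassis power is a range, from roughly 4kW up to the conservative 6.1kW), a small sketch of the ratios looks like this. The variable and function names are mine.

```python
# Numbers quoted in this post for a 20Tbps fabric.
distributed = {"kw": 19.2, "ru": 48, "systems": 24}
chassis_low = {"kw": 4.0, "ru": 14.5, "systems": 1}   # "around 4kW" estimate
chassis_high = {"kw": 6.1, "ru": 14.5, "systems": 1}  # conservative estimate


def ratio(a, b, key):
    """How many times larger a[key] is than b[key], to one decimal place."""
    return round(a[key] / b[key], 1)


power_vs_low = ratio(distributed, chassis_low, "kw")
power_vs_high = ratio(distributed, chassis_high, "kw")
space = ratio(distributed, chassis_low, "ru")

# Sanity check on the chassis fabric math: 192 ports at 80Gbps each.
usable_tbps = 192 * 80 / 1000

print(power_vs_low, power_vs_high, space, usable_tbps)
```

On these figures the distributed design draws roughly 3.1 to 4.8 times the power and takes about 3.3 times the rack space, spread across 24 devices instead of one. The port check comes out at 15.36Tbps, which is where the "15Tbps" figure comes from.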

Dell claims non-blocking scalability up to 160Tbps, which is certainly a lot! Though I’m not sure what it would take for me to make the leap into a distributed system such as TRILL. Given TRILL is a layer 2 only protocol (which I complained about a while ago), I wonder how they handle layer 3 traffic: is it distributed in a similar manner? What is the performance at layer 3? Honestly I haven’t read much on TRILL at this point (mainly because it hasn’t really interested me yet), but one thing that is not clear to me (maybe someone can clarify) is whether TRILL is just a traffic management protocol, whether it also includes more transparent system management (e.g. managing multiple devices as one), or whether that system management part requires more secret sauce from the manufacturer.

My own, biased (of course), thoughts on this architecture, while innovative:

- Uses a lot of power / consumes a lot of space

- Lots of devices to manage

- Lots of connections – complicated physical network

- Worries over resiliency of TRILL (or any tightly integrated distributed design – getting this stuff right is not easy)

- On paper at least seems to be very scalable

- The Z9000 32-port 40GbE switch certainly seems to be a nice product from a pure hardware/throughput/formfactor perspective. I just came across Arista’s new 1U 40GbE switch and I think I’d prefer the Force10 design with twice the size and twice the ports purely for more line rate ports in the unit.

It would be interesting to read a bit more in depth about this architecture.

I wonder if this distributed design is going to be Force10’s approach going forward, or if they are going to continue to offer more traditional chassis products for customers who prefer that type of setup. In theory it should be pretty easy to do both.

I think the report is saying that this architecture is more efficient in space and power. If a fully loaded single (2RU Z9000) switch consumes 407 Watts, then 12 switches in this scheme, which give you 512 non-blocking 10Gb ports, would only take 24 RUs and consume a total of 4884 Watts. If you can get the same number of ports in a 24RU chassis (does anyone have that?) it’s going to take anywhere above 10,000W. With a good management tool it can be a compelling offering.

I did a bit of research on this and found that many research organizations have been looking at a similar architecture. http://cseweb.ucsd.edu/~vahdat/papers/hoti09.pdf

If you need to build a large datacenter this is probably the best way to go. Days of large chassis are numbered.

–Jay

Comment by Jay — December 2, 2011 @ 1:08 am

I think the efficiency claim is what the report is trying to make, but the real results (power/space requirements) from Dell’s website tell the opposite story.

Chassis days may be numbered, I don’t know. The same could be said of large storage arrays, but those are doing fine as well (VMware has done wonders to bring big arrays back to the forefront of storage). At a small scale this leaf/spine architecture makes some sense, but as the thing grows it just becomes unwieldy in its complexity, and power inefficient.

We’ll see what happens though, at the moment as far as I know only Force10 and Arista are proposing this kind of approach.

thanks for the comment!

Comment by Nate — December 2, 2011 @ 10:52 am

[…] Opt for a Spine Fabric type of network architecture […]

Pingback by OCTO talks ! » Hadoop dans ma DSI : comment dimensionner un cluster ? — October 30, 2012 @ 4:43 am

[…] a Spine Fabric network […]

Pingback by OCTO talks ! » Hadoop in my IT department: How to plan a cluster? — November 26, 2012 @ 4:13 am