Read an interesting article over on The Register with a lot of comments by a Red Hat executive.

And I can’t help but disagree with a bunch of what the executive says. It could be because the executive is looking at and talking with big, bloated, slow-moving organizations that have a lot of incompetent people in their ranks (the “Never got fired for buying X” mantra), instead of smaller, more nimble, leading-edge organizations that are willing, ready and able to take some additional “risk” for a much bigger return. Running virtualized production systems is one such risk: it seems like a common concept to many, but I know there are a bunch of people out there who aren’t convinced it will work. By the way, I ran my first VMware in production in 2004, and saved my company BIG BUCKS with the customer (that’s a long story, and an even longer weekend).

OK so this executive says

After all, processor and storage capacity keep tracking along on their respective Moore’s and Kryder’s Laws, doubling every 18 months, and Gilder’s Law says that networking capacity should double every six months. Those efficiencies should lead to comparable economies. But they’re not.

I was just thinking this morning about the price and capacity of the latest systems (sorry, I keep going back to the BL685c G7 with 48 cores and 512GB of RAM 🙂 ).

I remember back in the 2004/2005 time frame the company I was at paid well over $100,000 for an 8-way Itanium system with 128GB of memory to run Oracle databases. The systems of today, whether it is the aforementioned blade or countless others, can run circles around such hardware at a tiny fraction of the price. It wasn’t unreasonable just a few short years ago to pay more than $1M for a system that had 512GB of memory and 24-48 CPUs, and now you can get it for less than $50,000 (in this case using HP web pricing). That big $1M system probably consumed at least 5-10kW of power and a full rack as well, whereas now the same capacity can go for ~800W (at 100% load, off the top of my head) and you can get at least 32 of them in a rack (barring power/cooling constraints).
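To put rough numbers on that comparison, here is a small Python sketch that only does the arithmetic on the figures quoted above. Nothing in it is measured: the inputs are the ballpark prices and power draws from the paragraph, and the constant and function names are made up for illustration.

```python
# Ballpark figures taken from the paragraph above, not measurements.
OLD_PRICE_USD = 1_000_000       # "more than $1M" for the big system
NEW_PRICE_USD = 50_000          # "less than $50,000" for the blade
MEMORY_GB = 512                 # same memory capacity in both cases
OLD_POWER_W = (5_000, 10_000)   # "at least 5-10kW", kept as a range
NEW_POWER_W = 800               # "~800W" at 100% load
BLADES_PER_RACK = 32            # "at least 32 of them in a rack"


def price_per_gb(price_usd: float, memory_gb: int) -> float:
    """Dollars paid per gigabyte of memory in the box."""
    return price_usd / memory_gb


def compare() -> dict:
    """Return the headline ratios between the old and new systems."""
    low_w, high_w = OLD_POWER_W
    return {
        "price_ratio": OLD_PRICE_USD / NEW_PRICE_USD,
        "old_usd_per_gb": price_per_gb(OLD_PRICE_USD, MEMORY_GB),
        "new_usd_per_gb": price_per_gb(NEW_PRICE_USD, MEMORY_GB),
        "power_ratio_low": low_w / NEW_POWER_W,
        "power_ratio_high": high_w / NEW_POWER_W,
        "rack_memory_gb": BLADES_PER_RACK * MEMORY_GB,
        "rack_power_w": BLADES_PER_RACK * NEW_POWER_W,
    }


if __name__ == "__main__":
    for name, value in compare().items():
        print(f"{name}: {value:,.2f}")
```

With those inputs the price drops by a factor of 20 and the power draw by somewhere between 6.25x and 12.5x, and a full rack of 32 blades would hold 16,384GB of memory at about 25.6kW, which is exactly where the power/cooling caveat comes in.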

Granted that big $1M system was far more redundant and available than the small blade or rack mount server, but at the time if you wanted so many CPU cores and memory in a single system you really had no choice but to go big, really big. And if I was paying $1M for a system I’d want it to be highly redundant anyways!

With networking, well, 10GbE has gotten to be dirt cheap. Just think back a few years: if you wanted a switch with 48 x 10GbE ports you’d be looking at, I’d say, $300k+ and it’d take the better part of a rack. Now you can get such switches in a 1U form factor from some vendors (2U from others) for, what, sub-$40k?

With storage, well, spinning rust hasn’t evolved all that much over the past decade for performance, unfortunately, but technologies like distributed RAID have managed to extract an enormous amount of untapped capacity out of the spindles that older architectures are simply unable to exploit. More recently, with the introduction of SSDs and the sub-LUN automagic storage tiering technology that is emerging (I think it’s still a few years away from being really useful), you can really get a lot more bang out of your system. EMC’s FAST Cache looks very cool too, from a conceptual perspective at least. I’ve never used it and don’t know anyone who has, but I do wish 3PAR had it! Assuming I understand the technology right, the key is that the SSDs are used for both read and write caching, versus something like the NetApp PAM card, which is only a read cache. Neither FAST Cache nor PAM is enough to make me want to use those platforms for my own stuff.

The exec goes on to say

Simply put, Whitehurst’s answer to his own question is that IT vendors suck, and that the old model of delivering products to customers is fundamentally broken.

I would tend to agree for the most part, but there are those out there that really are awesome. I was lucky enough to find one such vendor, and a few such manufacturers. As one vendor I deal with says, they work with the customer, not with the manufacturer; they work to give the customer what is best for them. So many vendors I have dealt with over the years are really lazy when it comes down to it. They only know a few select solutions from a few big-name organizations and give blank stares if you go outside their realm of comfort (random thought: I got the image of Speed Bump, the roadkill possum from a really old TV series called Liquid Television that I watched on MTV for a brief time in the 90s).

By the same token, while most IT vendors suck, most IT managers suck too, for the same reason. Probably because most people suck; that may be what it comes down to at the end of the day. IT, as you well know, is still an emerging industry, still a baby really. It is evolving very quickly but has a ways to go. So like with anything, the people out there that can best leverage IT are few and far between. Most of the rest are clueless. For example, my first CEO, about 10-11 years ago, was convinced he could replace me with a tech head from Fry’s Electronics (despite my 3 managers telling him he could not). About a year after I left the company he did in fact hire such a person. The only problem was that the individual never showed up for work (maybe he forgot).

The exec goes on to say

“Functionality should be exploding and costs should be plummeting — and being a CIO, you should be a rock star and out on the golf course by 3 pm,” quipped Whitehurst to his Interop audience.

That is in fact what is happening, provided you’re choosing the right solutions and have the right people to manage them. The possibilities are there; it’s just that most people don’t realize it or don’t have the capacity to evolve into what could be called the next generation of IT. They have been doing the same thing for so long that it’s hard to change.

Speaking of being a rock star and out on the golf course by 3pm, I recall two things I’ve heard in the past year or so:

The first one used the golf course analogy, and came from a local VMware consulting shop that has a bunch of smart folks working for them. I thought this was a really funny strategy and can see it working quite well in many cases. The person took an industry average of, say, 2-3 days to provision a new physical system, and said that in the virtual world you shouldn’t tell your customers that you can provision that new system in ten minutes. Tell them it will take you 2-3 days, spend the ten minutes doing what you need, and spend the rest of the time on the golf course.

The second one was from a 3PAR user, I believe, who told one of their internal customers/co-workers something along the lines of “You know how I tell you it takes me a day to provision your 10TB of storage? Well, I lied, it only takes me about a minute.”

For me, I’m really too honest I think. I tell people how long I think it will really take, and at least on big projects I am often too optimistic on timelines. Maybe I should take up Scotty’s strategy and multiply my timelines by four to look like a miracle worker when it gets done early. It might help to work with a project manager as well; I haven’t had one for any IT projects in more than five years now. They know how to manage time (if you have a good one, especially one experienced with IT, not just a generic PM).

Lastly the exec says

The key to unlocking the value of clouds is open standards for cloud interoperability, says Whitehurst, as well as standardization up and down the stack to simplify how applications are deployed. Red Hat’s research calculates that about two-thirds of a programmer’s time is spent worrying about how the program will be deployed rather than on the actual coding of the program.

Worrying about how the program will be deployed is a good thing, an absolutely good thing. Rewinding again to 2004, I remember a company meeting where one of the heads of the company stood up and said something along the lines of “2004 was the year of operations, we worked hard to improve how the product operates, and the next phase is going back to feature work for customers.” I couldn’t believe my ears. That year was the worst for operations, filled with half-implemented software solutions that actually made things worse instead of better. Outages increased, stress increased, turnover increased.

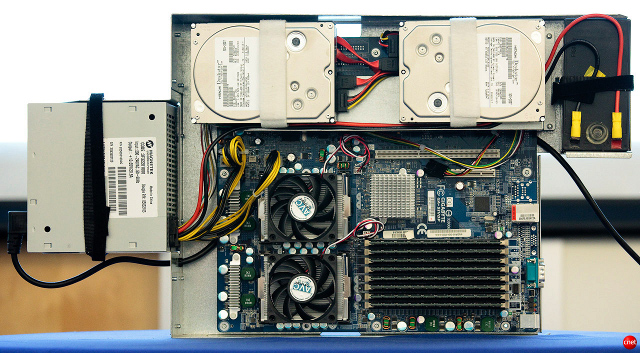

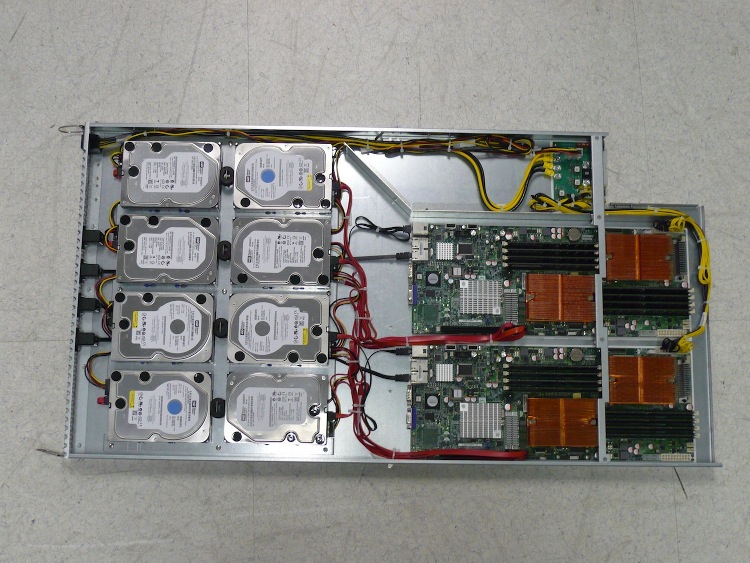

The only thing I could do from an operations perspective was buy a crap load of hardware and partition the application to make it easier to manage. We ended up with tons of excess capacity, and the development teams were obviously unable to make the design changes we needed to improve the operations of the application, but we at least had something that was more manageable. The deployment and troubleshooting teams were so happy when the new stuff was put into production. No longer did they have to parse gigabyte-sized log files trying to find which errors belonged to which transactions from which subsystem. Traffic for different subsystems was routed to different physical systems, so if there was an issue with one type of process you knew to go to server farm X to look at it. Problem resolution was significantly faster.
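The partitioning idea is simple enough to sketch in a few lines of Python. This is only an illustration of the concept, not what we actually ran: the subsystem names, host names and the modulo spread are all hypothetical. The point is that each subsystem maps to its own farm, so a problem with one type of process tells you which hosts (and which logs) to look at.

```python
# Hypothetical subsystem and host names, purely for illustration.
FARM_BY_SUBSYSTEM = {
    "checkout": ["farm-a-01", "farm-a-02"],
    "reporting": ["farm-b-01"],
    "notifications": ["farm-c-01", "farm-c-02"],
}


def hosts_for(subsystem: str) -> list[str]:
    """Return the only hosts that serve (and log) the given subsystem."""
    try:
        return FARM_BY_SUBSYSTEM[subsystem]
    except KeyError:
        raise ValueError(f"unknown subsystem: {subsystem!r}") from None


def route(subsystem: str, request_id: int) -> str:
    """Pick a host inside the subsystem's own farm (simple modulo spread)."""
    hosts = hosts_for(subsystem)
    return hosts[request_id % len(hosts)]


if __name__ == "__main__":
    # Troubleshooting a reporting error means looking at one farm only.
    print(hosts_for("reporting"))
    print(route("checkout", 3))
```

The trade-off is the one described above: each farm carries its own headroom, so you end up with excess capacity in exchange for much smaller, subsystem-specific logs.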

I remember having one conversation with a software architect in early 2005 about a particular subsystem that was very poorly implemented (or maybe even designed). It caused us massive headaches in operations, non-stop problems really. His response was, “Well, I invited you to an architecture meeting in January of 2004 to talk about this but you never showed up.” I don’t remember the invite, but if I saw it I know why I didn’t show up: I was buried in production outages 24/7 and had no time to think more than 24 hours ahead, let alone think about a software feature that was months away from deployment. I just didn’t have the capacity; I was running on fumes for more than a year.

So yes, if you are a developer please do worry about how it is deployed, never stop worrying. Consult your operations team (assuming they are worth anything), and hopefully you can get a solid solution out the door. If you have a good experienced operations team then it’s very likely they know a lot more about running production than you do and can provide some good insight into what would provide the best performance and uptime from an operations perspective. They may be simple changes, or not.

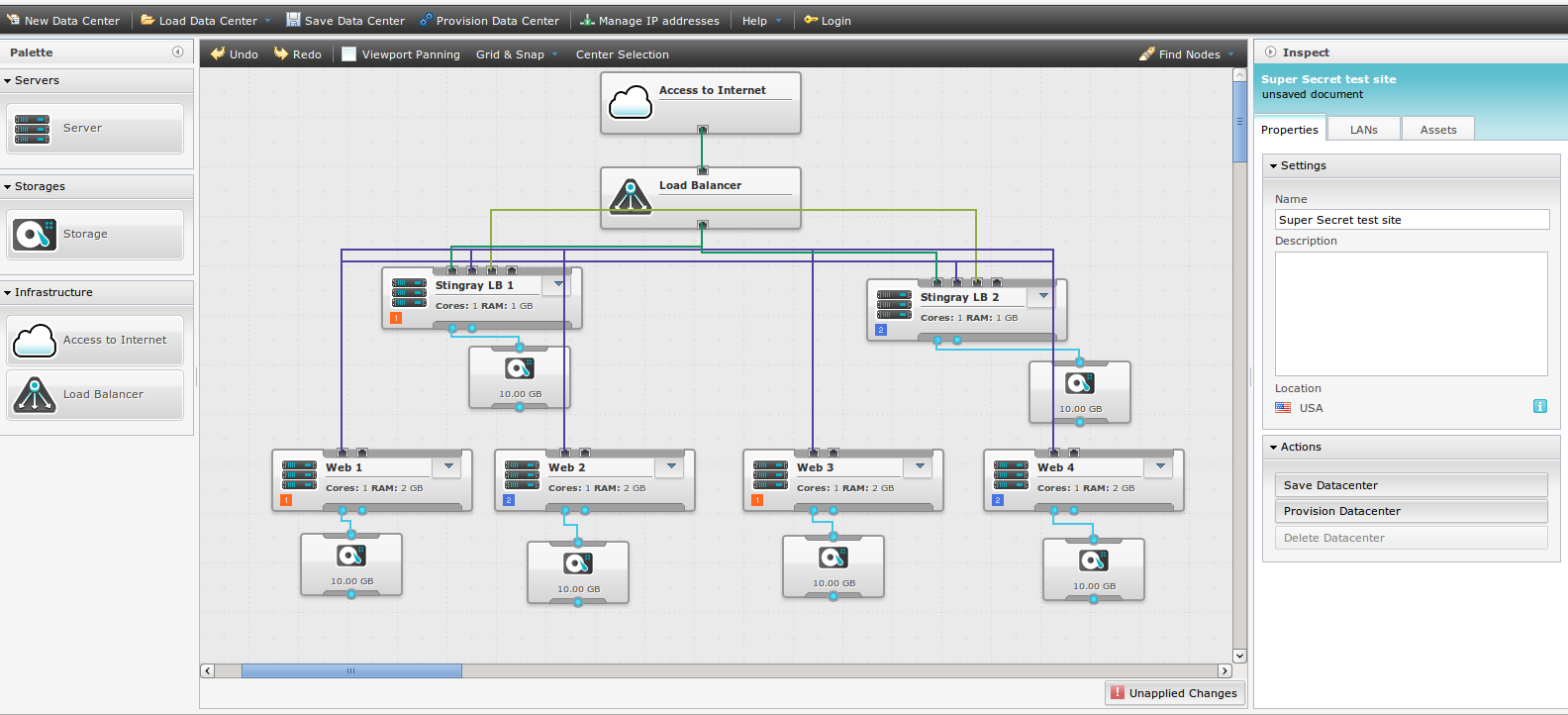

One such example: I was working at a now-defunct company that had a hard-on for Ruby on Rails. They were developing app after app on this shiny new platform. They were seemingly trying to follow Service-Oriented Architecture (SOA), something I learned about, ironically, at a Red Hat conference a few years ago (I didn’t know there was an acronym for that sort of thing, it seemed so obvious). I had a couple of really simple suggestions for them to take into account for how we would deploy these new apps. Their original intentions called for basically everything running under a single Apache instance (across multiple systems), so that, for example, if Service A wanted to talk to Service B it would talk to that service on the same server. My suggestions, which we went with, involved two simple concepts:

- Each application had its own Apache instance, listening on its own port

- Each application lived behind a load balancer virtual IP with associated health checking, with all application-to-application communication flowing through the load balancer
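Those two concepts can be sketched as a toy model in Python. To be clear, this is not the configuration we ran and it is not any real load balancer’s API: `Backend`, `VirtualIP`, the host names, the port number and the round-robin choice are all assumptions made for the example. It just shows the shape of the idea, where a caller only knows the virtual IP and the health check decides which instances are eligible.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Backend:
    host: str
    port: int  # each application's Apache instance listens on its own port


@dataclass
class VirtualIP:
    """Toy stand-in for a load balancer virtual IP with health checking."""
    name: str
    backends: list[Backend]
    health_check: Callable[[Backend], bool]
    _next: int = field(default=0, repr=False)

    def pick(self) -> Backend:
        # Only backends that pass the health check are eligible.
        healthy = [b for b in self.backends if self.health_check(b)]
        if not healthy:
            raise RuntimeError(f"no healthy backends behind {self.name}")
        # Plain round-robin over whatever is currently healthy.
        backend = healthy[self._next % len(healthy)]
        self._next += 1
        return backend


if __name__ == "__main__":
    down = {"web2"}  # pretend the health check on web2 is failing
    service_b = VirtualIP(
        name="service-b",
        backends=[Backend("web1", 8002), Backend("web2", 8002), Backend("web3", 8002)],
        health_check=lambda b: b.host not in down,
    )
    # Service A never talks to "Service B on the same server"; it asks the VIP.
    for _ in range(4):
        target = service_b.pick()
        print(f"service-a -> {target.host}:{target.port}")
```

Because Service A goes through the virtual IP instead of assuming Service B lives on the same box, a broken instance of one app can be pulled out of rotation without touching anything else on that server.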

Towards the end we had upwards of I’d say 15 of these apps running on a small collection of servers.

The benefits are pretty obvious, but the developers weren’t versed in operations, which is totally fine; they don’t need to be (though it can be great when they are, and I’ve worked with a few such people, though they are VERY RARE). That’s what operations people do, and you should involve them in your development process.

As for cloud standards, folks are busy building those as we speak and type. VMware seems to be the furthest along from an infrastructure cloud perspective, I believe. I wouldn’t expect them to lose their leadership position anytime soon; they have an enormous amount of momentum behind them, and it takes a lot to counter that momentum.

About a year ago I was talking to some former co-workers who told me another funny story. They were launching a new version of software to production, and the software had been crashing their test environments daily for about a month. They had a go/no-go meeting in which everyone involved with the product said NO GO. But management overrode them, and they deployed it anyways. The result? A roughly 14-hour production outage while they tried to roll the software back. I laughed and said, “Things really haven’t changed since I left, have they?”

So the solutions are there; the software companies and hardware companies have been evolving their stuff for years. The problem is that the concepts can become fairly complex when talking about things like capacity utilization and stranded resources. Getting the right people in place, people able to not only find such solutions but deploy and manage them as well, can really go a long way, but those people are rare at this point.

I haven’t been writing too much recently as I’ve been really busy. Scott looks to be doing a good job so far, though.