For the past couple of years the folks behind the Datacenter Dynamics conferences have been hounding me to fork over the $500 fee to attend their conference. I’ve looked at it, and it really didn’t seem aimed at people like me; it’s aimed more at people who build, design, or manage data centers. I mostly use co-location space. While data center design (at least the leading-edge technology) is somewhat interesting to me, it’s not something I work with.

So a couple of days ago, when a friend of mine offered to get me in for free, I took him up on it. It was a chance to get away from the office, and the conference is about a mile from my apartment.

The keynote, given by a Distinguished Engineer from Microsoft, was somewhat interesting. More than anything, a few of the things he said were worth noting. I’ll skip the obvious stuff; he had a couple of less obvious things to say:

Let OEMs innovate

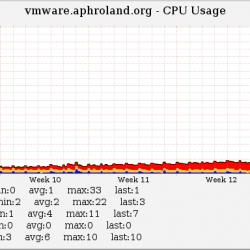

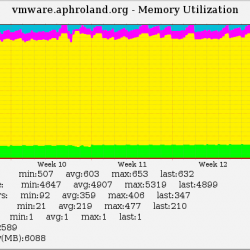

One thing he said MS does is keep a dedicated team of people who instrument subsets of their applications and servers and gather performance data. (If you know me, you probably know I collect a lot of stats myself, on everything from apps, to the OS, to the network, load balancers, power strips, storage, etc.)

They take this data, build usage profiles for their applications, and come up with server designs that are as generic as possible while still being specific in certain areas:

- X amount of CPU capacity

- X amount of memory

- X amount of disk

- X amount of I/O

- Power envelope on a per-rack and per-container basis

- Temperature and humidity levels the systems will operate in
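As a rough illustration of turning collected stats into a profile like the one above, here is a minimal Python sketch. The speaker didn’t say how MS derives its numbers, so this assumes, hypothetically, that each dimension is sized at a high percentile (95th by default) of observed load times a headroom factor; `UsageProfile`, `build_profile` and the field names are made up for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class UsageProfile:
    """Per-server sizing targets; the field names are illustrative only."""
    cpu_cores: float
    memory_gb: float
    disk_gb: float
    iops: float


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def build_profile(samples, pct=95, headroom=1.2):
    """Size each dimension at the pct-th percentile of observed load times a headroom factor.

    samples maps each UsageProfile field name to a list of observed values.
    """
    sized = {dim: percentile(values, pct) * headroom for dim, values in samples.items()}
    return UsageProfile(**sized)
```

Sizing at a percentile rather than the absolute maximum deliberately ignores rare spikes; whether that is acceptable depends on the application.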

He made the point that if you get too specific, you tie the hands of the OEMs and they can’t get creative. He mentioned that on a few occasions they had sent out RFPs and gotten back very different designs from different OEMs. He said they use three different manufacturers (two I know of are SGI/Rackable and Dell; I don’t know the third). They apparently aren’t big enough to deal with more OEMs (a strange statement, I thought), so they only work with a few.

Now, I think for most organizations this really isn’t possible, as getting this sort of precise information isn’t easy, especially at the application level.

They seem to aim for operating the servers at temperatures up to the mid-90s °F, which is pretty extreme but these days not too uncommon among the hyperscale companies. I recently saw the resume of an MS operations guy who claimed he used monitoring software to watch over 800,000 servers.

They emphasized purpose-built servers: eliminate components that aren’t needed to reduce cost and power usage, and use low-power processors regardless of application. The emphasis was on performance/watt/TCO$, I think is how he put it.

Stranded Power

He also emphasized eliminating stranded power: use every watt that is available to you, because stranded power is very expensive. To achieve this they leverage power capping in the servers to get more servers per rack; because they know their usage profile, they cap their servers at a certain level. I know HP has this technology, and I assume others do too, though I haven’t looked. One thing that confused me when quizzing the HP folks about it was that the capping was at the server or chassis (in the case of blades) level. To me that doesn’t seem very efficient; I would expect it to be at least at the rack/circuit level. If the intelligence is already in the servers, you should be able to aggregate it outside the individual servers so they operate more as a collective and gain even more efficiency. You could potentially extend the concept to the container level as well.
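To make the rack-level idea concrete, here is a hypothetical sketch of a coordinator that owns one rack or circuit budget and hands out per-server caps. It is not how HP’s capping works (theirs is per server or chassis); the max-min fair “water-filling” policy and all wattages are assumptions for illustration.

```python
def servers_per_rack(rack_budget_w, per_server_w):
    """How many servers fit under a rack/circuit budget at a given per-server allowance."""
    return int(rack_budget_w // per_server_w)


def allocate_caps(rack_budget_w, demands_w):
    """Max-min fair split of one rack budget across servers (water-filling).

    Servers asking for less than an equal share get exactly what they ask for;
    whatever they leave unused is shared among the remaining, hungrier servers.
    """
    caps = [0.0] * len(demands_w)
    remaining = float(rack_budget_w)
    # Visit servers from the smallest demand to the largest.
    pending = sorted(range(len(demands_w)), key=lambda i: demands_w[i])
    while pending:
        share = remaining / len(pending)
        smallest = pending[0]
        if demands_w[smallest] <= share:
            caps[smallest] = float(demands_w[smallest])
            remaining -= demands_w[smallest]
            pending.pop(0)
        else:
            # Everyone left wants more than an equal share: cap them all at it.
            for i in pending:
                caps[i] = share
            break
    return caps
```

With a hypothetical 8,000 W rack budget, sizing by a 500 W nameplate gives `servers_per_rack(8000, 500) == 16`, while capping at a profiled 320 W gives 25. `allocate_caps(1000, [100, 300, 800])` returns `[100.0, 300.0, 600.0]`: the two lightly loaded servers get what they need and the busy one gets the rest.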

Idle servers still draw significant power

In my own experience measuring power at the PDU/CDU level over the past six years, I have seen typical power usage fluctuate at most 10-15% from idle to peak (application peak, not really server peak). The MS speaker said that even with the most advanced “idle” states the latest-generation CPUs offer, overall power usage only drops to about 50% of peak. So there still seems to be significant room for improving power efficiency when a system is idle. Perhaps that is why Google is funding some project to this end.
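One common simplification, assumed here rather than taken from the talk, is that power scales linearly between idle and peak. Plugging in the speaker’s figure of idle at about 50% of peak shows why that idle floor matters; the function names and the 400 W example are hypothetical.

```python
def power_at(util, peak_w, idle_fraction=0.5):
    """Linear power model: idle draw plus a share of the dynamic range proportional to utilization."""
    if not 0.0 <= util <= 1.0:
        raise ValueError("util must be between 0 and 1")
    idle_w = peak_w * idle_fraction
    return idle_w + (peak_w - idle_w) * util


def overhead_vs_proportional(util, idle_fraction=0.5):
    """Ratio of modeled draw to a perfectly energy-proportional server at the same utilization."""
    if util <= 0.0:
        raise ValueError("util must be positive")
    return power_at(util, 1.0, idle_fraction) / util
```

For a hypothetical 400 W server, `power_at(0.0, 400)` is 200 W and `power_at(0.5, 400)` is 300 W. At 10% utilization, `overhead_vs_proportional(0.1)` is about 5.5, i.e. the server draws roughly five and a half times what a perfectly energy-proportional machine would.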

MS’s Generation 4 data centers

I’m sure others have read about them, but you know me: I really don’t pay much attention to what MS does, and haven’t in a decade or more, so this was news to me.

He covered the evolution of their data center design, topping out at what he called the Gen3 data centers in Chicago and Ireland, which are container based.

Their generation 4 data centers are also container based, but from an infrastructure perspective they appear to be significantly more advanced than current data center containers. If you have Silverlight, you can watch a video on it; it’s the same video shown at the conference.

I won’t go into great detail, since I’m sure you can find it online, but the basic idea is that it is designed to operate in almost any environment using just outside air for cooling. If it gets too hot, a water system kicks in to cool the incoming air (one example was lowering ~105 degree outside air to ~95 degree air inside, cool enough for the servers). If it gets too cold, an air recirculation system kicks in and sends a portion of the server exhaust back to the front of the servers to mix with the incoming cold air. If it gets too humid, it does something else to compensate (I forgot what).

They haven’t deployed it at any scale yet, so they don’t have hard data, but they have done enough research to move forward with the project.
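The recirculation mode is essentially a mixing problem. A minimal sketch, assuming the blended inlet temperature is a simple weighted average of exhaust and outside air (ignoring humidity, which the text doesn’t detail), solves for the share of exhaust to send back; the mode thresholds are hypothetical, not Microsoft’s.

```python
def recirculation_fraction(outside_f, exhaust_f, target_inlet_f):
    """Fraction of server exhaust to blend with outside air to reach a target inlet temperature.

    Simple mixing model: inlet = f * exhaust + (1 - f) * outside, solved for f and
    clamped to [0, 1]. Ignores humidity and assumes equal air properties.
    """
    if exhaust_f <= outside_f:
        raise ValueError("exhaust must be warmer than outside air for recirculation to help")
    f = (target_inlet_f - outside_f) / (exhaust_f - outside_f)
    return min(1.0, max(0.0, f))


def cooling_mode(outside_f, too_cold_f=50.0, too_hot_f=95.0):
    """Pick a mode from outside temperature; both thresholds are hypothetical."""
    if outside_f > too_hot_f:
        return "water-cooled intake"
    if outside_f < too_cold_f:
        return "recirculate exhaust"
    return "outside air only"
```

For example, with 20°F outside air, 100°F exhaust, and a 60°F target, `recirculation_fraction(20, 100, 60)` returns 0.5: half exhaust, half outside air.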

I’ll tell you what, I’m glad I don’t deal with server hardware anymore. These new data centers run so hot I’d want them to run the cooling just for me; I can’t work in those temperatures, I’d die.

Server level UPS

You may have heard people like Google talking about using batteries in their servers. I never understood this concept myself. I can understand not using big centralized UPSs in your data center, but I would expect the logical move to be rack-level UPSs: ones that take AC input and output DC power to the servers directly.

One of the main complaints about normal UPSs, as far as efficiency goes, is the double conversion: incoming AC is converted to DC for the batteries, then back to AC to go to the racks. I think this is mainly because DC power isn’t very good over long distances. But there are already designs on the market from companies like SGI (aka Rackable) for rack-level power distribution (i.e. no power supplies in the servers). This is the CloudRack product, something I’ve come to really like since I first got wind of it in 2008.

If you have such a system (and I imagine Google does something similar), I don’t understand why you’d put batteries in the servers instead of integrating them into the rack power distribution, but whatever, it’s their choice.
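The efficiency argument against double conversion comes down to multiplication: losses in power stages connected in series compound. A minimal sketch, with hypothetical stage efficiencies that are not from the talk:

```python
from math import prod


def chain_efficiency(stage_efficiencies):
    """End-to-end efficiency of power conversion stages in series is their product."""
    return prod(stage_efficiencies)


def conversion_loss_w(load_w, stage_efficiencies):
    """Watts lost in conversion while delivering load_w to the servers."""
    return load_w / chain_efficiency(stage_efficiencies) - load_w
```

With made-up stages of 95% (AC to DC), 95% (DC back to AC), and 90% (server power supply), `chain_efficiency([0.95, 0.95, 0.90])` is about 0.81, so roughly 19% of the power drawn is lost before it reaches the components; removing one 95% stage lifts that to about 0.855.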

I attended a breakout session on this topic presented by someone from the Electric Power Research Institute. The speaker got pretty deep into electrical terminology, beyond what I could understand, but I got some of the basic concepts.

The most interesting claim he made was that 90% of electrical disruptions last less than two seconds. This alone was enough for me to understand why people are looking at server-level batteries instead of big centralized systems.

They did pretty extensive testing of system components under power disruptions and had some interesting results. Honestly, I can’t really recite them; they were pretty technical. The testing involved measuring disruptions in number of cycles (each cycle is 1/60th of a second on a 60 Hz grid) and comparing the duration of each disruption with its magnitude (in their case, voltage sags). In other words, they measured the “breaking” point of equipment: what sort of disruptions it can sustain. He said that for the most part power supplies are rated to handle 4 cycles of disruption, or 4/60ths of a second (about 67 ms), likely without noticeable impact. Beyond that, in most cases the equipment won’t get damaged, but it will shut off or reboot.
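The cycle arithmetic is easy to get wrong, so here it is spelled out. This sketch encodes only the speaker’s rough rule (about 4 cycles of ride-through, then a shutdown or reboot) on a 60 Hz grid; real tolerance curves also depend on sag depth, which it deliberately ignores, and the function names are hypothetical.

```python
LINE_HZ = 60  # North American grid frequency; one cycle is 1/60 s


def cycles_to_seconds(cycles, line_hz=LINE_HZ):
    """Convert a disruption length in line cycles to seconds."""
    return cycles / line_hz


def expected_outcome(duration_s, ride_through_cycles=4, line_hz=LINE_HZ):
    """Duration-only rule of thumb: about 4 cycles of ride-through, then shutdown/reboot.

    Real tolerance curves also depend on how deep the sag is, which this ignores.
    """
    if duration_s <= cycles_to_seconds(ride_through_cycles, line_hz):
        return "rides through"
    return "likely shuts off or reboots"
```

Four cycles is about 0.067 s, while a two-second disruption is `cycles_to_seconds(120)`, i.e. 120 cycles, which is why a supply needs extra stored energy to cover it.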

He also said that in their surveys it was very rare for power sags to go below 60% of nominal voltage. That made me think about some older, lower-quality UPSs I used to have in combination with auto-switching power supplies. When the UPS went to battery, it caused a voltage spike, which convinced the auto-switching power supplies to switch from 120V to 208V, and that immediately tripped their breaker/safety mechanism because the voltage returned to 120V within who knows how many cycles. I remember that when I was ordering special high-grade power supplies I had to request that they be hard-set to 110V to avoid this. Eventually I replaced the UPSs with better ones and haven’t had the problem since.

But it got me thinking: could the same thing happen in reverse? Pretty much all servers now have auto-switching power supplies, and one of this organization’s tests involved 208V/220V power sags dropping as low as 60% of regular voltage. Could that convince the power supply it’s time to go to 120V? I didn’t get to ask.
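The arithmetic behind that worry: a sag to 60% of 208V leaves about 125V, and 60% of 220V leaves 132V, both much closer to a 120V feed than to a 208V one. The sketch below assumes, hypothetically, a supply that picks its range from a single switch point (160V here); whether real server supplies behave anything like this is exactly the open question.

```python
def sagged_voltage(nominal_v, remaining_fraction):
    """Voltage left during a sag, expressed as a fraction of nominal."""
    return nominal_v * remaining_fraction


def naive_range_select(measured_v, switch_point_v=160.0):
    """Hypothetical auto-ranging logic with a single switch point (not any real PSU's design)."""
    return "120V range" if measured_v < switch_point_v else "208/240V range"
```

Under this made-up logic, `naive_range_select(sagged_voltage(208, 0.6))` lands in the 120V range, which is the failure mode in question.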

They constructed a power supply board with some special capacitors, I believe (they were certainly not batteries, but there may have been another technical term that escapes me), which can store enough energy to ride through the two-second window in which 90% of power problems occur. He talked about other components that assist in charging this capacitor; since it was an order of magnitude larger than the regular ones in the system, special safeguards had to be in place to prevent it from exploding or something when it charged up. Again, lots of electrical stuff beyond my areas of expertise (and interest, really!).

They demonstrated it in the lab, and it worked well. He said there really isn’t anything like it on the market, and this is purely a lab project; they don’t plan to make anything to sell. The power supply manufacturers are able to build this, but they are waiting to see if a market develops, i.e. whether there will be enough demand in the general computing space to make such technology available.
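For a sense of scale, the energy needed to ride through a disruption is just power times time, and the usable energy in a capacitor discharging from V1 to V2 is 0.5 × C × (V1² − V2²). A minimal sketch solving for C; the 350 W load and the 400V to 300V discharge window are hypothetical numbers, not figures from the EPRI prototype.

```python
def required_capacitance_f(load_w, hold_s, v_start, v_min, efficiency=1.0):
    """Capacitance (farads) needed to carry load_w for hold_s while discharging from v_start to v_min.

    Usable energy between two voltages is 0.5 * C * (v_start**2 - v_min**2);
    set that equal to the energy drawn, load_w * hold_s / efficiency, and solve for C.
    """
    if v_start <= v_min:
        raise ValueError("v_start must exceed v_min")
    energy_j = load_w * hold_s / efficiency
    return 2 * energy_j / (v_start ** 2 - v_min ** 2)
```

`required_capacitance_f(350, 2, 400, 300)` comes out to 0.02 F (20,000 µF), before accounting for conversion losses via the `efficiency` parameter.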

I’d be willing to bet that, for the most part, people will not use server-level batteries. In my opinion it doesn’t make sense unless you’re operating at a really large scale. I believe most people want and need more of a buffer, more of a margin of error to be able to correct things that might fail; having only a few seconds to respond really isn’t enough time. At a certain scale it becomes less important, but most places aren’t at that scale. I’d guesstimate that scale doesn’t kick in until you have high hundreds or thousands of systems, preferably at diverse facilities, and most of those systems have to be in like configurations running similar or identical applications in a highly redundant setup. Until you get there, I think it will still be very popular to stick with redundant power feeds and redundant UPSs. The cost of recovering from such a power outage is greater than what you gain in efficiency (in my opinion).

In case it’s not obvious, I feel the same way about flywheel UPSs.

Airflow Optimization

Another breakout session was about airflow optimization. The one interesting thing I learned here is that you can gauge how efficient your airflow is by comparing the temperature at the intake of the AC units with the temperature at their output. If the difference is small (under 10 degrees), there is too much mixing of hot and cold air going on. With a 100% efficient cooling system, the difference will be 28-30 degrees. He also mentioned that there isn’t much point in completely isolating thermal zones from each other unless you’re running high densities (at least 8kW per rack); below that, the ROI takes too long for it to be worthwhile.
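The rule of thumb translates directly into a check you could run against AC return and supply sensor readings. A minimal sketch, assuming temperatures in Fahrenheit (the session’s numbers read like °F, but that is an assumption); the thresholds are the ones quoted above, and the function names are hypothetical.

```python
def airflow_delta_t(return_air_f, supply_air_f):
    """Temperature drop across the AC unit: air entering it minus air leaving it."""
    return return_air_f - supply_air_f


def assess_airflow(return_air_f, supply_air_f):
    """Rule of thumb: under 10 degrees means heavy mixing, 28-30 is ideal."""
    dt = airflow_delta_t(return_air_f, supply_air_f)
    if dt < 10:
        return "too much hot/cold air mixing"
    if dt >= 28:
        return "close to ideal"
    return "room for improvement"


def containment_worth_it(kw_per_rack, threshold_kw=8.0):
    """Full thermal-zone isolation only pays back quickly at high density."""
    return kw_per_rack >= threshold_kw
```

An AC unit taking in 80°F air and blowing out 72°F air (`assess_airflow(80, 72)`) is a sign that supply air is short-circuiting back to the intake before it does useful work.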

He mentioned one customer they worked with that spent $45k on sensors (350 of them, I think) and a bunch of other stuff to optimize the airflow in their 5,000 square foot facility. While they could have saved more by keeping up to 5 of their CRAH(?) units (AC units) turned off, in the end the customer wanted to keep them all on because they were not comfortable operating with temperatures in the mid-70s. But even with all the ACs on, the airflow optimization saved them ~5.7% in power, which resulted in something like $33k in annual savings. And now they have the process and equipment to repeat this procedure on their own in other locations if they want.
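Those figures also let you back out a couple of numbers the speaker didn’t state. This sketch assumes the ~5.7% is a share of the facility’s total annual power bill and counts only the $45k sensor spend, since the “other stuff” wasn’t priced; the function names are hypothetical.

```python
def implied_baseline_cost(annual_savings, savings_fraction):
    """Annual power spend implied by saving a given fraction of it."""
    return annual_savings / savings_fraction


def simple_payback_years(upfront_cost, annual_savings):
    """Years to recoup an upfront cost, ignoring financing and maintenance."""
    return upfront_cost / annual_savings
```

`implied_baseline_cost(33000, 0.057)` is roughly $579k a year, and `simple_payback_years(45000, 33000)` is about 1.36, so the sensors alone would pay for themselves in well under two years; the unpriced extras would stretch that.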

Other stuff

There were a couple of other breakout sessions I went to. One was from some sort of Wall Street research firm and really wasn’t interesting; the speaker mostly talked about what his investors are interested in (stupid things like the number of users on Twitter and Facebook; if you know me, you know I really hate these social sites).

And I can’t leave this post without mentioning the most pointless breakout session ever (sorry, no offense to the person who put it on), which was about Developing Cloud Services. I really wasn’t expecting much, but what I got was nothing. He spent something like 30 minutes talking about how you need infrastructure, power, network, support, etc. I talked with another attendee who agreed that this guy had no idea what he was talking about and was just rambling on about infrastructure (he works for a data center company). I can understand talking about that stuff, but everything he covered was so incredibly obvious that it was a pointless waste of time.

Shameless Plug

If you’re building out a data center with traditional rack-mount systems and can choose which PDUs you use, I suggest you check out Servertech’s stuff. I really like them for their flexibility, and on their higher-end models they also offer integrated environmental sensors: if you have 2 PDUs per rack, for example, you can have up to 4 sensors (two in front, two in back; yes, you want sensors in back). I really love the insight that massive instrumentation gives, and it’s nice to have this feature built into the PDU so you don’t need extra equipment.

Servertech also has software that integrates with server operating systems and can proactively and gracefully shut down systems (in any order you want) as the temperature rises in the event of a cooling failure; they call it Smart Load Shedding.

I think they may also be unique in the industry in having a solution that can measure power usage on a per-outlet basis, and they claim accuracy within something like 1%. I recall asking another PDU manufacturer a few years ago about the prospects of measuring power on a per-outlet basis, and they said it was too expensive: it would add ~$50 per monitored outlet. I don’t know what Servertech charges for these new CDUs (as they call them), but I’m sure it’s not $50/outlet more.

There may be similar solutions elsewhere in the industry, but I haven’t found a reason to move away from Servertech yet. My previous PDU vendor had some pretty severe quality issues (~30% failure rate), so not all solutions are equal.
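The ordered, temperature-driven shutdown behind Smart Load Shedding can be sketched as a simple rule table. This is not Servertech’s software or API; `ShedRule`, the host names, and the thresholds are hypothetical, and a real implementation would also need to trigger the actual OS shutdown and debounce noisy sensor readings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ShedRule:
    host: str
    shutdown_at_f: float  # inlet temperature (°F) at which this host is shut down gracefully


def hosts_to_shed(rules, inlet_temp_f, already_down=frozenset()):
    """Hosts whose threshold has been reached, lowest threshold first, skipping ones already down."""
    due = [r for r in rules if inlet_temp_f >= r.shutdown_at_f and r.host not in already_down]
    return [r.host for r in sorted(due, key=lambda r: r.shutdown_at_f)]


# Hypothetical policy: least important systems go first.
RULES = [
    ShedRule("batch-1", 85.0),
    ShedRule("web-1", 95.0),
    ShedRule("db-1", 100.0),
]
```

With these rules, an inlet reading of 96°F returns `['batch-1', 'web-1']`, while the database keeps running until the temperature reaches 100°F.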

Conclusion

In the end, it pretty much lived up to my expectations. I would not pay to attend this event unless I were building or designing data centers. The sales guys from Datacenter Dynamics tried to convince me that it would be of value to me, and their site lists a wide range of professions that can benefit from it. Maybe that’s true; I learned a few things, but really nothing that will cause me to adjust strategy in the near future.