(I don’t know if I need one of those disclaimers at the top saying HP paid for my hotel and such in Vegas for Discover, since I learned about this before I got here and was going to write about it anyway. In any case, know that they did.)

All about Flash

The 3PAR announcements at HP Discover this week are all about HP 3PAR’s all flash array, the 7450, which was announced at last year’s Discover event in Las Vegas. HP has tried hard to convince the world that the 3PAR architecture is competitive even in the new world of all flash. Several of the other big players in storage (EMC, NetApp, and IBM) have acquired companies specializing in all flash; NetApp has both acquired and been simultaneously building a new system (apparently called FlashRay, which folks think will be released later in the year).

Dell and HDS, like HP, have decided not to do that, relying instead on in-house technology for all flash use cases. Of course there have been a ton of all flash startups, all trying to be market disruptors.

So first a brief recap of what HP has done with 3PAR to-date to optimize for all flash workloads:

- Faster CPUs, doubling of the data cache (7400 vs 7450)

- Sophisticated monitoring and alerting with SSD wear leveling (alert at 90% of max endurance, force fail the SSD at 95% max endurance)

- Adaptive Read cache – only read what you need, does not attempt to read ahead because the penalty for going back to the SSD is so small, and this optimizes bandwidth utilization

- Adaptive write cache – only write what you require. If 4kB of a 16kB page is written then the array only writes 4kB, which reduces wear on the SSD, which typically has a shorter life span than spinning rust.

- Autonomic cache offload – more sophisticated cache flushing algorithms (this particular one has benefits for disk-based 3PARs as well)

- Multi tenant I/O processing – multi threaded cache flushing, and supports both large (typically sequential) and small (typically random) I/O sizes simultaneously in an efficient manner – separates the large I/Os into more efficient small I/Os for the SSDs to handle.

- Adaptive sparing – basically allows them to unlock hidden storage capacity (upwards of 20%) on each SSD to use for data storage without compromising anything.

- Optimized the 7xxx platform by leveraging PCI Express’ Message Signaled Interrupts, which allowed the system to reach a staggering 900,000 IOPS at 0.7 millisecond response times (caveat: that is a 100% read workload)

I learned at HP Storage tech day last year that among the features 3PAR was working on were:
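The wear thresholds and the adaptive write behavior in the list above are easy to picture in code. What follows is a minimal Python sketch, not 3PAR's firmware logic: the 90%/95% thresholds and the 16kB page come from the list, while the function names, the enum, and the idea of expressing wear as a fraction of rated endurance are assumptions made purely for illustration.

```python
from enum import Enum

ALERT_THRESHOLD = 0.90  # alert at 90% of max endurance (figure from the post)
FAIL_THRESHOLD = 0.95   # force fail at 95% of max endurance (figure from the post)
PAGE_SIZE_KB = 16       # 3PAR page size mentioned in the post


class WearAction(Enum):
    OK = "ok"
    ALERT = "alert"
    FORCE_FAIL = "force_fail"


def wear_action(endurance_used: float) -> WearAction:
    """Map the fraction of rated endurance consumed to an action (hypothetical helper)."""
    if endurance_used >= FAIL_THRESHOLD:
        return WearAction.FORCE_FAIL
    if endurance_used >= ALERT_THRESHOLD:
        return WearAction.ALERT
    return WearAction.OK


def kb_written(dirty_kb: int, adaptive: bool = True) -> int:
    """KB sent to the SSD when dirty_kb of a single 16kB page has changed."""
    if not 0 <= dirty_kb <= PAGE_SIZE_KB:
        raise ValueError("dirty_kb must be between 0 and the page size")
    if dirty_kb == 0:
        return 0  # nothing changed, nothing to flush
    # Adaptive: write only the dirty part. Non-adaptive: rewrite the full page.
    return dirty_kb if adaptive else PAGE_SIZE_KB
```

With these definitions, `kb_written(4)` gives 4 while `kb_written(4, adaptive=False)` gives 16, which is the 4x difference in media writes the adaptive write cache bullet describes for that case.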

- In line deduplication for file and block

- Compression

- File+Object services running directly on 3PAR controllers

There were no specifics given at the time.

Well part of that wait is over.

Hardware-accelerated deduplication

In what I believe is an industry exclusive, somehow 3PAR has managed to find some spare silicon in their now 3-year old Gen4 ASIC to give complete CPU-offloaded inline deduplication for transactional workloads on their 7450 all flash array.

They say that the software will typically return data reduction levels of 4:1 to 10:1. This is not meant to compete against HP StoreOnce, which offers much higher levels of data reduction; this is for transaction processing (which StoreOnce cannot do) and primarily to reduce the cost of operating an all flash system.

It has been interesting to see 3PAR evolve, as a customer of theirs for almost eight years now. I remember when NetApp came out and touted deduplication for transactional workloads and 3PAR didn’t believe in the concept due to the performance hit you would (and they did) take.

Now they have line rate (I believe) hardware deduplication, so that argument no longer applies. The caveat, at least for this announcement, is that this feature is limited to the 7450. There is nothing technically that prevents it from getting to their other Gen4 systems, whether it is the 7200, 7400, 10400, or 10800, but support for those is not mentioned yet. I imagine 3PAR is beating their drum to the drones out there who might still be discounting 3PAR because they have a unified architecture across AFA, hybrid flash/disk, and disk-only systems (like mine).

One of 3PAR’s main claims to fame is that you can crank up a lot of their features without impacting system performance, because most of the work is performed by the ASIC. It is nice to see that they have been able to continue this trend. While deduplication obviously wasn’t introduced on day one with the Gen4 ASIC, it does not require customers to wait for, or upgrade their existing systems to, the next generation ASIC (whenever the Gen5 comes out, I’d wager December 2015) to get this functionality.

The deduplication operates using fixed page sizes that are 16kB each, which is a standard 3PAR page size for many operations like provisioning.

For 3PAR customers, note that this technology is scoped to Common Provisioning Groups (CPGs): data within a CPG can be deduplicated against other data in that same CPG. If you opt for a single CPG on your system and put all of your volumes on it, then that effectively makes the deduplication global.
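To make the fixed-page, per-CPG scoping concrete, here is a toy Python sketch. It is emphatically not how the Gen4 ASIC does it: the SHA-256 fingerprint, the `DedupStore` class, and the in-memory dictionaries are all assumptions for illustration. Only the 16kB page size and the "dedupe within a CPG" rule come from the text above.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List

PAGE_SIZE = 16 * 1024  # 16kB fixed page, per the post


class DedupStore:
    """Toy content-addressed store with one page index per CPG (hypothetical)."""

    def __init__(self) -> None:
        # CPG name -> {page fingerprint -> page bytes}
        self._pages: Dict[str, Dict[str, bytes]] = defaultdict(dict)
        self.logical_pages = 0  # pages written by hosts, before deduplication

    def write(self, cpg: str, data: bytes) -> List[str]:
        """Split data into fixed pages, keep only unseen pages, return fingerprints."""
        fingerprints = []
        for offset in range(0, len(data), PAGE_SIZE):
            # Zero-pad a short final chunk so every page is exactly PAGE_SIZE.
            page = data[offset:offset + PAGE_SIZE].ljust(PAGE_SIZE, b"\0")
            fingerprint = hashlib.sha256(page).hexdigest()
            # Duplicates are only detected inside the same CPG.
            self._pages[cpg].setdefault(fingerprint, page)
            fingerprints.append(fingerprint)
            self.logical_pages += 1
        return fingerprints

    def stored_pages(self) -> int:
        """Unique pages actually kept, summed over all CPGs."""
        return sum(len(index) for index in self._pages.values())

    def reduction_ratio(self) -> float:
        """Logical pages divided by stored pages (1.0 when nothing is stored)."""
        stored = self.stored_pages()
        return self.logical_pages / stored if stored else 1.0
```

Writing the same two-page image twice into one CPG stores two pages for four logical pages (2:1). Writing it again into a second CPG stores two more pages, which is why a single CPG effectively makes the deduplication global.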

Express Indexing

This is a patented approach which allows 3PAR to use significantly less memory than would be otherwise required to store lookup tables.

Thin Clones

Thin clones are basically the ability to deduplicate VM clones (I imagine this would need hypervisor integration like VAAI) for faster deployment. So you could probably deploy clones at 5-20x the speed at which you could before.

NetApp here too has been touting a similar approach for a few years on their NFS platform anyway.

Two Terabyte SSDs

Well, almost 2TB, coming in at 1.9TB. These are actually 1.6TB cMLC SSDs, but the aforementioned adaptive sparing allows 3PAR to bump the usable capacity of the device way up without compromising on any aspect of data protection or availability.
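As a quick sanity check on the adaptive sparing claim, the arithmetic can be written out. This tiny Python sketch assumes the drive's base capacity is 1600GB and the exposed capacity is 1920GB (the figure HP's ordering PDF uses); the function name is hypothetical.

```python
def sparing_gain(base_gb: float, exposed_gb: float) -> float:
    """Fractional capacity gained by exposing spare area as usable space."""
    return (exposed_gb - base_gb) / base_gb


# 1.6TB drive presented as 1.92TB
gain = sparing_gain(1600, 1920)
print(f"{gain:.0%}")
```

That works out to a 20% gain, which lines up with the "upwards of 20%" figure quoted for adaptive sparing earlier.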

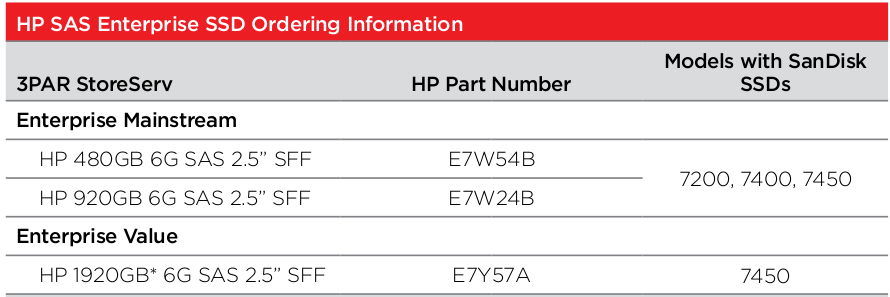

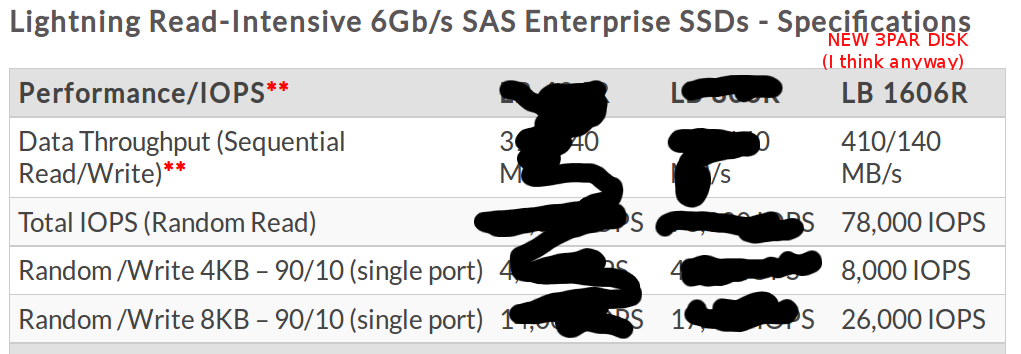

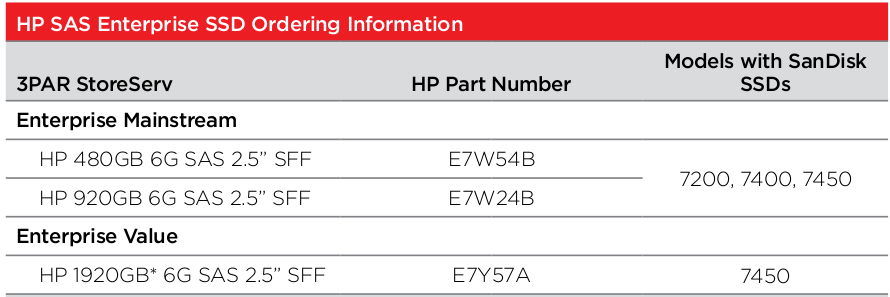

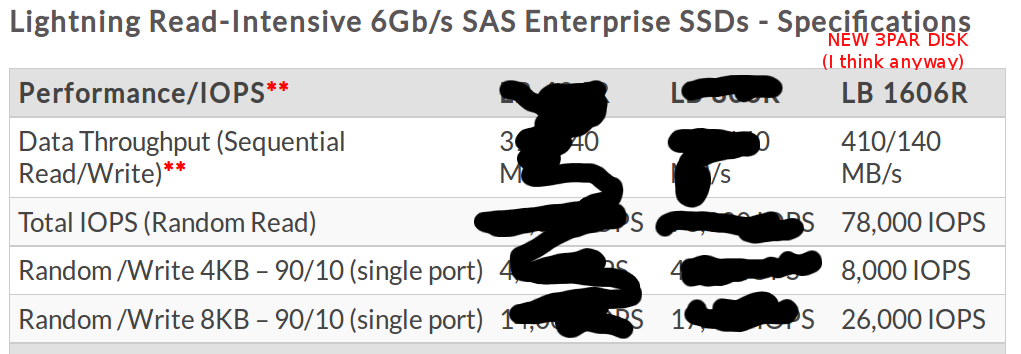

These appear to be Sandisk Lightning Read-intensive SSDs. I found that info here (PDF).

Info on ordering Sandisk SSDs for HP 3PAR

I also quote the aforementioned PDF:

The 1920GB is available only in the StoreServ 7450 until the end of September 2014.

It will then be available in other models as well October 2014.

New 1.6TB SSD I believe comes from Sandisk, not the fastest around but certainly a bunch faster than a 15k RPM disk!

These SSDs come with a five year unconditional warranty, which is better than the included warranty on disks on 3PAR (three years). This 5-year warranty is extended to the 480GB and 920GB MLC SSDs as well. Assuming Sandisk is indeed the supplier, the 5-year warranty exceeds the 3-year warranty the manufacturer itself offers.

These are technically consumer grade; however, HP touts their sophisticated flash features that make the media effectively more reliable than it otherwise might be in another architecture, and that claim is backed by the new unconditional warranty.

These are much more geared towards reads vs writes, and are significantly lower cost on a per GB basis than all previous SSD offerings from HP 3PAR.

The cost impact of these new SSDs is pretty dramatic, with the per GB list cost dropping from about $26.50 this time last year to about $7.50 this year.

These new SSDs allow for up to 460TB of raw flash on the 7450, which HP claims is seven times more than Pure Storage (which is a massively funded AFA startup), and 12 times more than a four brick EMC XtremIO system.

With deduplication the 7450 can get upwards of 1.3PB of usable flash capacity in a single system along with 900,000 read I/Os with sub millisecond response times.

Dell Compellent about a year or so ago updated their architecture to leverage what they called read optimized low cost SSDs, and updated their auto tiering software to be aware of the different classes of SSDs. There are no tiering enhancements announced today, in fact I suspect you can’t even license the tiering software on a 3PAR 7450 since there is only one “tier” there.

So what do you get when you combine this hardware accelerated deduplication and high capacity low cost solid state media?

Solid state at less than $2/GB usable

HP says this puts solid state costs roughly in line with that of 15k RPM spinning disk. This is a pretty impressive feat. Not a unique one, there are other players out there that have reached the same milestone, but that is obviously not the only arrow 3PAR has in their arsenal.
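The post does not spell out how HP arrives at the sub-$2 figure, but the numbers quoted above are at least consistent with it. Here is a back-of-the-envelope Python sketch. It assumes list price per raw GB, ignores RAID and sparing overhead unless you pass a `raw_to_usable` factor, and both function names are made up for illustration.

```python
def cost_per_usable_gb(list_cost_per_raw_gb: float,
                       dedup_ratio: float,
                       raw_to_usable: float = 1.0) -> float:
    """Effective $/GB after deduplication.

    raw_to_usable is the fraction of raw capacity left after RAID and
    sparing overhead; 1.0 means that overhead is ignored (an assumption).
    """
    return list_cost_per_raw_gb / (dedup_ratio * raw_to_usable)


def implied_reduction(raw_tb: float, effective_tb: float) -> float:
    """Overall raw-to-effective multiplier implied by two capacity figures."""
    return effective_tb / raw_tb


# $7.50/GB list at the low end of the 4:1 to 10:1 range
print(cost_per_usable_gb(7.50, 4.0))
# 460TB raw versus 1.3PB (1300TB) quoted with deduplication
print(round(implied_reduction(460, 1300), 2))
```

At 4:1 the list price works out to about $1.88 per GB before any overhead. The 460TB to 1.3PB figures imply a net multiplier of roughly 2.8x, so HP's capacity number appears to bake in something more conservative than a straight 4:1, presumably RAID overhead, a lower assumed ratio, or both.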

That arsenal is what HP believes is the reason you should go 3PAR for your all flash workloads. Forget about the startups, forget about EMC’s XtremIO, forget about NetApp FlashRay, forget about IBM’s TMS flash systems, etc.

Six nines of availability, guaranteed

HP is now willing to put their money where their mouth is and sign a contract that guarantees six nines of availability on any 4-node 3PAR system (I originally thought it was 7450-specific; it is not). That is a very bold statement to make in my opinion. This obviously comes as a result of an architecture that has been refined over the past roughly fifteen years and has some really sophisticated availability features including:

- Persistent ports – very rapid fail over of host connectivity for all protocols in the event of planned or unplanned controller disruption. They have laser loss detection for fibre channel as well which will fail over the port if the cable is unplugged. This means that hosts do not require MPIO software to deal with storage controller disruptions.

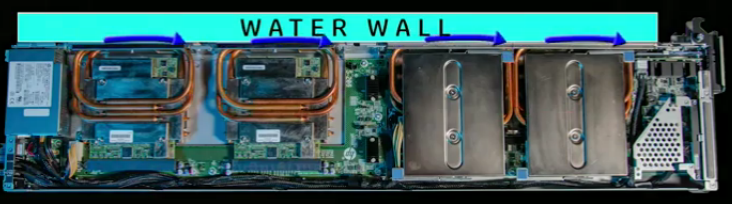

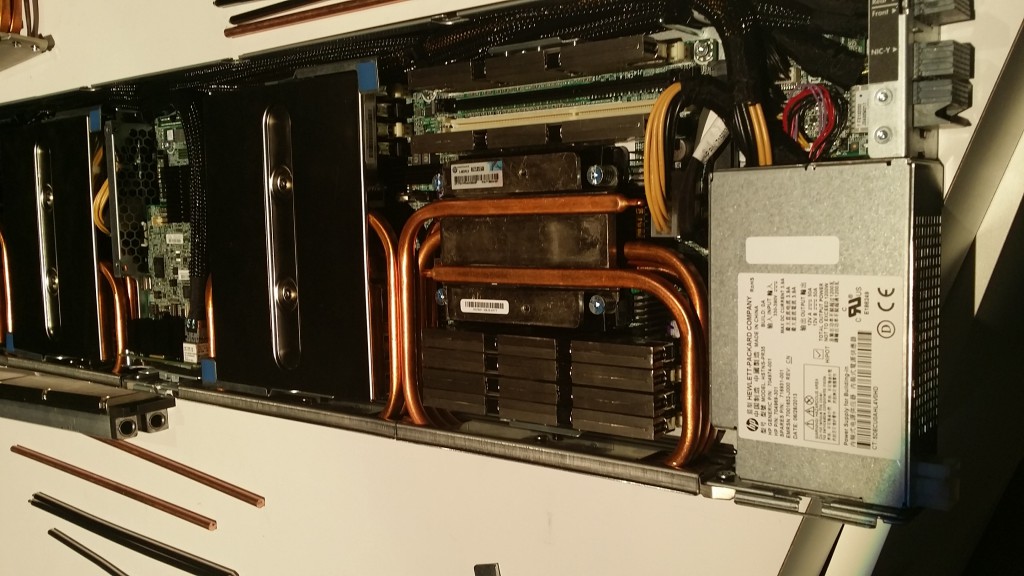

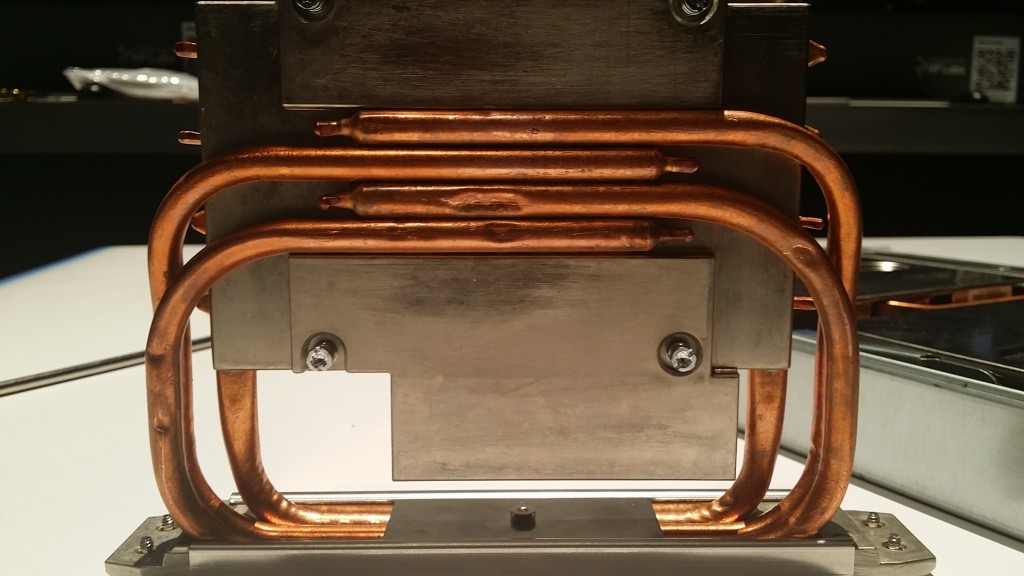

- Persistent cache – rapid re-mirroring of cache data to another node in the event of planned or unplanned controller disruption. This prevents the system from going into “write through” mode which can otherwise degrade performance dramatically. The bulk of the 128 GB of cache (4-node) on a 7450 is dedicated to writes (specifically optimizing I/O for the back end for the most efficient usage of system resources).

- The aforementioned media wear monitoring and proactive alerting (for flash anyway)

They have other availability features that span systems (the guarantee does not require any of these):

I would bet that you’ll have to follow very strict guidelines to get HP to sign on the dotted line, no deviation from supported configurations. 3PAR has always been a stickler for what they have been willing to support, for good reason.
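To put "six nines" in perspective, here is the arithmetic as a short Python sketch. The post does not say over what period the guarantee is measured, so a 365-day year is an assumption here, and the function name is hypothetical.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 (non-leap year, an assumption)


def allowed_downtime_seconds(nines: int,
                             period_seconds: int = SECONDS_PER_YEAR) -> float:
    """Downtime budget for an availability of `nines` nines over a period."""
    unavailability = 10 ** -nines  # six nines -> 0.000001
    return period_seconds * unavailability


for n in (3, 4, 5, 6):
    print(f"{n} nines: {allowed_downtime_seconds(n):,.1f} seconds per year")
```

Six nines leaves a budget of roughly 31.5 seconds of downtime per year, versus a bit over five minutes for five nines, which is why strict adherence to supported configurations would matter so much.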

Performance guaranteed

HP won’t sign on a dotted line for this, but with the previously released Priority Optimization, customers can guarantee their applications:

- Performance minimum threshold

- Performance maximum threshold (rate limiting)

- Latency target

All of this can be set in a very flexible manner. These capabilities (combined) are, I believe, still unique in the industry (some folks can do rate limiting alone).
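Of the three knobs, the maximum threshold (rate limiting) is the easiest to picture. A token bucket is one common, generic way to cap I/O rates, sketched below in Python. This is not a description of how Priority Optimization is implemented, and it covers only the maximum threshold, not the minimum or the latency target; the class and parameter names are assumptions. Time is passed in explicitly so the behavior is deterministic.

```python
class TokenBucket:
    """Generic token-bucket limiter: at most `rate_iops` sustained, `burst` at once."""

    def __init__(self, rate_iops: float, burst: float) -> None:
        self.rate = rate_iops    # tokens added per second
        self.capacity = burst    # maximum tokens the bucket can hold
        self.tokens = burst      # start with a full bucket
        self.last = 0.0          # timestamp of the previous call, in seconds

    def allow(self, now: float) -> bool:
        """Return True if one I/O may proceed at time `now` (seconds)."""
        elapsed = now - self.last
        self.last = now
        # Refill for the elapsed time, never beyond the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


bucket = TokenBucket(rate_iops=2, burst=2)
decisions = [bucket.allow(t) for t in (0.0, 0.0, 0.0, 0.5, 0.5)]
print(decisions)
```

In this run the first two I/Os at time 0 pass on the burst allowance, the third is held back, and half a second later exactly one more is admitted.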

Online import from EMC VNX

This was announced about a month or so ago, but basically HP makes it easy to import data from an EMC VNX without any external appliances, professional services, or performance impact.

This product (which is basically a plugin written to interface with VNX/CX’s SMI-S management interface) went generally available, I believe, this past Friday. I watched a full demo of it at Discover and it was pretty neat. It does require direct fibre channel connections between the EMC and 3PAR systems, and it does require two outages on the server side (at least in the case of Windows, which is what the demo used):

- Outage 1: remove EMC Powerpath – due to some damage Powerpath leaves behind you must also uninstall and re-install the Microsoft MPIO software using the standard control panel method. These require a restart.

- Outage 2: Configure Microsoft MPIO to recognize 3PAR (requires restart)

Once the 2nd restart is complete the client system can start using the 3PAR volumes as the data migrates in the background.

So online import may not be the right term for it, since the system does have to go down at least in the case of Windows configuration.

The import process currently supports Windows and Linux. I believe the product came about because the MPX 2000 appliance, which HP had been using to migrate data, reached end of life. HP needed something to replace that functionality, so they leveraged the Peer Motion technology already on 3PAR, which was already used for importing data from HP EVA storage, and extended it to EMC. They are evaluating the possibility of extending this to more platforms. I guess VNX/CX was an easy first target given there are a lot of old ones out there, and there isn’t an easier migration path than the EMC to 3PAR import tool (which is apparently significantly easier and less complex than EMC’s options). One of the benefits HP touts of their approach is that it has no impact on host performance, as the data goes directly between the arrays.

The downside to this direct approach is that the 7000-series 3PAR arrays are very limited in port counts, especially if you happen to have iSCSI HBAs in them (as were the F and E classes before them). The 10000-series has a ton of ports though. I learned last year at HP Storage tech day that HP was looking at possibly shrinking the bigger 10000-series controllers for the next round of mid range systems (rather than making the 7000-series controllers bigger) in an effort to boost expansion capacity. I’d like to see at least 8 FC host ports and 2 iSCSI host ports per controller on mid range. Currently you get only 2 FC host ports if you have an iSCSI HBA installed in a 7000-series controller.

The tool is command line based. It has a few basic commands and interfaces directly with the EMC and 3PAR systems.

The tool is free of charge as far as I know. While 3PAR likes to tout that no professional services are required, HP says some customers may need assistance planning migrations (especially at larger scales). If that is the case, HP has services ready to hold your hand every step of the way.

What I’d like to see from 3PAR still

Read and write SSD caches

I’ve talked about it for years now, but I still want to see a read (and write, since my workloads are 90%+ write) caching system that leverages high endurance SSDs on 3PAR arrays. HP announced SmartCache for ProLiant Gen8 systems, I believe about 18 months ago, with plans to extend support to 3PAR, but that has not yet happened. 3PAR is well aware of my request, so nothing new here. David Scott did mention that they still want to do this, with no official timelines yet. It also sounded like they will not go forward with the server-side SmartCache integration with 3PAR (I’d rather have the cache in the array anyway, and they seem to agree).

David Scott – SVP and GM of all of HP Storage talking with us bloggers in the lounge

3PAR 7450 SPC-1

I’d like to see SPC-1 numbers for the 7450, especially with these new flash media. It ought to provide some pretty compelling cost and performance numbers. You can see some recent performance testing (that wasn’t 100% read) that was done on a four node 7450 on behalf of HP.

Demartek also found that the StoreServ 7450 was not very heavily taxed with a single OLTP database accessing the array. As a result, we proceeded to run a combination of database workloads including two online transaction processing (OLTP) workloads and a data warehouse workload to see how well this storage system would handle a fairly heavy, mixed workload.

HP says the lack of SPC-1 results comes down to priorities. It’s a decent amount of work to do the tests and they have had people working on other things. They still intend to do them but are not sure when it will happen.

Compression

Would like to see compression support, not sure whether or not that will have to wait for Gen5 ASIC or if there are more rabbits hiding in the Gen4 hat.

Feature parity

Certainly want to see deduplication come to the other Gen4 platforms. HP touts a lot about the competition’s flash systems being silos. I’ll let you in on a little secret – the 3PAR 7450 is a flash silo as well. Not a technical silo, but one imposed on the product by marketing. While the reasons behind it are understandable, it is unfortunate that HP feels compelled to limit the product to appease certain market observers.

Faster CPUs

I was expecting a CPU refresh on the 7450, which was launched with an older generation of processor because HP didn’t want to wait for Intel’s newest chip to launch their new storage platform. I was told last year the 7450 is capable of operating with the newer chip, so it should just be a matter of plugging it in and doing some testing. That is supposed to be one of the benefits of using x86 processors: you don’t need to wait years to upgrade. HP says the Gen4 ASIC is not out of gas and the performance numbers to-date are limited by the CPU cores in the system, so faster CPUs would certainly benefit the system further without much cost.

No compromises

At the end of the day the 3PAR flash story has evolved into one of no compromises. You get the low cost flash, you get the inline hardware accelerated deduplication, you get the high performance with multitenancy and low latency. You get all of that and you’re not compromising on any other tier 1 capabilities (too many to go into here, you can see past posts for more info). You’re getting a proven architecture that has matured over the past decade, a common operating system, and the only storage platform that leverages custom ASICs to give uncompromising performance even with the bells & whistles turned on.

The only compromise here is you had to read all of this and I didn’t give you many pretty pictures to look at.