(Standard disclaimer: HP covered my hotel and stuff while in Vegas, etc. etc.…)

I witnessed what I’d like to say is one of the most insane unveilings of a new server in history. It sort of mimicked the launch of an Apollo spacecraft: lots of audio and video from NASA, and then, when the system appeared, lots of compressed air/smoke (very, very loud) and dramatic music.

Here’s the full video in 1080p; beware, it is 230MB. I have 100Mbit of unlimited bandwidth connected to a fast backbone, so we will see how it goes.

Apollo is geared squarely at compute-bound HPC, and is the result of a close partnership between HP, Intel and the National Renewable Energy Laboratory (NREL).

The challenge HP set for itself was: what would it take to drive a million teraflops (one exaflop) of compute? They said that with today’s technology it would require one gigawatt of power and 30 football fields of space.
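To put those numbers in perspective, here is a quick back-of-the-envelope check of the efficiency that baseline implies. The figures are just the ones HP quoted on stage, and the function and variable names are mine, so treat it as a sketch rather than anything official.

```python
# Figures as quoted by HP at the launch; nothing here is measured.
TARGET_TERAFLOPS = 1_000_000     # "a million teraflops", i.e. one exaflop
POWER_WATTS = 1_000_000_000      # "one gigawatt"

FLOPS_PER_TERAFLOP = 10**12
FLOPS_PER_GIGAFLOP = 10**9


def gflops_per_watt(teraflops: int, watts: int) -> float:
    """Efficiency implied by a compute target and a power budget."""
    flops = teraflops * FLOPS_PER_TERAFLOP
    return flops / watts / FLOPS_PER_GIGAFLOP


baseline_efficiency = gflops_per_watt(TARGET_TERAFLOPS, POWER_WATTS)
print(f"Implied baseline: {baseline_efficiency:.1f} GFLOPS per watt")
```

In other words, the "today's technology" scenario works out to roughly one gigaflop per watt once power and cooling overhead are folded into the gigawatt.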

Apollo is supposed to help fix that, though the real savings are still limited by today’s technology; it’s not as if they were able to squeeze a tenfold improvement in performance out of the same power footprint. Intel said they were able to get, I want to say, 35% more performance out of the same power footprint using Apollo vs. (I believe) the blades they were using before. I am assuming the CPUs were about the same in both cases and the savings came mainly from the more efficient power and cooling design.

They built the system as a rack design. You probably haven’t been reading this blog that long, but four years ago I lightly criticized HP’s SL series for not being visionary enough, in that they were not building for the rack. The comparison I gave was with CloudRack, an SGI solution I was toying with at the time for a project.

Fast forward to today and HP has answered that, and then some, with a very sophisticated design that is integrated as an entire rack in the water-cooled Apollo 8000. They also have a mini version called the Apollo 6000 (if this product was available before today I had never heard of it, though I don’t follow HPC closely); Intel apparently already has something like 20,000 servers deployed on that platform.

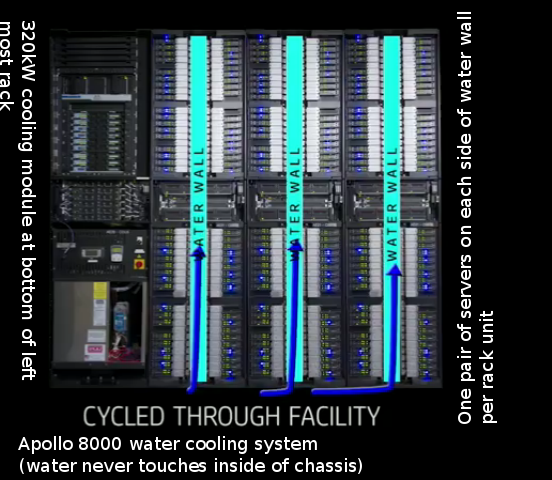

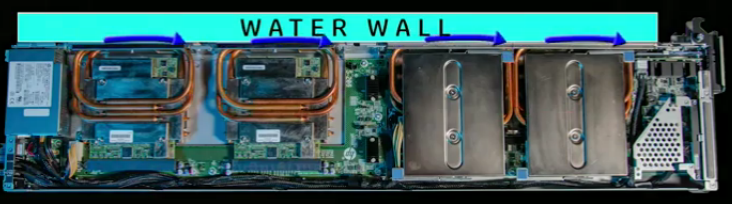

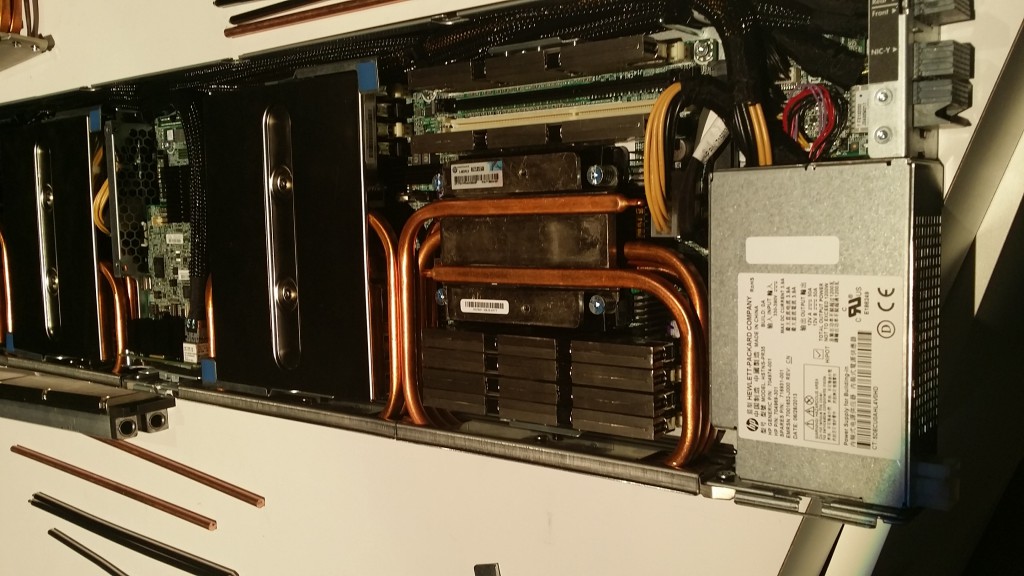

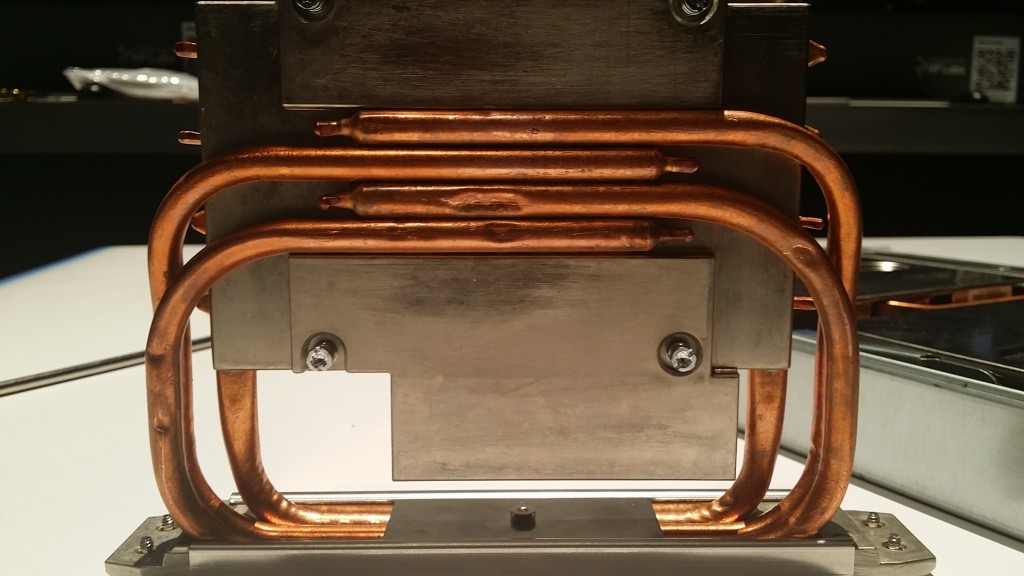

Anyway, one of the keys to this design is the water cooling. It’s not just any water cooling though: the water never gets into the server. They use heat pipes on the CPUs and GPUs to transfer the heat to what appears to be a heat sink of some kind on the outside of the chassis, which then “melds” with the rack’s water cooling system to carry the heat away from the servers. Power is also managed at the rack level. Don’t get me wrong, this is far more advanced than the SGI system of four years ago. HP is apparently not charging much of a premium for this platform either.

Their claims include:

- 4x the teraflops per square foot (vs air cooled servers)

- 4x density per rack per dollar (not sure what the per dollar means but..)

- Deployment possible within days (instead of “years”)

- More than 250 Teraflops per rack (the NREL Apollo 8000 system is 1.2 Petaflops in 18 racks …)

- Apollo 6000 offering 160 servers per rack, and Apollo 8000 having 144

- Some fancy new integrated management platform

- Up to 80kW powering the rack (less if you want redundancy; 10kW per module)

- One cable for iLO management of all systems in the rack

- Can run on water temps as high as 86 degrees F (30 C)
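A couple of those claims are easier to compare with a little arithmetic. The sketch below divides the NREL figure across its racks and breaks the 250 teraflop claim down per server. I am assuming all 18 NREL racks are compute racks (HP didn't say, and some could be cooling racks), and the variable names are mine.

```python
# Numbers taken from HP's claims above; none of this is measured.
NREL_PETAFLOPS = 1.2
NREL_RACKS = 18                   # assumption: every one is a compute rack
CLAIMED_TFLOPS_PER_RACK = 250     # "more than 250 teraflops per rack"
SERVERS_PER_RACK_8000 = 144

TFLOPS_PER_PETAFLOP = 1000

# What the NREL install averages per rack under the assumption above.
nrel_tflops_per_rack = NREL_PETAFLOPS * TFLOPS_PER_PETAFLOP / NREL_RACKS

# What each server would have to deliver to hit the headline claim.
claimed_tflops_per_server = CLAIMED_TFLOPS_PER_RACK / SERVERS_PER_RACK_8000

print(f"NREL average:      {nrel_tflops_per_rack:.1f} TFLOPS per rack")
print(f"Claim, per server: {claimed_tflops_per_server:.2f} TFLOPS")
```

So the NREL install averages well under the headline per-rack number. My guess is the 250 figure assumes a fully loaded rack with accelerators, but that is speculation on my part.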

The cooling system for the 8000 goes in a separate rack; it takes up half of that rack and serves a maximum of four server racks. If you need redundancy then I believe a second cooling unit is required, which means two racks. The cooling system weighs over 2,000 pounds by itself, so it appears unlikely that you’d be able to put two of them in a single cabinet.

The system takes in 480V AC and converts it to DC using up to eight redundant 10kW rectifiers in the middle of the rack.
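Here is a rough sketch of how redundancy would eat into that 80kW. HP didn't spell out the redundancy scheme, so I am assuming it means holding whole rectifier modules in reserve (N+1, N+2 and so on); the function name and its parameters are made up for illustration.

```python
def usable_rack_power_kw(modules: int = 8, module_kw: int = 10, spares: int = 0) -> int:
    """Power budget left after reserving `spares` rectifiers for redundancy.

    Assumes each spare is a whole module held in reserve, which is my guess
    at the scheme, not something HP confirmed.
    """
    if not 0 <= spares <= modules:
        raise ValueError("spares must be between 0 and the number of modules")
    return (modules - spares) * module_kw


for spares in range(3):
    label = "no redundancy" if spares == 0 else f"N+{spares}"
    print(f"{label:>13}: {usable_rack_power_kw(spares=spares)} kW")
```

Under that assumption a fully populated rack with one spare rectifier has 70kW to work with, and 60kW with two.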

NREL integrated the Apollo 8000 system with their building’s heating system, so the Apollo cluster helps heat the entire building and the heat is not wasted.

It looks as if SGI discontinued the product I was looking at four years ago (at the time for a Hadoop cluster). It supported up to 76 dual-socket servers in a rack, with a maximum of something like 30kW (I don’t believe any configuration at the time could draw more than 20kW), and had rack-based power distribution as well as rack-based (air) cooling. It seems to have been replaced by a newer product called InfiniteData Cluster, which can go up to 80 dual-socket servers in an air-cooled rack (though, unlike Apollo, GPUs are not supported).

This new system doesn’t mean a whole lot to me; I don’t deal with HPC so I may never use it. But the tech behind it seemed pretty neat, and obviously I was interested in HP finally answering my challenge to deploy a system based on an entire rack with rack-based power and cooling.

The other thing that stuck out to me was the new management platform. HP said it is yet another unique management platform, specific to Apollo, which was sort of confusing given that I had sat through what I thought was a very compelling preview of HP OneView (the latest version was announced today), HP’s new converged management interface. It seems strange to me that they would not integrate Apollo into that from the start, but I guess that’s what you get from a big company with teams working in parallel.

HP tries to justify this approach by saying there are several unique things in Apollo components so they needed a custom management package. I think they just didn’t have time to integrate with OneView, since there is no justification I can think of to not expose those management points via APIs that OneView can call/manage/abstract on behalf of the customer.

It sure looks pretty though (more so in person; I’m sure I’ll get a better pic of it on the showroom floor in normal lighting conditions, along with pics of the cooling system).

UPDATE – some pics of the compute stuff