I first heard that Fujitsu had storage maybe a year and a half ago. Someone told me Fujitsu was one company that was seriously interested in buying Exanet at the time, which caused me to go look at their storage; I had no idea they had storage systems. Even today I really never see anyone mention them anywhere. My 3PAR reps say they never encounter Fujitsu in the field (at least in these territories; they suspect that over in Europe they go head to head more often).

Anyways, EMC folks seem to be trying to attack the high end Fujitsu system, saying it’s not “enterprise”. In the end, the main leg EMC has to stand on for what in their eyes is “enterprise” is mainframe connectivity. Fujitsu rightly tries to debunk that myth, since there are a lot of organizations that consider themselves “enterprise” that don’t have any mainframes. It’s just stupid, but EMC doesn’t really have any other excuses.

What prompted me to write this, more than anything else, was this:

One can scale from one to eight engines (or even beyond in a short timeframe), from 16 to 128 four-core CPUs, from two to 16 backend- and front-end directors, all with up to 16 ports.

The four core CPUs is what gets me. What a waste! I have no doubt that in EMC’s (short time frame) they will be migrating to quad socket 10 core CPUs right? After all, unlike someone like 3PAR who can benefit from a purpose built ASIC to accelerate their storage, EMC has to rely entirely on software. After seeing SPC-1 results for HDS’s VSP, I suspect the numbers for VMAX wouldn’t be much more impressive.

My main point, and this just drives me mad, is these big manufacturers beating the Intel CPU drum and then not exploiting the platform to its fullest extent. Quad core CPUs came out in 2007. When EMC released the VMAX in 2009, apparently Intel’s latest and greatest was still quad core. But here we are, practically 2012, and they’re still not onto at LEAST hex core yet? This is Intel architecture, it’s not that complicated. I’m not sure what quad core CPUs specifically are in the VMAX, but the upgrade from Xeon 5500 to Xeon 5600 for the most part was:

- Flash bios (if needed to support new CPU)

- Turn box off

- Pull out old CPU(s)

- Put in new CPU(s)

- Turn box on

- Get back to work

That’s the point of using general purpose CPUs!! You don’t need to pour 3 years of R&D into something to upgrade the processor.

What I’d like to see, something I mentioned in a comment recently is a quad socket design for these storage systems. Modern CPUs have had integrated memory controllers for a long time now (well only available on Intel since the Xeon 5500). So as you add more processors you add more memory too. (Side note: the documentation for VMAX seems to imply a quad socket design for a VMAX engine but I suspect it is two dual socket systems since the Intel CPUs EMC is likely using are not quad-socket capable). This page claims the VMAX uses the ancient Intel 5400 processors, which if I remember right was the first generation quad cores I had in my HP DL380 G5s many eons ago. If true, it’s even more obsolete than I thought!

Why not 8 socket? Or more? Well, cost mainly. The R&D involved in an 8-socket design I believe is quite a bit higher, and the amount of physical space required is high as well. With quad socket blades commonplace, and even some vendors having quad socket 1U systems, the price point and physical size of quad socket designs are well within reach of storage systems.

So the point is on these high end storage systems you start out with a single socket populated on a quad socket board with associated memory. Want to go faster? Add another CPU and associated memory. Go faster still? Add two more CPUs and associated memory (though I think it’s technically possible to run 3 CPUs, and there have been 3 CPU systems in the past, it seems common/standard to add them in pairs). You’re spending probably at LEAST a quarter million for this system initially, probably more than that, so the incremental cost of R&D to go quad socket, given this is Intel after all, is minimal.
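To make the pay-as-you-grow idea concrete, here is a rough sketch of how cores and memory would scale as the sockets on a quad socket board get populated. The `Board` class and the cores-per-socket and memory-per-socket figures are placeholders I made up for illustration, not the specs of any actual array.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Board:
    """A hypothetical quad socket controller board."""
    max_sockets: int = 4
    cores_per_socket: int = 6      # assumed: hex core parts
    mem_gb_per_socket: int = 32    # assumed: memory hangs off each CPU's own controller

    def capacity(self, populated: int) -> tuple[int, int]:
        """Return (cores, memory in GB) for a given number of populated sockets."""
        if not 1 <= populated <= self.max_sockets:
            raise ValueError(f"populated sockets must be 1..{self.max_sockets}")
        return (populated * self.cores_per_socket,
                populated * self.mem_gb_per_socket)


board = Board()
for sockets in (1, 2, 4):
    cores, mem = board.capacity(sockets)
    print(f"{sockets} socket(s): {cores} cores, {mem} GB")
```

Because the memory controller lives on the CPU, each socket you populate brings its own memory along with it, which is why both numbers grow together.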

Currently VMAX goes to 8 engines, they say they will expand that to more. 3PAR took the opposite approach, saying while their system is not as clustered as a VMAX is (not their words), they feel such a tightly integrated system (theirs included) becomes more vulnerable to “something bad happening” that impacts the system as a whole, more controllers is more complexity. Which makes some sense. EMC’s design is even more vulnerable being that it’s so tightly integrated with the shared memory and stuff.

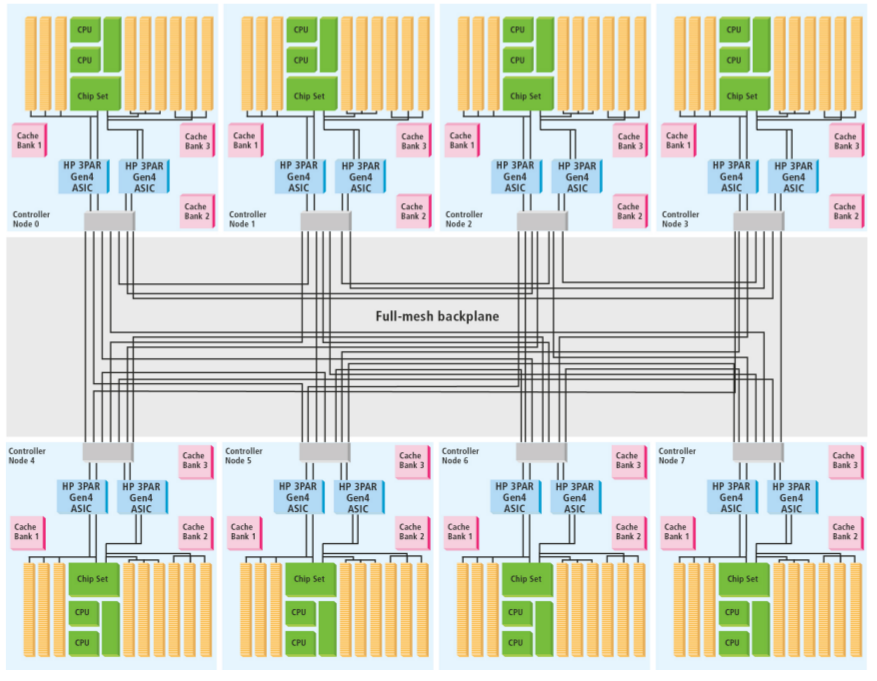

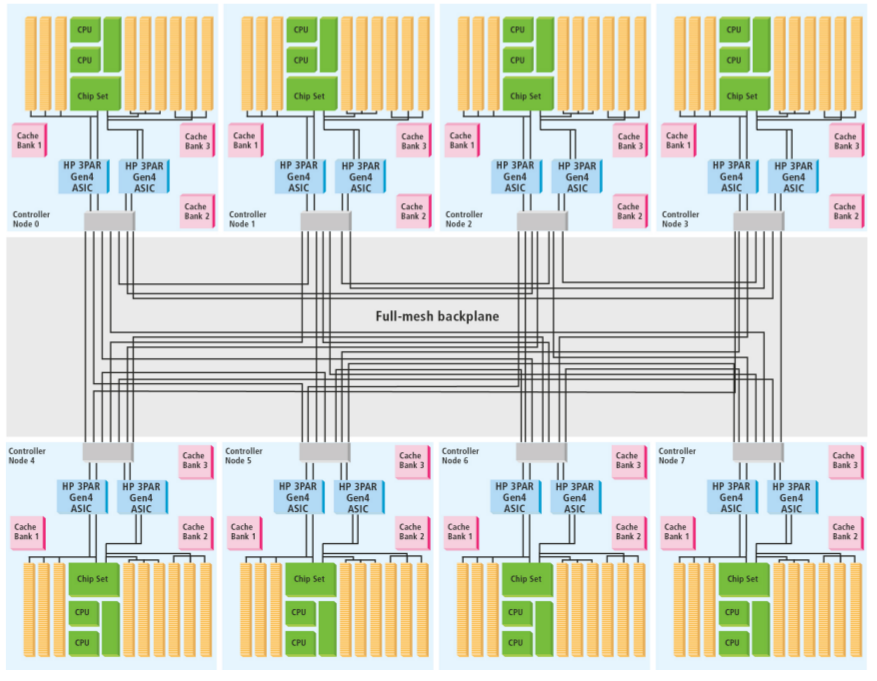

3PAR V-Class Cluster Architecture with low cost high speed passive backplane with point to point connections totalling 96 Gigabytes/second of throughput

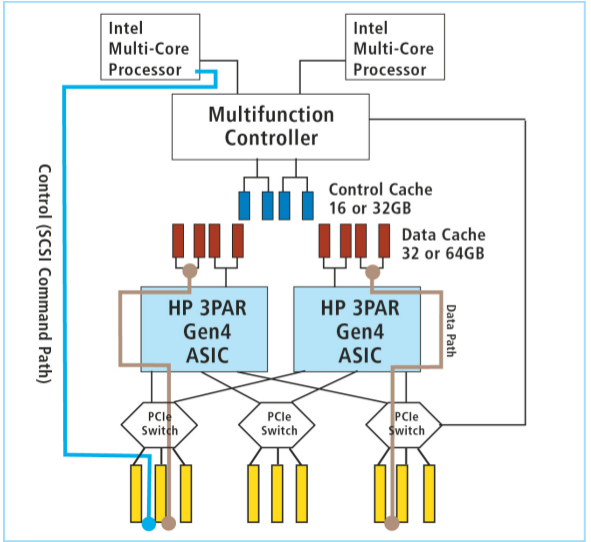

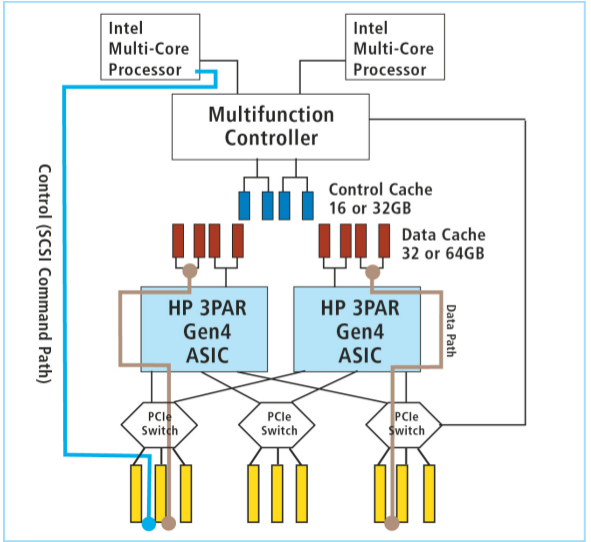

3PAR goes even further in their design to isolate things – like completely separating control cache which is used for the operating system that powers the controllers and for the control data on top of it, with the data cache, which as you can see in the diagram below is only connected to the ASICs, not to the Intel CPUs. On top of that they separate the control data flow from the regular data flow as well.

One reason I have never been a fan of “stacking” or “virtual chassis” on switches is the very same reason: I’d rather have independent components that are not tightly integrated, in the event “something bad” takes down the entire “stack”. Now if you’re running two independent stacks, so that one full stack can fail without an issue, then that works around the problem, but most people don’t seem to do that. The chances of such a failure happening are low, but they are higher than the chances of something causing all of the switches to fail if the switches were not stacked.
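A back-of-the-envelope way to look at this, with made-up probabilities: if each switch fails independently with probability `p_switch`, losing all `n` at once takes that probability to the nth power. A stack adds a shared failure mode (say a bad firmware push or a control plane bug) with its own probability `p_stack` that takes every member down together. The function names and numbers below are mine, purely for illustration.

```python
def p_all_down_independent(p_switch: float, n: int) -> float:
    """Probability that all n independent switches are down at once."""
    return p_switch ** n


def p_all_down_stacked(p_switch: float, n: int, p_stack: float) -> float:
    """Probability the whole stack is down: either the shared failure
    mode hits, or (absent that) every member fails on its own."""
    return p_stack + (1 - p_stack) * p_switch ** n


# Illustrative numbers only, not measured failure rates.
p_switch = 0.01   # assumed chance a single switch is down
p_stack = 0.001   # assumed chance of a stack-wide failure
n = 2

print(p_all_down_independent(p_switch, n))
print(p_all_down_stacked(p_switch, n, p_stack))
```

With these numbers the stack-wide event dominates: still rare, but about eleven times more likely than two independent switches dying together.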

One exception might be some problems related to STP which some people may feel they need when operating multiple switches. I’ll answer that by saying I haven’t used STP in more than 8 years, so there have been ways to build a network with lots of devices without using STP for a very long time now. The networking industry recently has made it sound like this is something new.

Same with storage.

So back to 3PAR. 3PAR changed their approach in their V-series of arrays, for the first time in the company’s history they decided to include TWO ASICs in each controller, effectively doubling the I/O processing abilities of the controller. Fewer, more powerful controllers. A 4-node V400 will likely outperform an 8-node T800. Given the system’s age, I suspect a 2-node V400 would probably be on par with an 8-node S800 (released around 2003 if I remember right).

3PAR V-Series ASIC/CPU/PCI/Memory Architecture

EMC is not alone, and not the worst abuser here though. I can cut them maybe a LITTLE slack given the VMAX was released in 2009. I can’t cut any slack to NetApp though. They recently released some new SPEC SFS results, which among other things, disclosed that their high end 6240 storage system is using quad core Intel E5540 processors. So basically a dual proc quad core system. And their lower end system is — wait for it — dual proc dual core.

Oh I can’t describe how frustrated that makes me, these companies touting general purpose CPUs and then going out of their way to cripple their systems. It would cost NetApp all of maybe what, $1200 to upgrade their low end box to quad cores? Maybe $2500 for both controllers? But no, they’d rather you spend an extra, what, $50,000-$100,000 to get that functionality?
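Putting those rough guesses side by side (these are my ballpark numbers from the paragraph above, not anyone’s price list):

```python
# Ballpark figures from the text; guesses, not quoted prices.
cpu_upgrade_both_controllers = 2_500      # USD, quad core CPUs for both controllers
next_model_premium = (50_000, 100_000)    # USD, low and high guess for the extra spend

for premium in next_model_premium:
    ratio = premium / cpu_upgrade_both_controllers
    print(f"${premium:,} extra is {ratio:.0f}x the cost of the CPU swap")
```

So even at the low end of my guess you would be paying about twenty times what the silicon costs.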

I have to knock NetApp more to some extent since these storage systems are significantly newer than the VMAX, but I knock them less because they don’t champion the Intel CPUs as much as EMC does, that I have seen at least.

3PAR is not a golden child either, their latest V800 storage system uses, wait for it, quad core processors as well. Which is just as disgraceful. I can cut 3PAR more slack because their ASIC is what provides the horsepower on their boxes, not the Intel processors, but still that is no excuse for not using at LEAST 6 core processors. While I cannot determine precisely which Intel CPUs 3PAR is using, I know they did not pick them for being ultra low power parts, since the clock speeds are 2.8GHz.

Storage companies aren’t alone here, load balancing companies like F5 Networks and Citrix do the same thing. Citrix is better than F5 in that they offer software “upgrades” on their platform that unlock additional throughput. Without the upgrade you have free rein over all of the CPU cores on the box, which allows you to run more expensive software features that would normally otherwise impact CPU performance. To do this on F5 you have to buy the next bigger box.

Back to Fujitsu storage for a moment, their high end box certainly seems like a very respectable system, with regards to paper numbers anyways. I found very interesting the comment on the original article that mentioned Fujitsu can run the system’s maximum capacity behind a single pair of controllers if the customer wanted to. Of course the controllers couldn’t drive all the I/O, but it is nice to see the capacity not so tightly tied to the controllers like it is on the VMAX or even on the 3PAR platform. Especially when it comes to SATA drives, which aren’t known for high amounts of I/O, higher end storage systems such as the recently mentioned HDS, 3PAR and even VMAX tap out in “maximum capacity” long before they tap out in I/O if you’re loading the system with tons of SATA disks. It looks like Fujitsu can get up to 4.2PB of space, leaving, again, HDS, 3PAR and EMC in the dust. (Capacity utilization is another story of course.)

With Fujitsu’s ability to scale the DX8700 to 8 controllers, 128 fibre channel interfaces, 2,700 drives and 512GB of cache that is quite a force to be reckoned with. No sub-disk distributed RAID, no ASIC acceleration, but I can certainly see how someone would be willing to put the DX8700 up against a VMAX.

EMC was way late to the 2+ controller hybrid modular/purpose built game and is still playing catch up. As I said to Dell last year, put your money where your mouth is and publish SPC-1 results for your VMAX, EMC.

With EMC so in love with Intel I have to wonder how hard they had to fight off Intel from encouraging EMC to use the Itanium processor in their arrays instead of Xeons. Or has Intel given up completely on Itanium now? (Which, again, we have to thank AMD for: without AMD’s x86-64 extensions the Xeon processor line would have been dead and buried many years ago.)

For insight into how a 128-CPU core Intel-based storage system may perform in SPC-1, you can look to this system from China.

(I added a couple diagrams, I don’t have enough graphics on this site)