I first heard that Fujitsu had storage maybe a year and a half ago. Someone told me that Fujitsu was one company that was seriously interested in buying Exanet at the time, which caused me to go look at their storage; I had no idea they had storage systems. Even today I really never see anyone mention them anywhere. My 3PAR reps say they never encounter Fujitsu in the field (at least in these territories; they suspect that over in Europe they go head to head more often).

Anyways, EMC folks seem to be trying to attack the high end Fujitsu system, saying it’s not “enterprise”. In the end, the main leg EMC has to stand on for what in their eyes is “enterprise” is mainframe connectivity, a myth Fujitsu rightly tries to debunk, since there are a lot of organizations that consider themselves “enterprise” that don’t have any mainframes. It’s just stupid, but EMC doesn’t really have any other excuses.

What prompted me to write this, more than anything else, was this:

One can scale from one to eight engines (or even beyond in a short timeframe), from 16 to 128 four-core CPUs, from two to 16 backend- and front-end directors, all with up to 16 ports.

The four core CPUs is what gets me. What a waste! I have no doubt that in EMC’s (short time frame) they will be migrating to quad socket 10 core CPUs right? After all, unlike someone like 3PAR who can benefit from a purpose built ASIC to accelerate their storage, EMC has to rely entirely on software. After seeing SPC-1 results for HDS’s VSP, I suspect the numbers for VMAX wouldn’t be much more impressive.

My main point is this, and it just drives me mad: these big manufacturers beat the Intel CPU drum and then don’t exploit the platform to its fullest extent. Quad core CPUs came out in 2007. When EMC released the VMAX in 2009, apparently Intel’s latest and greatest was still quad core. But here we are, practically 2012, and they’re still not onto at LEAST hex core yet? This is Intel architecture, it’s not that complicated. I’m not sure what quad core CPUs specifically are in the VMAX, but the upgrade from Xeon 5500 to Xeon 5600 for the most part was:

- Flash bios (if needed to support new CPU)

- Turn box off

- Pull out old CPU(s)

- Put in new CPU(s)

- Turn box on

- Get back to work

That’s the point of using general purpose CPUs!! You don’t need to pour 3 years of R&D into something to upgrade the processor.

What I’d like to see, something I mentioned in a comment recently is a quad socket design for these storage systems. Modern CPUs have had integrated memory controllers for a long time now (well only available on Intel since the Xeon 5500). So as you add more processors you add more memory too. (Side note: the documentation for VMAX seems to imply a quad socket design for a VMAX engine but I suspect it is two dual socket systems since the Intel CPUs EMC is likely using are not quad-socket capable). This page claims the VMAX uses the ancient Intel 5400 processors, which if I remember right was the first generation quad cores I had in my HP DL380 G5s many eons ago. If true, it’s even more obsolete than I thought!

Why not 8 socket? Or more? Well, cost mainly. The R&D involved in an 8-socket design is, I believe, quite a bit higher, and the amount of physical space required is high as well. With quad socket blades commonplace, and even some vendors having quad socket 1U systems, the price point and physical size of quad socket designs are well within reach of storage systems.

So the point is, on these high end storage systems you start out with a single socket populated on a quad socket board, with associated memory. Want to go faster? Add another CPU and associated memory. Go faster still? Add two more CPUs and associated memory (though I think it’s technically possible to run 3 CPUs, and there have been 3 CPU systems in the past, it seems common/standard to add them in pairs). You’re spending probably at LEAST a quarter million for this system initially, probably more than that; the incremental cost of R&D to go quad socket, given this is Intel after all, is minimal.
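To put rough numbers on that upgrade path, here is a minimal Python sketch of how cores and memory would grow as sockets get populated on a quad socket board. The names `ControllerConfig` and `upgrade_path` and the 32GB-per-socket figure are made up for illustration; they are not from any vendor’s spec sheet.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ControllerConfig:
    """One step on the upgrade path of a hypothetical quad socket controller."""
    sockets_populated: int
    cores_per_socket: int
    mem_gb_per_socket: int  # assumption: memory hangs off each CPU's own controller

    @property
    def total_cores(self) -> int:
        return self.sockets_populated * self.cores_per_socket

    @property
    def total_mem_gb(self) -> int:
        return self.sockets_populated * self.mem_gb_per_socket


def upgrade_path(cores_per_socket: int, mem_gb_per_socket: int = 32,
                 board_sockets: int = 4) -> list[ControllerConfig]:
    """Start with one CPU, add a second, then add CPUs in pairs."""
    steps = [n for n in (1, 2, 4) if n <= board_sockets]
    return [ControllerConfig(n, cores_per_socket, mem_gb_per_socket) for n in steps]


if __name__ == "__main__":
    for cores in (4, 6, 10):  # quad core, hex core, 10 core parts
        for cfg in upgrade_path(cores):
            print(f"{cfg.sockets_populated} x {cores}-core: "
                  f"{cfg.total_cores} cores, {cfg.total_mem_gb}GB")
```

The only point of the sketch is that memory scales with sockets when the memory controller is on the CPU, so each added processor brings its own capacity along with it.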

Currently VMAX goes to 8 engines, they say they will expand that to more. 3PAR took the opposite approach, saying while their system is not as clustered as a VMAX is (not their words), they feel such a tightly integrated system (theirs included) becomes more vulnerable to “something bad happening” that impacts the system as a whole, more controllers is more complexity. Which makes some sense. EMC’s design is even more vulnerable being that it’s so tightly integrated with the shared memory and stuff.

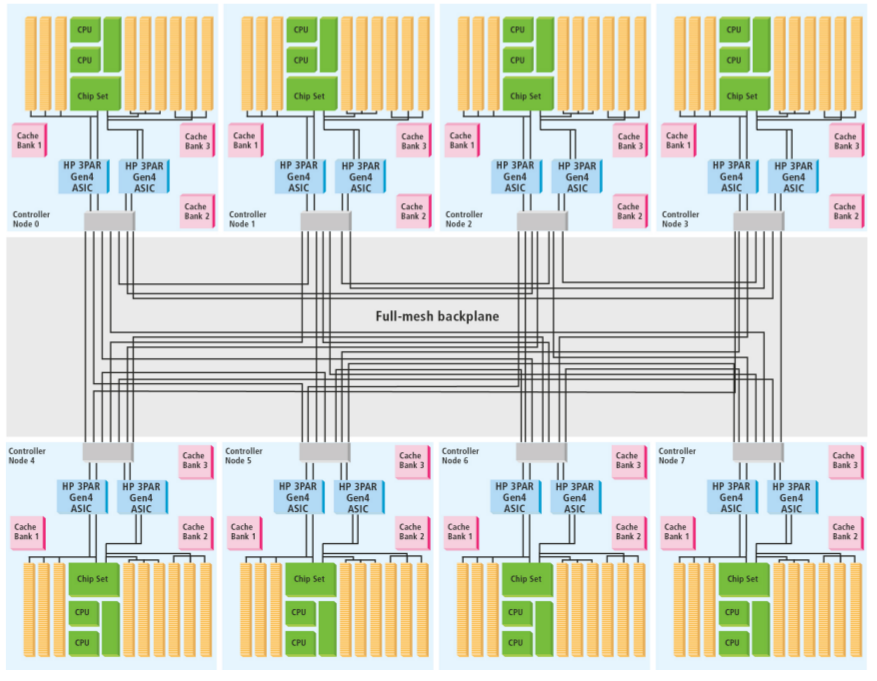

3PAR V-Class Cluster Architecture with low cost high speed passive backplane with point to point connections totalling 96 Gigabytes/second of throughput

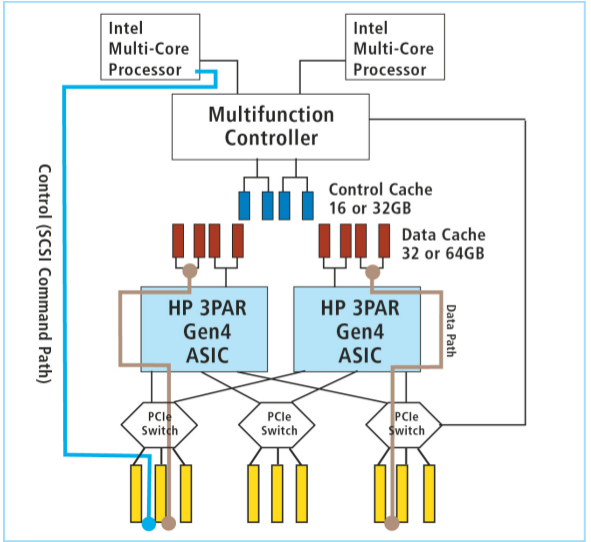

3PAR goes even further in their design to isolate things, like completely separating the control cache, which is used for the operating system that powers the controllers and for the control data on top of it, from the data cache, which as you can see in the diagram is only connected to the ASICs, not to the Intel CPUs. On top of that they separate the control data flow from the regular data flow as well.

One reason I have never been a fan of “stacking” or “virtual chassis” on switches is the very same reason: I’d rather have independent components that are not tightly integrated, in the event “something bad” takes down the entire “stack”. Now if you’re running two independent stacks, so that one full stack can fail without an issue, then that works around the problem, but most people don’t seem to do that. The chances of such a failure happening are low, but they are higher than the chances of something causing all of the switches to fail if the switches were not stacked.

One exception might be some problems related to STP which some people may feel they need when operating multiple switches. I’ll answer that by saying I haven’t used STP in more than 8 years, so there have been ways to build a network with lots of devices without using STP for a very long time now. The networking industry recently has made it sound like this is something new.

Same with storage.

So back to 3PAR. 3PAR changed their approach in their V-series of arrays, for the first time in the company’s history they decided to include TWO ASICs in each controller, effectively doubling the I/O processing abilities of the controller. Fewer, more powerful controllers. A 4-node V400 will likely outperform an 8-node T800. Given the system’s age, I suspect a 2-node V400 would probably be on par with an 8-node S800 (released around 2003 if I remember right).

EMC is not alone, and not the worst abuser here though. I can cut them maybe a LITTLE slack given the VMAX was released in 2009. I can’t cut any slack to NetApp though. They recently released some new SPEC SFS results, which among other things, disclosed that their high end 6240 storage system is using quad core Intel E5540 processors. So basically a dual proc quad core system. And their lower end system is — wait for it — dual proc dual core.

Oh I can’t describe how frustrated that makes me, these companies touting general purpose CPUs and then going out of their way to cripple their systems. It would cost NetApp all of maybe, what, $1200 to upgrade their low end box to quad cores? Maybe $2500 for both controllers? But no, they’d rather you spend an extra, what, $50,000-$100,000 to get that functionality?

I have to knock NetApp more to some extent since these storage systems are significantly newer than the VMAX, but I knock them less because they don’t champion the Intel CPUs as much as EMC does, that I have seen at least.

3PAR is not a golden child either: their latest V800 storage system uses, wait for it, quad core processors as well. Which is just as disgraceful. I can cut 3PAR more slack because their ASIC is what provides the horsepower on their boxes, not the Intel processors, but still that is no excuse for not using at LEAST 6 core processors. While I cannot determine precisely which Intel CPUs 3PAR is using, I know they didn’t pick them for being ultra low power, since the clock speeds are 2.8GHz.

Storage companies aren’t alone here; load balancing companies like F5 Networks and Citrix do the same thing. Citrix is better than F5 in that they offer software “upgrades” on their platform that unlock additional throughput. Even without the upgrade you have free rein over all of the CPU cores on the box, which lets you run more expensive software features that would otherwise impact CPU performance. To do this on F5 you have to buy the next bigger box.

Back to Fujitsu storage for a moment: their high end box certainly seems like a very respectable system, with regards to paper numbers anyways. I found the comment on the original article very interesting, the one that mentioned Fujitsu can run the system’s maximum capacity behind a single pair of controllers if the customer wanted to. Of course the controllers couldn’t drive all the I/O, but it is nice to see the capacity not so tightly tied to the controllers like it is on the VMAX or even on the 3PAR platform. Especially when it comes to SATA drives, which aren’t known for high amounts of I/O, higher end storage systems such as the recently mentioned HDS, 3PAR and even VMAX tap out in “maximum capacity” long before they tap out in I/O if you’re loading the system with tons of SATA disks. It looks like Fujitsu can get up to 4.2PB of space, leaving, again, HDS, 3PAR and EMC in the dust. (Capacity utilization is another story of course).

With Fujitsu’s ability to scale the DX8700 to 8 controllers, 128 fibre channel interfaces, 2,700 drives and 512GB of cache that is quite a force to be reckoned with. No sub-disk distributed RAID, no ASIC acceleration, but I can certainly see how someone would be willing to put the DX8700 up against a VMAX.

EMC was way late to the 2+ controller hybrid modular/purpose built game and is still playing catch up. As I said to Dell last year, put your money where your mouth is and publish SPC-1 results for your VMAX, EMC.

With EMC so in love with Intel, I have to wonder how hard they had to fight off Intel from encouraging EMC to use the Itanium processor in their arrays instead of Xeons. Or has Intel given up completely on Itanium now? (Which, again, we have to thank AMD for: without AMD’s x86-64 extensions the Xeon processor line would have been dead and buried many years ago.)

For insight into how a 128-CPU core Intel-based storage system may perform in SPC-1, you can look to this system from China.

(I added a couple diagrams, I don’t have enough graphics on this site)

Nate – interesting topic. Perhaps this quad-core limitation is not the storage vendors’ choice but a lack of one. Intel does have a line of “Storage Xeons” that include features such as:

– HW-based RAID engine to offload RAID calculations (XOR/P+Q) from the core

– Integrated PCI Express non-transparent bridging for redundant systems

– Asynchronous DRAM Self-Refresh (ADR) to preserve critical data in RAM during a power fail
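For readers unfamiliar with what the RAID engine in that list offloads, the XOR (P parity) part can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not how Intel’s hardware or any array implements it; the function names are made up, and the Q half of P+Q (which needs Galois field math) is not shown.

```python
from functools import reduce
from operator import xor


def xor_parity(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks (the P parity of a stripe)."""
    if not blocks:
        raise ValueError("need at least one block")
    if any(len(b) != len(blocks[0]) for b in blocks):
        raise ValueError("all blocks must be the same length")
    # zip(*blocks) walks the blocks column by column, one byte from each.
    return bytes(reduce(xor, column) for column in zip(*blocks))


def rebuild_missing(surviving: list[bytes], parity: bytes) -> bytes:
    """Recover the single missing data block from the survivors plus parity."""
    return xor_parity(surviving + [parity])


if __name__ == "__main__":
    stripe = [b"AAAA", b"BBBB", b"CCCC"]
    p = xor_parity(stripe)
    # Pretend the middle disk died and rebuild its block.
    print(rebuild_missing([stripe[0], stripe[2]], p) == stripe[1])
```

Every write to a parity RAID stripe involves this kind of calculation, which is why doing it in dedicated silicon instead of on the general purpose cores is attractive.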

More info:

http://www.intel.com/design/intarch/iastorage.htm

ftp://download.intel.com/embedded/processor/prez/SF09_EMBS001_100.pdf

I only see the 5500 series listed on the site, indicating no 6+ core CPUs.

Andy

Comment by Andy G — November 8, 2011 @ 10:00 am

I had no idea Intel had that kind of CPU! That is interesting indeed. However at least for NetApp, and the original VMAX neither system was using that CPU (C55xx). I suspect the storage companies may be able to get better performance (or more likely better features) by doing it entirely in software. I’m not sure though.

As for the 6-core 5500s, yeah that is true technically the 5500 is a 4-core processor. But the 5600 is 6-core, and is a drop-in replacement for the 5500 (unless the manufacturer was stupid and for some reason built the bios or something in a way that it would not support a 5600, I’m not aware of any that was in that situation though).

Thanks for the Intel link I will look at it more, and thanks for the post!!

Comment by Nate — November 8, 2011 @ 10:20 am

That is true, I have not seen information indicating the use of the Storage Xeon line of CPU’s.

You may wish to re-evaluate the statement regarding better performance in software given the recently released 3Par 10000 Series SPC-1 performance results 🙂

http://h30507.www3.hp.com/t5/Around-the-Storage-Block-Blog/A-deeper-look-at-the-HP-3PAR-SPC-1-results/ba-p/101263

As for the 5500 upgrade to 5600 path, your characterization is not that out of line. I have an HP Proliant ML150 G6 currently populated with a pair of E5540’s. The ML150 uses the Intel 5500 chipset that supports the 5600 series Xeon’s but HP does not support the 5600 series in this configuration. No BIOS support means no microcode and bad things can happen when the CPU is not properly identified.

Andy

Comment by Andy G — November 8, 2011 @ 10:51 am

BTW, thanks for the VSP SPC-1 Result post. You’d think I would have remembered where I read that and still linked the 3Par results 🙂

Cheers

Andy

Comment by Andy G — November 8, 2011 @ 11:00 am

I don’t understand the question about re-evaluating my statement on better performance in software? What I was referring to was better performance in software on the Xeons than the “Intel RAID offload” hardware in that special Intel chip. I don’t know if that is the case or not it’s just speculation as to why EMC and NetApp and others might not be using that special chip.

Comment by Nate — November 8, 2011 @ 11:13 am

Apologies for not connecting the dots clearly.

The basis of my comparison is that the 3Par ASIC performs the RAID calculations, similar in function to the hardware in the Storage Xeons, and performs better than the purely software solutions.

Therefore I would expect similar results comparing a Storage Xeon against a standard Xeon. It would be my expectation that between Xeon models (apples-to-apples: cache, bus, memory..) the Storage Xeon offers greater overall performance in a storage system by offloading the RAID calculations. Otherwise why would Intel invest the effort to offer said product?

Your thoughts?

Andy

Comment by Andy G — November 8, 2011 @ 11:23 am

It is certainly a reasonable assumption to me. The only caveat is the efficiency of such code, both in software and hardware. The hardware acceleration could be a tiny piece of silicon that has no horsepower. There’s been, I don’t want to call it a trend, but a development in recent years where more and more people are leaning towards software RAID on lower end systems, because the cheaper hardware RAID cards don’t perform as well as running the RAID in software, and a good hardware RAID card does cost some $, especially if you’re looking to get battery backed cache on it.

Thanks for the idea for a new blog post at some point “World’s largest storage vendor touts x86 CPUs from world’s largest CPU maker are key to storage future, World’s largest CPU maker touts hardware acceleration as key to improving storage performance”

Ok the title needs to be shortened a bit but still it is funny!

Comment by Nate — November 8, 2011 @ 11:50 am

That does make you chuckle doesn’t it.

What we need are “Storage Atom’s” for cheap NAS boxes 🙂

Comment by Andy G — November 8, 2011 @ 12:00 pm

Intel has you covered there

http://newsroom.intel.com/community/intel_newsroom/blog/2010/08/16/intel-atom-processors-further-expand-into-storage-appliances-for-homes-small-businesses

“Since Intel’s initial foray in this area in March, leading storage manufacturers, including Acer*, Cisco*, LaCie*, LG Electronics*, NETGEAR*, QNAP*, Super Micro*, Synology* and Thecus* have announced products based on the energy-efficient Intel Atom processor platform.

[..]

The new Intel Atom processors (D425 and D525) are paired with the Intel® 82801 IR I/O Controller that delivers the input/output (I/O) connectivity to satisfy the growing throughput demands of leading storage vendors. Both additions to the storage platform offer the flexibility to support Microsoft Windows Home Server* and open source Linux operating systems.”

I recall reading an article about those chips not long ago, ok maybe it was longer ago than I thought I think this was it http://www.theregister.co.uk/2010/08/18/faster_storage_atoms/

Comment by Nate — November 8, 2011 @ 12:05 pm

I think that is a misnomer. Intel bundled an existing Atom with an existing south-bridge.

http://ark.intel.com/products/49490

http://ark.intel.com/products/31894/Intel-82801IR-IO-Controller

There is no indication of any “special sauce” in that combination. They addressed the “growing throughput demands” with more MHz 🙂 (On an in-order CPU no less, thanks Intel).

How about Atom with hardware RAID offloading + some form of sub-LUN tiering software ala LSI CacheCade (http://www.lsi.com/channel/marketing/Pages/LSI-MegaRAID-CacheCade-Pro-2.0-software.aspx)

Then I’d be interested.

Comment by Andy G — November 8, 2011 @ 1:35 pm

that is true! I believe on the latest Intel chipsets(?) they have the ability to use flash as a cache, not sure if it is read or write or both. Heard it’s pretty good. I have one of the Seagate Hybrid drives in my laptop and it works well too. I was trying to hold out to see if Seagate would come out with a drive that was 1TB in size with 8GB of SLC cache, but I ended up caving and buying the 500GB/4GB version. It was cheap – $99 if I recall right – and there’s a pretty noticeable difference in performance vs my earlier 7200RPM disk.

Comment by Nate — November 8, 2011 @ 1:39 pm

Per your HP ML box above that you could not upgrade to 5600, that is certainly unfortunate and I wouldn’t expect that from HP. Though it reminds me of a similar experience on the DL380G5s. I bought a DL380G5 with dual core CPUs a long time ago(even though quad core was available) specifically for optimal licensing with Oracle Enterprise Edition(also with the intention of being able to go quad core later).

Later came, and we migrated to Oracle Standard edition and thus the licensing was basically socket-based at that point – unlimited cores/socket. So I went back to HP and ordered the quad core upgrade kit for the server. I even had HP support install it — only to have it fail. It didn’t work. I spent a good week on/off with HP support only to eventually figure out that the particular model# of DL380G5 did not support quad core CPUs. So I had to have the motherboard replaced for extra cost (in the end it was still worth it 5-10x over due to the Oracle license savings).

I noticed today when I pasted the link to the DL380G5 in my original post that HP has a note on the page saying some models aren’t upgradeable to quad core.. If they only had known that in 2007 it would have saved me a ton of pain 🙁

I don’t know what the limitation was in the models that could not be upgraded, if it had a different chipset, or some other component that was not compatible.

Comment by Nate — November 8, 2011 @ 1:48 pm

hehe, I forgot about that Intel solution. If only it worked on ESX(i).

The Seagate XT’s are interesting but considering the write-through nature, not an ideal solution for NAS where write-back would be preferred.

Comment by Andy G — November 8, 2011 @ 1:51 pm

yeah that is true, write back would be nicer, but also more dangerous. You really have to have your stuff working right with that sort of setup, so I can understand Seagate erring on the side of caution and just having the SSD be a read cache. I’ve only had 1 issue with the drive: when I was re-installing Ubuntu on my laptop I was hammering the disk really hard and I think I caused the disk to go offline. The behavior was very strange. iostat wasn’t yet installed so I couldn’t run that, but I could not write any data to the disk, it would just hang, yet I could still do read operations, I assume for stuff that was in the SSD cache. Ended up having to power cycle and haven’t had the issue since. I have read about other people having issues with the drives, so perhaps I have been lucky so far.

what an active thread! i love it.

thanks for all the posts

Comment by Nate — November 8, 2011 @ 2:48 pm

Agreed that write-back should not be the default on a consumer based device. I believe the Intel caching solution defaults to write-through as well with the option to enable write-back.
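The trade-off between the two policies discussed in this thread can be shown with a toy model in Python. This is a deliberately simplified sketch, not how the Seagate drives or Intel’s caching actually work internally; the `CachedDisk` class and its methods are invented for illustration.

```python
class CachedDisk:
    """Toy block device with a volatile cache in front of persistent storage."""

    def __init__(self, write_back: bool) -> None:
        self.write_back = write_back
        self.cache: dict[int, bytes] = {}  # lost on power failure
        self.disk: dict[int, bytes] = {}   # survives power failure
        self.dirty: set[int] = set()       # cached blocks not yet on disk

    def write(self, lba: int, data: bytes) -> None:
        self.cache[lba] = data
        if self.write_back:
            # Acknowledge immediately; the block reaches disk only on flush().
            self.dirty.add(lba)
        else:
            # Write-through: the block is on disk before the write returns.
            self.disk[lba] = data

    def flush(self) -> None:
        for lba in sorted(self.dirty):
            self.disk[lba] = self.cache[lba]
        self.dirty.clear()

    def power_loss(self) -> list[int]:
        """Drop the cache and report which blocks never made it to disk."""
        lost = sorted(self.dirty)
        self.cache.clear()
        self.dirty.clear()
        return lost


if __name__ == "__main__":
    for mode in (False, True):
        dev = CachedDisk(write_back=mode)
        dev.write(100, b"important")
        print("write-back" if mode else "write-through", "lost:", dev.power_loss())
```

Write-back gets its speed from acknowledging before the data is persistent, which is exactly why it wants a battery or some other protection behind it.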

The problem I have is that I want enterprise level features at consumer level pricing 😉

Comment by Andy G — November 8, 2011 @ 3:04 pm

yes, don’t we all.. it wasn’t easy for me to swallow the costs of a 3ware 9650SE with battery backup unit for home use(then another 3ware 9750+BBU for my co-lo)..I think it was around $500/ea or something. hopefully they’ll last a good long time! I remember the first hardware SCSI RAID card I had, I think it was an Ultra wide, and it had a grand total of 4MB of cache on it (write through – no bbu). worked very well, never had a drive failure on those cards so never got to really test it. Eventually retired the setup since 9GB disks weren’t too efficient and noisy as hell!

Comment by Nate — November 8, 2011 @ 3:13 pm

Definitely understand that, I was in the same boat when I went w/ a Smart Array P410 w/ 512MB BBWC. One of the best and least appreciated purchases for a lab to balance out a system configuration.

Arguably, the flip side is that adding fast SSDs can mitigate not having a good RAID controller, and that you shouldn’t expect miracles even w/ one when using SATA spindles.

Comment by Andy G — November 8, 2011 @ 3:48 pm

http://thessdreview.com/our-reviews/sata-3/ocz-synapse-cache-64gb-ssd-review-top-caching-solution-at-a-great-price/

Comment by Andy G — November 8, 2011 @ 3:55 pm

OCZ has some flashy(no pun intended) products to be sure but I’m hesitant to use them myself it seems they have had more firmware issues than most other SSD companies.

I have a CORSAIR CMFSSD-64GBG2D SSD in my “main” system at home, and it is buggy too. I think its write cache is locked in the “on” state. A few months ago I had my UPS battery finally kick the bucket and my system lost power. I spent the next 2 hours trying to get the system to boot again; there was quite a bit of corruption on the SSD. I later saw this in the logs:

[ 3.212950] sd 2:0:0:0: [sdc] Write cache: enabled, read cache: enabled, doesn’t support DPO or FUA

No matter what I’ve tried I cannot seem to turn off the write cache, assuming that is the issue. I was able to get the system to boot again though, after going into rescue mode and deleting a bunch of corrupted data. There was one directory on the file system in the /lost+found folder that until I actually deleted the data from the directory from recovery mode anything that touched the directory instantly caused data corruption and the file system to go to read only mode.

I replaced the UPS eventually, so I can be lazy again about finding a real solution like switching the SSD out or finding a good way to turn off the write cache. All of my “important” data is on my 3ware RAID array (and for the most part replicated off site to my co-lo on another 3ware RAID array, though that process is still manual). I just got the SSD in the hopes it would make things feel faster. Apparently it’s not a very fast SSD; I really don’t notice much difference. I suspect if I just put my OS on the 3ware card with the 4x2TB disks in RAID 10 + 256MB of write back cache it would probably feel plenty snappy enough, but to do that means I have to do work and I am trying to master the art of procrastination.

Comment by Nate — November 8, 2011 @ 4:27 pm

[…] it certainly seems like a good system when put up against at least the VSP, and probably the V-MAX […]

Pingback by IBM shows it still has some horses left « TechOpsGuys.com — February 3, 2012 @ 11:17 am

[…] have bitched and griped in the past about how some storage companies waste their customer's time, money and resources by […]

Pingback by Oracle not afraid to leverage Intel architecture « TechOpsGuys.com — April 20, 2012 @ 11:29 am

[…] 8 core CPUs instead of 10-core which Intel of course has had for some time. You know how I like more cores! The answer was two fold to this. The primary reason was cooling(the enclosure as is has two […]

Pingback by HP Storage Tech Day « TechOpsGuys.com — July 30, 2013 @ 2:10 am