This just hit me a few seconds ago and gave me something else to write about, so here goes.

Oracle recently released Solaris 11, the first major rev to Solaris in many, many years. I remember using Solaris 10 back in 2005. Wow, it’s been a while!

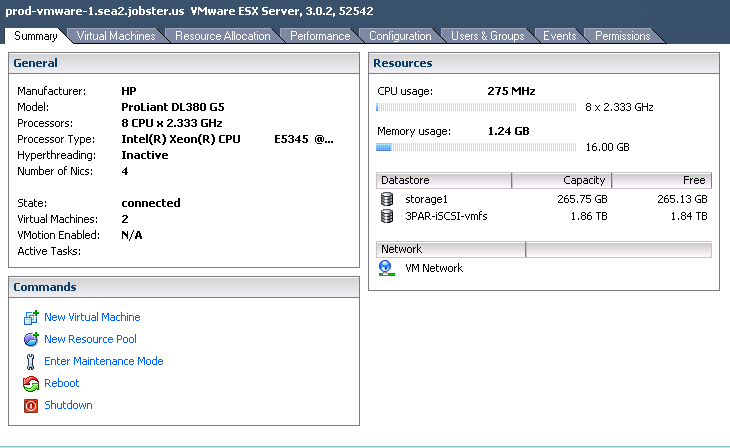

They’re calling it the first cloud OS. I can’t say I really agree with that: vSphere, and even ESX before it, have been more cloudy than Solaris for many years now, and remain so today.

While their Xen-based Oracle VM is still included in Solaris 11, the focus clearly seems to be Solaris Zones, which, as far as I know, are a more advanced version of User-mode Linux (which seems to be abandoned now?).

Zones and UML are nothing new; Zones were first released more than six years ago. They certainly take a different approach from a full hypervisor and so carry less overhead, but overall I believe it is an outdated approach to utility computing (using the term cloud computing makes me feel sick).

> Oracle Solaris Zones virtualization scales up to hundreds of zones per physical node at a 15x lower overhead than VMware and without artificial limits on memory, network, CPU and storage resources.

It’s an interesting strategy, and a fairly unique one in today’s world, so it should give Oracle some differentiation. I have been following the Xen bandwagon off and on for many years and never felt it was a compelling platform without a rewrite. Red Hat, SuSE and several other open source folks have basically abandoned Xen at this point, and now it seems Oracle is shifting focus away from Xen as well.

I don’t see many organizations gravitating towards Solaris Zones unless they are already Solaris users (or at least have Solaris expertise in house); if they haven’t switched by now…

> New, integrated network virtualization allows customers to create high-performance, low-cost data center topologies within a single OS instance for ultimate flexibility, bandwidth control and observability.

The terms “ultimate flexibility” and “single OS instance” seem to be in conflict here.

Modern hypervisors are now efficient enough that the overhead doesn’t matter in probably 98% of cases. The other 2% can be handled by running jobs on physical hardware. I still don’t believe I would run a hypervisor for workloads that are truly hardware bound, ones that really exploit the performance of the underlying hardware. Those are few and far between outside of specialist niches these days, though. I had one about a year and a half ago, but haven’t come across one since.