I came across this yesterday: both a video and, more importantly, an in-depth report on the about-to-be-released Black Diamond X-series switch. I have written about this topic a few times and don’t have much that is really new, but then I ran across this PDF, which has something I have been looking for – a better diagram of how this next generation fabric is hooked up.

Up until now, most (all?) chassis switches have relied on backplanes or, in more modern systems, mid planes to transmit electrical signals between the modules in the chassis.

Something I learned a couple of years ago (I’m not an electrical engineer) is that there are physical limits as to how fast you can push those electrons over those back and mid planes. There are serious distance limitations, which make the engineering ever more complicated the faster you push the system. Here I was thinking just crank up the clock speeds and make it go faster, but apparently it doesn’t work quite that way 🙂

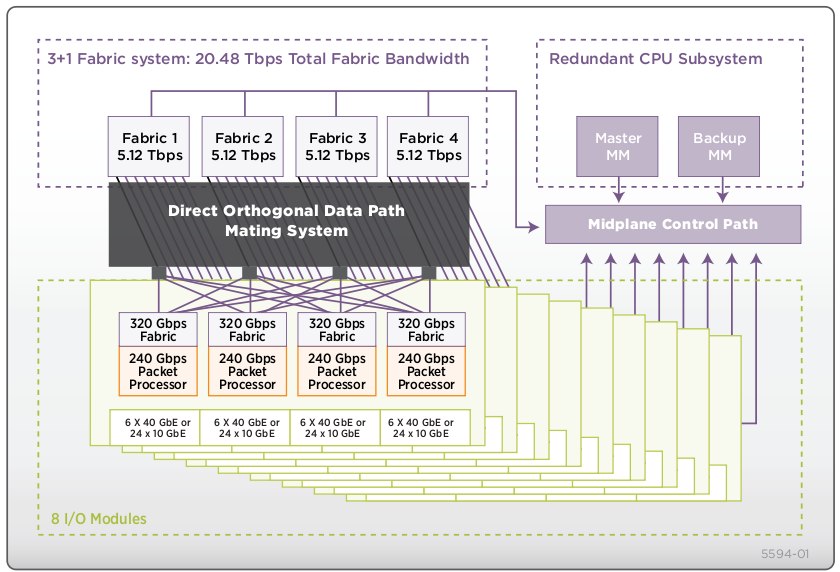

For the longest time all of Extreme’s products were backplane based. Then, a couple of years ago, they released a mid plane based product, the Black Diamond 20808. This product was discontinued earlier this year when the X-series was announced. The 20808 had (in my simple mind) a similar design to what Force10 had been doing for many years – basically N+1 switch fabric modules (I believe the 20808 could go to something like 5 fabric modules). All of Extreme’s previous switches had what they called MSMs, or Management Switch Modules. These were combination switch fabric and management modules, with a maximum of two per system, each providing half of the switch’s fabric capacity. Some other manufacturers like Cisco separated out their switch fabric from their management module. Having separate fabric modules really doesn’t buy you much when you only have two modules in the system. But if your architecture can go to many more (I seem to recall Force10 at one point having something like 8), then of course you can get faster performance. Another key point in the design is having separate slots for your switch fabric modules so they don’t consume space that would otherwise be used by Ethernet ports.

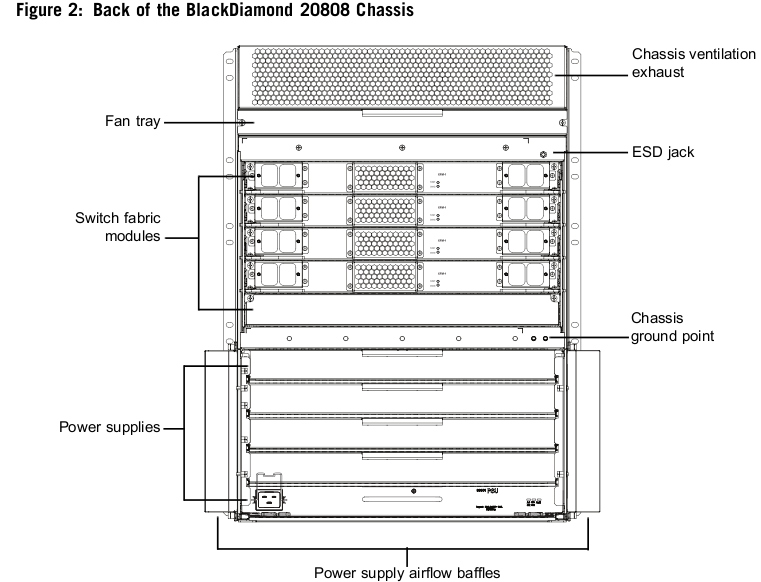

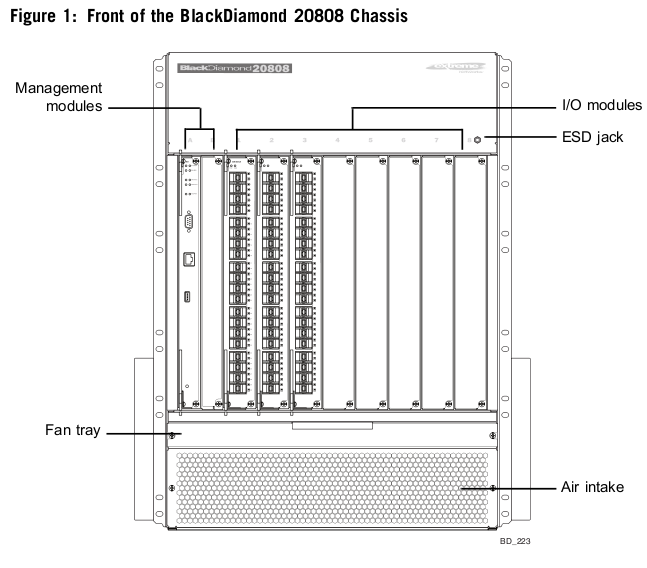

Anyways, on the Black Diamond 20808 they did something else they had never done before: they put modules on both the front AND the back of the chassis. On top of that the modules were criss-crossed. The modules on the front were vertical, the modules on the back were horizontal. This is pure guesswork, but I suspect the reason is, in part, to cut the distance signals need to travel between the fabric and the switch ports. HP’s c-Class Blade enclosure has a similar mid plane design with criss-crossed components. Speaking of which, I wonder if the next generation 3PAR will leverage the same “non stop” midplane technology of the c-Class. The 5 Terabits of capacity on the c-Class is almost an order of magnitude more than what is even available on the 3PAR V800. Whether or not the storage system needs that much fabric is another question.

The 20808 product seemed to be geared more towards service providers and not towards high density enterprise or data center computing (if I remember right the most you could get out of the box was 64x10GbE ports, which you can now get in a 1U X670V).

Their (now very old) Black Diamond 8000 series (with the 8900 model which came out a couple of years ago being the latest incarnation) has been the enterprise workhorse for them for many years, with a plethora of different modules and switch fabric options. The Black Diamond 8900 is a backplane based product. I remember when the series first came out too – it was just a couple of months after I bought my Black Diamond 10808s, in the middle of 2005. Although if I remember right the Black Diamond 8800, as it was originally released, did not support the virtual router capability of the 10808 that I intended to base my network design on. Nor did it support the Clear Flow security rules engine. Support for these features was added years later.

You can see the impact distance has on the Black Diamond 8900 for example, with the smaller 6-slot chassis getting at least 48Gbps more switching capacity per line card than the 10-slot chassis simply because it is smaller. Remember this is a backplane designed probably seven years ago, so it doesn’t have as much fabric capacity as a modern mid plane based system.

Anyways, back on topic, the Black Diamond X-series. Extreme’s engineers obviously saw the physics (?) limits they were likely going to hit when building a next generation platform and decided to re-think how the system works, resulting in what is, in my opinion, a pretty revolutionary way of building a switch fabric (at least I’m not aware of anything else like it myself). While much of the rest of the world is working with mid planes for their latest generation of systems, here we have the Direct Orthogonal Data Path Mating System or DOD PMS (yeah, right).

What got me started down this path was that I was on the Data Center Knowledge web site and just happened to see a Juniper Qfabric advertisement. I’ve heard some interesting things about Qfabric since it was announced; it sounds similar to the Brocade VCS technology. I was browsing through some of their data sheets and white papers and it came across as something that’s really complicated. It’s meant to be simple, and it probably is, but the way they explain it makes it sound really complicated, to me at least. Anyways I went to look at their big 40GbE switch which is at the core of their Qfabric interconnect technology. It certainly looks like a respectable switch from a performance standpoint – 128 40GbE ports, 10 Terabits of switching fabric, weighs in at over 600 pounds (I think Juniper packs their chassis products with lead weights to make them feel more robust).

So back to the report that they posted. The networking industry doesn’t have anything like the SPC-1 or SpecSFS standardized benchmarks to measure performance, and most people would have a really hard time generating enough traffic to tax these high end switches. There is standard equipment that does it, but it’s very expensive.

So, to a certain extent you have to trust the manufacturer as to the specifications of the product. One way many manufacturers try to prove their claims of performance or latency is to hire “independent” testers to run tests on the products and publish reports. This is one of those reports.

Reading it made me smile, seeing how well the X-Series performed but in the grand scheme of things it didn’t surprise me given the design of the system and the fabric capacity it has built into it.

The BDX8 breaks all of our previous records in core switch testing from performance, latency, power consumption, port density and packaging design. The BDX8 is based upon the latest Broadcom merchant silicon chipset.

For the Fall Lippis/Ixia test, we populated the Extreme Networks BlackDiamond® X8 with 256 10GbE ports and 24 40GbE ports, thirty three percent of its capacity. This was the highest capacity switch tested during the entire series of Lippis/Ixia cloud network test at iSimCity to date.

We tested and measured the BDX8 in both cut through and store and forward modes in an effort to understand the difference these latency measurements offer. Further, latest merchant silicon forward packets in store and forward for smaller packets, while larger packets are forwarded in cut-through making this new generation of switches hybrid cut-through/store and forward devices.

Reading through the latency numbers, they looked impressive, but I really had nothing to compare them with, so I don’t know how good they are. Surely for any network I’ll ever be on it’d be way more than enough.

The BDX8 forwards packets ten to six times faster than other core switches we’ve tested.

[..]

The Extreme Networks BDX8 did not use HOL blocking which means that as the 10GbE and 40GbE ports on the BDX8 became congested, it did not impact the performance of other ports. There was no back pressure detected. The BDX8 did send flow control frames to the Ixia test gear signaling it to slow down the rate of incoming traffic flow.

Back pressure? What an interesting term for a network device.

The BDX8 delivered the fastest IP Multicast performance measured to date being able to forward IP Multicast packets between 3 and 13 times faster than previous core switch measures of similar 10GbE density.

The Extreme Networks BDX8 performed very well under cloud simulation conditions by delivering 100% aggregated throughput while processing a large combination of east-west and north-south traffic flows. Zero packet loss was observed as its latency stayed under 4.6 μs and 4.8 μs measured in cut through and store and forward modes respectively. This measurement also breaks all previous records as the BDX8 is between 2 and 10 times faster in forwarding cloud based protocols under load.

[..]

While these are the lowest Watts/10GbE port and highest TEER values observed for core switches, the Extreme Networks BDX8’s actual Watts/10GbE port is actually lower; we estimate approximately 5 Watts/10GbE port when fully populated with 768 10GbE or 192 40GbE ports. During the Lippis/Ixia test, the BDX8 was only populated to a third of its port capacity but equipped with power supplies, fans, management and switch fabric modules for full port density population. Therefore, when this full power capacity is divided across a fully populated BDX8, its WattsATIS per 10GbE Port will be lower than the measurement observed [which was 8.1W/port]
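To get a feel for where that estimate comes from, here is a rough sketch of the arithmetic. The port counts, the 8.1W/port measurement and the 768-port maximum are from the report; counting each 40GbE port as four 10GbE ports, and pretending the extra line cards draw no power at all, are my own simplifying assumptions, so treat the result as a floor rather than a prediction.

```python
# Figures quoted in the report; everything else here is my own assumption.
TESTED_10GBE_PORTS = 256
TESTED_40GBE_PORTS = 24
MEASURED_WATTS_PER_PORT = 8.1   # per 10GbE port, as tested
FULL_CHASSIS_10GBE_PORTS = 768


def ten_gbe_equivalents(ports_10g: int, ports_40g: int) -> int:
    """Count each 40GbE port as four 10GbE ports (my assumption)."""
    return ports_10g + 4 * ports_40g


tested_ports = ten_gbe_equivalents(TESTED_10GBE_PORTS, TESTED_40GBE_PORTS)
measured_total_watts = MEASURED_WATTS_PER_PORT * tested_ports

# Best case: pretend the additional line cards draw no power, so the
# measured total is simply spread across every port of a full chassis.
best_case_watts_per_port = measured_total_watts / FULL_CHASSIS_10GBE_PORTS

print(f"ports as tested (10GbE equivalents): {tested_ports}")
print(f"implied total draw as tested: {measured_total_watts:.0f} W")
print(f"best-case W/port, fully populated: {best_case_watts_per_port:.1f}")
```

Real line cards obviously do draw power, so the true number has to land somewhere between this floor and the 8.1W that was measured, which is where the report’s estimate of roughly 5W sits.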

They also mention the cost of power, and what percentage of the list price that cost is, so we can do some extrapolation. I suspect the list price of the product is not final, and I am assuming the prices they are naming are based on the configuration they are testing with rather than a fully loaded system (as mentioned above, the switch was configured with enough fabric and power for the entire chassis, but only ~50% of the port capacity was installed).

Anyways, they say the price to power it over 3 years is $10,424.05 and say that is less than 1.7% of its list price. Extrapolating that a bit I can guesstimate that the list price of this system as tested with 352 10GbE ports is roughly $612,000, or about $1,741 per 10GbE port.
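For anyone who wants to redo the guesstimate, here is the back-of-the-envelope math as a small sketch. The power cost, the 1.7% figure and the 352-port count come from above; treating “less than 1.7%” as exactly 1.7% is my simplification, which makes the result a floor on the list price rather than the price itself.

```python
POWER_COST_3_YEARS = 10_424.05   # dollars, from the report
POWER_SHARE_OF_LIST = 0.017      # "less than 1.7%", treated as exactly 1.7%
PORTS_AS_TESTED = 352            # 10GbE ports, counting each 40GbE port as four

# If the power cost is at most 1.7% of list, list is at least cost / 0.017.
implied_list_price = POWER_COST_3_YEARS / POWER_SHARE_OF_LIST
price_per_port = implied_list_price / PORTS_AS_TESTED

print(f"implied list price: ${implied_list_price:,.0f}")
print(f"implied price per 10GbE port: ${price_per_port:,.0f}")
```

That lands within a fraction of a percent of the rounded numbers above, which is about as much precision as this kind of extrapolation deserves.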

The Broadcom technology is available to the competition. The real question is how long it will take for the competition to develop something that can compete with this 20 Terabit switching fabric, which seems to be about twice as fast as anything else currently on the market.
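To put those fabric numbers next to the port counts, here is a quick sketch. The port counts and fabric figures are the ones mentioned in this post; the idea that vendors quote fabric capacity full duplex (counting both directions of every port) is my assumption about how these headline numbers are usually put together.

```python
def full_duplex_tbps(ports: int, gbps_per_port: int) -> float:
    """Aggregate port bandwidth in Tbps, counting both directions."""
    return ports * gbps_per_port * 2 / 1000


# name: (ports, Gbps per port, quoted fabric capacity in Tbps)
systems = {
    "Juniper Qfabric interconnect": (128, 40, 10),
    "Black Diamond X8": (192, 40, 20),
}

for name, (ports, speed, fabric_tbps) in systems.items():
    port_tbps = full_duplex_tbps(ports, speed)
    ratio = fabric_tbps / port_tbps
    print(f"{name}: {port_tbps:.2f} Tbps of ports, "
          f"{fabric_tbps} Tbps of fabric ({ratio:.2f}x)")
```

Under that assumption the Juniper fabric figure is roughly the port bandwidth rounded to the nearest Terabit, while the X8 has fabric to spare even with every port filled.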

HP has been working on some next generation stuff. Earlier this year I read about the optical switching technology their labs are working on, and it sounds pretty cool.

[..] Charles thinks this is likely to be sometime in the next 3-to-5 years.

So, nothing on the immediate horizon on that front.

Good article. Do you have any updated thoughts on the BDX-8 now that it’s in general availability?

Comment by MN — April 5, 2012 @ 10:46 am

Thanks for the post!

My most recent thoughts that I posted about are here – http://www.techopsguys.com/2012/02/16/30-billion-packets-per-second/ (didn’t have it tagged right). Beyond that I’d still like to see more specific pictures or diagrams on how this new fabric connectivity works and how it differs from a midplane/backplane since I’m still uncertain there. I find it curious that the L2 forwarding tables on the switch, at least on paper, are the same as their 1U X670 switch (128k MAC). The number of IPv4 host entries is not a whole lot higher (not to the scale I’d expect given the 30 fold increase in forwarding performance) vs the X670.

At the end of the day the main difference between the X670-series which I use and the BD-X is all about scale; all of the software features are pretty much the same, the OS is the same, etc.

Comment by Nate — April 5, 2012 @ 12:49 pm