Still really busy getting ready to move out of the cloud. Ran into a networking issue today: I noticed my 10GbE NICs are going up and down pretty often. I contacted HP, and apparently these Qlogic 10GbE NICs have overheating issues. The thing that has fixed it for other customers is setting the BIOS to increased cooling, basically ramping up the fan RPMs (as well as a firmware update, which is already applied). Our systems may have more issues given that each server has two of these NICs right on top of each other, so maybe we need to split them apart; waiting to see what support says. There haven’t been any noticeable issues from the link failures since everything is redundant across both NICs (both ports on the same card seem to go down at about the same time).

Anyways, you know I’ve been talking about it a lot here, and it’s finally arrived (well, a few days ago): the Black Diamond X-Series (the self-proclaimed world’s largest cloud switch). It was officially announced almost a year ago; their marketing folks certainly like to grab the headlines early. They did the same thing with their first 24-port 10GbE stackable switch.

The numbers on this thing are just staggering: 30 billion packets per second and a choice of switching fabric based on either 2.5Tbps (meaning up to 10Tbps in the switch) or 5.1Tbps (with up to 20Tbps in the switch). They are offering 48x10GbE line cards as well as 12x40GbE and 24x40GbE line cards (all line rate, duh). You already know it has up to 192x40GbE or 768x10GbE (via breakout cables) in a 14U footprint – half the size of the competition, which was already claiming to be a fraction of the size of their other competition.

Power rated at 5.5 watts per 10GbE port or 22W per 40GbE port.
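A quick sanity check on the port and power figures quoted above, as a rough Python sketch. It assumes each 40GbE port breaks out into four 10GbE ports (which is what turns 192 into 768) and simply multiplies the quoted per-port wattage by the port count. The variable names are mine, and the totals are plain arithmetic on the quoted numbers, not vendor-published chassis power figures.

```python
# Figures quoted in the post
PORTS_40G = 192        # max 40GbE ports in the chassis
WATTS_PER_10G = 5.5    # rated watts per 10GbE port
WATTS_PER_40G = 22.0   # rated watts per 40GbE port

# Assumption: one 40GbE port breaks out into four 10GbE ports
BREAKOUT = 4

ports_10g = PORTS_40G * BREAKOUT
power_10g = ports_10g * WATTS_PER_10G   # fully loaded as 10GbE
power_40g = PORTS_40G * WATTS_PER_40G   # fully loaded as 40GbE

print(f"10GbE ports via breakout: {ports_10g}")
print(f"Port power as 10GbE: {power_10g:.0f} W")
print(f"Port power as 40GbE: {power_40g:.0f} W")
```

Both ways of loading the chassis come out to the same 4,224 W of port power, which makes sense since 22W is exactly four times 5.5W.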

They are still pushing their Direct Attach approach, support for which still hasn’t exactly caught on in the industry. I didn’t really want Direct Attach but I can see the value in it. They apparently have a solution for KVM ready to go, though their partner in crime that supported VMware abandoned the 10GbE market a while ago (it required special NIC hardware/drivers for VMware). I’m not aware of anyone that has replaced that functionality from another manufacturer.

I found it kind of curious that they rev’d their operating system three major versions for this product (from version 12 to 15). They did something similar when they re-wrote their OS about a decade ago (jumping from version 7 to 10).

Anyways, not much else to write about. I do plan to write more on the cloud soon, I’ve just been so busy recently – this is just a quick post / random thought.

So the clock starts – how long until someone else comes out with a midplane-less chassis design?

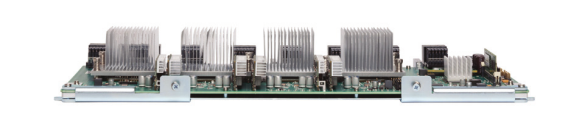

I will take a guess and say those are their 10Gb CNA adapters. I had a near-identical issue with our vSphere boxes, where suddenly all inbound or outbound traffic would cease on both cards at the same time across all hosts in the cluster. We never did find the reason for this. After nearly a month of trying to troubleshoot with IBM, VMware and Qlogic, we simply kicked the cards to the curb and went with Emulex. Heck, the local Emulex reps gave us the cards for free, and I still have 6 of these CNAs sitting in a drawer that I can’t give away.

Comment by gchapman — February 17, 2012 @ 6:25 am

Hit reply too soon. More on topic to your post, I’ve found it nearly impossible to displace Cisco gear from our organization. Our “network team” is horribly resistant to any form of change and to any product from anyone without a Cisco stamp on it. It does not matter if the Cisco lines are more expensive, with higher support costs and fewer features; they simply won’t look at competitive lines. They even want me to go with MDS for the SAN fabrics, to which I basically said over my dead body. It’s time to replace our 6509s/4500s and they are talking up Nexus now. I’ve pointed them at alternative gear and they just don’t bother to look. It’s puzzling to me.

Comment by gchapman — February 17, 2012 @ 6:34 am

They are technically CNA chips, yes, though I don’t think things like FCoE are supported on them. I believe they are the same chips used in the HP FlexFabric (if not, they are really close). Qlogic has had NIC virtualization firmware out for them for a few months now, though HP is not sure when they will support it.

I was kind of worried when I saw the issue because I expected it was going to be a pain to diagnose. There was no indication as to why the links were failing; both sides just said the link was down, then back up.

As for the Cisco stuff, I’m not too surprised for a big company like the one you’re at. It is unfortunate though. Many folks are just resistant to change. One of my friends is at a company where, about 2 years ago now, there were major supply issues with Nexus gear and his company had to wait at least 2 months to get stuff. Their network engineers really wanted Cisco too, despite the widespread issues Nexus had at the time (not sure if it still has those issues or not). It wasn’t until his company said they were going to go Extreme unless the gear shipped by Friday that the gear actually got shipped.

When I was in Atlanta a few weeks ago I saw that someone in the cage next to mine had installed a pair of what seemed to be brand new Cisco 6500s (it was a brand new cage otherwise, with no gear in it). One day I was out there, a couple of people were there installing patch panels.

I thought what a waste, one of my 1U 10GbE switches has twice the performance of their big 6509 (which I think only had 3 slots populated). Deploying new network hardware that was considered cutting edge more than seven years ago is just sad. But it goes to show I suppose that customers are still resisting Nexus.

One guy I interviewed recently (whom I’m sure we will offer the job to, not sure if he will take it or not) swore by EMC for storage. His only experience was using EMC Clariion (ironically enough, while working at Cisco), which of all of the EMC storage is just the worst platform they have. But he swore by it…

Comment by Nate — February 17, 2012 @ 7:44 am

I guess it depends on whether he had to actually manage the storage, or it was just at his shop. I have a great slide from a presenter with the LUN mapping for his EMC gear. He had put it into Visio because Excel just wasn’t working and he couldn’t visualize all the LUNs as they were actually laid out. It kind of looks like a cubist version of a Jackson Pollock painting.

Comment by gchapman — February 17, 2012 @ 7:49 am

btw, did you see that Symantec is suing Veeam and Acronis for patent infringement? Digging deeper, it’s pretty funny that they were given a patent for “using a graphical user interface to map computer resources”.

Comment by gchapman — February 17, 2012 @ 9:11 am

HP released a customer advisory notice on these NICs about a week ago (I just got that info this morning):

http://h20000.www2.hp.com/bizsupport/TechSupport/Document.jsp?lang=en&cc=us&taskId=110&prodSeriesId=3913537&prodTypeId=329290&objectID=c02964542

The only new thing they suggest is increasing the fan speed. Another level 1 tech called me this morning and disputed that it is cooling related, as he is aware of a couple of other cases with the same NIC that are sitting at level 2 tech support and not resolved. He thinks it might help but is not a real fix.

Level 2 will get back to me on Monday or Tuesday with their suggestion. These servers are supposed to go to production on Wednesday night, so hopefully we have a plan of action and can get something done to fix it by then.

I only noticed it by casually browsing my switch logs yesterday. There was no indication of an issue from a VMware perspective (there are plenty of events for it in the VMware logs but no alerts or anything – I’ve seen alerts pop up when a link failure occurs, but the alert goes away when the link state recovers). I happened to install Xangati to play around with recently and it too didn’t pick up anything.
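Since the flaps only showed up by eyeballing the switch logs, something along these lines could count them per port instead. This is just a rough Python sketch: the log layout (`<time> <port> link <state>`) and the port names are made up for illustration and are not what my switches actually emit, so the parsing would need to be adapted to the real log format.

```python
from collections import Counter

# Hypothetical sample log; the "<time> <port> link <state>" layout is an
# assumption for illustration, not a real switch log format.
SAMPLE_LOG = """\
02:14:07 port-1 link down
02:14:09 port-1 link up
02:14:07 port-2 link down
02:14:09 port-2 link up
03:40:51 port-1 link down
03:40:53 port-1 link up
"""

def count_flaps(log_text):
    """Count 'link down' events per port in the assumed log layout."""
    flaps = Counter()
    for line in log_text.splitlines():
        parts = line.split()
        # Only count well-formed lines that report a link going down
        if len(parts) == 4 and parts[2] == "link" and parts[3] == "down":
            flaps[parts[1]] += 1
    return flaps

flaps = count_flaps(SAMPLE_LOG)
for port, count in sorted(flaps.items()):
    print(f"{port}: {count} link-down events")
```

Counting only the down events keeps it simple: each flap is one down followed by one up, so the down count is the flap count.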

Since we have two physical NICs in each server and they are redundant for each other, the impact is limited to a couple of seconds to fail over to the other NIC. It’s not a critical issue, but at the same time the issue may get worse as we put load on the systems.

As for the lawsuit – no, I hadn’t seen that yet. That is kind of sad, especially coming from Symantec, which doesn’t seem to be your typical patent troll. Speaking of trolls, one of the companies I used to work for turned into a patent troll a few years ago after their main business didn’t seem to be going anywhere. Sad to see. They haven’t gotten very far though; they tried to sue the likes of Google, Yahoo, etc. for using a database behind their web sites, then tried to sue the likes of Juniper and others for something related to firewalls.

Comment by Nate — February 17, 2012 @ 11:20 am

Oh – when was your Qlogic issue on the IBM boxes? Was it before late November, when the updated firmware came out, or after?

Comment by Nate — February 17, 2012 @ 11:23 am

March 2011 is when we had the issue. I know there have been firmware changes since then. IBM ServerProven is the site that tells you if the cards are fully supported or not on a specific version. ESX/i 4.0 was supported, but not 4.1 at the time.

Comment by gchapman — February 18, 2012 @ 7:39 am