The best word I could come up with when I saw this was

oof

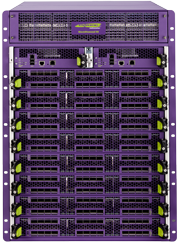

What I’m talking about is the announcement of the Black Diamond X-Series from my favorite switching company, Extreme Networks. I have been hearing a lot about other switching companies coming out with new next gen 10GbE and 40GbE switches, more than one of them using Broadcom chips (which Extreme uses as well), so I have been patiently awaiting Extreme’s own announcement.

I don’t have a lot to say, so I’ll let the specs do the talking:

- 14.5 U

- 20 Tbps switching fabric (up ~4x from previous models)

- 1.2 Tbps fabric per line slot (up ~10x from previous models)

- 2,304 line rate 10GbE ports per rack (5 watts per port) (768 line rate per chassis)

- 576 line rate 40GbE ports per rack (192 line rate per chassis)

- Built-in support for switching up to 128,000 virtual machines using their VEPA/Direct Attach system
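As a rough sanity check on how those numbers fit together, here is a minimal sketch of the arithmetic. The constants come straight from the list above; the variable names are mine, and two things are assumptions on my part rather than anything Extreme has stated: that the fabric figure counts both directions (full duplex), and that the 5 watts per port figure can simply be multiplied by the port count.

```python
# Figures taken from the spec list above.
CHASSIS_HEIGHT_U = 14.5
PORTS_10G_PER_CHASSIS = 768
PORTS_40G_PER_CHASSIS = 192
PORTS_10G_PER_RACK = 2304
WATTS_PER_10G_PORT = 5

# How many chassis does the per-rack number imply?
chassis_per_rack = PORTS_10G_PER_RACK // PORTS_10G_PER_CHASSIS
rack_units_used = chassis_per_rack * CHASSIS_HEIGHT_U

# Port bandwidth per chassis in Tbps, one direction only.
one_way_10g_tbps = PORTS_10G_PER_CHASSIS * 10 / 1000
one_way_40g_tbps = PORTS_40G_PER_CHASSIS * 40 / 1000

# ASSUMPTION: fabric capacity is quoted full duplex (both directions counted).
full_duplex_tbps = 2 * one_way_10g_tbps

# ASSUMPTION: power scales linearly with port count at the quoted per-port figure.
watts_per_chassis = PORTS_10G_PER_CHASSIS * WATTS_PER_10G_PORT

print(f"{chassis_per_rack} chassis per rack, using {rack_units_used}U")
print(f"10GbE ports: {one_way_10g_tbps} Tbps one way, 40GbE ports: {one_way_40g_tbps} Tbps one way")
print(f"Full duplex port bandwidth: {full_duplex_tbps} Tbps against a 20 Tbps fabric")
print(f"Roughly {watts_per_chassis} W per fully loaded 10GbE chassis")
```

Under those assumptions, three chassis fill most of a standard rack, the 10GbE and 40GbE configurations add up to the same total port bandwidth, and the fabric has headroom beyond what the ports can push.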

This was fascinating to me:

Ultra high scalability is enabled by an industry-leading fabric design with an orthogonal direct mating system between I/O modules and fabric modules, which eliminates the performance bottleneck of pure backplane or midplane designs.

I was expecting their next gen platform to be a midplane design, like that of the Black Diamond 20808. Their previous high density 10GbE enterprise switch, the Black Diamond 8800 (originally released about six years ago), was by contrast a backplane design. The physical resemblance to the Arista Networks chassis switches is remarkable. I would like to see a diagram of this direct mating system to get a better idea of what the new design looks like.

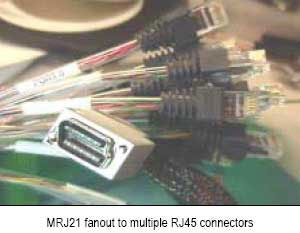

To put that port density into some perspective, their older system, the Black Diamond 8800, has an option to use Mini RJ21 adapters to achieve 768 1GbE ports in a 14U chassis (those adapters are the only way to fit so many copper ports in such a small space). So an extra half a rack unit, a bit under an inch, gets you the same number of ports running at 10 times the speed, and at line rate (the 768 x 1GbE configuration is not quite line rate, but still damn fast).

It seems they have phased out the Black Diamond 10808 (first released in 2003; I deployed a pair of these several years ago), the Black Diamond 12804C (first released around 2007), the Black Diamond 12804R (also released around 2007) and the Black Diamond 20808. That last one is kind of surprising given how recent it was (I think it was released around 2009), though of course it didn’t have anything approaching this level of performance. They also seem to have finally dropped the really ancient Alpine series (10+ year old technology).

They also seem to have announced a new high density stackable 10GbE switch, the Summit X670, the successor to the X650, which was already an outstanding product offering several features that until recently nobody else in the market was providing.

- 1U

- 1.28 Tbps switching fabric (roughly double that of the X650)

- 48 x 10Gbps line rate standard (64 x 10Gbps max)

- 4 x 40Gbps line rate (or 16 x 10Gbps)

- Long distance stacking support (up to 40 kilometers)
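The X670 numbers line up neatly if you assume the fabric figure is quoted full duplex. That is my assumption, not something stated in the announcement. A minimal sketch of the sum, where `fabric_gbps` is just a hypothetical helper for illustration:

```python
def fabric_gbps(ports_by_speed, full_duplex=True):
    """Sum port bandwidth in Gbps for a {speed_gbps: port_count} mapping.

    ASSUMPTION: vendors quote fabric capacity full duplex, so the one-way
    total is doubled by default.
    """
    one_way = sum(speed * count for speed, count in ports_by_speed.items())
    return one_way * 2 if full_duplex else one_way


# Standard configuration: 48 x 10GbE plus 4 x 40GbE.
mixed = fabric_gbps({10: 48, 40: 4})

# Maximum 10GbE configuration: each 40GbE port split into 4 x 10GbE.
all_10g = fabric_gbps({10: 48 + 4 * 4})

print(mixed, all_10g)  # both come to 1280 Gbps, i.e. 1.28 Tbps
```

Either way you slice the ports, the total matches the quoted 1.28 Tbps, which suggests every port on the box can run at line rate at once.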

From purely a port configuration standpoint, the X670 looks similar to some other recently announced products from companies like Arista and Force10, both of whom are using the Broadcom Trident+ chipset; I assume Extreme is using the same. These days, given that so many manufacturers are using the same type of hardware, you have to differentiate yourself in the software, which is really what drives me to Extreme more than anything else: their Linux-based, easy-to-use Extremeware XOS operating system.

Neither of these products appears to be shipping yet. I’m not sure when they might ship, maybe sometime in Q3.

40GbE has taken longer than I expected to finalize. Extreme was one of the first to demonstrate 40GbE, at Interop Las Vegas last year, but the parts have yet to ship (or if they have, the web site has not been updated).

For the most part, the number of companies that are able to drive even 10% of the performance of these new lines of networking products is really tiny. But the peace of mind that comes with everything being line rate really is worth something!

x86 or ASIC? I’m sure performance boosts like the ones offered here pretty much guarantee that x86 (or any general purpose CPU for that matter) will not be driving high speed networking for a very long time to come.

Myself, I am not yet sold on this emerging trend in the networking industry of trying to turn everything into massive layer 2 domains. I still love me some ESRP! I think part of it has to do with selling the public on getting rid of STP. I haven’t used STP in 7+ years, so not using any form of STP is nothing new for me!
