I feel like I am almost alone in the world when it comes to deploying environmental sensors around my equipment. I first did it at home back around 2001, when I had an APC SmartUPS and put a fancy environmental monitoring card in it, which I then wrote some scripts for and tied into MRTG.

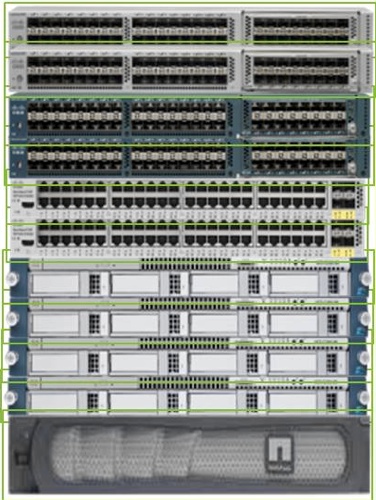

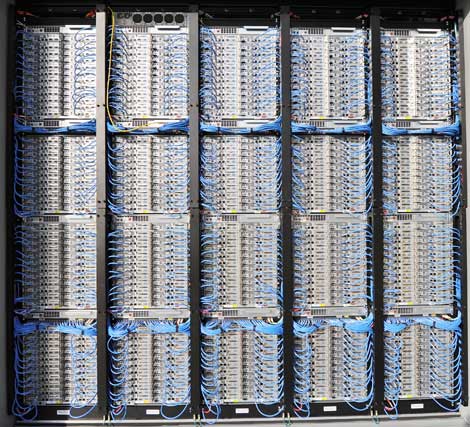

A few years later I was part of a decently sized infrastructure build out that had a big budget, so I got one of these and 16 environmental probes, each with a 200 foot cable. I think the probes and cables alone were about $5k. Those were the longest cables they had at the time and much more expensive than the short ones, but I wasn’t sure what lengths I needed so I just went all out. I suspect I ended up truncating the ~3200 feet of cable down to around 800 feet. I focused more on cage environmentals than per rack; I would have needed a ton more probes to go per rack. Some of the sensors went into racks, and there was enough slack on the end of the probes to temporarily position them anywhere within, say, 10 feet of their otherwise fixed position very easily.

The Sensatronics device was real nifty: so small, and yet it supported both serial and ethernet, had a real basic web server, and could easily be integrated with nagios (though at the time I never had the time to integrate it, so I relied entirely on the web server). We were able to prove to the data center that their cooling was inadequate, and they corrected it by deploying more vented tiles. They were able to validate the temperature using one of those little laser gun things.

At the next couple of companies I changed PDU manufacturers and went to ServerTech instead. Many (perhaps all?) of their intelligent PDUs come with ports for up to two environmental sensors. Some of their PDUs require an add-on to get the sensor integration.

The probes are about $50 a piece and have about a 10 foot cable on them. Typically I’d have two PDUs in a rack and I’d deploy four probes (2 per PDU). Even though environmental SLAs only apply to the front of the racks, I like information so I always put two sensors in front and two sensors in rear.

I wrote some scripts to tie this sensor data into cacti (the integration is ugly so I don’t give it out), and later on I wrote some scripts to tie this sensor data into nagios (this part I did have time to do). So I could get alerts when the facility went out of SLA.
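To give a rough idea of what the nagios side of this can look like, here is a minimal sketch (not the original scripts, which aren’t shown here). It takes one probe reading in Fahrenheit and maps it onto the standard Nagios plugin exit codes (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN). The function name `check_temperature` and the 80/85 degree thresholds are assumptions for illustration; how the reading gets pulled off the PDU is deliberately left out.

```python
# Standard Nagios plugin exit codes.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3

LABELS = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL", UNKNOWN: "UNKNOWN"}


def check_temperature(reading_f, warn_f=80.0, crit_f=85.0):
    """Return (exit_code, status_line) for one probe reading in Fahrenheit.

    warn_f and crit_f are hypothetical thresholds; use your facility's SLA.
    """
    if reading_f is None:
        return UNKNOWN, "TEMP UNKNOWN - no reading from probe"
    if reading_f >= crit_f:
        state = CRITICAL
    elif reading_f >= warn_f:
        state = WARNING
    else:
        state = OK
    # Everything after the '|' is performance data: label=value;warn;crit
    line = (
        f"TEMP {LABELS[state]} - {reading_f:.1f}F "
        f"| temp={reading_f:.1f};{warn_f:.1f};{crit_f:.1f}"
    )
    return state, line


# Example: the 87 degree front-of-rack reading mentioned later in this post.
code, line = check_temperature(87.0)
print(code, line)
```

A real plugin would print the status line and then exit with the returned code (for example via `sys.exit(code)`), which is how nagios decides whether to alert.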

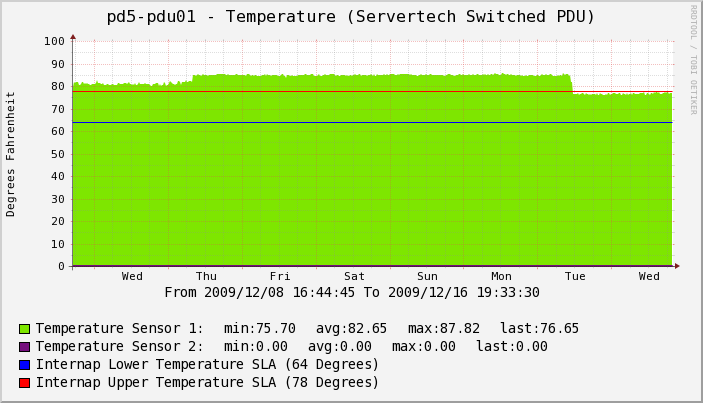

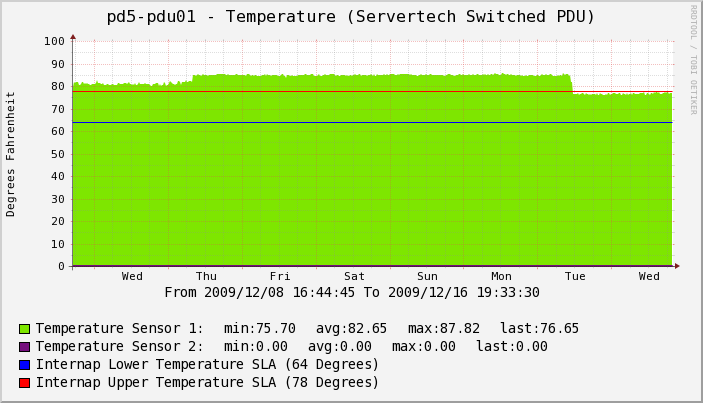

Until today, the last time I was at a facility that was out of SLA was in 2009, when one of the sensors on the front of the rack was reporting 87 degrees. The company I was at during that point had some cheap crappy IDS systems deployed in each facility, and this particular facility had a high rate of failures for these IDSs. At first we didn’t think *too* much of it, then I had the chance to hook up the sensors and wow, was I surprised. I looked at the temperatures inside the switches and compared them to other facilities (can’t really extrapolate ambient temp from inside the switch), and confirmed it was much warmer there than at our other locations.

So I bitched to them and they said there was no problem. After going back and forth they did something to fix it. This was a remote facility, 5,000 miles away, and we had no staff anywhere near it. They didn’t tell us what they did, but the temp dropped like a rock and stayed (barely) within their SLA after that.

Cabinet Ambient Temperature

There you have it, oh maybe you noticed there’s only one sensor there, yeah the company was that cheap they didn’t want to pay for a second sensor, can you believe that, so glad I’m not there anymore (and oh the horror stories I’ve heard about the place since! what a riot).

Anyways so fast forward to 2012. Last Friday we had a storage controller fail (no not 3PAR, another lower end HP storage system), with a strange error message, oddly enough the system did not report there was a problem in the web UI (system health “OK”), but one of the controllers was down when you dug into the details.

So we had that controller replaced (yay 4 hour on site support), and the next night the second controller failed for the same reason. HP came out again and poked at it. At one point there was a temperature alarm, but the on site tech said he thought it was a false alarm. They restarted the controller again and it’s been stable since.

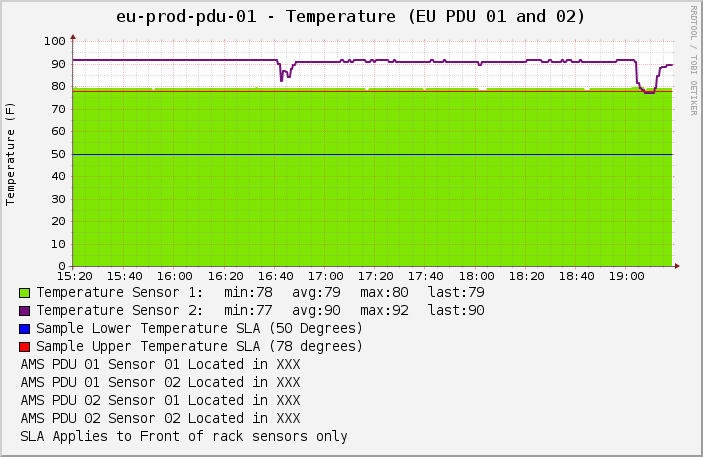

So today I finally had some time to start hooking up the monitoring for the temperature sensors in that facility, it’s a really small deployment, just 1 rack, so 4 sensors.

I was on site a couple of months ago and at the time I sent an email noting that none of the sensors were showing temperatures higher than 78 degrees (even in the rear of the rack).

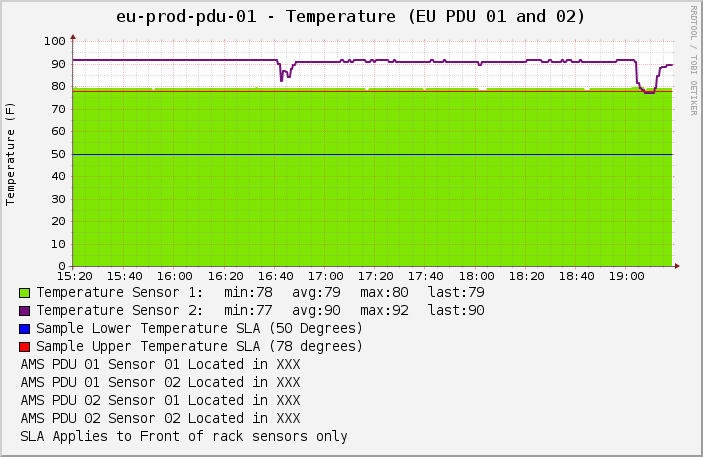

So imagine my surprise when I looked at the first round of graphs, which said 3 of the 4 sensors were now reporting 90 degrees or hotter, and the 4th (near the floor) was reporting 78 degrees.

Wow, that is toasty, freakin hot more like it. So I figured maybe one of the sensors got moved to the rear of the rack, I looked at the switch temperatures and compared them with our other facility, the hotter facility was a few degrees hotter (4C), not a whole lot.

The servers told another story though.

Before I go on let me say that in all cases the hardware reports the systems are “within operating range”, everything says “OK” for temperature – it’s just way above my own comfort zone.

Here is a comparison of two servers at each facility, the server configuration hardware and software is identical, the load in both cases is really low, actually load at the hot facility would probably be less given the time of day (it’s in Europe so after hours). Though in the grand scheme of things I think the load in both cases is so low that it wouldn’t influence temperature much between the two. Ambient temperature is one of 23 temperature sensors on the system.

| Data Center | Device | Location | Ambient Temperature | Fan Speeds (0-100%) [6 fans per server] |
| --- | --- | --- | --- | --- |
| Hot Data Center | Server X | Roughly 1/3rd from bottom of rack | 89.6 F | 90 / 90 / 90 / 78 / 54 / 50 |
| Normal Data Center | Server X | Roughly 1/3rd from bottom of rack | 66.2 F | 60 / 60 / 57 / 57 / 43 / 40 |
| Hot Data Center | Server Y | Roughly 1/3rd from bottom of rack | 87.8 F | 90 / 90 / 72 / 72 / 50 / 50 |
| Normal Data Center | Server Y | Bottom of Rack | 66.2 F | 59 / 59 / 57 / 57 / 43 / 40 |

That’s a pretty stark contrast, now compare that to some of the external sensor data from the ServerTech PDU temperature probes:

| Location | Ambient Temperature (F, one number per sensor) | Relative Humidity (%, one number per sensor) |
| --- | --- | --- |
| Hot Data Center - Rear of Rack | 95 / 88 | 28 / 23 |
| Normal Data Center - Rear of Rack | 84 / 84 / 76 / 80 | 44 / 38 / 35 / 33 |
| Hot Data Center - Front of Rack | 90 / 79 | 42 / 31 |
| Normal Data Center - Front of Rack | 75 / 70 / 70 / 70 | 58 / 58 / 58 / 47 |

Again pretty stark contrast. Given that all equipment (even the storage equipment that had issues last week) is in “normal operating range” there would be no alerts or notification, but my own alerts go off when I see temperatures like this.
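To put a number on that contrast, here is a small sketch that averages the PDU probe readings from the table above per site and position, then subtracts. The readings are copied straight from the table; the dictionary layout and the helper names (`average`, `site_gap`) are just illustrative choices.

```python
# PDU probe readings in Fahrenheit, copied from the table above.
readings_f = {
    ("hot", "rear"): [95, 88],
    ("normal", "rear"): [84, 84, 76, 80],
    ("hot", "front"): [90, 79],
    ("normal", "front"): [75, 70, 70, 70],
}


def average(values):
    """Plain arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


def site_gap(position):
    """Average hot-site reading minus average normal-site reading."""
    hot = average(readings_f[("hot", position)])
    normal = average(readings_f[("normal", position)])
    return hot - normal


for position in ("front", "rear"):
    print(f"{position}: hot site averages {site_gap(position):.2f} F warmer")
```

With these numbers the front of the hot rack averages 13.25 F warmer than the normal site and the rear 10.5 F warmer. Keep in mind the two sites don’t have the same number of probes, so this is a rough comparison at best.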

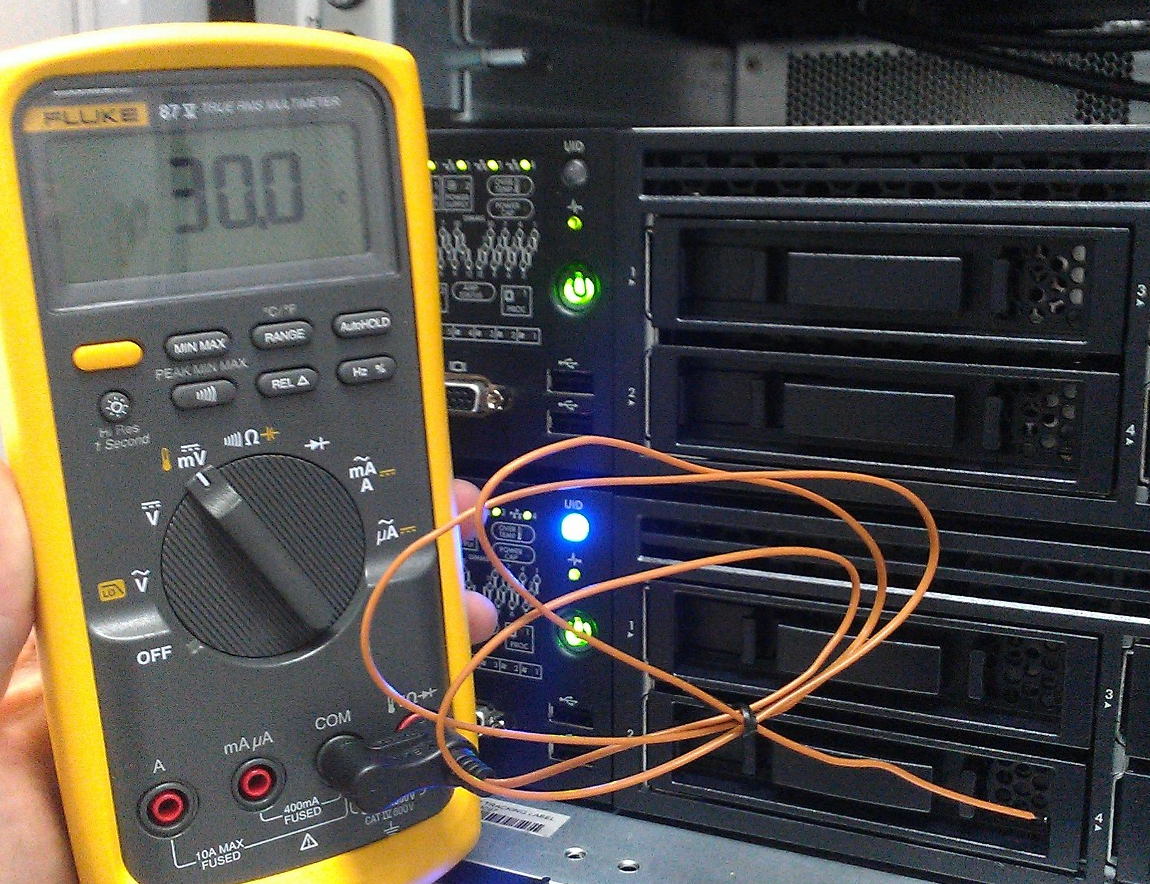

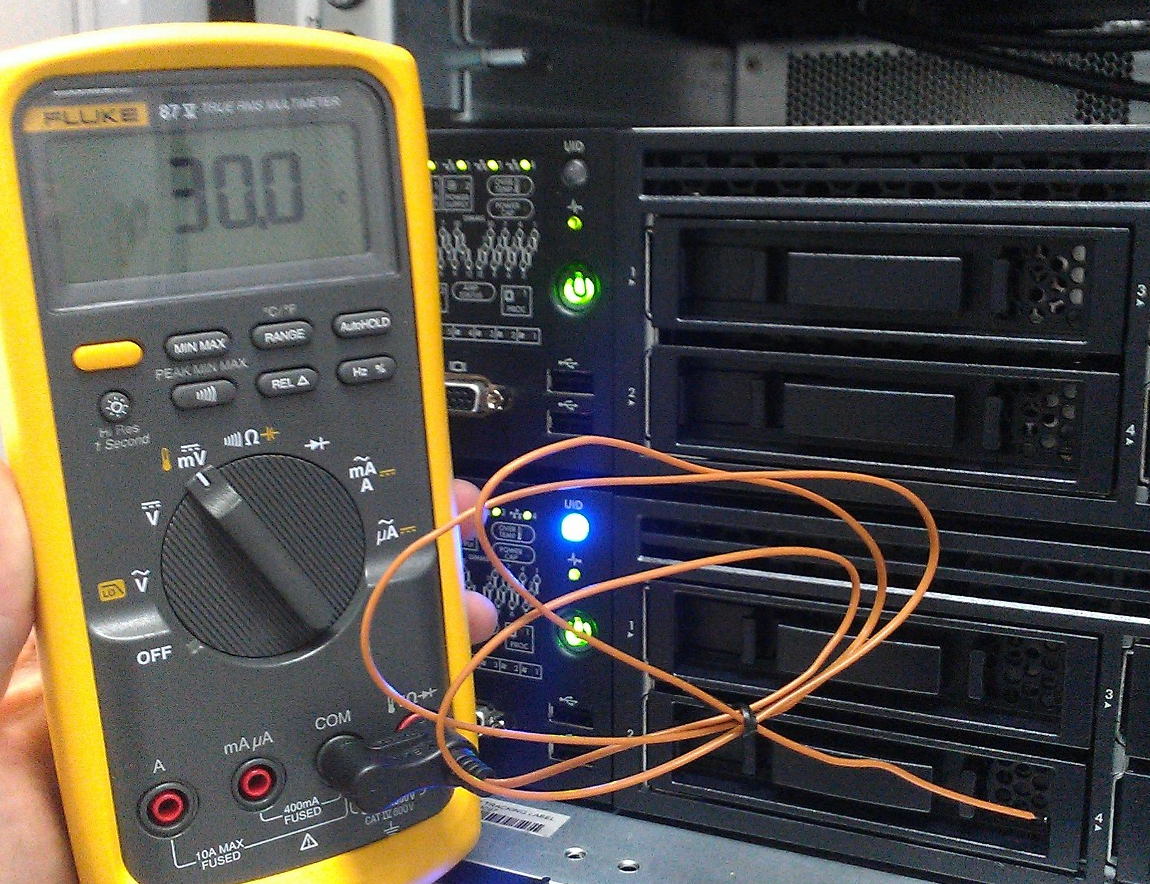

The on site personnel used a hand held meter and confirmed the inlet temperature on one of the servers was 30C (86 F), the server itself reports 89.6, I am unsure as to the physical location of the sensor in the server but it seems reasonable that an extra 3-4 degrees from the outside of the server to the inside is possible. The data center’s own sensors report roughly 75 degrees in the room itself, though I’m sure that is due to poor sensor placement.

Temperature readout using a hand held meter
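Since the hand held meter reads in Celsius and the server reports Fahrenheit, here is the conversion spelled out as a quick sketch. The two readings are the ones quoted above; the function and variable names are just for illustration.

```python
def c_to_f(celsius):
    """Convert Celsius to Fahrenheit."""
    return celsius * 9 / 5 + 32


def f_to_c(fahrenheit):
    """Convert Fahrenheit to Celsius."""
    return (fahrenheit - 32) * 5 / 9


handheld_c = 30.0  # hand held meter at the server inlet
server_f = 89.6    # ambient temperature reported by the server itself

handheld_f = c_to_f(handheld_c)
gap_f = server_f - handheld_f

print(f"hand held: {handheld_f:.1f} F, server: {server_f:.1f} F, gap: {gap_f:.1f} F")
print(f"server reading in Celsius: {f_to_c(server_f):.1f} C")
```

The gap works out to 3.6 F (2 C), which fits the 3-4 degree difference between the outside and the inside of the server.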

I went to the storage array and looked at its sensor readings. The caveat is that I don’t know where the sensors are located (trying to find that out now). In any case:

- Sensor 1 = 111 F

- Sensor 2 = 104 F

- Sensor 3 = 100.4 F

- Sensor 4 = 104 F

Again the array says everything is “OK”. I can’t really compare to the other site since the storage there is totally different (a little 3PAR array), but I do know that the cooler data center has a temperature probe directly in front of the 3PAR controller air inlets, and that sensor is reading 70 F. The only temperature sensors I can find on the 3PAR itself are on the physical disks, which range from 91 F to 98 F; the disk specs give an operating temperature of 5-55 C (55 C = 131 F).

So the lesson here is, once again: invest in your own environmental monitoring equipment. Don’t rely on the data center to do it for you, and don’t rely on the internal temperature sensors of the various pieces of equipment (because you can’t extract the true ambient temperature from them, and you really need that if you’re going to tell the facility they are running too hot).

The other lesson is, once you do have such sensors in place, hook them up to some sort of trending tool so you can see when stuff changes.

PDU Temperature Sensor data

The temperature changes in the image above were from when the on site engineer was poking around.
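A real trending tool gives you graphs over time, but even a crude baseline comparison would have caught the change at this site. The sketch below is a stand-in for that idea, not proper trending. It takes the 78 degree ceiling I saw a couple of months ago and the current PDU probe readings for the hot rack from the table earlier, and flags anything that has climbed past a tolerance. The probe names and the 5 degree tolerance are made up for illustration.

```python
BASELINE_MAX_F = 78.0  # nothing read above this a couple of months earlier
DRIFT_LIMIT_F = 5.0    # hypothetical tolerance before a rise counts as a change

# Current PDU probe readings (F) for the hot rack, from the table earlier.
# The probe names are placeholders.
current_f = {
    "rear-1": 95.0,
    "rear-2": 88.0,
    "front-1": 90.0,
    "front-2": 79.0,
}


def drifted(readings, baseline, limit):
    """Return {probe: degrees above baseline} for probes beyond the limit."""
    return {
        name: value - baseline
        for name, value in readings.items()
        if value - baseline > limit
    }


flagged = drifted(current_f, BASELINE_MAX_F, DRIFT_LIMIT_F)
for name, delta in sorted(flagged.items()):
    print(f"{name}: {delta:+.1f} F over the earlier ceiling")
```

Three of the four probes get flagged here, which matches what the first round of graphs showed.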

Some sort of irony here: the facility that is running hot is a facility that has a high focus on hot/cold aisle containment (though the row we are in is not complete, so it is not contained right now). They even got upset when I told them to mount some equipment so the airflow would be reversed. They did it anyway of course; that equipment generates so little heat.

In any case there’s tons of evidence that this other data center is operating too hot! Time to get that fixed..