Beam me up!

Damn those folks at VMware...

Anyways, I was poking around this afternoon, and while I suppose I shouldn't have been, I was surprised to see that the new VAAI storage APIs are only available to people running Enterprise or Enterprise Plus licensing.

I think at least the block-level, hardware-based locking for VMFS should be available in all editions of vSphere. After all, VMware is offloading the work to a third-party product!

VAAI certainly looks like it offers some really useful capabilities. Here are the highlights from the documentation on the 3PAR VAAI plugin (which is free):

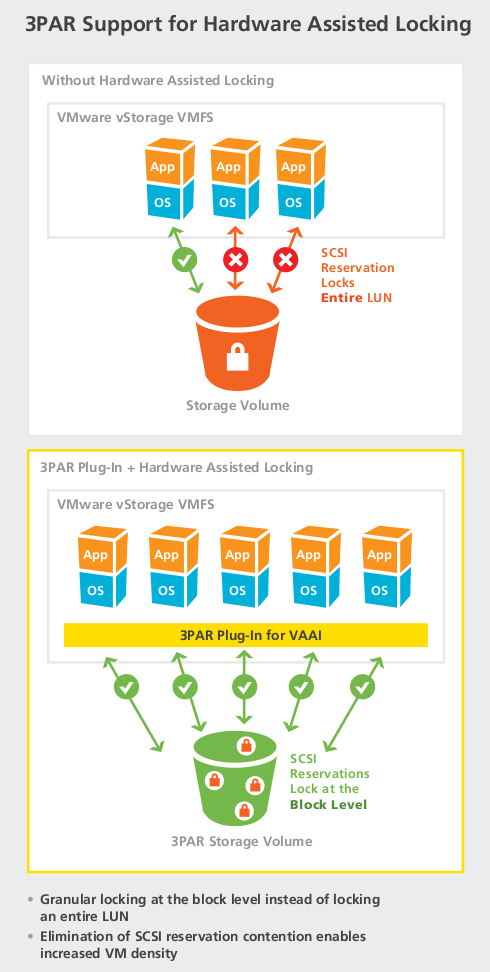

- Hardware Assisted Locking is a new VMware vSphere storage feature designed to significantly reduce impediments to VM reliability and performance by locking storage at the block level instead of the logical unit number (LUN) level, which dramatically reduces SCSI reservation contentions. This new capability enables greater VM scalability without compromising performance or reliability. In addition, with the 3PAR Gen3 ASIC, metadata comparisons are executed in silicon, further improving performance in the largest, most demanding VMware vSphere and desktop virtualization environments.

- The 3PAR Plug-In for VAAI works with the new VMware vSphere Block Zero feature to offload large, block-level write operations of zeros from virtual servers to the InServ array, boosting efficiency during several common VMware vSphere operations, including provisioning VMs from Templates and allocating new file blocks for thin provisioned virtual disks. Adding further efficiency benefits, the 3PAR Gen3 ASIC with built-in zero-detection capability prevents the bulk zero writes from ever being written to disk, so no actual space is allocated. As a result, with the 3PAR Plug-In for VAAI and the 3PAR Gen3 ASIC, these repetitive write operations now have "zero cost" to valuable server, storage, and network resources, enabling organizations to increase both VM density and performance.

- The 3PAR Plug-In for VAAI adds support for the new VMware vSphere Full Copy feature to dramatically improve the agility of enterprise and cloud datacenters by enabling rapid VM deployment, expedited cloning, and faster Storage vMotion operations. These administrative tasks are now performed in half the time. The 3PAR plug-in not only leverages the built-in performance and efficiency advantages of the InServ platform, but also frees up critical physical server and network resources. With the use of 3PAR Thin Persistence and the 3PAR Gen3 ASIC to remove duplicated zeroed data, data copies become more efficient as well.
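To make the Block Zero / zero-detection idea a bit more concrete, here's a toy Python model. It's a sketch under my own assumptions, not how 3PAR or VMware actually implement anything: `ThinVolume`, `write_same_zero`, and the 16 KiB `PAGE_SIZE` are all made-up names and numbers for illustration. The host issues one "zero this range" request (loosely standing in for the SCSI WRITE SAME command that Block Zero uses), and the "array" notices the pages are all zeros and allocates nothing for them, releasing any page that was already there.

```python
PAGE_SIZE = 16 * 1024  # hypothetical allocation unit, chosen for illustration only


class ThinVolume:
    """Toy thin-provisioned volume: only pages holding non-zero data consume space."""

    def __init__(self) -> None:
        self.pages: dict[int, bytes] = {}  # page number -> stored data

    def write(self, page_no: int, data: bytes) -> None:
        if len(data) != PAGE_SIZE:
            raise ValueError("this toy model only accepts whole-page writes")
        if not any(data):
            # Zero detection: an all-zero page is never stored, and any page
            # previously allocated at this address is released (space reclaimed).
            self.pages.pop(page_no, None)
        else:
            self.pages[page_no] = data

    def read(self, page_no: int) -> bytes:
        # Unallocated pages read back as zeros, as on a thin volume.
        return self.pages.get(page_no, bytes(PAGE_SIZE))

    def allocated_bytes(self) -> int:
        return len(self.pages) * PAGE_SIZE


def write_same_zero(vol: ThinVolume, first_page: int, count: int) -> None:
    """One offloaded 'zero this range' request (loosely standing in for WRITE SAME).

    The host sends a single command; this loop models work done on the
    array side, so no zero-filled buffers cross the wire from the server.
    """
    zero_page = bytes(PAGE_SIZE)
    for page_no in range(first_page, first_page + count):
        vol.write(page_no, zero_page)


if __name__ == "__main__":
    vol = ThinVolume()
    write_same_zero(vol, 0, 1024)  # "zero out" a 16 MiB disk
    print(vol.allocated_bytes())   # 0
```

The design point is that the zeroing costs the server one request and costs the array no capacity, which is the "zero cost" the 3PAR blurb is talking about.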

Cool stuff. I'll tell you what: I never had all that much interest in storage until I started using 3PAR about three and a half years ago. I've spread my skills pretty broadly over the past decade, and I only have so much time to do stuff.

About five years ago some co-workers tried to get me excited about NetApp, but for some reason I never could get too excited about their stuff. Sure, it has tons of features, which is nice, but I guess the core architectural limitations of the platform (from a spinning-rust perspective, at least) are what kept me away from them for the most part. If you really like NetApp, put a V-series in front of a 3PAR and watch it scream. I know of a few 3PAR/NetApp users who outright refuse to entertain the option of running NetApp's own back-end storage: they like the NAS and keep the V-series, but the NetApp back end doesn't perform.

On the topic of VMFS locking: I keep seeing folks pimping the NFS route attack VMFS locking as if there were no locking in NFS with vSphere. I'm sure that, before block-level locking, NFS's file-level locking (assuming it is file-level) was more efficient than LUN-level locking. To be honest, though, I've never run into SCSI reservation issues in the past few years I've been using VMFS, probably because of how I use it: short of writing data, I don't do many of the activities that trigger reservations.
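Here's a rough Python sketch of why LUN-level versus block-level locking matters. It's a toy model under my own assumptions, not VMFS's on-disk lock format and not the real SCSI commands (as far as I know, the hardware-assisted path is built on the SCSI COMPARE AND WRITE command, a.k.a. atomic test-and-set or ATS). The `Lun` class and its methods are hypothetical names for illustration only.

```python
import threading


class Lun:
    """Toy LUN holding one lock record per metadata block (illustration only)."""

    def __init__(self, num_blocks: int) -> None:
        self.owners = [0] * num_blocks  # 0 = free, otherwise id of the owning host
        # Old style: one reservation covering the whole LUN.
        self._lun_reservation = threading.Lock()
        # VAAI style: the array guarantees atomicity per block.
        self._block_guards = [threading.Lock() for _ in range(num_blocks)]

    # NOTE: the two methods below model alternative protocols; a real host
    # would use one or the other, not mix them on the same LUN concurrently.

    def acquire_with_reservation(self, block: int, host: int) -> bool:
        # Reserve the whole LUN, read-modify-write the lock record, release.
        # Every host updating ANY block on this LUN queues behind this lock.
        with self._lun_reservation:
            if self.owners[block] == 0:
                self.owners[block] = host
                return True
            return False

    def compare_and_write(self, block: int, expected: int, new: int) -> bool:
        # Atomically write `new` only if the block still holds `expected`.
        # Only hosts contending for the SAME block ever wait on each other.
        with self._block_guards[block]:
            if self.owners[block] != expected:
                return False
            self.owners[block] = new
            return True


def run_hosts(lun: Lun, num_hosts: int) -> list[bool]:
    """Each host concurrently grabs its own block via compare-and-write."""
    results = [False] * num_hosts

    def worker(host_index: int) -> None:
        # Host ids start at 1 because 0 means "free".
        results[host_index] = lun.compare_and_write(host_index, 0, host_index + 1)

    threads = [threading.Thread(target=worker, args=(h,)) for h in range(num_hosts)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Releasing a lock in this model is just `compare_and_write(block, host, 0)`. The point is the contention pattern: with the reservation, a host updating block 3 waits on a host updating block 7; with compare-and-write, only hosts fighting over the same block ever wait.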

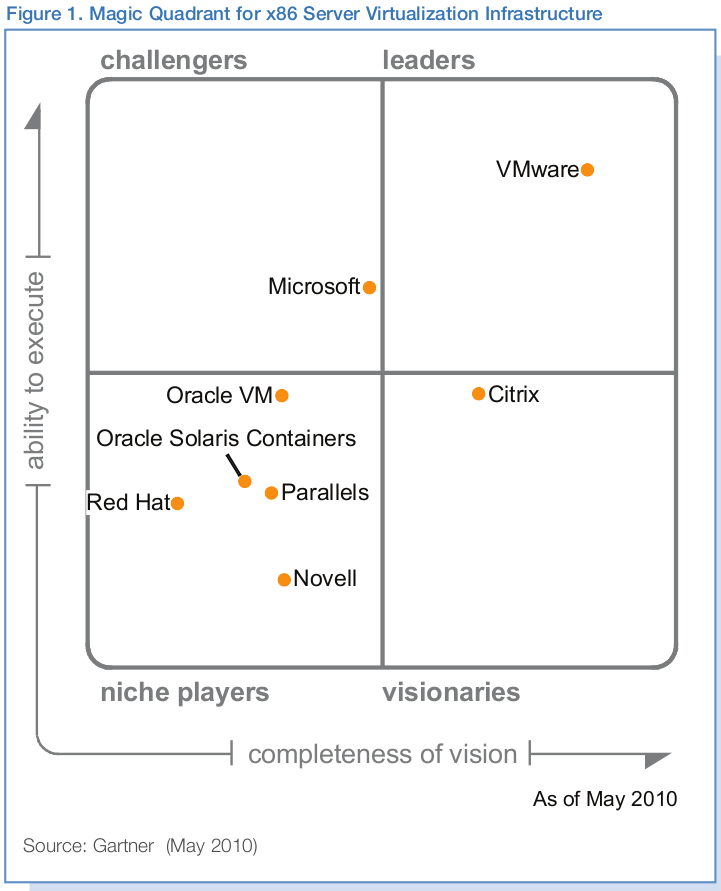

Another graphic I thought was kind of funny is the current Gartner Group "Magic Quadrant" for VMware, which someone linked to in a fairly recent post. I don't rely on Gartner myself, but I did find how lopsided the situation is for VMware quite amusing.

I've been using VMware since before 1.0; I still have my VMware 1.0.2 CD for Linux. I deployed VMware GSX to production for an e-commerce site in 2004, but I didn't start using ESX until 3.0 came out (from what I've read about the capabilities of previous versions, I'm kinda glad I skipped them 🙂). It's got to be the most solid piece of software I've ever used, besides Oracle I suppose. I really, honestly cannot remember it ever crashing. I'm sure it has, but it's been so rare that I have no memory of it. It's not flawless by any means, but it's solid. And VMware has done a lot to build up my loyalty over the past, what is it now, eleven years? Like most everyone else at the time, I had no idea back then that we'd be doing the things with virtualization that we are today.

I've kept my eye on other hypervisors as they've come around, though even now none of the rest look very compelling. About two and a half years ago my new boss at the time wanted to cut costs and was trying to pressure me into trying the "free" Xen that came with CentOS. He figured a hypervisor is a hypervisor. Well, it's not. I refused. Eventually I left the company, and after I left my two esteemed colleagues (hey Dave and Tycen!) were forced into trying it. They worked on it for a month before giving up and going back to VMware. What a waste of time...

I remember Tycen being pretty excited about Hyper-V at about the same time. Well, at a position he recently held he got to see Hyper-V in all its glory, and he was happy to get out of that position and not have to use Hyper-V anymore.

I do think KVM has a chance, though I think it's too early to use it for anything too serious at this point. I'm sure that's not stopping tons of people from doing it anyway, just like it didn't stop me from running production on GSX way back when. But I suspect that by the time vSphere 5.0 comes out (I'm just guessing here, but probably in the 2012 time frame), KVM will be solid enough as a hypervisor to use in a serious capacity. VMware will of course have a massive edge in management tools and fancy add-ons, but not everyone needs all that stuff (me included). I'm perfectly happy with just vSphere and vCenter (I'd be even happier if there were a Linux version, of course).

I can't help but laugh at the grand claims Red Hat is making for KVM scalability, though. Sorry, I just don't buy that the Linux kernel itself can reach such heights and be solid and scalable, let alone a hypervisor running on top of Linux (and before anyone asks: NO, ESX does NOT run on Linux).

I love Linux. I use it every day on my servers, desktops, and laptops, and have for more than a decade. Despite all the defectors to the Mac platform, I still use Linux 🙂 (I honestly tried a MacBook Pro for a couple of weeks recently and just couldn't get it to a usable state).

Just because a system boots with X CPUs and Y amount of memory doesn't mean it can scale effectively to use them. I'm sure Linux will get there some day, but I believe it's a ways off.