I was an Exanet customer a few years ago up until they crashed. They had a pretty nice NFS cluster for scale out, well at least it worked well for us at the time and it was really easy to manage.

Dell bought them over two years ago and hired many of the developers and have been making the product better I guess over the past couple of years. Really I think they could of released a product – wait for it – a couple of years ago given that Exanet was simply a file system that ran on top of CentOS 4.x at the time. Dell was in talks with Exanet at the time they crashed to make Exanet compatible with an iSCSI back end (because really who else makes a NAS head unit that can use iSCSI as a back end disk). So even that part of the work was pretty much done.

It was about as compatible as you could get really. It would be fairly trivial to certify it against pretty much any back end storage. But Dell didn’t do that, they sat on it making it better(one would have to hope at least). I think at some point along the line perhaps even last year they released something in conjunction with Equallogic – I believe that was going to be their first target at least, but with so many different names for their storage products I’m honestly not sure if it has come out yet or not.

Anyways that’s not the point of this post.

Exanet clustering, as I’ve mentioned before was sort of like 3PAR for file storage. It treated files like 3PAR treats chunklets. It was highly distributed (but lacked data movement and re-striping abilities that 3PAR has had for ages).

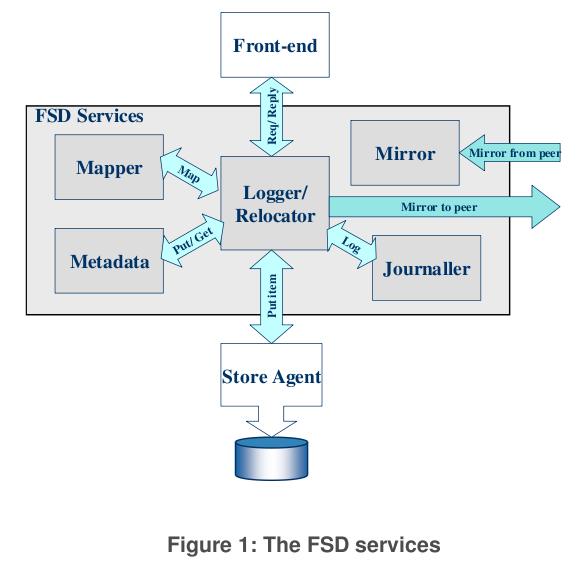

Exanet File System Daemon - a software controller for files in the file system, typically one per CPU core, a file had a primary FSD and a secondary FSD. New files would be distributed evenly across all FSDs.

One of the areas where the product needed more work I thought was being able to scale up more. It was a 32-bit system – so inherited your typical 32-bit problems like memory performance going in the tank when you try to address large amounts of memory. When their Sun was about to go super nova they told me they had even tested up to 16-node clusters on their system, they could go higher there just wasn’t customer demand.

3PAR too was a 32-bit platform for the longest time, but those limitations were less of an issue for it because so much of the work was done in hardware – it even has physical separation of the memory used for the software vs the data cache. Unlike Exanet which did everything in software, and of course shared memory between the OS and data cache. Each FSD had it’s own data cache, something like up to 1.5GB per FSD.

Requests could be sent to any controller, any FSD, if that FSD was not the owner of the file it would send a request on a back end cluster interconnect and proxy the data for you, much like 3PAR does in it’s clustering.

I believed it was a great platform to just throw a bunch of CPU cores and gobs of memory at, it runs on a x86-64 PC platform (IBM Dual socket quad core was their platform of choice at the time). 8, 10 and 12 core CPUs were just around the corner, as were servers which could easily get to 256GB or even 512GB of memory. When your talking software licensing costs being in the tens of thousands of dollars – give me more cores and ram, the cost is minimal on such a commodity platform.

So you can probably understand my disappointment when I came across this a few minutes ago, which tries to hype up the upcoming Exanet platform.

- Up to 8 nodes and 1PB of storage (Exanet could do this and more 4 years ago – though in this case it may be a Compellent limitation as they may not support more than two Compellent systems behind a Exanet cluster – docs are un clear) — Originally Exanet was marketed as a system that could scale to 500TB per 2-node pair. Unofficially they preferred you had less storage per pair (how much less was not made clear – at my peak I had around I want to say 140TB raw managed by a 2-node cluster? It didn’t seem to have any issues with that we were entirely spindle bound)

- Automatic load balancing (this could be new – assuming it does what it implies – which the more I think about it I’d be it does not do what I think it should do and probably does the same load balancing Exanet did four years ago which was less load balancing and more round robin distribution)

- Dual processor quad core with 24GB – Same controller configuration I got in 2008 (well the CPU cores are newer) — Exanet’s standard was 16GB at the time but you could get a special order and do 24GB though there was some problem with 24GB at the time that we ran into during a system upgrade I forgot what it was.

- Back end connectivity – 2 x 8Gbps FC ports (switch required) — my Exanet was 4Gbps I believe and was directly connected to my 3PAR T400, queue depths maxed out at 1500 on every port.

- Async replication only – Exanet had block based async replication this in late 2009/early 2010. Prior to that they used a bastardized form of rsync (I never used either technology)

- Backup power – one battery per controller. Exanet used old fashioned UPSs in their time, not sure if Dell integrated batteries into the new systems or what.

- They dropped support for Apple File Protocol. That was one thing that Exanet prided themselves on at the time – they even hired one of the guys that wrote the AFP stack for Linux, they were the only NAS vendor (that I can recall) at the time that supported AFP.

- They added support for NDMP – something BlueArc touted to us a lot at the time but we never used it, wasn’t a big deal. I’d rather have more data cache than NDMP.

I mean from what I can see I don’t really see much progress over the past two years. I really wanted to see things like

- 64-bit (the max memory being 24GB implies to me still a 32-bit OS+ file system code)

- Large amounts of memory – at LEAST 64GB per controller – maybe make it fancy and make it it flash-backed? RAM IS CHEAP.

- More cores! At least 16 cores per controller, though I’d be happier to see 64 per controller (4x Opteron 6276 @ 2.3Ghz per controller) – especially for something that hasn’t even been released yet. Maybe based on Dell R815 or R820

- At least 16-node configuration (the number of blades you can fit in a Dell blade chassis(perhaps running Dell M620), not to mention this level of testing was pretty much complete two and a half years ago).

- SSD Integration of some kind – meta data at least? There is quite a bit of meta data mapping all those files to FSDs and LUNs etc.

- Clearer indication that the system supports dynamic re-striping as well as LUN evacuation (LUN evacuation especially something I wanted to leverage at the time – as the more LUNs you had the longer the system took to fail over. In my initial Exanet configuration the 3PAR topped out at 2TB LUNs, later they expanded this to 16TB but there was no way from the Exanet side to migrate to them, and Exanet being fully distributed worked best if the back end was balanced so it wasn’t a best practice to have a bunch of 2TB LUNs then start growing by adding 16TB LUNs you get the idea) – the more I look at this pdf the less confident I am in them having added this capability (that PDF also indicates using iSCSI as a back end storage protocol).

- No clear indication that they support read-write snapshots yet (all indications point to no). For me at the time it wasn’t a big deal, snapshots were mostly used for recovering things that were accidentally deleted. They claim high performance with their redirect on write – though in my experience performance was not high. It was adequate with some tuning, they claimed unlimited snapshots at the time, but performance did degrade on our workloads with a lot of snapshots.

- A low end version that can run in VMware – I know they can do it because I have an email here from 2 years ago that walks you through step by step instructions installing an Exanet cluster on top of VMware.

- Thin provisioning friendly – Exanet wasn’t too thin provisioning friendly at the time Dell bought them – no indication from what I’ve seen says that has changed (especially with regards to reclaiming storage). The last version Exanet released was a bit more thin provisioning friendly but I never tested that feature before I left the company, by then the LUNs had grown to full size and there wasn’t any point in turning it on.

I can only react based on what I see on the site – Dell isn’t talking too much about this at the moment it seems, unless perhaps your a close partner and sign a NDA.

Perhaps at some point I can connect with someone who has in depth technical knowledge as to what Dell has done with this fluid file system over the past two years, because really all I see from this vantage point is they added NDMP.

I’m sure the code is more stable, easier to maintain perhaps, maybe they went away from the Outlook-style GUI, slapped some Dell logos on it, put it on Dell hardware.

It just feels like they could of launched this product more than two years ago minus the NDMP support (take about 1 hour to put in the Dell logos, and say another week to certify some Dell hardware configuration).

I wouldn’t imagine the SpecSFS performance numbers would of changed a whole lot as a result, maybe it would be 25-35% faster with the newer CPU cores (those SpecSFS results are almost four years old). Well performance could be boosted more by the back end storage. Exanet used to use the same cheap crap LSI crap that BlueArc used to use (perhaps still does in some installations on the low end). Exanet even went to the IBM OEM version of LSI and wow have I heard a lot of horror stories about that too(like entire arrays going off line for minutes at a time and IBM not being able to explain how/why then all of a sudden they come back as if nothing happened). But one thing Exanet did see time and time again, performance on their systems literally doubled when 3PAR storage was used (vs their LSI storage). So I suspect fancy Compellent tiered storage with SSDs and such would help quite a bit in improving front end performance on SpecSFS. But that was true when the original results were put out four years ago too.

What took so long? Exanet had promise, but at least so far it doesn’t seem Dell has been able to execute on that promise. Prove me wrong please because I do have a soft spot for Exanet still 🙂