I was an Exanet customer a few years ago, up until they crashed. They had a pretty nice scale-out NFS cluster; at least it worked well for us at the time, and it was really easy to manage.

Dell bought them over two years ago, hired many of the developers, and has presumably been making the product better over the past couple of years. Really, I think they could have released a product – wait for it – a couple of years ago, given that Exanet was simply a file system that ran on top of CentOS 4.x at the time. At the time they crashed, Dell was in talks with Exanet about making Exanet compatible with an iSCSI back end (because really, who else makes a NAS head unit that can use iSCSI as back-end disk?). So even that part of the work was pretty much done.

It was about as compatible as you could get, really. It would be fairly trivial to certify it against pretty much any back-end storage. But Dell didn’t do that; they sat on it, making it better (one would hope, at least). I think at some point, perhaps even last year, they released something in conjunction with EqualLogic. I believe that was going to be their first target, at least, but with so many different names for their storage products I’m honestly not sure whether it has come out yet.

Anyways that’s not the point of this post.

Exanet clustering, as I’ve mentioned before, was sort of like 3PAR for file storage. It treated files the way 3PAR treats chunklets. It was highly distributed (but lacked the data movement and re-striping abilities that 3PAR has had for ages).

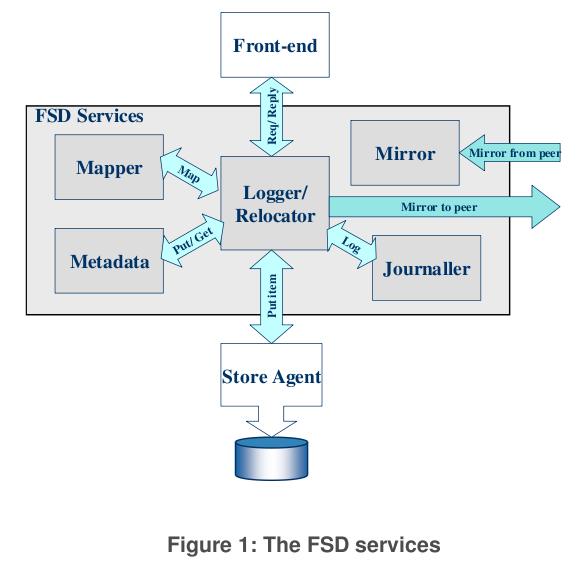

FSD (Exanet File System Daemon): a software controller for files in the file system, typically one per CPU core. Each file had a primary FSD and a secondary FSD, and new files were distributed evenly across all FSDs.
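To make the placement idea concrete, here is a minimal Python sketch of round-robin placement with a primary and a secondary FSD per file. It is a toy model, not Exanet code: the `FSD`, `Placement` and `build_fsds` names are hypothetical, and putting the secondary on a different node than the primary is my assumption about what a secondary is for.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class FSD:
    node: int  # cluster node (controller) hosting this daemon
    core: int  # CPU core it runs on (one FSD per core)


def build_fsds(nodes: int, cores_per_node: int) -> list[FSD]:
    # Interleave so that neighbouring entries in the list sit on different nodes.
    return [FSD(n, c) for c in range(cores_per_node) for n in range(nodes)]


class Placement:
    """Hypothetical round-robin placement of new files onto FSDs."""

    def __init__(self, fsds: list[FSD]):
        if len({f.node for f in fsds}) < 2:
            raise ValueError("a secondary on another node needs at least two nodes")
        self.fsds = fsds
        self.next_index = 0
        self.owners: dict[str, tuple[FSD, FSD]] = {}

    def create(self, path: str) -> tuple[FSD, FSD]:
        count = len(self.fsds)
        i = self.next_index % count
        self.next_index += 1
        primary = self.fsds[i]
        # Secondary: the next FSD in the list that lives on a different node
        # (an assumption), so one controller failure cannot take out both.
        secondary = next(
            self.fsds[(i + step) % count]
            for step in range(1, count)
            if self.fsds[(i + step) % count].node != primary.node
        )
        self.owners[path] = (primary, secondary)
        return primary, secondary


if __name__ == "__main__":
    placement = Placement(build_fsds(nodes=2, cores_per_node=8))
    for n in range(1600):
        placement.create(f"/vol0/file{n}")
    per_fsd = Counter(primary for primary, _ in placement.owners.values())
    print(len(per_fsd), set(per_fsd.values()))  # 16 {100}
```

With 2 nodes of 8 cores you get 16 FSDs, and 1,600 new files land exactly 100 per FSD. This only distributes at create time; nothing here moves files afterwards.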

One of the areas where I thought the product needed more work was the ability to scale up. It was a 32-bit system, so it inherited the typical 32-bit problems, like memory performance going into the tank when you try to address large amounts of memory. When their sun was about to go supernova, they told me they had tested clusters of up to 16 nodes on their system; they could go higher, there just wasn’t customer demand.

3PAR too was a 32-bit platform for the longest time, but those limitations were less of an issue for it because so much of the work was done in hardware; it even physically separates the memory used for the software from the data cache. Exanet, by contrast, did everything in software and of course shared memory between the OS and the data cache. Each FSD had its own data cache, something like up to 1.5GB per FSD.
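Some back-of-the-envelope arithmetic on why a 32-bit design pushes you toward many smaller per-process caches. The 4 GiB per-process limit, the common 3G/1G user/kernel split and PAE’s 36-bit physical addressing are standard 32-bit x86 Linux facts; that Exanet’s FSDs were laid out this way is my assumption, not something Exanet documented.

```python
GIB = 2**30

virtual_per_process = 2**32   # 32-bit pointers: 4 GiB of virtual address space per process
user_space = 3 * GIB          # typical 32-bit Linux 3G/1G user/kernel split
pae_physical = 2**36          # PAE widens *physical* addresses to 36 bits

fsd_cache = 1.5 * GIB         # per-FSD cache figure quoted above
fsds_per_node = 8             # dual-socket quad-core, one FSD per core

print(virtual_per_process / GIB)        # 4.0
print(pae_physical / GIB)               # 64.0
print(fsd_cache < user_space)           # True: one FSD's cache fits in its own process
print(fsds_per_node * fsd_cache / GIB)  # 12.0: well past 4 GiB for the whole node
```

A node full of FSD caches adds up to far more than one 32-bit process can map, so a design like this typically spreads it across many processes on a PAE-enabled kernel.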

Requests could be sent to any controller and any FSD. If that FSD was not the owner of the file, it would send a request over a back-end cluster interconnect and proxy the data for you, much like 3PAR does in its clustering.
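A toy Python sketch of that proxy path, simplified to whole nodes rather than individual FSDs. The `Node` class and the `owner_of` lookup table are hypothetical stand-ins for however Exanet actually tracked ownership; the point is only that any node accepts the request and forwards it when it is not the owner.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A NAS controller in a toy cluster (one owner per node, not per FSD)."""
    name: str
    files: dict[str, bytes] = field(default_factory=dict)  # data for files this node owns
    peers: dict[str, "Node"] = field(default_factory=dict, repr=False)  # via interconnect
    proxied: int = 0  # requests this node forwarded on a client's behalf

    def read(self, path: str, owner_of: dict[str, str]) -> bytes:
        owner = owner_of[path]
        if owner == self.name:
            return self.files[path]  # we own it: serve locally
        self.proxied += 1            # not ours: fetch from the owner and relay the data
        return self.peers[owner].read(path, owner_of)


if __name__ == "__main__":
    a, b = Node("a"), Node("b")
    a.peers["b"], b.peers["a"] = b, a
    b.files["/vol0/report.csv"] = b"data"
    owner_of = {"/vol0/report.csv": "b"}  # hypothetical ownership table
    print(a.read("/vol0/report.csv", owner_of), a.proxied)  # b'data' 1
```

The `proxied` counter gives a rough sense of how much traffic crosses the interconnect when clients are not steered to the owning node.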

I believed it was a great platform to throw a bunch of CPU cores and gobs of memory at; it runs on an x86-64 PC platform (IBM dual-socket quad-core was their platform of choice at the time). 8-, 10- and 12-core CPUs were just around the corner, as were servers that could easily reach 256GB or even 512GB of memory. When you’re talking software licensing costs in the tens of thousands of dollars, give me more cores and RAM; the cost is minimal on such a commodity platform.

So you can probably understand my disappointment when I came across this a few minutes ago, which tries to hype up the upcoming Exanet platform.

- Up to 8 nodes and 1PB of storage (Exanet could do this and more 4 years ago, though in this case it may be a Compellent limitation, as they may not support more than two Compellent systems behind an Exanet cluster; the docs are unclear). Originally Exanet was marketed as a system that could scale to 500TB per 2-node pair. Unofficially they preferred you had less storage per pair (how much less was never made clear). At my peak I had, I want to say, around 140TB raw managed by a 2-node cluster, and it didn’t seem to have any issues with that; we were entirely spindle bound.

- Automatic load balancing (this could be new, assuming it does what it implies. The more I think about it, the more I’d bet it does not do what I think it should, and probably does the same load balancing Exanet did four years ago, which was less load balancing and more round-robin distribution)

- Dual-processor quad-core with 24GB: the same controller configuration I got in 2008 (well, the CPU cores are newer). Exanet’s standard was 16GB at the time, but you could special-order 24GB, though we ran into some problem with 24GB during a system upgrade; I forget what it was.

- Back-end connectivity: 2 x 8Gbps FC ports (switch required). My Exanet was 4Gbps, I believe, and was directly connected to my 3PAR T400, with queue depths maxed out at 1500 on every port.

- Async replication only. Exanet had block-based async replication in late 2009/early 2010. Prior to that they used a bastardized form of rsync (I never used either technology).

- Backup power: one battery per controller. Exanet used old-fashioned UPSes in its time; I’m not sure if Dell integrated batteries into the new systems or what.

- They dropped support for the Apple Filing Protocol. That was one thing Exanet prided themselves on at the time; they even hired one of the guys who wrote the AFP stack for Linux, and they were the only NAS vendor (that I can recall) at the time that supported AFP.

- They added support for NDMP, something BlueArc touted to us a lot at the time, but we never used it and it wasn’t a big deal. I’d rather have more data cache than NDMP.

From what I can see, there hasn’t been much progress over the past two years. I really wanted to see things like:

- 64-bit (the max memory being 24GB implies to me a still-32-bit OS and file system code)

- Large amounts of memory: at LEAST 64GB per controller. Maybe make it fancy and make it flash-backed? RAM IS CHEAP.

- More cores! At least 16 cores per controller, though I’d be happier to see 64 per controller (4x Opteron 6276 @ 2.3GHz), especially for something that hasn’t even been released yet. Maybe based on a Dell R815 or R820.

- At least a 16-node configuration (the number of blades you can fit in a Dell blade chassis, perhaps running Dell M620s; not to mention this level of testing was pretty much complete two and a half years ago).

- SSD integration of some kind, for metadata at least? There is quite a bit of metadata mapping all those files to FSDs, LUNs, etc.

- Clearer indication that the system supports dynamic re-striping as well as LUN evacuation. LUN evacuation especially was something I wanted to leverage at the time, as the more LUNs you had, the longer the system took to fail over. In my initial Exanet configuration the 3PAR topped out at 2TB LUNs; later they expanded this to 16TB, but there was no way from the Exanet side to migrate to them. And since Exanet, being fully distributed, worked best when the back end was balanced, it wasn’t a best practice to have a bunch of 2TB LUNs and then start growing by adding 16TB LUNs; you get the idea. The more I look at this PDF, the less confident I am that they have added this capability (that PDF also indicates using iSCSI as a back-end storage protocol).

- No clear indication that they support read-write snapshots yet (all indications point to no). For me at the time it wasn’t a big deal; snapshots were mostly used for recovering things that were accidentally deleted. They claim high performance with their redirect-on-write snapshots, though in my experience performance was not high. It was adequate with some tuning. They claimed unlimited snapshots at the time, but performance did degrade on our workloads with a lot of snapshots.

- A low-end version that can run in VMware. I know they can do it because I have an email here from 2 years ago with step-by-step instructions for installing an Exanet cluster on top of VMware.

- Thin provisioning friendly. Exanet wasn’t very thin-provisioning friendly at the time Dell bought them, and nothing I’ve seen indicates that has changed (especially with regard to reclaiming storage). The last version Exanet released was a bit more thin-provisioning friendly, but I never tested that feature before I left the company; by then the LUNs had grown to full size and there wasn’t any point in turning it on.
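The mixed-LUN-size problem from the LUN evacuation item is easy to see in a toy allocator. This Python sketch is not Exanet’s allocator: `place` and `accepting_writes` are hypothetical, the "even" policy stands in for a back end treated as uniform, and the "weighted" policy is a capacity-aware alternative for comparison. Sizes are the 2TB and 16TB LUNs from that item, in GB.

```python
def place(lun_sizes_gb: list[int], total_gb: int, chunk_gb: int, weighted: bool) -> list[int]:
    """Allocate total_gb in chunk_gb pieces across LUNs; return GB used per LUN."""
    used = [0] * len(lun_sizes_gb)
    for _ in range(total_gb // chunk_gb):
        # Only LUNs with room for another chunk can take the next write.
        candidates = [i for i, size in enumerate(lun_sizes_gb) if used[i] + chunk_gb <= size]
        if not candidates:
            raise RuntimeError("back end is full")
        if weighted:
            # Capacity-aware: pick the LUN with the lowest fraction used.
            i = min(candidates, key=lambda j: used[j] / lun_sizes_gb[j])
        else:
            # Even spread: pick the LUN holding the least data, whatever its size.
            i = min(candidates, key=lambda j: used[j])
        used[i] += chunk_gb
    return used


def accepting_writes(lun_sizes_gb: list[int], used: list[int]) -> int:
    """How many LUNs still have room to receive new data."""
    return sum(1 for size, u in zip(lun_sizes_gb, used) if u < size)


if __name__ == "__main__":
    luns = [2000] * 4 + [16000] * 2  # four original 2TB LUNs plus two newer 16TB LUNs
    for weighted in (False, True):
        used = place(luns, total_gb=20000, chunk_gb=100, weighted=weighted)
        print(weighted, used, accepting_writes(luns, used))
    # False [2000, 2000, 2000, 2000, 6000, 6000] 2
    # True [1000, 1000, 1000, 1000, 8000, 8000] 6
```

With an even spread, the 2TB LUNs fill first and all new data ends up on just two LUNs. Capacity weighting keeps all six in play, but even then the two big LUNs hold 80% of the data, which is why a balanced back end was the best practice in the first place.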

I can only react based on what I see on the site; Dell isn’t talking much about this at the moment, it seems, unless perhaps you’re a close partner and sign an NDA.

Perhaps at some point I can connect with someone who has in-depth technical knowledge of what Dell has done with this Fluid File System over the past two years, because really all I see from this vantage point is that they added NDMP.

I’m sure the code is more stable, easier to maintain perhaps, maybe they went away from the Outlook-style GUI, slapped some Dell logos on it, put it on Dell hardware.

It just feels like they could have launched this product more than two years ago, minus the NDMP support (take about 1 hour to put in the Dell logos, and say another week to certify some Dell hardware configurations).

I wouldn’t imagine the SpecSFS performance numbers would have changed a whole lot as a result; maybe it would be 25-35% faster with the newer CPU cores (those SpecSFS results are almost four years old). That said, performance could be boosted more by the back-end storage. Exanet used to use the same cheap LSI crap that BlueArc used to use (and perhaps still does in some low-end installations). Exanet even went to the IBM OEM version of LSI, and wow, have I heard a lot of horror stories about that too (like entire arrays going offline for minutes at a time, IBM not being able to explain how or why, and then all of a sudden they come back as if nothing happened). But one thing Exanet saw time and time again was that performance on their systems literally doubled when 3PAR storage was used (vs. their LSI storage). So I suspect fancy Compellent tiered storage with SSDs and such would help quite a bit in improving front-end performance on SpecSFS. But that was true when the original results were put out four years ago too.

What took so long? Exanet had promise, but at least so far it doesn’t seem Dell has been able to execute on that promise. Prove me wrong please because I do have a soft spot for Exanet still 🙂

Surprising they didn’t make more progress.

Comment by Brandon — June 27, 2012 @ 10:33 am

We had just completed an evaluation about a week before they went into receivership in ’09. The product was interesting, other than one issue: due to an error during ingest, one daemon ended up ‘owning’ 90+% of the files, which they didn’t discover until the imbalance caused performance problems later in the eval.

It is disappointing to see how far the product hasn’t come in the past 2.5 years. It looks like they intend to roll the Ocarina dedupe into the same product, but it seems like that could’ve been done by now too. The more disappointing thing, in my opinion, is seeing two products that were fairly vendor-agnostic (Exanet/Ocarina) rolled into an ‘integrated’ solution that ultimately removes choices from the market, particularly when the integration effort appears to have set them back two years relative to the rest of the market. I may have been interested in either product individually, but there’s basically zero chance I’ll be replacing any existing storage with Dell storage, so they may as well no longer exist for my organization.

Comment by Chuck — June 28, 2012 @ 7:36 am

I agree completely! I too remember being told at one point that they were integrating all of that stuff together, though their documentation is still vague, saying it will be released at some future date. They could have released what they had (to existing customers at the least) a long time ago, given how little progress has seemingly been made. I have a friend at what seems to be a pretty close Dell partner who knows more than me; he tells me there is more to the story and will see what he can tell me, beyond what I’ve found, that is not under NDA. I plan to see him in a couple of weeks, so maybe I’ll get more info then. He was never a direct Exanet customer, though, so he doesn’t have as much insight into what the product did vs. what it appears to be able to do now.

Interesting about your ingest issue. At one point I was curious about how balanced our FSDs were; they gave me a script to run, and it turned out to be pretty balanced. Though our workloads involved creating and deleting lots of files on a continuous basis.
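I don’t have the script Exanet gave me, so this is only a hypothetical Python sketch of the same kind of check: given which FSD owns each file (however you obtain that list), report each FSD’s share and flag any FSD well above an even split. The function names and the 1.5x tolerance are made up for illustration.

```python
from collections import Counter


def fsd_shares(owners: list[str], fsds: list[str]) -> dict[str, float]:
    """Fraction of all files owned by each FSD (FSDs owning nothing show 0.0)."""
    counts = Counter(owners)
    total = len(owners)
    return {fsd: counts[fsd] / total for fsd in fsds}


def overloaded(shares: dict[str, float], tolerance: float = 1.5) -> list[str]:
    """FSDs owning more than `tolerance` times an even share of the files."""
    fair = 1 / len(shares)
    return [fsd for fsd, share in shares.items() if share > tolerance * fair]


if __name__ == "__main__":
    fsds = [f"fsd{i}" for i in range(16)]
    # Healthy: ownership round-robined across all 16 FSDs.
    healthy = [fsds[n % 16] for n in range(16_000)]
    # Skewed: fsd0 ends up owning 90% of the files after a bad ingest.
    skewed = ["fsd0"] * 14_400 + [fsds[1 + n % 15] for n in range(1_600)]
    print(overloaded(fsd_shares(healthy, fsds)))  # []
    print(overloaded(fsd_shares(skewed, fsds)))   # ['fsd0']
```

The skewed case mimics the 90%-on-one-daemon situation described in the previous comment.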

It was sad to see the 3rd-party open NAS platforms die off one by one (or be acquired). There just wasn’t enough margin in selling only the NAS heads; you really had to sell the back-end storage too in order to make money. That went for Exanet, Ibrix, Onstor (LSI couldn’t make it work after they acquired them), and another one HP bought (other than Ibrix) whose name I forget offhand.

Who’s left? Nexenta is out there and seems to be growing quickly. I’m a customer of theirs for two very, very small installations running within VMware (one cluster, one standalone), though I’d much rather have Exanet.

Nexenta has a pretty nasty issue from a management standpoint: if you do anything with snapshots from the GUI/CLI, it has to iterate through each and every snapshot on the system. For my really small system that took more than 4 hours, and meanwhile the management interfaces are basically unresponsive. It’s an active/passive cluster design; active/active is possible in a hacky way by putting different file systems on different controllers, but a volume/file system can’t span controllers. For my particular use case that’s no big deal since the size is so small. I wouldn’t use it for anything too serious in its present state. Also, anyone relying on ZFS from organizations other than Oracle sort of scares me due to support issues. I’m not sure what level of R&D these 3rd-party ZFS vendors have to maintain their products going forward now that OpenSolaris is buried.

Ocarina looked like a pretty cool product; I almost evaluated it a few years ago. I think that product too hasn’t seen the light of day since Dell bought them?

thanks for the comment!

Comment by Nate — June 28, 2012 @ 8:11 am

We’ve used Nexenta in a VMware lab environment, and it worked well for a couple of years. Then, just recently, it began to lock the iSCSI LUNs during any major VM deployment or consolidation operation, which would cause the host and all other VMs to become unresponsive. The LUNs were small enough that the gain from dedupe wasn’t really significant, so we just moved them to our main production array after the 4th Nexenta lockup in as many weeks.

That said, I’m sure we’ll find other uses for it, but it unfortunately doesn’t really compare to the Exanet boxes for us.

Melio has an interesting software product for scale-out NAS on a Windows platform. Symantec used to have a software offering based on the Veritas file system, but at one point they productized the hardware/software and I’m not sure whether you can buy it standalone anymore. The last time I came across it, it appeared they’d rolled it into their hardware for NetBackup. It also looks like MS is doing some work on scale-out NAS clusters in Server 2012, but the supported use cases are limited right now.

I think the Ocarina technology showed up in Dell’s object storage product at some point last year, but I haven’t seen it other than that. Presumably they kept selling the original product to existing customers.

Comment by Chuck — June 29, 2012 @ 1:21 pm

I just had a brief tech call with Nexenta this morning, and one of the comments was sort of funny. When I first deployed Nexenta they said 12GB of RAM was enough for my really small installation; then I had an issue, and they speculated that RAM might be the problem (the root cause ended up being something else) and suggested I upgrade to 24GB, so I did. The person today mentioned they normally recommend a minimum of 48GB. I just looked at the extent of our Nexenta data set and it’s 380GB of written data (so, really tiny!). He thought 24GB would be fine given the size, but I thought it kind of funny that whenever I talk to them, the amount of RAM they want doubles.

Comment by Nate — July 3, 2012 @ 12:07 pm

Hi Nate,

Appreciate your comments on Exanet (now rebranded as FluidFS), and your architectural description is pretty much right on. I run product planning for NAS, formerly of Ocarina, so I can speak to all of the above.

Happy to fill in more details, but here it is in bullet form:

– After the acquisition, we implemented several architectural changes such as moving to shared back-end storage, improvements to metadata architecture, integration of the block/file GUIs, and automated LUN provisioning. But alas things always move more slowly at big companies than one might hope.

– The FluidFS 1.0 products have been in the market for a year and doing well (NX3500, FS7500)

– Our day-1 strategy was focused on adding basic file capabilities to EqualLogic, Compellent, and PowerVault…not particularly focused on Exanet-style Petabyte scale-out initially. We are now expanding that envelope with FS8600 supporting 8 controllers in a cluster.

– The scale of current products (1PB) doesn’t reflect any FluidFS limitations; it’s more a matter of testing and certification. We are also expanding that envelope with each release, and you’ll be seeing multi-PB support in the not-too-distant future. FluidFS has great namespace scalability and can utilize all the capacity of the SAN in a single volume if desired.

– We engineered a purpose-built NAS appliance with a battery built into the controllers, shipping with all 3 new products this fall (NX3600, FS7600, FS8600). Its role is just to drain the write cache upon an outage.

– VMware support did not regress and still works fine. vCenter and VAAI support are getting to be more important these days, though, and those are on the way.

– The legacy Ocarina product was hard to integrate since it was an in-band appliance. The work at Dell is to integrate it as a logical tier (policy-driven) in the FS. I guarantee work is well underway, but just can’t commit to dates at this point. We’re hyper sensitive to anything that touches the data-path, and that partly explains the lengthy development time.

– FluidFS leverages underlying LUN features such as block-tiering, thin provisioning, and capacity balancing. But you’re right, FluidFS doesn’t do its own pre-emptive capacity balancing at this time. That’s one of the things on our list.

– Cache is still 24GB, although we’ll be raising that in future releases. Also, we’re not particularly core bound today, so CPU choice is about aligning performance (NIC, RAM, use-case expectations) and cost.

– We’re very happy with our metadata performance…No need at this time for SSD

– Confirmed our new NAS Appliance supports FCP, iSCSI, 10G, 1G (base-T and SFP+). Details vary by model.

Feel free to reach out if you have more questions. There’s tons of work yet to do, and we’re doing what we can to accelerate that.

Regards, Mike

Comment by Mike Davis — July 16, 2012 @ 8:53 am

Thanks for the info, Mike!

Nice to see there is a purpose-built appliance, as the requirement to have an external UPS greatly complicated many Exanet installs in 3rd-party data centers.

Hopefully snapshot performance can be (or has been) improved, as that was another sore spot on the Exanet file system. I see claims of 10,000-snapshot support on the products you mentioned. Back when I was using the tech, I would have said snapshot limits depend entirely on workload. For our workloads I don’t think I went above, say, 500 snapshots on the ~100TB file system; with large numbers of files created and deleted on a regular basis, the performance of the volumes could degrade quite a bit with many snapshots.
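One plausible contributor to that degradation shows up even in a toy redirect-on-write model: snapshots freeze the block map, new writes go to fresh blocks, and anything a snapshot still references cannot be freed. This Python sketch is a generic illustration, not the Exanet/FluidFS on-disk format; `RowVolume` and its methods are hypothetical.

```python
class RowVolume:
    """Toy redirect-on-write volume: snapshots freeze the map, writes get new blocks."""

    def __init__(self):
        self.live: dict[str, int] = {}             # file -> physical block id
        self.snapshots: list[dict[str, int]] = []  # frozen copies of the live map
        self.next_block = 0

    def write(self, name: str) -> None:
        # Never overwrite in place: allocate a fresh block and repoint the live map.
        self.live[name] = self.next_block
        self.next_block += 1

    def delete(self, name: str) -> None:
        # Drops the live reference only; snapshots may still pin the old block.
        self.live.pop(name, None)

    def snapshot(self) -> None:
        self.snapshots.append(dict(self.live))  # copies the map, not the data

    def blocks_in_use(self) -> int:
        # Has to walk every snapshot map, so this cost grows with snapshot count.
        referenced = set(self.live.values())
        for snap in self.snapshots:
            referenced.update(snap.values())
        return len(referenced)


if __name__ == "__main__":
    vol = RowVolume()
    for day in range(10):
        for n in range(100):
            vol.delete(f"d{day - 1}-{n}")  # churn: drop yesterday's files...
            vol.write(f"d{day}-{n}")       # ...and create today's
        vol.snapshot()
    print(len(vol.live), vol.blocks_in_use())  # 100 1000
```

The live data stays at 100 files while ten snapshots pin 1,000 blocks, and both the space held and the bookkeeping needed to track it grow with the number of snapshots.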

As for CPU cores, my main point in the post (and in previous posts about storage products from other companies, mainly NetApp and EMC) is that adding the extra CPU cores adds almost no cost to the solution, given the margins on the product. As a customer I’d happily pay an extra, say, $2-4k for doubling or tripling CPU power, even if the software for whatever reason can’t directly leverage it today. I’d take the extra CPU horsepower up front in the hope that during the life cycle of the system (which I would expect to be a few years) there would be opportunities to take advantage of it.

But instead the likes of NetApp (I single them out because they disclose their CPU type in the SpecSFS benchmarks) would rather the customer pay an extra $50k+ for a simple CPU/memory upgrade.

I’ll continue to keep an eye out for Exanet stuff..!

thanks again

Comment by Nate — July 29, 2012 @ 1:36 pm

Hey, I think you’re the only person left on the Internet who talks about Exanet… and as of last year, I’ve inherited a pretty busted installation, and this morning it really shit the bed and won’t even get past the mounts_manager startup… can we chat? 🙂

Comment by Dossy Shiobara — March 11, 2013 @ 7:21 pm

Specifically, stage 7 of startup tries to mount `localhost:/int_mnt` onto `/mnt/internal` and fails with a “mount: Stale NFS file handle” error. `showmount -e` shows `/exavol/vol{0,1,2,3}`. Running `exafsscan` on the int_mnt volume doesn’t seem to help.

Comment by Dossy Shiobara — March 11, 2013 @ 7:27 pm

I wish I could help, but I really can’t; I haven’t had an Exanet in a couple of years now. I did have to seek out Exanet support post-collapse, and I managed to get some, but it was really tough. I had to call, beg, plead, and call in every favor I could.

BUT I do know a company that may be able to help. They employ some former Exanet employees including the support engineer that supported me, and have some good knowledge of the system. At the time at least they were even offering formal support contracts that included spare parts etc and “best effort” support (of course given they don’t have the actual code).

When I had to seek out Exanet support from them on one occasion (a really bad problem that wasn’t caused by Exanet), they managed to get the support engineer I knew on site with some spare equipment to help me rebuild the cluster within 24 hours of my calling them. Of course, I suspect this was somewhat of a special case.

The company is Daystrom – I believe the website is http://daystrom.com/

I am not sure whether they still offer extended Exanet support now, though I know for sure that with this guy on staff they have some pretty senior tech talent, provided your problem is not too out of this world.

If you have trouble getting through to someone, or that ends up being the wrong Daystrom, let me know and I can put you in direct contact with the support engineer. I assume the email address you used for your comment is a valid one?

best of luck

Comment by Nate — March 11, 2013 @ 7:30 pm

Thanks, Nate. I looked at Daystrom’s site and they don’t mention Exanet anywhere, but if they have former Exanet employees, that could be promising.

Yes, the email I use with my comments is valid; feel free to pass it along. Basically, the issue is that `localhost:/int_mnt` seems to have disappeared and will no longer mount, and I’m desperately trying to figure out a way to fix this… I don’t even know if it’s possible to fix… I wish I knew what was stored there, and whether it could be reconstructed somehow… 🙁

Anyway, it’s gonna be a long night … been dealing with this since ~9:30 AM this morning …

Comment by Dossy Shiobara — March 11, 2013 @ 7:48 pm

sorry for the late reply, got sidetracked; sending you an email and will CC him

Comment by Nate — March 13, 2013 @ 2:47 pm