This morning I was thinking about the last time I built a computer from scratch, and I think it was about ten years ago, maybe longer – I remember the processor was a Pentium 3 800MHz. It very well may have been almost 12 years ago. Up until around 2004 I had built and rebuilt computers, reusing older parts alongside some newer components, but as far as going out and buying everything from scratch, it was 10-12 years ago.

I had two of them: one was a socket-based system, the other was a “Slot 2”-based system. I also built a couple of systems around dual-slot (Slot 1) Pentium 2 CPUs with the Intel L440GX+ motherboard (probably my favorite motherboard of all time). For those of you who think that I use nothing but AMD, I’ll remind you that aside from the AMD K6-3 series I was an Intel fanboy up until the Opteron 6100 was released. I especially liked the K6-3 for its on-chip L2 cache; combined with 2 megabytes of L3 cache on the motherboard it was quite zippy. I still have my K6-3 CPU in a drawer around here somewhere.

So I decided to build a new computer to move my file serving functions out of my HP xw9400 workstation, which I bought about a year and a half ago, into something smaller. That way I could turn the HP box into something less serious for playing some games on my TV (like WC: Saga!). Maybe I’ll get a better video card for it, I’m not sure.

I have a 3Ware RAID card and 4x2TB disks in my HP box so I needed something that could take that. This is what I ended up going with, from Newegg –

- Chassis: Lian Li PC-Q25B (Mini ITX)

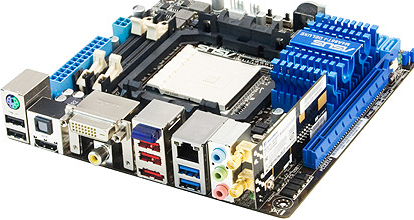

- Motherboard: ASUS M4A88T-I

- CPU: AMD Athlon II X3 3.3GHz 3-core 95W (I wanted a lower wattage chip but Newegg didn’t have any; I figured in the end it won’t matter since the CPU will sit at 99.9% idle 99% of the time)

- Memory: 2x4GB (maxed out the motherboard)

- Power Supply: PC Power & Cooling 500W Silencer Mk III Semi-modular

Seemed like an OK combination. The case is pretty nice, with a 5-port hot swap SATA backplane and support for up to 7 HDDs. PC Power & Cooling (I used to swear by them, so I thought I might as well go with them again) had a calculator that said, for as many HDDs as I had, to get a 500W unit, so I got that.

There are a lot of things here that are new to me, anyway, and it’s been interesting to see how technology has changed since I last did this in the Pentium 3 era.

Mini ITX. Holy crap is that small. I knew it was small based on the dimensions, but it really didn’t set in until I held the motherboard box in my hand and it seemed about the same size as a retail box for a CPU 10 years ago. It’s no wonder the board uses laptop memory. The number of integrated features on it is just insane as well: Ethernet, USB 2 and USB 3, eSATA, HDMI, DVI, PS/2, optical audio output, analog audio out, and even wireless, all crammed into that tiny thing. Oh! Bluetooth is thrown in as well. During my quest to find a motherboard I even came across one that had a parallel port on it – I thought those died a while ago. The thing is just so tiny and packed.

On the subject of motherboards – the very advanced overclocking functions are just amazing. I will not overclock since I value stability over performance, and I really don’t need the performance in this box. I took the overclocking friendliness of this board to hopefully mean higher quality components and the ability to run more stably at stock speeds. Included tweaking features –

- 64-step DRAM voltage control

- Adjustable CPU voltage at 0.00625V increments (?!)

- 64-step chipset voltage control

- PCI Express frequency tuning from 100MHz up to 150MHz in 1MHz increments

- HT Tuning from 100MHz to 550MHz in 1MHz increments

- ASUS C.P.R. (CPU Parameter Recall) – no idea what that is

- Option to unlock the 4th CPU core on my CPU

- Options to run on only 1, 2, or all 3 cores.

Last time I recall overclocking stuff there were maybe 2-3 settings for voltage, and the difference between them was typically at least 5-15%. I remember the only CPU I ever overclocked was a Pentium 200 MMX (o/c to 225MHz – no voltage changes needed, ran just fine).

I seem to recall that, from a PCI perspective, back in my day there were two settings for PCI frequencies: whatever the normal was, and one setting higher (which was something like 25-33% faster).
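To put those tuning ranges in perspective, here is a minimal Python sketch of the arithmetic. The function names are made up for illustration and have nothing to do with the ASUS BIOS itself; the only inputs are the ranges listed above and my old Pentium 200 MMX overclock.

```python
def settings_in_range(low_mhz, high_mhz, step_mhz=1):
    """Count the selectable values from low to high, inclusive."""
    return (high_mhz - low_mhz) // step_mhz + 1


def overclock_percent(stock_mhz, overclocked_mhz):
    """Return how much faster the overclocked speed is, as a percentage."""
    return (overclocked_mhz - stock_mhz) / stock_mhz * 100


# Ranges taken from the feature list above (1MHz increments).
pcie_settings = settings_in_range(100, 150)
ht_settings = settings_in_range(100, 550)

# The Pentium 200 MMX running at 225MHz.
p200_overclock = overclock_percent(200, 225)

print(f"PCI Express frequency settings: {pcie_settings}")
print(f"HT frequency settings: {ht_settings}")
print(f"Pentium 200 MMX overclock: {p200_overclock:.1f}%")
```

So the board offers 51 PCI Express settings and 451 HT settings where the old boards had two or three, and that single 25MHz jump on the Pentium was a 12.5% overclock in one step.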

Memory – wow, it’s so cheap now, I mean 8GB for $45?! The last time I bought memory was for my HP workstation, which requires registered ECC – and it was not so cheap! This system doesn’t use ECC of course. Though given how dense memory has been getting, and with the possibility of memory errors only increasing, I would think at some point soon we would want some form of ECC across the board? It was certainly a concern 10 years ago when building servers with even, say, 1-2GB of memory; now we have many desktops and laptops coming standard with 4GB+. Yet we don’t see ECC on desktops and laptops. I know that is because of cost, but what I find interesting is that there doesn’t appear to be a significant (or perhaps in some cases even noticeable) hit in reliability for these systems with larger amounts of memory and no ECC.

Another thing I noticed was how massive some video cards have become, consuming as many as 3 PCI slots in some cases for their cooling systems. Back in my day the high end video cards didn’t even have heat sinks on them! I was a big fan of Number Nine back in my day and had both their Imagine 128 and Imagine 128 Series 2 cards, with a whole 4MB of memory (512kB chips if I remember right on the series 2, they used double the number of smaller chips to get more bandwidth). Those cards retailed for $699 at the time, a fair bit higher than today’s high end 3D cards (CAD/CAM workstation cards excluded in both cases).

Modular power supplies – the PSU I got was only partially modular but it was still neat to see.

I really dreaded the assembly of the system since it is so small. I knew the power supply was going to be an issue, as someone on Newegg said that you really don’t want a PSU that is longer than 6″ because of how small the case is. I think PC Power & Cooling said mine was about 6.3″ (with wiring harness). It was gonna be tight, and it was tight. I tried finding a shorter power supply in that class range but could not. It took a while to get the cables all wrapped up. My number one fear, of course, was doing all that work, hitting the power button, and finding out there’s a critical problem (bought the wrong RAM, bad CPU, bad board, plugged in the power button the wrong way, whatever).

I was very happy to see that when I turned it on for the first time it lit up and the POST screen came right up on the TV. There was a bad noise coming from one of the fans because a cable was touching it, so I opened it up again and tweaked the cables more so they weren’t touching the fan, and off I went.

I first booted it without any HDs, just to make sure it turned on, the keyboard worked, I could get into the BIOS screen, etc. All that worked fine, so I opened up the case again and installed an old 750GB HD in one of the hot swap slots. Then I hooked up a USB CDROM with a CD of Ubuntu 10.04 64-bit and installed it on the HD.

Since this board has built-in wireless I was looking forward to trying it out – didn’t have much luck. It could see the 50 access points in the area but it was not able to log in to mine for some reason. I later found that it was not getting a DHCP response, so I hard-wired an IP and it worked, but then other issues came up, like DNS not working and very, very slow transfer speeds (as in sub 100 BYTES per second). After troubleshooting for about 20 minutes I gave up and went wired, and it was fast. I upgraded the system to the latest kernel and stuff but that didn’t help the wireless. Whatever, not a big deal, I didn’t need it anyways.

I installed SSH, logged into it from my laptop, shut off X-Windows, and installed the Cerberus Test Suite (something else I used to swear by back in the mid 00s). Fortunately there is a packaged version of it for Ubuntu as, last I checked, it hasn’t been maintained in about seven years. I do remember having problems compiling it on a 64-bit RHEL system a few years ago (though 32-bit worked fine and the resulting binaries worked fine on 32-bit too).

Cerberus Test Suite (or ctcs as I call it) is basically a computer torture test. A very effective one, the most effective I’ve ever used myself. I found that if a computer can survive my custom test (which is pretty basic) for 6 hours then it’s good; I’ve run the tests for as long as 72 hours and never saw a system fail after the 6 hour mark. Normally a failure would show up within a few minutes to a couple of hours. It would find problems with memory that memtest wouldn’t find after 24 hours of testing.

What Cerberus doesn’t do is tell you what failed or why; if your system just freezes up you still have to figure it out. On one project I worked on that had a lot of “white box” servers in it, we deployed them about a rack at a time, and I would run this test. Maybe 85% of them would pass, and the others had some problem, so I told the vendor: go fix it, I don’t know what it is, but these are not behaving like the others, so I know there is an issue. Let them figure out what component is bad (90% of the time it was memory).

So I fired up ctcs last night and watched it for a few minutes, wondering if there was enough cooling in the box to keep it from bursting into flames. To my delight it ran great, with the CPU topping out at around 54C (honestly have no idea if that is good or not, I think it is OK though). I ran it for 6 hours overnight and there were no issues when I got up this morning. I fired it up again for another 8 hours (the test automatically terminates after a predefined interval).

I’m not testing the HD, because it’s just a temporary disk until I move my 3ware stuff over. I’m mainly concerned about the memory and CPU/MB/cooling. The box is still running silent (I have other stuff in my room so I’m sure it makes some noise, but I can’t hear it). It has 4 fans in it including the CPU fan: a 140mm, a 120mm, and the PSU fan (I am not sure how big that one is).

My last memory of ASUS was running an Athlon on an A7A-266 motherboard (I think in 2000); that combination didn’t last long. The IDE controller on the thing corrupted data like nobody’s business. I would install an OS, and everything would seem fine, then the initial reboot kicked in and everything was corrupt. I still felt that ASUS was a pretty decent brand; maybe that was just specific to that board or something. I’m so out of touch with PC hardware at this level, the different CPU sockets, CPU types. I remember knowing everything backwards and forwards in the Socket 7 days, back when things were quite interchangeable.

Then there was my horrible year or two with the ABIT BP6, a somewhat experimental dual socket Celeron system. What a mistake that was, oh what headaches that thing gave me. I think I remember getting it based on a recommendation at Tom’s Hardware Guide, a site I used to think had good information (maybe it does now, I don’t know). But that experience with the BP6 fed back into my thoughts about Tom’s Hardware and I really didn’t go back to that site ever again (sometimes these days I stumble upon it by accident). I noticed a few minutes ago that Abit as a company is out of business now; they seemed to be quite the innovator back in the late 90s.

Maybe this weekend I will move my 3ware stuff over and install Debian (not Ubuntu) on the new system and set it up. While I like Red Hat/CentOS for work stuff, I like Debian for home. It basically comes down to if I am managing it by hand I want Debian, if I’m using tools like CFEngine to manage it I want RH. If it’s a laptop, or desktop then it gets Ubuntu 10.04 (I haven’t seen the nastiness in the newer Ubuntu release(s) so not sure what I will do after 10.04).

I really didn’t think I’d ever build a computer again, until this little side project came up.