That’s a pretty staggering number to me. I had some friends who worked at Crescendo Networks, a company that is now defunct (acquired by F5).

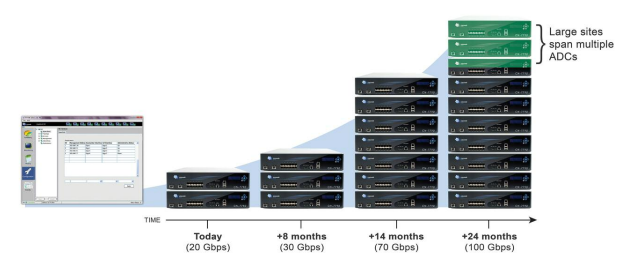

One of their claims to fame was the ability to “cluster” their load balancers, so you could add more boxes on the fly and the system would just go faster. The alternatives were to rip and replace, or to add more boxes and do funky things with DNS load balancing to spread traffic across multiple groups of load balancers.

Crescendo’s scale-out design: too bad the company didn’t last long enough to see anyone take advantage of a 24-month expansion.

Another company, A10 Networks (which is still around, though I think Brocade and F5 are trying to make them go away), introduced similar technology about a year ago called virtual chassis (details are light on their site). There may be other companies with similar offerings too; they all seem to be gunning for the F5 VIPRION, which is a monster system. F5 took a chassis approach that supports up to 4 blades of processing power, and then load balances across the blades themselves to distribute the load. I have a long history with F5 products and know them pretty well, going back to their 4.x code base, which ran on (among other things) generic 4U servers with BSDI and generic motherboards.

I believe Zeus does it as well; I have used Zeus but have not gone beyond a 2-node cluster. I forgot: Riverbed bought them and changed the name to Stingray. I think Zeus sounds cooler.

Crescendo’s cluster implementation was quite basic. It was very similar to how other load balancing companies improved their throughput for streaming media applications: some form of direct response from the server to the client, instead of having the response go back through the load balancer a second time. Here is a page from a long time ago on some reasons why you may not want to do this. I’m not sure how A10 or Zeus do it.

I am a Citrix customer now. I had heard some good things about them over the years but had never tried the product. I found it curious that the likes of Amazon and Google gobble up Netscaler appliances like M&Ms when, for everything else, they seem to go out of their way to build things themselves. I know Facebook is a big user of the F5 VIPRION system as well.

You’d think (or at least I think) that if companies like this could leverage some sort of open source product and augment it with their own developer resources, they would. I’m sure they’ve tried, and maybe they are using such products in certain areas. My information about who is using what could be out of date. I’ve used haproxy (briefly) and nginx (more) as load balancers and wasn’t happy with either product. Give me a real load balancer please! Zeus seems to be a pretty nice platform, and open enough that you can run it on regular server hardware rather than being forced into buying fixed appliances.

Anyways, I had a ticket open with Citrix today about a particular TLS issue regarding SSL renegotiation, after a co-worker brought to my attention that her browser plugins reported our system as vulnerable. During my research I came across this excellent site, which shows a ton of useful info about a given SSL site.

I asked Citrix how I could resolve the issues the site was reporting, and they said the only way to do it was to upgrade to the latest major release of code (10.x). I don’t plan to do that. Resolving this particular issue doesn’t seem like a big deal: it would be nice, but it’s not worth the risk of running the latest code so soon after its release for this one reason alone. Add to that, our site is fronted by Akamai (which actually scored worse on the SSL check than our own load balancers). We even had a “security scan” run against our servers for PCI compliance, and it didn’t pick up anything related to SSL.

Anyways, back on topic. I was browsing through the release notes for the 10.x code branch and saw that Netscaler now supports clustering as well:

You can now create a cluster of nCore NetScaler appliances and make them work together as a single system image. The traffic is distributed among the cluster nodes to provide high availability, high throughput, and scalability. A NetScaler cluster can include as few as 2 or as many as 32 NetScaler nCore hardware or virtual appliances.

With their top-end load balancers topping out at 50Gbps, that comes to 1.6Tbps with 32 appliances. Of course, depending on your traffic patterns you won’t reach top throughput, so taking off 600Gbps seems reasonable, which still leaves 1Tbps of throughput! I really can’t imagine what kind of service could use that sort of throughput at one physical site.
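The back-of-the-envelope math above can be written out as a tiny Python helper. It assumes throughput scales linearly with node count, and the 600Gbps derating is just the rough real-world haircut guessed at above, not a Citrix figure.

```python
def cluster_throughput_gbps(per_node_gbps, nodes, derate_gbps=0.0):
    """Aggregate cluster throughput, assuming perfectly linear scaling,
    minus an optional real-world derating (an assumption, not a spec)."""
    return per_node_gbps * nodes - derate_gbps

peak = cluster_throughput_gbps(50, 32)                        # 1600 Gbps
realistic = cluster_throughput_gbps(50, 32, derate_gbps=600)  # 1000 Gbps
print(f"peak: {peak / 1000} Tbps, realistic: {realistic / 1000} Tbps")
# prints "peak: 1.6 Tbps, realistic: 1.0 Tbps"
```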

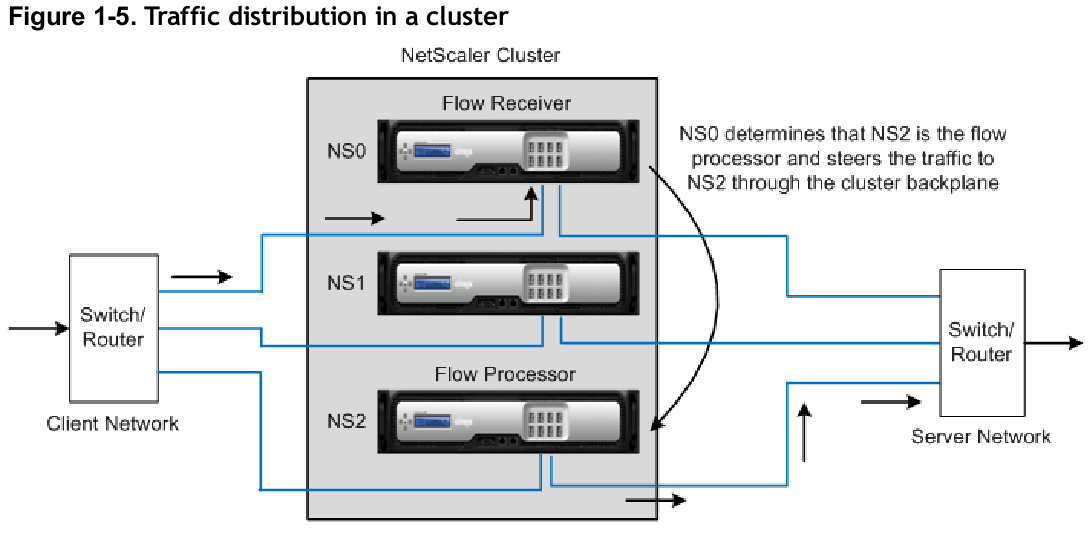

It seems that, at least compared to the Crescendo model, the Citrix model is a lot more like a traditional cluster, probably much closer to a VIPRION design:

The NetScaler cluster uses Equal Cost Multiple Path (ECMP), Linksets (LS), or Cluster Link Aggregation Group (CLAG) traffic distribution mechanisms to determine the node that receives the traffic (the flow receiver) from the external connecting device. Each of these mechanisms uses a different algorithm to determine the flow receiver.

The flow actually reminds me a lot of the 3PAR cluster design.
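To illustrate the ECMP case in the quote, here is a rough Python sketch of how an upstream router or switch might pick the “flow receiver”: hash the connection’s 5-tuple and take it modulo the number of equal-cost next hops (the cluster nodes). The CRC32-modulo hash and the node names are hypothetical stand-ins; real switches and routers use their own hash functions, and this is not NetScaler’s code.

```python
import zlib

def flow_receiver(src_ip, src_port, dst_ip, dst_port, proto, nodes):
    """Pick a cluster node for a flow by hashing its 5-tuple, ECMP-style.

    Assumption: CRC32 modulo node count stands in for the upstream
    device's real hash. Every packet of the same flow hashes identically,
    so the whole flow lands on the same node.
    """
    key = f"{src_ip}|{src_port}|{dst_ip}|{dst_port}|{proto}".encode()
    return nodes[zlib.crc32(key) % len(nodes)]

nodes = ["ns1", "ns2", "ns3", "ns4"]  # hypothetical cluster node names
print(flow_receiver("198.51.100.7", 51512, "203.0.113.10", 443, "tcp", nodes))
```

The key property is determinism per flow: different connections spread across the nodes, but all packets of one connection keep arriving at the same flow receiver.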

My Thoughts on Netscaler

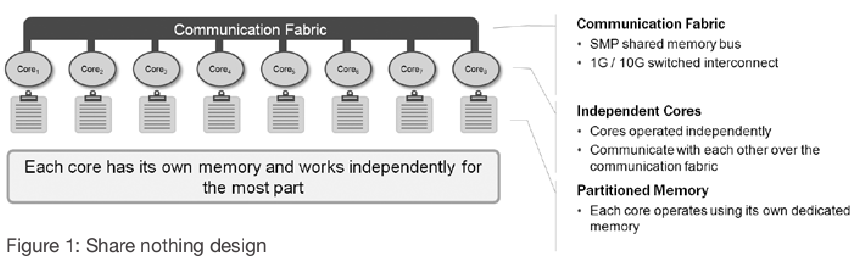

My experience so far with the Netscalers is mixed. Some things I really like: an integrated, mature SSL VPN (note I said mature! Well, at least for Windows; there is nothing for Linux, and their Mac client is buggy and incomplete), application-aware MySQL and DNS load balancing, and a true 64-bit multithreaded, shared-memory design. I also really like their capacity on demand offering. These boxes are always CPU bound, so having the option to buy a technically lower-end box with the exact same CPU setup as a higher-end box (one rated for 2x the throughput) is really nice. It means I can turn on more of those CPU-heavy features without having to fork over the cash for a bigger box.

F5, on the other hand, at least last I checked, was for the most part still running 32-bit TMOS (on top of 64-bit Linux kernels), using a multi-process design instead of a multithreaded one. So they were forced to add some hacks to load balance across multiple CPUs in the same physical load balancer to get the system to scale further. Over the years there have been limitations on what could actually be distributed over multiple cores and which features were locked to a single core, though as time has gone on they have addressed most of the ones I am aware of. One in particular I remember (which may be fixed now, I’m not sure; I would be curious to know whether and how it was fixed) was that each CPU core had its own local memory with no knowledge of the other CPUs. That meant when doing HTTP caching, each CPU had to cache the content individually, massively duplicating the cache and slashing the effectiveness of the memory on the box. This was further compounded by the 32-bitness of TMM itself and its limited ability to address larger amounts of memory.
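To make the cache duplication effect concrete, here is a toy Python model (not F5’s actual code). It assumes each connection is pinned to a core by a hypothetical `client_id % cores` rule and that caches are unbounded, so it only counts how many copies of the same hot objects end up in memory with per-core caches versus one shared cache.

```python
from itertools import product

def cache_entries(requests, cores, shared):
    """Return (total cached entries, unique objects) after serving requests.

    requests: iterable of (client_id, object_key) pairs.
    Assumptions: connections are pinned to a core by client_id % cores,
    and caches never evict, so only duplication is measured.
    """
    caches = [set()] if shared else [set() for _ in range(cores)]
    for client_id, key in requests:
        core = 0 if shared else client_id % cores
        caches[core].add(key)
    total = sum(len(c) for c in caches)
    unique = len(set().union(*caches))
    return total, unique

# 8 hypothetical clients all fetching the same 4 hot objects.
requests = list(product(range(8), ["a", "b", "c", "d"]))
print(cache_entries(requests, cores=4, shared=False))  # (16, 4)
print(cache_entries(requests, cores=4, shared=True))   # (4, 4)
```

With 4 cores, the per-core design stores every hot object 4 times, so the same amount of RAM holds a quarter as much distinct content.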

In any case, the F5 design is somewhat arcane; they chose to bolt on software features early on instead of rebuilding the core. The strategy seems to have paid off from a market share and profits standpoint; it’s just that from a technical standpoint it’s kinda lame 🙂

To be fair, there are some features available in the older legacy Citrix Netscaler code that are not available in the multithreaded code.

Things I don’t like about the Netscaler include their Java GUI, which is slow as crap (they are working on an HTML5 GUI; maybe that is in v10?). It can literally take about 4 minutes to load up all of my service groups (the Citrix term for F5 pools), while with F5 I can load them in about half a second. I also think the separation of services with regard to content switching on Citrix is, well, pretty weird to say the least. If I want to do content filtering, I have to have an internal virtual server and an external virtual server; the external one does the content filtering and forwards to the internal one. With F5 it was all in one (same with Zeus). The terminology has been difficult to adjust to, given my F5 (and some Zeus) background.

I do miss the Priority Activation feature F5 has; there is no similar feature on Citrix as far as I know (well, I think you can technically do it, but the Netscaler architecture makes it a lot more complex). This feature lets you have multiple groups of servers within a single pool at different priorities. By default the load balancer sends traffic to the highest (or lowest? I forget, it’s been almost 2 years) priority group of servers; if that group fails it goes to the next, and then the next. I think you can even specify the minimum number of nodes to have in a group before it fails over entirely to the next group.
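As a rough illustration of the idea, here is a minimal Python sketch of priority-group selection. It assumes a higher number means a more preferred group and that lower-priority groups are pulled in only while fewer than `min_active` healthy members have been collected; `Member`, `active_members`, and the server names are hypothetical, not F5’s API.

```python
from dataclasses import dataclass

@dataclass
class Member:
    name: str
    priority: int   # assumption: higher number = more preferred group
    up: bool = True

def active_members(pool, min_active=1):
    """Return the pool members eligible for traffic.

    Groups are walked from highest priority down; a lower group is only
    added while fewer than min_active healthy members have been selected.
    """
    selected = []
    for prio in sorted({m.priority for m in pool}, reverse=True):
        if len(selected) >= min_active:
            break
        selected.extend(m for m in pool if m.priority == prio and m.up)
    return selected

pool = [Member("web1", 10), Member("web2", 10), Member("dr1", 5), Member("dr2", 5)]
print([m.name for m in active_members(pool, min_active=2)])  # ['web1', 'web2']
pool[1].up = False  # web2 fails its health check
print([m.name for m in active_members(pool, min_active=2)])  # ['web1', 'dr1', 'dr2']
```

Once the preferred group drops below the minimum, the next group is activated alongside whatever healthy members remain.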

Not being able to re-use objects with the default scripting language just seems brain dead to me, so I am using the legacy scripting language.

So I do still miss F5 for some things and Zeus for some other things, though Netscaler is pretty neat in its own respects. F5 obviously has a strong presence in and around Seattle, where I spent the last decade of my life, since it was founded and has its HQ there. I still have a bunch of friends over there. I’ve heard some pretty amazing stories come out of that place; they grew so fast it’s hard to believe they are still in one piece after all they’ve been through. What a mess!

If you want to futz around with a Netscaler, you can download their virtual appliance (VPX) for free. I believe it has a default throughput limit of 1Mbps, with upgrades available to as high as 3Gbps. Last I recall, though, the VPX is limited to two CPU cores. F5 and A10 have virtual appliances as well.

Crescendo did not have a virtual appliance, which is one of the reasons I wasn’t particularly interested in pursuing their offering back when they were around. The inside story of the collapse of Crescendo is the stuff geek movies are made of. I won’t talk about it here, but it was just amazing to hear what happened.

It’s pretty interesting to see how the load balancing market varies by region, and where various players are stronger vs. weaker. Radware, for example, is apparently strong on the east coast but has much less presence in the west. Citrix did a terrible job marketing the Netscaler for many years (a point they acknowledged to me), and then there are those folks out there who still use Cisco (?!), which just confuses me. Then there are the smaller guys like A10, Zeus, and Brocade. Foundry Networks (acquired by Brocade, of course) really did themselves a disservice when they let their load balancing technology sit for a good five years between hardware refreshes; from what I’ve seen and heard, they haven’t been able to recover from that. They tried to pitch me their latest iron a couple of years ago after it came out, only for me to find out that it didn’t support SSL at the time. I mean, come on! Of course they later added that missing feature, but it was too late for my projects.

And in case you didn’t know, Extreme used to have a load balancer WAY BACK WHEN. I never used it, and I forget what it was called off the top of my head. Extreme also partnered with F5 in the early days and integrated F5 code into their network chipsets so their switches could do load balancing too (the last switch with this was released almost a decade ago, and nothing since). The code in the chipsets was very minimal, though, and not useful for anything serious.