..even I was skeptical, though I knew that with support it probably wouldn’t be a big deal: a disk fails and it gets replaced in a few hours. When we were looking to do a storage refresh last year, I proposed going entirely SATA for our main storage array because we had a large amount of inactive data: stuff we write to disk, read back that same day, and then keep around for a month or so before deleting. So in theory it sounded like a good option. We get lots of disks to give us the capacity, and those same disks give us enough I/O to do real work too.

I don’t think you can do this with most storage systems; their architectures don’t support it nearly as well. On this point, the competition tried to call me out on my SATA solution last year, citing reliability and performance concerns. They later backtracked on their statements after I pointed them to some documentation their own storage architects had written, which said the exact opposite.

It’s been just over a year since we had our 3PAR T400 installed with 200x750GB SATA disks, which are Seagate ST3750640NS if you are curious.

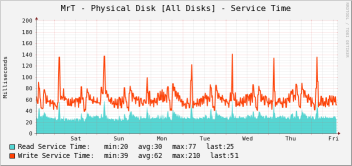

Our disks are hit hard, very hard. Almost daily we exceed 90 IOPS/disk at some point during the day, which with large I/O sizes drives the disks’ response time way up; I have another blog entry on that. Fortunately the controller cache is able to absorb that hit. But the point is our disks are not idle, they get slammed 24/7.

Average Service time across all spindles on my T400

How many disk failures have we had in the past year? One? two? three?

Zero.
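Zero failures in one year is not the same as a true failure rate of zero, so here is a rough sketch of what the observation can support. It assumes (my assumptions, not measured facts) that all 200 disks ran for the full year and that they fail independently with the same annual probability. The function name `afr_upper_bound` is made up for illustration.

```python
def afr_upper_bound(disks: int, confidence: float = 0.95) -> float:
    """Upper bound on the per-disk annual failure probability p,
    given that zero of `disks` drives failed in one year.

    Assumes independent, identically distributed failures.
    P(zero failures) = (1 - p) ** disks; the bound is the largest p
    for which that probability is still at least (1 - confidence).
    """
    if disks <= 0:
        raise ValueError("disks must be positive")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return 1.0 - (1.0 - confidence) ** (1.0 / disks)


DISKS = 200  # SATA drives in the array, per the post

exact = afr_upper_bound(DISKS)
rule_of_three = 3.0 / DISKS  # common shortcut for the same 95% bound

print(f"95% upper bound on annual failure rate: {exact:.2%}")
print(f"rule-of-three approximation:            {rule_of_three:.2%}")
```

Under those assumptions, a clean year on 200 disks is consistent with a true annual failure rate anywhere from zero up to roughly 1.5%, so it is a good sign rather than proof.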

For SATA drives, even enterprise SATA drives, zero is to me a shocking number given the load these disks are put under on a daily basis. Why is it zero? I think a good part of it has to do with the advanced design of the 3PAR disk chassis, something they don’t really talk about outside of their architecture documentation. I think it is quite a unique design in their enterprise S and T-class systems (not available in their E or F-class systems). The biggest advantages of these chassis are, I believe, twofold:

- Vibration absorbing drive sleds – I’ve read in several places that vibration is the #1 cause of disk failure

- Switched design – no loops, each drive chassis is directly connected to the controllers, and each disk has two independent switched connections to a midplane in the drive chassis. Last year we had two separate incidents on our previous storage array where, due to the loop design, a single disk failure took down the entire loop, causing the array to go partially offline (an outage) despite there being redundant loops on the system. I have heard stories more recently of other similar arrays doing the same thing.

There are other cool things but my thought is those are the two main ones that drive an improvement in reliability. They have further cool things like fast RAID rebuild which was a big factor in deciding to go with SATA on their system, but even if the RAID rebuilds in 5 seconds that doesn’t make the physical disks more reliable, and this post is specifically about physical disk reliability rather than recovering from failure. But as a note I did measure rebuild rate, and for a fully loaded 750GB disk we can rebuild a degraded RAID array in about three hours, with no impact to array system performance.
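As a back-of-the-envelope check on that rebuild figure, the sketch below turns "750GB in about three hours" into an effective throughput. It assumes decimal gigabytes and that the full 750GB of data is reconstructed; it says nothing about how the work is spread across the other disks, and the helper name is made up for illustration.

```python
DISK_BYTES = 750 * 10**9    # 750GB drive, decimal gigabytes (assumed)
REBUILD_SECONDS = 3 * 3600  # "about three hours", taken as exactly three


def rebuild_rate_mb_per_s(nbytes: int, seconds: float) -> float:
    """Effective rebuild throughput in MB/s (1 MB = 10**6 bytes)."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return nbytes / seconds / 10**6


rate = rebuild_rate_mb_per_s(DISK_BYTES, REBUILD_SECONDS)
print(f"effective rebuild rate: {rate:.1f} MB/s")
```

That works out to roughly 69 MB/s of effective rebuild throughput for the array as a whole.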

My biggest complaint about 3PAR at this point is their stupid naming convention for their PDFs. STUPID! FIX IT! I’ve been complaining off and on for years. But in the grand scheme of things…

Not shocked? Well I don’t know what to say. Even my co-worker who managed our previous storage system is continually amazed that we haven’t had a disk die.

Now I’ve jinxed it I’m sure and I’ll get an alert saying a disk has died.

Thanks Nate. It would be interesting to see what SATA failure rates are across all vendors’ arrays. I have to think 3PAR would have the lowest rates, based on anecdotal evidence such as yours and from other customers I’ve spoken to. Of course SATA drives do fail in our systems sometimes.

-marc farley, social media @PAR

Comment by Marc Farley — November 20, 2009 @ 9:39 am

Nice article Nate and nice podcast Marc (Infosmack). Just another happy reader and listener (not a customer… I have a FAS 3460).

Comment by Scott — November 20, 2009 @ 3:00 pm

thanks!! Starting to get the hang of this blogging thing 🙂

Comment by Nate — November 21, 2009 @ 8:58 am

So… three years later, what’s the failure rate?

Comment by Nathan — November 29, 2012 @ 4:32 pm

Hey Nathan –

I’m sorry I can’t give a full answer since I left that company some time ago, but I do recall that the first of those disk drives failed in the spring of 2010.

Comment by Nate — December 4, 2012 @ 8:24 am