Just got done reading this from our friends at The Register.

More than anything else this caught my eye:

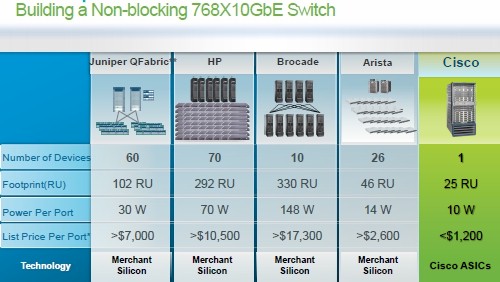

On the surface it looks pretty impressive. It would be interesting to see exactly how Cisco configured the competing products, though: which 60 Juniper devices or 70 HP devices did they use, and how were they connected?

One thing that would have been interesting to call out in such a configuration is the number of logical devices needed for management. For example, I know Brocade’s VDX product is some fancy way of connecting lots of devices, sort of like more traditional stacking, just at a larger scale for ease of management. I’m not sure whether the VDX technology extends to their chassis product, as Cisco’s configuration above seems to imply using chassis switches. I believe Juniper’s QFabric is similar. I’m not sure if HP or Arista have such technology (I don’t believe they do). I don’t think Cisco does, but they don’t claim to need it either with this big switch. So a big part of the question is whether you’re managing that many devices or just managing one. Cost of the hardware/software is only one part of it.

HP recently announced a revamp of their own 10GbE products, at least the 1U variety. I’ve been working off and on with HP people recently and there was a brief push to use HP networking equipment, but they gave up pretty quickly. They mentioned they were going to have “their version” of the 48-port 10-gig switch soon, but it turns out it’s still a ways away: early next year is when it’s supposed to ship. Even if I wanted it (which I don’t), it’s too late for this project.

I dug into their fact sheet, which was really light on information, to see what, if anything, stood out with these products. I did not see anything that stood out in a positive manner, but I did see this, which I thought was kind of amusing:

Industry-leading HP Intelligent Resilient Framework (IRF) technology radically simplifies the architecture of server access networks and enables massive scalability—this provides up to 300% higher scalability as compared to other ToR products in the market.

Correct me if I’m wrong, but that looks like what other vendors would call Stacking, or Virtual Chassis. It’s an age-old technology, but the key point here was the “up to 300% higher scalability”. Another way of putting it is “at least 50% less scalable” when you’re comparing it to the Extreme Networks Summit X670V (which is shipping, I just ordered some).

The Summit X670 series is available in two models: Summit X670V and Summit X670. Summit X670V provides high density for 10 Gigabit Ethernet switching in a small 1RU form factor. The switch supports up to 64 ports in one system and 448 ports in a stacked system using high-speed SummitStack-V160*, which provides 160 Gbps throughput and distributed forwarding. The Summit X670 model provides up to 48 ports in one system and up to 352 ports in a stacked system using SummitStack-V longer distance (up to 40 km with 10GBASE-ER SFP+) stacking technology.

In short, it’s twice as scalable as the HP IRF feature, because it goes up to 8 devices (56x10GbE each, 448 ports per stack), and HP’s goes up to 4 devices (48x10GbE each, 192 ports per stack, or perhaps they can do 56 too with breakout cables, since both switches have the same number of physical 10GbE and 40GbE ports).
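
For anyone who wants to check my stacking math, here is a rough sketch in Python. The member and port counts are the ones quoted above; the function and variable names are just made up for illustration, and the HP limit of 4 devices is my reading of their fact sheet, not a confirmed spec.

```python
def stack_ports(members, ports_per_member):
    """Total 10GbE ports in a stack of identical switches."""
    return members * ports_per_member

# Figures quoted in the post (HP's 4-member limit is the author's reading).
extreme_ports = stack_ports(members=8, ports_per_member=56)  # Summit X670V
hp_ports = stack_ports(members=4, ports_per_member=48)       # HP IRF, 48-port ToR

member_ratio = 8 / 4                      # how many more devices per stack
port_ratio = extreme_ports / hp_ports     # how many more ports per stack

print(f"Extreme stack: {extreme_ports} ports, HP stack: {hp_ports} ports")
print(f"Devices per stack: {member_ratio:.1f}x, ports per stack: {port_ratio:.2f}x")
```

So "twice as scalable" is counting devices per stack; counted in ports, the gap is a bit wider.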

The list price on the HP switches is WAY high too. The Register calls it out at $38,000 for a 24-port switch. The X670 from Extreme has a list price of about $25,000 for 48 ports (I see it online for as low as about $17k). There was no disclosure of HP’s pricing for their 48-port switch.
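
To put those prices on a per-port basis, here is a quick back-of-the-envelope sketch. The dollar figures are the approximate ones mentioned above (list and street prices move around), and the labels and helper name are only for illustration.

```python
def price_per_port(price_usd, ports):
    """Price divided evenly across the switch's 10GbE ports."""
    return price_usd / ports

# (label, approximate price in USD, 10GbE ports) as quoted in the post
quotes = [
    ("HP 24-port (list, per The Register)", 38_000, 24),
    ("Extreme X670 48-port (list, approx.)", 25_000, 48),
    ("Extreme X670 48-port (online, approx.)", 17_000, 48),
]

per_port = {label: price_per_port(price, ports) for label, price, ports in quotes}

for label, value in per_port.items():
    print(f"{label}: about ${value:,.0f} per port")
```

By this math the HP list price works out to roughly three times the Extreme list price per port.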

Extreme has another 48-port switch which is cheaper (almost half the cost if I recall right, I see it online going for as low as $11,300) but it’s for very specialized applications where latency is really important. If I recall right they removed the PHY (?) from the switch, which dramatically reduces functionality and introduces things like very short cable length limits, but also slashes the latency (and cost). You wouldn’t want to use those for your VMware setup (well, if you were really cost constrained these are probably better than some other alternatives, especially if you’re choosing between this and 1GbE), but you may want them if you’re doing HPC or something with shared memory or high frequency stock trading (ugh!).

The X670 also has (or will have? I’ll find out soon) a motion sensor on the front of the switch, which I thought was curious, but it seems like a neat security feature: being able to tell if someone is standing in front of your switch screwing with it. It also apparently has the ability (or will have the ability) to turn off all of the LEDs on the switch when someone gets near it, and turn them back on when they go away.

(ok back on topic, Cisco!)

I looked at the Cisco slide above and thought to myself: really, can they be that far ahead? I certainly don’t go out on a routine basis and work out how many devices, and how much connectivity between them, I’d need to achieve X number of line rate ports. I’ll keep it simple: if you need a large number of line rate ports, just use a chassis product (you may need a few of them). It is interesting to see though, assuming it’s anywhere close to being accurate.

When I asked myself the question “Can they be that far ahead?” I wasn’t thinking of Cisco. I think I’m up to 7 readers now, and you know me better than that! 🙂

I was thinking of the Extreme Networks Black Diamond X-Series which was announced (note not yet shipping…) a few months ago.

- Cisco claims to do 768 x 10GbE ports in 25U (Extreme will do it in 14.5U)

- Cisco claims to do 10W per 10GbE port (Extreme will do it at 5W/port)

- Cisco claims to do it with 1 device. Well, that’s hard to beat, but Extreme can match them. It’s hard to do it with fewer than one device.

- Cisco’s new top end tops out at a very respectable 550Gbit per slot (Extreme will do 1.2Tb)

- Cisco claims to do it with a list price of $1200/port. I don’t know what Extreme’s pricing will be but typically Cisco is on the very high end for costs.
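
Here is a rough sketch that turns the bullets above into density and power numbers for a full 768-port box. All inputs are the vendor claims quoted in the list (I haven't measured anything), the Extreme figures are for a product that isn't shipping yet, and the names in the code are only for illustration.

```python
PORTS = 768  # 10GbE ports claimed for both chassis

# Vendor-claimed figures from the list above (not measurements).
chassis = {
    "Cisco": {"rack_units": 25, "watts_per_port": 10},
    "Extreme": {"rack_units": 14.5, "watts_per_port": 5},
}

def summarize(ports, rack_units, watts_per_port):
    """Port density per rack unit and total power draw at the claimed W/port."""
    return {
        "ports_per_ru": ports / rack_units,
        "total_watts": ports * watts_per_port,
    }

summary = {name: summarize(PORTS, **spec) for name, spec in chassis.items()}

# Cisco's claimed list price of $1200/port across all 768 ports.
cisco_list_total = PORTS * 1200

for name, stats in summary.items():
    print(f"{name}: {stats['ports_per_ru']:.1f} ports/U, {stats['total_watts']} W total")
print(f"Cisco list price for {PORTS} ports: ${cisco_list_total:,}")
```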

Though I don’t know how Cisco gets to 768 ports, Extreme does it via 40GbE ports and breakout cables (as far as I know), so in reality the X-series is a 40GbE switch (and I think 40GbE only to start with, unless you use the breakout cables to get to 10GbE). It was a little over a year ago that Extreme was planning on shipping 40GbE at a cost of $1,000/port. Certainly the X-series is a different class of product than what they were talking about a while ago, but prices have also come down since.

X-Series is shipping “real soon now”. I’m sure if you ask them they’ll tell you more specifics.

It is interesting to me, and kind of sad, how far Force10 has fallen in the 10GbE area. They seemed to basically build themselves on the back of 10GbE (or at least tried to), but when I look at their current products on the very high end, aside from the impressive little 40GbE switch they have, they seem to top out at 140 line rate 10GbE ports in 21U. Dell will probably do well with them. I’m sure it’ll be a welcome upgrade to those customers using Procurve, uh, I mean PowerConnect? That’s what Dell call(ed) their switches, right?

As much as it pains me, I do have to give Dell some props for doing all of these acquisitions recently and beefing up their own technology base. Whether it’s in storage or networking, they’ve come a long way (more so in storage, need more time to tell in networking). I have not liked Dell myself for quite some time. A good chunk of it is because they really had no innovation, but part of it goes back to the days before Dell shipped AMD chips, when Dell was getting tons of kickbacks from Intel for staying an Intel exclusive provider.

In the grand scheme of things such numbers don’t mean a whole lot. I mean, how many networks in the world can actually push this kind of bandwidth? Outside of the labs I really think any organization would be very hard pressed to need such fabric capacity, but it’s there, and it’s not all that expensive.

I just dug up an old price list I had from Extreme – from late November 2005. A 6-port 10GbE module for their Black Diamond 10808 switch (I had two at the time) had a list price of $36,000. For you math buffs out there that comes to $9,000 per line rate port.

That particular product was oversubscribed (hence it not being $6,000/port), as well as having a mere 40Gbps of switch fabric capacity per slot, or a total of 320Gbps for the entire switch. It was marketed as a 1.2Tb switch, but hardware never came out to push the backplane to those levels. I had to dig into the depths of the documentation to find that little disclosure, and naturally I found it after I purchased. It didn’t matter for us though; I’d be surprised if we pushed more than 5Gbps at any one point! If I recall right the switch was 24U too. My switches were 1GbE only, for cost reasons 🙂
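
For the math buffs, here is roughly how the $9,000 figure falls out. This is a sketch under one assumption of mine: that 40Gbps of fabric per slot means four 10GbE ports can run at line rate. The constant names are just for illustration; the dollar and bandwidth figures are the ones from the old price list and documentation mentioned above.

```python
MODULE_PRICE_USD = 36_000   # 2005 list price of the 6-port 10GbE module
PHYSICAL_PORTS = 6          # 10GbE ports on the module
SLOT_FABRIC_GBPS = 40       # switch fabric capacity per slot
PORT_SPEED_GBPS = 10
CHASSIS_FABRIC_GBPS = 320   # total fabric capacity for the whole switch

# Assumption: ports that fit within the slot's fabric capacity are "line rate".
line_rate_ports = min(PHYSICAL_PORTS, SLOT_FABRIC_GBPS // PORT_SPEED_GBPS)

price_per_physical_port = MODULE_PRICE_USD / PHYSICAL_PORTS
price_per_line_rate_port = MODULE_PRICE_USD / line_rate_ports
oversubscription = PHYSICAL_PORTS * PORT_SPEED_GBPS / SLOT_FABRIC_GBPS
slots = CHASSIS_FABRIC_GBPS // SLOT_FABRIC_GBPS

print(f"Line rate ports per module: {line_rate_ports}")
print(f"Per physical port: ${price_per_physical_port:,.0f}")
print(f"Per line rate port: ${price_per_line_rate_port:,.0f}")
print(f"Oversubscription: {oversubscription:.1f}:1 across {slots} slots")
```

Set that next to the $1200/port list price Cisco is claiming today and the old module was about 7.5 times the cost per line rate port.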

How far we’ve come..