UPDATED (again)

I’ve been holding onto this post until I got confirmation from HP support. Now that I have it, here goes something.

UPDATE 1

A comment from a reader, Karl, got me thinking about this another way, and it turns out I was wrong in my original assessment of what the system was storing for the snapshot. For some reason I thought the system was storing the zeros, when in fact it was storing the data the zeros were replacing.

So I’m a dumbass for thinking that. Homer moment: *doh*

BUT the feature still appears broken. If 200GB of data really was written to the snapshot, the zeros must have overwritten 200GB of non-zero data, and that data should have been reclaimed from the source volume. It was not. Only a tiny fraction was reclaimed (1,536MB of logical storage, or 12 x 128MB chunks). At the very least, the bulk of the data usage should have moved from the source volume to the snapshot. Snapshot space is allocated separately from the source volume, so it’s easy to see which one is using what. CURRENTLY the volume shows 989GB of reserved space on the array. It holds 120GB of written snapshot data and 140GB of file system data, around 260GB in total. In RAID 5 3+1 (three data members per parity member) that should come out to around 347GB of physical space, not 989GB. But reclaiming that space is the job of another feature, thin copy reclamation, which reclaims space from deleted snapshots.
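The capacity arithmetic in the paragraph above is easy to check. Below is a minimal Python sketch. It assumes a RAID 5 3+1 set stores three units of data for every unit of parity, so raw = data × 4/3. It ignores metadata, spare chunklets and any other array overhead.

```python
def raid5_raw_gb(data_gb: float, data_members: int = 3, parity_members: int = 1) -> float:
    """Raw space needed to hold data_gb in a RAID 5 set of data + parity members."""
    return data_gb * (data_members + parity_members) / data_members


if __name__ == "__main__":
    reclaimed_mb = 12 * 128      # twelve 128MB chunks reclaimed
    logical_gb = 120 + 140       # written snapshot data + file system data
    print(reclaimed_mb)                          # 1536
    print(round(raid5_raw_gb(logical_gb), 1))    # 346.7
```

By that rule, 260GB of data needs roughly 347GB raw, which is still a long way from 989GB.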

So, sorry for being a dumbass about the original premise of the post. For some reason my brain got confused by the test results. It wasn’t until Karl’s comment that I thought about it from the other angle.

I am talking to some more technical, non-support people about this on Monday.

And thanks Karl 🙂

UPDATE 2

I got some good information from senior technical folks at 3PAR. It turns out the bulk of my problems come from two bugs:
- one in how part of the system reports raw space utilization, which produces wildly inaccurate numbers;
- one in a space reclamation feature that a software patch on my array had specifically disabled, in order to fix another space reclamation bug.

The fix is to upgrade to a newer code release that resolves that problem for good (I hope?). I doubt I’d ever have gotten that kind of information out of the technical support team.

So in the end it was not much of an issue after all. It was just confusion caused by some bugs, some disabled functionality, and me being stupid.

END UPDATE

Over the years a lot of folks have claimed I am a paid shill for 3PAR, or Extreme, or whatever. All I can say is that I’m not compensated by them for my posts in any direct way. Maybe I get better discounts on occasion; I’m not sure how that stuff factors in. In any case, those benefits go to the companies I work for rather than to me.

I do knock them when I think they need to be knocked, though. Here is something that made me want to knock 3PAR. Well, more than knock: more like kick them in the butt, HARD, and say W T F.

I was a very early adopter of the T-class storage system, getting it in house just a couple of months after it was released. It was their first system with thin built in: the thin reclamation and persistence technology was integrated into the ASIC. Only I couldn’t use it, because the software didn’t exist at the time.

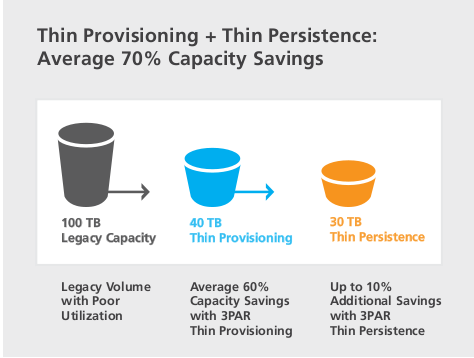

3PAR "Stay Thin" strategy: it wasn't worth $100,000 in licensing for an extra 10% capacity savings on my first big array.

That was kind of sad, but it could have been worse. The competition we were evaluating was Hitachi, which had just released its AMS2000 series, literally about a month after the T-class came out. Hitachi had no thin provisioning support whatsoever on the AMS2000 at launch; that came about seven months later. If you required thin provisioning at the time, you had to buy a USP, or (more common for this scenario, given costs at the time) a USP-V, which supported TP. Then you put the AMS2000 behind it.

Hitachi refused to give us even a ballpark price for TP on the AMS2000, whenever it was going to be released. I didn’t need an exact price. Just tell me: is it going to be $5,000, or $25,000, or maybe $100,000 or more? That should be a fairly simple request, at least from a customer perspective, especially given they already had such licensing in place on their bigger platform. In the end I took that bigger platform’s licensing costs (since they refused to give me those too) and extrapolated what the cost might look like on the AMS line. I got the info from StorageMojo’s price list and basically cut their price in half to account for discounts and such. We obviously ended up not going with HDS, so I don’t know what it would really have cost us.

OK, steering the tangent closer to the topic again... bear with me.

Which got me wondering. Given that it is an ASIC and not an FPGA, they really have to be damn sure it works when they ship the product. Otherwise, a design problem with the chip makes replacing the ASICs an expensive proposition. After all, the CPUs aren’t really in the data path for the stuff flowing through the system. So it would be difficult to work around ASIC faults in software, if it was possible at all.

So I waited, and waited, for the new thin stuff to come out. I figured that since I already had thin provisioning licensed, I would just install the new software and get the new features.

Then it was released, more than a year after I got the T400, but it came with a little surprise. There were additional licensing costs for the software, something nobody had ever told me about (it sounds like it was a last-minute decision). If I recall right, fully licensing thin persistence on the system I had at the time would have cost an extra $100,000 in software. We decided against it; it really wasn’t worth the price for what we’d reclaim. Later on, 3PAR offered to give us the software for free if we bought another storage array for disaster recovery (which we were planning to do). But the disaster recovery project got canned, so we never got it.

Another licensing quirk of this new software: to get to the good stuff, thin persistence, you had to license another product, Thin Conversion, whether you wanted it or not. I did not. You really only need Thin Conversion if you’re migrating from a non-thin storage system.

Fast forward almost two years and I’m at another company with another 3PAR. There was a thin provisioning licensing snafu with our system. So for the past few months (and for the next few) I’ve been running on an evaluation license, which basically unlocks all the features, including the thin reclamation tech.

I had noticed recently that some of my volumes were getting pretty big. At the request of our DBA I had agreed to make these volumes quite large, 400GB each. My normal approach has three steps:
- create the physical volume at 1 or 2TB (in this case 2TB);
- create a logical volume sized for what the application actually needs (which may be as low as, say, 40GB for the database);
- grow it online as the space requirements increase.

3PAR’s early marketing, at least, tried to communicate that you could do away with volume management altogether. That is certainly technically possible, but I don’t recommend the approach. Another nice thing about volume management is being able to give volumes decent names. This is very helpful when moving snapshots between systems, especially with MPIO, multiple paths, and multiple snapshots on one system. With LVM it’s as simple as can be; without it, I really don’t want to think about it. The only downside is that you can’t easily mount a snapshot back on the originating system. The LVM UUID will conflict and block access to the volume, and changing that ID is not (or was not; it’s been a couple of years since I looked into it) very easy. Not a big deal, though: I have wanted to do that exactly once.

I came up with this strategy almost six years ago on my original 3PAR box, and it has worked quite well over the years. Originally, resizing was an offline operation, because the kernel we had at the time (Red Hat Enterprise Linux 4.x) did not support online file system growth. It has supported it for a while now, I think since maybe 4.4/4.5 and certainly ever since v5.
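The grow-as-needed approach can be written as a simple sizing rule. Below is a minimal Python sketch of one hypothetical policy; it is not the author's actual procedure. The 80% threshold, the 40GB growth step, and treating the 2TB volume as 2048GB are all illustrative assumptions. The sketch only computes the target size. The resize itself would still use the LVM and file system tools, for example `lvextend` followed by `resize2fs` on ext3/ext4.

```python
def next_lv_size_gb(used_gb: float, lv_gb: float, pv_gb: float,
                    grow_at: float = 0.8, step_gb: float = 40.0) -> float:
    """Return the logical volume size to grow to (hypothetical policy).

    Grow in step_gb increments until usage is below grow_at of the LV size,
    never exceeding the size of the underlying thin volume (pv_gb).
    """
    if not 0.0 < grow_at <= 1.0:
        raise ValueError("grow_at must be in (0, 1]")
    if step_gb <= 0:
        raise ValueError("step_gb must be positive")
    new_size = lv_gb
    while used_gb >= grow_at * new_size and new_size < pv_gb:
        new_size = min(new_size + step_gb, pv_gb)
    return new_size


if __name__ == "__main__":
    # A 40GB database LV on a 2TB (2048GB) thin volume, now holding 35GB.
    print(next_lv_size_gb(used_gb=35, lv_gb=40, pv_gb=2048))  # 80.0
```

Because only the logical volume grows, the array never has to allocate the unused part of the 2TB thin volume.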

Once you have a grasp of your application’s growth pattern, it’s not difficult to plan for. Building that growth plan in the first place could be complex, though. Given the dedicate-on-write technology, you had to (borrowing a term from Apple here) think different. Watching average disk consumption obviously wasn’t enough. You had to account for space being written versus space being deleted, and for how effectively the file system reused deleted blocks. With MySQL, inefficient as it is, you also had to account for the space needed by things like ALTER TABLE statements. MySQL makes a copy of the entire table with your change and then drops the original. Yeah, real thin friendly there.
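Below is a rough way to turn that reasoning into numbers, as a Python sketch. The model is an assumption, not something measured on 3PAR:
- freed blocks are never returned to the array; they only offset new allocation when the file system reuses them (`reuse_efficiency`);
- a copy-based ALTER TABLE temporarily needs room for a full copy of the largest table.

All figures in the example are made up.

```python
from dataclasses import dataclass


@dataclass
class DailyActivity:
    written_gb: float   # new data the application writes per day
    deleted_gb: float   # data the application deletes per day


def projected_allocation_gb(current_gb: float, days: int,
                            activity: DailyActivity,
                            reuse_efficiency: float) -> float:
    """Project thin allocation after `days` on a dedicate-on-write volume.

    reuse_efficiency (0..1) is the assumed fraction of deleted space the
    file system reuses; the rest stays allocated on the array.
    """
    if not 0.0 <= reuse_efficiency <= 1.0:
        raise ValueError("reuse_efficiency must be between 0 and 1")
    reused = min(activity.deleted_gb * reuse_efficiency, activity.written_gb)
    return current_gb + days * (activity.written_gb - reused)


def alter_table_peak_gb(allocated_gb: float, largest_table_gb: float) -> float:
    """Peak allocation while a copy-based ALTER TABLE rebuilds the largest table."""
    return allocated_gb + largest_table_gb


if __name__ == "__main__":
    month = projected_allocation_gb(140, 30, DailyActivity(5.0, 3.0), 0.5)
    print(month)                            # 245.0
    print(alter_table_peak_gb(month, 60))   # 305.0
```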

With this kind of strategy, it is harder to gauge exactly how much you’re saving with thin provisioning. On my original 3PAR I was about 300-400% oversubscribed. At the time that was considered extremely high, and I can’t count the hours I spent achieving it. I recall David Scott saying at a conference I attended that the average customer was 300% oversubscribed. On my current system I am 1300% oversubscribed, mainly because I have a bunch of databases and I make them all 2TB volumes. I can say with a good amount of certainty that they will never get remotely close to 2TB. But the extra size costs me nothing otherwise, so I give them what I can. All my boxes on this array run VMware ESX 4.1, which of course has a 2TB limit. The bulk of these volumes are raw device mapped, mainly to leverage SAN-based snapshots and, to a lesser extent, to individually manage and monitor space and I/O metrics.
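For reference, the oversubscription figures above can be computed as provisioned virtual capacity divided by usable physical capacity. That definition is an assumption: the post doesn't spell one out, and some people quote only the excess over 100% instead. The array sizes in the example are hypothetical, chosen to land on 1300%.

```python
def oversubscription_pct(provisioned_tb: float, usable_tb: float) -> float:
    """Assumed definition: virtual capacity presented to hosts / usable physical capacity."""
    if usable_tb <= 0:
        raise ValueError("usable capacity must be positive")
    return provisioned_tb / usable_tb * 100.0


if __name__ == "__main__":
    # Hypothetical array: 26 volumes of 2TB each on 4TB of usable space.
    print(oversubscription_pct(26 * 2, 4))  # 1300.0
```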

Back then, my situation was compounded by the fact that I was still very green when it came to storage (I’d like to think I am more blue now, whatever that might mean). I had never really dabbled much in it before, choosing instead to focus on networking and servers. All big topics; I couldn’t take them all on at once 🙂

So my point is that even though 3PAR has had this technology for a while now, I had never really tried it. In the past couple of months I ran the Microsoft sdelete tool on the three Windows VMs I keep for my vCenter stuff (everything else is Linux). Honestly, I don’t think I bothered to check whether any space was reclaimed.

Now, back on topic.

Anyway, I have this one volume that was consuming about 300GB of logical space on the array. Yet only about 140GB had been written to its 400GB file system. Obviously a good candidate for space reclamation, right? The marketing claims you can gain 10% more space; in this case I stood to gain a lot more than that!

So I decided: hey, how about a basic script that writes out a ton of zeros to the file system to reclaim this space? I had recently learned that my systems lack the kernel code required for fancier stuff like fstrim. (I updated that post with new information at the end after I originally wrote it.) So I put together a basic looping script to write 100MB files filled with zeros from /dev/zero.
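The original looping script isn't shown. Below is a rough Python equivalent. It assumes a Linux host and that the goal is simply to fill free space with zero-filled files and then delete them. File size, pause, and file-name prefix are illustrative parameters, not values from the original script. `bytes(n)` stands in for reading /dev/zero. `os.fsync` makes the zeros actually reach the array instead of sitting in the page cache. Filling a file system to 100% can upset running applications, so treat this as a sketch for a test volume.

```python
import errno
import os
import tempfile
import time
from typing import List, Optional

CHUNK_BYTES = 1024 * 1024  # write zeros 1 MiB at a time


def fill_with_zeros(directory: str, file_mb: int = 100,
                    max_files: Optional[int] = None,
                    pause_s: float = 0.0,
                    prefix: str = "zerofill") -> List[str]:
    """Write zero-filled files of file_mb MiB each into directory.

    Stops when the file system reports ENOSPC or after max_files files.
    Returns every path created (including a final partial file) so the
    caller can delete them afterwards.
    """
    zeros = bytes(CHUNK_BYTES)
    paths: List[str] = []
    index = 0
    while max_files is None or index < max_files:
        path = os.path.join(directory, f"{prefix}-{index:05d}.bin")
        paths.append(path)
        try:
            with open(path, "wb") as fh:
                for _ in range(file_mb):
                    fh.write(zeros)
                fh.flush()
                os.fsync(fh.fileno())  # push the zeros to the array now
        except OSError as exc:
            if exc.errno == errno.ENOSPC:
                break  # file system is full: done
            raise
        index += 1
        if pause_s > 0:
            time.sleep(pause_s)  # space out writes to avoid flooding the SAN
    return paths


def remove_files(paths: List[str]) -> None:
    """Delete the filler files so the file system gets its free space back."""
    for path in paths:
        try:
            os.remove(path)
        except FileNotFoundError:
            pass


if __name__ == "__main__":
    # Small demo in a temporary directory: three 1 MiB zero files.
    with tempfile.TemporaryDirectory() as tmp:
        created = fill_with_zeros(tmp, file_mb=1, max_files=3)
        print(len(created))  # 3
        remove_files(created)
```

Setting `file_mb=128` matches the 128MB granularity HP support mentioned.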

I watched it fill up the file system over time; I spaced out the writes so as not to flood my front-end storage connections. It reclaimed very little space: after writing roughly 200GB of data, it had reclaimed maybe 1-2GB from the original volume. I was quite puzzled, to say the least. But that’s not the topic of this post, now is it?

I was shocked, awed, flabbergasted that my operation actually CONSUMED an additional 200GB of space on the system (space filled with zeros). Why? Apparently because I had created a snapshot of the volume earlier in the day, and the system was tracking the changes, which consumed the space. Never mind that the system is supposed to drop the zeros even if it doesn’t reclaim space. It doesn’t appear to do so when there are snapshots on the volume. So the effect was a double negative. It didn’t reclaim any space from the original. And because of the snapshot, it consumed a ton more space, more than 2x the original volume size.
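Update 1 at the top of this post explains what was really going on: the snapshot was holding the data the zeros replaced, not the zeros themselves. A toy copy-on-write model in Python makes that concrete. It is a deliberate simplification, not 3PAR's implementation:
- fixed-size blocks;
- a single snapshot;
- zero detection modeled as an all-zero write releasing the block in the base volume.

```python
from typing import Dict, Set


class CowVolume:
    """Toy thin volume with one copy-on-write snapshot (simplified model).

    blocks maps block number -> contents; a block missing from the map is
    unallocated. With zero_detect on, an all-zero write releases the block.
    """

    def __init__(self, blocks: Dict[int, bytes], zero_detect: bool = True):
        self.base: Dict[int, bytes] = dict(blocks)
        self.zero_detect = zero_detect
        self.snapshot_store: Dict[int, bytes] = {}  # old contents kept for the snapshot
        self._snap_blocks: Set[int] = set()
        self.has_snapshot = False

    def take_snapshot(self) -> None:
        self.snapshot_store.clear()
        self._snap_blocks = set(self.base)  # blocks the snapshot must preserve
        self.has_snapshot = True

    def write(self, block: int, data: bytes) -> None:
        # Copy-on-first-write: save what the snapshot still needs to see.
        if (self.has_snapshot and block in self._snap_blocks
                and block not in self.snapshot_store):
            self.snapshot_store[block] = self.base[block]
        if self.zero_detect and not any(data):
            self.base.pop(block, None)  # all zeros: nothing to store in the base
        else:
            self.base[block] = data

    def base_blocks_used(self) -> int:
        return len(self.base)

    def snapshot_blocks_used(self) -> int:
        return len(self.snapshot_store)


if __name__ == "__main__":
    vol = CowVolume({n: b"data" for n in range(4)})
    vol.take_snapshot()
    for n in range(4):
        vol.write(n, bytes(4))  # overwrite every block with zeros
    print(vol.base_blocks_used(), vol.snapshot_blocks_used())  # 0 4
```

In this model the base volume drops to zero blocks while the snapshot holds all four original blocks. Usage moves from the source to the snapshot; it neither disappears nor doubles. That is what Update 1 argues should have happened on the array.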

Support claims minimal space was reclaimed because I wrote files in 100MB blocks instead of 128MB blocks. I find it hard to believe that 200GB of files didn’t contain more contiguous 128MB regions of zeros. But I will try the test again with 128MB files on that specific volume. First I need the people using the snapshot to let me delete and re-create it, which will reclaim that 200GB of space. Hell, I might as well not use the snapshot at all and create a full physical copy of the volume.
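Support's explanation implies that reclamation works on whole 128MB units. Assume those units are aligned on 128MB boundaries of the volume; the post does not state this. A short Python helper can then count how many whole units a zero-filled range covers. A single isolated 100MB file never covers one. But 100MB files written back to back form one long zero range. That is why the explanation is hard to square with 200GB of zeros, unless the file system scattered the files.

```python
CHUNK_MB = 128  # reclaim granularity quoted by support


def fully_covered_chunks(start_mb: int, length_mb: int, chunk_mb: int = CHUNK_MB) -> int:
    """Count chunk_mb-aligned regions lying entirely inside [start_mb, start_mb + length_mb)."""
    end_mb = start_mb + length_mb
    first_boundary = -(-start_mb // chunk_mb)  # ceiling division
    last_boundary = end_mb // chunk_mb         # floor division
    return max(0, last_boundary - first_boundary)


if __name__ == "__main__":
    print(fully_covered_chunks(0, 100))         # 0: one 100MB file alone
    print(fully_covered_chunks(100, 100))       # 0: a misaligned 100MB file
    print(fully_covered_chunks(0, 2000 * 100))  # 1562: 2000 contiguous 100MB files
```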

Honestly, I’m sort of at a loss for words at how stupid this is. I have loved 3PAR through thick and thin for a long time (and I’ve had some big thicks over the years that I haven’t written about here anyway). But this one I felt compelled to write about. A feature this heavily marketed and touted on the platform becomes completely ineffective when a basic function like snapshots is in use. Of course the documentation says nothing about this. I looked through all my docs on the technology while running this test on Thursday. Basically, all they said was to enable zero detection on the volume (disabled by default) and watch it work.

I’ve heard a lot of similar things about platforms like NetApp, EMC, etc.: a feature is heavily touted but doesn’t work under load, or doesn’t work, period. In my experience at least, this is a rare one for 3PAR. My favorite off the top of my head is NetApp’s test of EMC CX-3 performance with snapshots enabled. It showed roughly a 65% performance drop compared to the same system without snapshots. That was quite a shocker when I first saw it.

Maybe it is a limitation of the ASIC itself, going back to my speculation about design issues that can’t be worked around in software. Maybe this limitation is not present in the V-class, which uses the next-generation ASIC. Or maybe it is; I don’t know.

HP Support says this behavior is as designed. Well, if so, I’m sure more than one person out there would agree it is a stupid design. I can’t help but think it is an unintentional design flaw. Or it is a design aspect they had no time to address if they wanted the T-series arrays out in a timely manner. I read elsewhere that designing the ASIC, which I think started in 2006, took much longer than expected. That delay was at least partially responsible for the ASIC lacking PCI Express support when it finally came out. I sent them an email asking whether this was fixed in the V-Class, and I will update if they respond. I know plenty of 3PAR folks (current and former) read this too, so they may be able to comment (anonymously or not).

As for why more space was not reclaimed on the volume: on Friday I ran another test on a different volume without any snapshots. It should have reclaimed a couple hundred gigs, but according to the command line it reclaimed nothing. Support points me to logs saying 24GB was reclaimed. That is not reflected in the command line output showing the raw volume size on the system. Still working with them on that one. My other question to them is: why only 24GB? I wrote zeros to the end of the file system, until there were 0 bytes left. I have some more advanced logging to set up for my next test.

While I’m here I might as well point out some of the other 3PAR software and features I have not used. Let’s see:

- Adaptive Optimization (sub-LUN tiering, licensed separately)

- Full LUN-based automated tiering (which I believe is included with Dynamic Optimization) – all of my 3PAR arrays to date have had only a single tier of storage from a spindle performance perspective, though they had different tiers from a RAID level perspective

- Remote Copy – in the situations I have been in, I have not seen a lot of value in array-based replication, so I use application-based replication instead. The one exception would be a lot of little files to replicate, where block-based replication is much more efficient and scalable. Array-based replication really needs application-level integration. I’d rather have real-time replication from the likes of Oracle or MySQL than coordinate snapshots with the application to maintain consistency. (I haven’t used Oracle in years, though I do miss it; I’m really not a fan of MySQL.) In the case of MySQL there really is no graceful way to take snapshots, again unlike Oracle. Recently I’ve been struggling with a race condition, somewhere in an app or in MySQL itself, that pretty much guarantees MySQL slaves will abort with error code 1032 after a simple restart of MySQL. This error has shown up as much as 15 minutes AFTER the slave has gotten back in sync with the master. Getting those kinds of issues from MySQL is really frustrating when you’re trying to work with snapshots. For the most part I have built my systems so they can be easily rebuilt. So I don’t have to protect all of my VMs by replicating their data; I only protect and replicate the data I need to reconstruct the VMs if necessary.

- Recovery Manager for Oracle (I licensed it once on my first system but never ended up using it, because it could not work with raw device maps on VMware – I’m not sure if they have fixed that by now)

- Recovery Manager for all other products (SQL Server, Exchange, and VMware)

- Virtual Domains (mainly useful for service providers, I think)

- Virtual Lock (if I recall right, used to prevent a volume, or the data on it, from being deleted for a defined period of time)

- Peer Motion

3PAR software and features I have used (to varying degrees):

- Thin Provisioning (for the most part pretty awesome but obviously not unique in the industry anymore)

- Dynamic Optimization (oh how I love thee) – I think the functionality this provides is still fairly unique for the most part. Pretty much all of it is made possible by the system’s sub-disk, chunklet-based RAID design. You can move data around the array between RAID levels, between tiers, and between regions of the physical spindles themselves (inner vs. outer tracks). There is really no limit on how you move it (e.g. no limitations like aggregates in the NetApp world), and it all happens without noticeable performance impact. That is quite amazing. As I wrote a while back, I once ran this process on my T400 for four SOLID MONTHS 24×7 and nobody noticed.

- System Tuner (also damn cool, though I never licensed it and only used it under eval licenses) – this looks for hot chunklets and moves them around automatically. Most customers don’t need it, since the system balances itself so well out of the box. If I recall right, this product was created in response to a (big) customer’s request, mainly to show that it could be done. I am told very few customers license it, since it’s not needed. In the situations where I used it, it had no positive (or negative) effect on the problem I was trying to resolve at the time.

- Virtual Copy (snapshots; I’ve used both snapshots and full volume copies) – I have written tons of scripts using this, mainly with MySQL and Oracle.

- MPIO software for MS Windows – worked fine, really not much to it, just a driver. 3PAR had to pay MS some licensing fee for the software or development efforts they leveraged to build it; otherwise the drivers could have been free.

- Host Explorer (a pretty neat utility that sends data from the server back to the array over the SCSI connection, including info like OS version, MPIO version, driver versions, etc. It doesn’t work on vSphere hosts, because VMware hasn’t implemented support for those SCSI commands or something)

- System Reporter – collects a lot of data, though from a presentation perspective I much prefer my own Cacti graphs

- vCenter Plugin for the array – a really minimal set of functionality compared to the competition, and a real weak point for the platform. Unfortunately it hasn’t changed much in the almost two years since it was released. I’m hoping it gets more attention in the future, or even in the present. As-is, I consider it basically useless and don’t use it. I haven’t touched it on my own system since installing the software to verify that it works.

- Persistent Cache – an awesome feature in 4+ node systems. It re-mirrors cache to another node in the event of planned or unplanned downtime on one or more nodes in the cluster. I had this feature enabled; it was free with the upgrade to 2.3.1 on systems with 4 or more nodes. But I never had a situation where I could take advantage of it before I left the company with that system.

- Autonomic Groups – group volumes and systems together, making it very easy to manage mappings of volumes to clusters of servers. The GUI for this is terrible, and they are working to fix it. I practically wiped out my storage system when I first tried this feature using the GUI. The damage I did in that short time was scary, even more so given how many years I’ve used the platform. What I wiped out were my ESX boot volumes, so I had to re-create the volumes and reinstall ESX. Another time I wiped out all of my Fibre Channel host mappings and had to re-establish those too. Obviously, on a production system this would have resulted in massive data loss and massive downtime. Fortunately the array was brand new, still at least 2 months from production, and held a trivial amount of data. Since then it’s the CLI for me: safer, and much clearer about what is going on. My friends over at 3PAR got a lot of folks involved to drive a priority plan to fix this functionality, which they admit is lacking. When autonomic groups first came out I was on my T400 with a ton of existing volumes. Migrating those volumes to groups likely would have been disruptive, so I only used groups for new resources and didn’t get much exposure to the feature at the time.

That turned out to be A LOT longer than I expected.

This is probably the most negative thing I’ve said about 3PAR here, but this information should be known. I don’t know how other platforms behave; maybe it’s the same. But I can say this: in the nearly three years I have been aware of this technology, this particular limitation has never come up in conversations with friends and contacts at 3PAR. Either they don’t know about it either, or it’s just one of those things they don’t want to admit.

It may turn out that using SCSI UNMAP to reclaim space is much more effective than writing zeros. That would make the additional cost of thin licensing worthwhile. But not many things support UNMAP yet. VMware specifically recommends disabling UNMAP support in ESX 5.0, and it has disabled UNMAP in subsequent releases because of performance issues.

Another thing I found interesting is that in the CLI itself, 3PAR specifically recommends keeping zero detection disabled unless you’re doing data migration, because it can cause issues under heavy load:

Note: there can be some performance implication under extreme busy systems so it is recommended for this policy to be turned on only during Fat to Thin and re-thinning process and be turned off during normal operation.

Which, to some degree, defeats the purpose, doesn’t it? Some 3PAR folks have told me that information is out of date and only applies to legacy systems. That doesn’t really make sense: zero detection is hard-wired into the ASIC, so no legacy systems support it. 3PAR goes around telling folks that zero detection on other platforms is no good because of the load it introduces. Then it says its own system behaves in a similar way. To give them credit, I suspect a 3PAR box can still absorb that hit much better than any other storage platform out there. But this is not a line-rate operation; there clearly seems to be a limit to what the ASIC can process. I would like to know what an "extremely busy" system looks like: how much I/O, as a percentage of controller and/or disk capacity?

Bottom line – at this point I’m even more glad I didn’t license the more advanced thinning technologies on my bigger T400 way back when.

I suppose I need to go back to reclaiming space the old-fashioned way: data migration.

4,000+ words woohoo!

Interestingly, I just got a related email from a colleague right after reading your blog. It seems that VMware has re-enabled UNMAP in vSphere 5.0U1, although it is now a manual setting. http://blogs.vmware.com/vsphere/2012/03/vaai-thin-provisioning-block-reclaimunmap-is-back-in-50u1.html

Comment by Steve — April 12, 2012 @ 11:47 am

That is pretty interesting. I saw an updated KB article about 5.0 Patch 2 saying UNMAP was disabled by default but could be re-enabled if you wanted. I hadn’t seen the newer one you pointed to.

Having thin reclamation run only during a maintenance window seems to sort of defeat the purpose. Wouldn’t it be easier/better/safer to just create a new volume and do a bunch of Storage vMotions to reclaim space? At least you could do that while other stuff is running, without worrying as much about array impact. Either way you need the Enterprise license. So you can’t save on licensing fees by choosing a vSphere edition without Storage vMotion.

Another question I’d have is: how would guests reclaim space? Would you need to coordinate UNMAP activity within the guests as well as at the VMFS layer during this maintenance window? I assume so. Does VMFS even handle UNMAP commands coming from guests? It’s been a few years since I tried using VMware Tools to shrink a disk inside a VM on ESX. At the time it didn’t seem to work, so perhaps it’s a Workstation-only capability. I just checked again in a Windows VM I have on ESX, and shrinking is disabled (there’s nothing non-standard about the VM’s disk configuration). In Workstation 8 the option is still there.

Thanks for the post!

Comment by Nate — April 12, 2012 @ 12:07 pm

So what do you suggest for when an application actually writes zeros to purge data, or a user dd's an entire slice of a partition by mistake? How is the storage going to tell the difference?

Comment by Karl Benson — April 14, 2012 @ 3:13 am

Your statement got me thinking about the issue from the right angle. Time to update the post!

Comment by Nate — April 14, 2012 @ 7:01 am

I guess this is less a comment and more a way of trying to get in touch. I am evaluating 3PAR kit and was interested in what you were saying regarding:

– Scripting clones and snaps – this interests me. Was it difficult? We are looking to script something similar but integrated with Oracle: put Oracle into hot backup mode, clone or snap, and then mount the cloned or snapped LUNs in a VMware environment as an RDM.

– Your comment that the integration with VMware vCenter appears to leave a lot to be desired (my interpretation).

– That Oracle Recovery Manager is pretty ropey in a VMware environment.

Anyhow, I would love any feedback on what’s good, bad, or indifferent, and on any areas you would look deeper into if you had the chance again.

Comment by Bob — April 18, 2012 @ 12:18 pm

Yeah, the VMware integration is really lacking with 3PAR today. I’m kind of surprised how little progress has been made since it was originally released, but for all I know they have a major new version of code just around the corner. As far as I know, no such integration exists for other hypervisors. I’m not sure how common it is for other array vendors to have tight integration with Hyper-V, Xen, KVM, or whatever.

As for scripting integration, from a 3PAR standpoint scripting snapshots is dead simple. I run the scripts from a “SAN Management” server (which has always been a VM). It has root access to the database servers and full admin rights on the 3PAR. It authenticates with SSH keys, so no passwords are needed up front.

The 3PAR CLI is pretty easy to understand, though I think it could be easier. Many command names run multiple words together. For example, to configure an iSCSI port you use the command “controliscsiport”. I prefer separate words with tab completion, so you could type something like “control port iscsi”. There is no tab completion on 3PAR.

Coordinating the snapshots with the application layer is more complex. Back when I had a serious Oracle install, I had what I think was a really great setup: each Oracle server had 3 LUNs.
- one for the database itself;
- one for the database logs;
- one for the Oracle DB software.

The third is probably one people don’t think about doing. But it made life really simple when I wanted to take a copy of production and put it in a test environment. I didn’t have to worry about installing Oracle in the test environment, since it came over as part of the snapshot process. Of course, any Oracle patches came over with it too. I could also easily patch a test system to newer patches. If for any reason I wanted to undo that, one option was to refresh from production again (not that I ever had to do that for that particular purpose). The test environment did not have the same number of CPUs or amount of memory as production. So part of my snapshot process was to install a custom init.ora file for the test DB as part of the move.
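Installing a custom init.ora on the test side could be scripted along these lines. This Python sketch assumes a plain-text init.ora with one `name = value` parameter per line. The function is hypothetical and not part of the author's scripts. The parameter names in the example (`sga_target`, `cpu_count`, `processes`) are real Oracle initialization parameters, but the values are made up. Prefixed spfile-style entries such as `*.sga_target` are not matched by a plain `sga_target` override.

```python
import re
from typing import Dict

# "name = value" lines; comment lines starting with '#' never match.
_PARAM = re.compile(r"^\s*([^#=\s]+)\s*=")


def override_init_ora(text: str, overrides: Dict[str, str]) -> str:
    """Return init.ora text with selected parameters replaced or appended.

    Matching is case-insensitive, since Oracle parameter names are not
    case-sensitive. Parameters not already present are appended at the end.
    """
    pending = {name.lower(): (name, value) for name, value in overrides.items()}
    out = []
    for line in text.splitlines():
        match = _PARAM.match(line)
        key = match.group(1).lower() if match else None
        if key in pending:
            name, value = pending.pop(key)
            out.append(f"{name} = {value}")
        else:
            out.append(line)
    out.extend(f"{name} = {value}" for name, value in pending.values())
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    prod = "# production settings\ndb_name = PROD\nsga_target = 8G\ncpu_count = 16\n"
    print(override_init_ora(prod, {"sga_target": "2G", "cpu_count": "4"}))
```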

Snapshots on 3PAR are either read-only or read-write. You cannot make a read-write snapshot directly from a read-write base volume. Instead, you have to create an intermediate read-only snapshot for every read-write one. If you make a snapshot via the GUI, it handles this for you automatically. The command to create a single snapshot is ‘createsv’, and the command to create a group of snapshots is ‘creategroupsv’. The latter can also create a single snapshot, so for the most part I use creategroupsv regardless of how many snapshots I am creating.

So in Oracle’s case you have three options: pause the I/O with hot backup mode, shut the database down, or just take a blind snapshot and rely on crash recovery. Then create the read-only snapshot. If you paused the I/O, resume it at this point. Then create the read-write snapshot after resuming I/O; I think that saves a couple of seconds during the process.
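Below is a small Python orchestration sketch of that ordering: quiesce, read-only snapshot, resume, then read-write snapshot. Every hook is a hypothetical placeholder. In practice each would wrap something like an SSH call to the array running `creategroupsv`, or a SQL*Plus call that enters and leaves hot backup mode. No real command-line options are assumed here. Passing no quiesce/resume hooks models the blind, crash-consistent snapshot.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class SnapshotSteps:
    """Hooks for one snapshot cycle; all are hypothetical placeholders."""
    create_ro_snapshot: Callable[[], str]        # returns the read-only snapshot name
    create_rw_snapshot: Callable[[str], str]     # builds a read-write child of the read-only one
    quiesce: Optional[Callable[[], None]] = None  # e.g. enter hot backup mode
    resume: Optional[Callable[[], None]] = None   # e.g. leave hot backup mode


def take_rw_snapshot(steps: SnapshotSteps) -> str:
    """Read-only snapshot while quiesced, resume I/O, then the read-write child."""
    if steps.quiesce:
        steps.quiesce()
    try:
        ro_name = steps.create_ro_snapshot()
    finally:
        # Always resume I/O, even if the snapshot failed.
        if steps.resume:
            steps.resume()
    # Created after I/O resumes, which keeps the quiesce window short.
    return steps.create_rw_snapshot(ro_name)
```

The try/finally is a design choice: a failed snapshot should never leave the database stuck in hot backup mode.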

Restoring the snapshot to another host is, again, easy from a 3PAR perspective. From a host perspective WITH Oracle it can be fairly complex, because Oracle has a lot of moving parts. I kept a log file from one of my processes that used hot backup mode and restored Oracle to another host. It shows people what kind of things are involved. The log is here:

http://yehat.aphroland.org/~aphro/san/oracle-restore-prod-oracle-1a_20080319_230901.log

That process takes a snapshot from a production physical server on Fibre Channel and sends it over to a virtual server running software iSCSI. It also copies and applies the latest logs since the snapshot. The point of that particular process was to run RMAN on a dedicated system so it would not impact the main DB server. We ran Oracle SE, which, if I recall right, couldn’t run RMAN on a standby with raw devices. Or was it that you couldn’t run RMAN on a standby at all? I don’t remember.

The scripts were complex, but all of the complexity was in integrating with Oracle and with the OS; the 3PAR side was just a few simple commands.

When dealing with RDMs and VMware there is a big bug, I mean feature, that I wrote about here three years ago. It really pisses me off and makes snapshots more trouble to work with in vSphere. Some systems run in VMware and are snapshot TARGETS: they get LUNs refreshed out from under them, as opposed to just taking a snapshot. Those targets have to use software iSCSI to bypass this VMware feature.

http://www.techopsguys.com/2009/08/18/its-not-a-bug-its-a-feature/

It may be fixed in ESX 5; I don’t know. I have ESX 4.1 U2 but have not checked whether it has been fixed yet. VMware engineering said the behavior I saw in 4.0 was “intentional”.

Hope this helps; I can provide more info if you need it. I still have all my scripts too. They weren’t built for general consumption, though, and they make a lot of assumptions about the environment.

Once I got the Oracle integration down, the scripts were pretty rock solid; it was extremely rare that they failed. When they did, it was more often than not a failure outside the script. I recall a couple of times when restarting the iSCSI service in Linux caused a kernel panic. In later scripts on newer versions of Linux, I changed the iSCSI logic to just log out of the iSCSI target. That replaced stopping the service and unloading the kernel driver altogether, and it helped a ton.
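The change described above, logging out of the target instead of stopping the iSCSI service, might look like this in Python. It builds open-iscsi `iscsiadm` node-mode commands (`-m node -T <target> -p <portal> --logout` / `--login`). The target IQN, the portal, and the `refresh_on_array` hook are hypothetical. A real script would also need to unmount file systems and deactivate volume groups before logging out; that part is omitted here.

```python
import subprocess
from typing import Callable, List, Sequence


def iscsi_node_cmd(target_iqn: str, portal: str, action: str) -> List[str]:
    """Build an iscsiadm command that logs in to or out of a single target."""
    if action not in ("login", "logout"):
        raise ValueError("action must be 'login' or 'logout'")
    return ["iscsiadm", "-m", "node", "-T", target_iqn, "-p", portal, f"--{action}"]


def run_cmd(cmd: Sequence[str]) -> None:
    subprocess.run(list(cmd), check=True)


def refresh_via_logout(target_iqn: str, portal: str,
                       refresh_on_array: Callable[[], None],
                       run: Callable[[Sequence[str]], None] = run_cmd) -> None:
    """Log out of the target, let the array swap the LUN, then log back in.

    The iSCSI service and kernel modules stay loaded the whole time.
    """
    run(iscsi_node_cmd(target_iqn, portal, "logout"))
    try:
        refresh_on_array()  # hypothetical hook, e.g. recreate the snapshot
    finally:
        run(iscsi_node_cmd(target_iqn, portal, "login"))
```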

I have the same for MySQL. The integration with MySQL is very simple compared to Oracle, and I don’t have the extra LUN for the MySQL install itself. The downside is that MySQL needs to be shut down, which has caused me issues recently as well as in the past. Bugs, mainly in MySQL replication, could trigger replication failures if MySQL was shut down at the wrong moment. MySQL then couldn’t resume replication, and recovering required manual intervention. The crash recovery of MySQL also seems far less robust than Oracle’s. I remember one occasion, surely a bug, when a MySQL thread hung internally. MySQL intentionally crashed itself (the logs even said “I’m going to crash now to fix this hung thread”). When it came back up (or tried to), the data was corrupt and MySQL wouldn’t start. Damn thing. MySQL may be cheap, but there are so many times when I wish I was working with a real DB. I haven’t worked with Oracle seriously in about 4 years now. I do have a mini Oracle DB for my vCenter, but it’s tiny.

Comment by Nate — April 18, 2012 @ 12:50 pm