You may of read another one of my blog entries “Why I hate the cloud“, I also mentioned how I’ve been hosting my own email/etc for more than a decade in “Lesser of two evils“.

So what’s this about? I still hate the cloud for any sort of large scale deployment, but for micro deployments it can almost make sense. Let me explain my situation:

About 9 years ago the ISP I used to help operate more or less closed shop, I relocated what was left of the customers to my home DSL line (1mbps/1mbps 8 static IPs) on a dedicated little server. My ISP got bought out, then got bought out again and started jacking up the rates(from $20/mo to ~$100/mo + ~$90;/mo for Qwest professional DSL). Hosting at my apartment was convienant but at the same time was a sort of a ball and chain, as it made it very difficult to move. Co-ordinating the telco move and the ISP move with minimal downtime, well let’s just say with DSL that’s about impossible. I managed to mitigate one move in 2001 by temporarily locating my servers at my “normal” company’s network for a few weeks while things got moved.

A few years ago I was also hit with what was a 27 hour power outage(despite being located in a down town metropolitan area, everyone got hit by that storm). Shortly after that I decided longer term a co-location is the best fit for me. So phase one was to virtualize the pair of systems in VMware. I grabbed an older server I had laying around and did that, and ran it for a year, worked great(though the server was really loud).

Then I got another email saying my ISP was bought out yet again, this time the company was going to force me to change my IP addresses, which when your hosting your own DNS can be problematic. So that was the last straw. I found a nice local company to host my server at a reasonable price. The facility wasn’t world class by any stretch, but the world class facilities in the area had little interest in someone wanting to host a single 1U box that averages less than 128kbps of traffic at any given time. But it would do for now.

I run my services on a circa 2004 Dual Xeon system, with 6GB memory, ~160GB of disk on a 3Ware 8006-2 RAID controller(RAID 1). I absolutely didn’t want to go to one of those cheap crap hosting providers where they have massive downtime and no SLAs. I also had absolutely no faith in the earlier generation “UML” “VMs“(yes I know Xen and UML aren’t the same but I trust them the same amount – e.g. none). My data and privacy are fairly important to me and I am willing to pay extra to try to maintain it.

So early last year my RAID card told me one of my disks was about to fail and to replace it, so I did, rebuilt the array and off I went again. A few months later the RAID card again told me another disk was about to fail(there are only two disks in this system), so I replaced that disk, rebuilt, and off I went. Then a few months later, the RAID card again said a disk is not behaving right and I should replace it. Three disk replacements in less than a year. Though really it’s been two, I’ve ignored the most recent failing drive for several months now. Media scans return no errors, however RAID integrity checks always fail causing a RAID rebuild(this happens once a week). Support says the disk is suffering from timeouts. There is no back plane on the system(and thus no hot swap, making disk replacements difficult). Basically I’m getting tired of maintaining hardware.

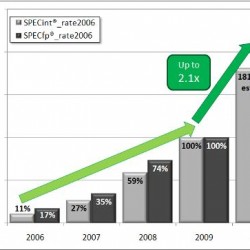

I looked at the cost of a good quality server with hot swap, remote management, etc, and something that can run ESX, cost is $3-5k. I could go $2-3k and stick to VMware server on top of Debian, a local server manufacturer has their headquarters literally less than a mile from my co-location, so it is tempting to stick with doing it on my own, and if my needs were greater than I would fo sure, cloud does not make sense in most cases in my opinion but in this case it can.

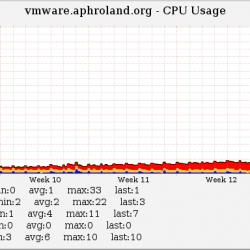

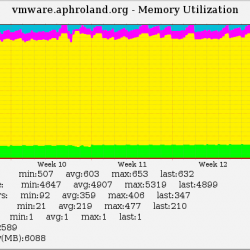

If I try to price out a cloud option that would match that $3-5k server, purely from a CPU/memory perspective the cloud option would be significantly more. But I looked closer and I really don’t need that much capacity for my stuff. My current VMware host runs at ~5-8% cpu usage on average on six year old hardware. I have 6GB of ram but I’m only using 2-3GB at best. Storage is the biggest headache for me right now hosting my own stuff.

So I looked to Terremark who seem to have a decent operation going, for the most part they know what they are doing(still make questionable decisions though I think most of those are not made by the technical teams). I looked to Terremark for a few reasons:

- Enterprise storage either from 3PAR or EMC (storage is most important for me right now given my current situation)

- Redundant networking

- Tier IV facilities (my current facility lacks true redundant power and they did have a power outage last year)

- Persistent, fiber attached storage, no local storage, no cheap iSCSI, no NFS, no crap RAID controllers, no need to worry about using APIs and other special tools to access storage it is as if it was local

- Fairly nice user interface that allows me to self provision VMs, IPs etc

Other things they offer that I don’t care about(for this situation, others they could come in real handy):

- Built in load balancing via Citrix Netscalers

- Built in firewalls via Cisco ASAs

So for me, a meager configuration of 1 vCPU, 1.5GB of memory, and 40GB of disk space with a single external static IP is a reasonable cost(pricing is available here):

- CPU/Memory: $65/mo [+$1,091/mo if I opted for 8-cores and 16GB/ram]

- Disk space: $10/mo [+$30/mo if I wanted 160GB of disk space]

- 1 IP address: $7.20/mo

- 100GB data transfer: $17/mo (bandwidth is cheap at these levels so just picked a round number)

- Total: $99/mo

Which comes to about the same as what I’m paying for in co-location fees now, if that’s all the costs were I’d sign up in a second, but unfortunately their model has a significant premium on “IP Services”, when ideally what I’d like is just a flat layer 3 connection to the internet. The charge is $7.20/mo for each TCP and UDP port you need opened to your system, so for me:

- HTTP – $7.20/mo

- HTTPS – $7.20/mo

- SMTP – $7.20/mo

- DNS/TCP – $7.20/mo

- DNS/UDP – $7.20/mo

- VPN/UDP – $7.20/mo

- SSH – $7.20/mo

- Total: $50/mo

And I’m being conservative here, I could be opening up:

- POP3

- POP3 – SSL

- IMAP4

- IMAP4 – SSL

- Identd

- Total: another $36/mo

But I’m not, for now I’m not. Then you can double all of that for my 2nd system, so assuming I do go forward with deploying the second system my total costs (including those extra ports) is roughly $353/mo (I took out counting a second 100GB/mo of bandwidth). Extrapolate that out three years:

- First year: $4,236 ($353/mo)

- First two years: $8,472

- First three years: $12,708

Compared to doing it on my own:

- First year: ~$6,200 (with new $5,000 server)

- First two years: ~$7,400

- First three years: ~$8,600

And if you really want to see how this cost structure doesn’t scale, let’s take a more apples to apples comparison of CPU/memory of what I’d have in my own server and put it in the cloud:

- First year – $15,328 [ 8 cores, 16GB ram 160GB disk ]

- First two years – $30,657

- First three years – $45,886

As you can see the model falls apart really fast.

So clearly it doesn’t make a lot of sense to do all of that at once, so if I collapse it to only the essential services on the cloud side:

- First year: $3,420 ($270/mo)

- First two years: $6,484

- First three years: $9,727

I could live with that over three years, especially if the system is reliable, and maintains my data integrity. But if they added just one feature for lil ol me, that feature would be a “Forwarding VIP” on their load balancers and say basically just forward everything from this IP to this internal IP. I know their load balancers can do it, it’s just a matter of exposing the functionality. This would dramatically impact the costs:

- First year: $2,517 ($210/mo)

- First two years: $5,035

- First three years: $7,552

- First four years: $10,070

You can see how the model doesn’t scale, I am talking about 2 vCPUs worth of power, and 3GB of memory, compared to say at least a 8-12 core physical server and 16GB or more of memory if I did it myself. But again I have no use for that extra capacity if I did it myself so it’d just sit idle, like it does today.

CPU usage is higher than I mentioned above I believe because of a bug in VMware Server 2.0 that causes CPU to “leak” somehow, which results in a steady, linear increase in cpu usage over time. I reported it to the forums, but didn’t get a reply, and don’t care enough to try to engage VMware support, they didn’t help me much with ESX and a support contract, they would do even less for VMware server and no support contract.

I signed up for Terremark’s vCloud Express program a couple of months ago, installed a fresh Debian 5.0 VM, and synchronized my data over to it from one of my existing co-located VMs.

So today I have officially transferred all of my services(except DNS) from one of my two co-located VMs to Terremark, and will run it for a while and see how the costs are, how it performs, reliability etc. My co-location contract is up for renewal in September so I have plenty of time to determine whether or not I want to make the jump, I’m hoping I can make it work, as it will be nice to not have to worry about hardware anymore. An excerpt from that link:

[..] My pager once went off in the middle of the night, bringing me out of an awesome dream about motorcycles, machine guns, and general ass-kickery, to tell me that one of the production machines stopped responding to ping. Seven or so hours later, I got an e-mail from Amazon that said something to the effect of:

There was a bad hardware failure. Hope you backed up your shit.

Look at it this way: at least you don’t have a tapeworm.

-The Amazon EC2 Team

I’ll also think long and hard, and probably consolidate both of my co-located VMs into a single VM at Terremark if I do go that route, which will save me a lot, I really prefer two VMs, but I don’t think I should be charged double for two, especially when two are going to use roughly the same amount of resources as one. They talk all about “pay for what you use”, when that is not correct, the only portion of their service that is pay for what you use is bandwidth. Everything else is “pay as you provision”. So if you provision 100GB and a 4CPU VM but you never turn it on, well your still going to pay for it.

The model needs significant work, hopefully it will improve in the future, all of these cloud companies are trying to figure out this stuff still. I know some people at Terremark and will pass this along to them to see what they think. Terremark is not alone in this model, I’m not picking on them for any reason other than I use their services. I think in some situations it can make sense. But the use cases are pretty low at this point. You probably know that I wouldn’t sign up and commit to such a service unless I thought it could provide some good value!

Part of the issue may very well be limitations in the hypervisor itself with regards to reporting actual usage, as VMware and others improve their instrumentation of their systems that could improve the cost model for customers signficantly, perhaps doing things like charging based on CPU usage based on a 95% model like we measure bandwidth. And being able to do things like cost capping, where if your resource usage is higher for an extended period the provider can automatically throttle your system(s) to keep your bill lower(at your request of course).

Another idea would be more accurate physical to virtual mapping, where I can provision say 1 physical CPU, and X amount of memory and then provision unlimited VMs inside that one CPU core and memory. Maybe I just need 1:1, or maybe my resource usage is low enough that I can get 5:1 or 10:1, afterall one of the biggest benefits of virtualization is being able to better isolate workloads. Terremark already does this to some degree on their enterprise products, but this model isn’t available for vCloud Express, at least not yet.

You know what surprised me most next to the charges for IP services, was how cheap enterprise storage is for these cloud companies. I mean $10/mo for 40GB of space on a high end storage array? I can go out and buy a pretty nice server to host VMs at a facility of my choosing, but if I want a nice storage array to back it up I’m looking at easily 10s of thousands of dollars. I just would of expected storage to be a bigger piece of the pie when it came to overall costs. When in my case it can be as low as 3-5% of the total cost over a 3 year period.

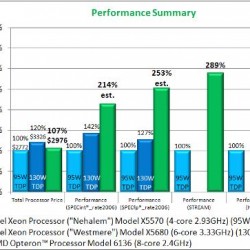

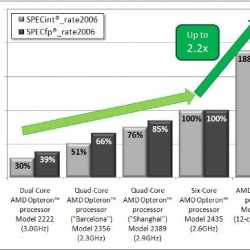

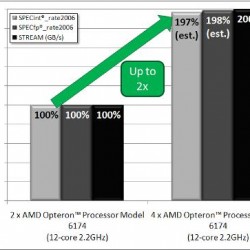

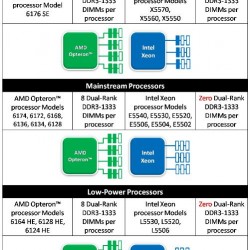

And despite Terremark listing Intel as a partner, my VM happens to be running on -you guessed it – AMD:

yehat:/var/log# cat /proc/cpuinfo

processor   : 0

vendor_id   : AuthenticAMD

cpu family   : 16

model      : 4

model name   : Quad-Core AMD Opteron(tm) Processor 8389

stepping   : 2

cpu MHz      : 2913.037

AMD get’s no respect I tell ya, no respect! 🙂

I really want this to work out.