Knock knock.. HP is kicking down your back door 3PAR..

Well, that’s more like it. HP offered $1.6 billion to acquire 3PAR this morning, topping Dell’s offer by 33%. Perhaps the 3cV solution can finally be fully backed by HP. More info from The Register here. And more info on what this could mean to HP and 3PAR products from the same source here.

3PAR’s website is having serious issues; this has obviously spawned a ton of interest in the company. I get intermittent blank pages and connection refused messages.

I didn’t wake my rep up for this one.

The 3cV solution was announced about three years ago –

Elements of the 3cV solution include:

- 3PAR InServ® Storage Servers—highly virtualized, tiered-storage arrays built for utility computing. Organizations creating virtualized IT infrastructures for workload consolidation use InServ arrays to reduce the cost of allocated storage capacity, storage administration, and SAN infrastructure.

- HP BladeSystem c-Class Server Blades—the leading blade server infrastructure on the market for datacenters of all sizes. HP BladeSystem c-Class server blades minimize energy and space requirements and increase administrative productivity through advantages in I/O virtualization, powering and cooling, and manageability.

- VMware vSphere—the leading virtualization platform for industry-standard servers. VMware vSphere helps customers reduce capital and operating expenses, improve agility, ensure business continuity, strengthen security, and go green.

While I could not find the image that depicts the 3cV solution (not sure how long it’s been gone for), here is more info on it for posterity.

The Advantages of 3cV

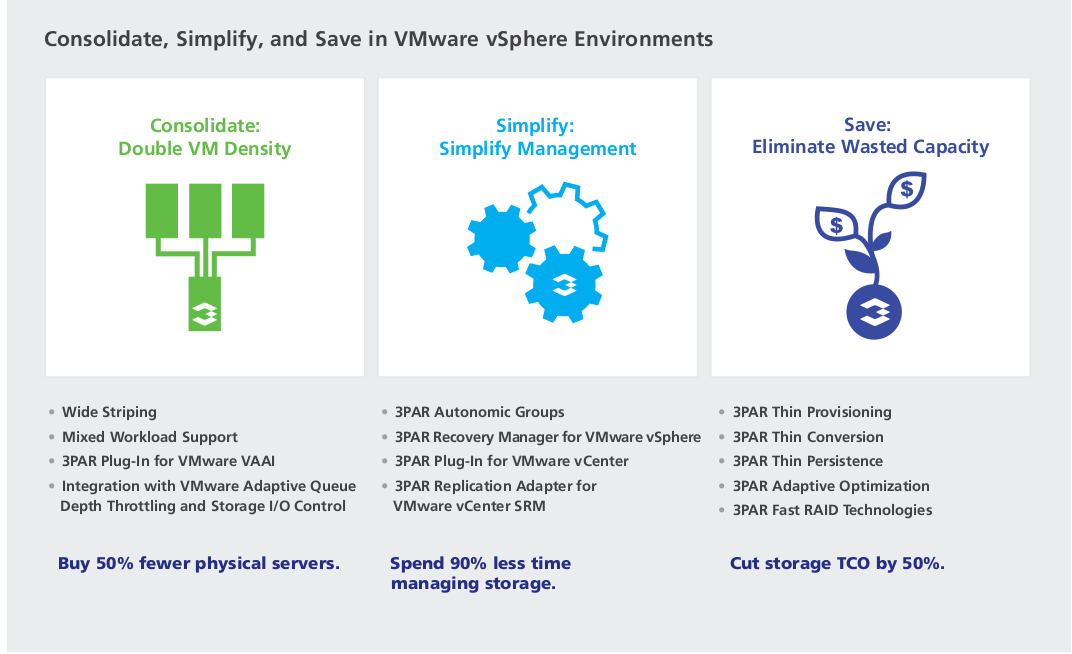

3cV offers combined benefits that enable customers to manage and scale their server and storage environments simply, allowing them to halve server, storage and operational costs while lowering the environmental impact of the datacenter.

- Reduces storage and server costs by 50%—The inherently modular architectures of the HP BladeSystem c-Class and the 3PAR InServ Storage Server—coupled with the increased utilization provided by VMware Infrastructure and 3PAR Thin Provisioning—allow 3cV customers to do more with less capital expenditure. As a result, customers are able to reduce overall storage and server costs by 50% or more. High levels of availability and disaster recovery can also be affordably extended to more applications through VMware Infrastructure and 3PAR thin copy technologies.

- Cuts operational costs by 50% and increases business agility—With 3cV, customers are able to provision and change server and storage resources on demand. By using VMware Infrastructure’s capabilities for rapid server provisioning and the dynamic optimization provided by VMware VMotion and Distributed Resource Scheduler (DRS), HP Virtual Connect and Insight Control management software, and 3PAR Rapid Provisioning and Dynamic Optimization, customers are able to provision and re-provision physical servers, virtual hosts, and virtual arrays with tailored storage services in a matter of minutes, not days. These same technologies also improve operational simplicity, allowing overall server and storage administrative efficiency to increase by 3x or more.

- Lowers environmental impact—With 3cV, customers are able to cut floor space and power requirements dramatically. Server floor space is minimized through server consolidation enabled by VMware Infrastructure (up to 70% savings) and HP BladeSystem density (up to 50% savings). Additional server power requirements are cut by 30% or more through the unique virtual power management capabilities of HP Thermal Logic technology. Storage floor space is reduced by the 3PAR InServ Storage Server, which delivers twice the capacity per floor tile as compared to alternatives. In addition, 3PAR thin technologies, Fast RAID 5, and wide striping allow customers to power and cool as much as 75% less disk capacity for a given project without sacrificing performance.

- Delivers security through virtualization, not dedicated hardware silos—Whereas traditional datacenter architectures force tradeoffs between high resource utilization and the need for secure segregation of application resources for disparate user groups, 3cV resolves these competing needs through advanced virtualization. For instance, just as VMware Infrastructure securely isolates virtual machines on shared servers, 3PAR Virtual Domains provides secure “virtual arrays” for private, autonomous storage provisioning from a single, massively-parallel InServ Storage Server.

Due to the recent stack wars, though, it’s been hard for 3PAR to partner with HP to promote this solution, since I’m sure HP would rather push their own full stack. Well, hopefully now they can. The best of both worlds, technology-wise, can come together.

More details from 3PAR’s VMware products site.

From HP’s offer letter –

We propose to increase our offer to acquire all of 3PAR outstanding common stock to $24.00 per share in cash. This offer represents a 33.3% premium to Dell’s offer price and is a “Superior Proposal” as defined in your merger agreement with Dell. HP’s proposal is not subject to any financing contingency. HP’s Board of Directors has approved this proposal, which is not subject to any additional internal approvals. If approved by your Board of Directors, we expect the transaction would close by the end of the calendar year.

In addition to the compelling value offered by our proposal, there are unparalleled strategic benefits to be gained by combining these two organizations. HP is uniquely positioned to capitalize on 3PAR’s next-generation storage technology by utilizing our global reach and superior routes to market to deliver 3PAR’s products to customers around the world. Together, we will accelerate our ability to offer unmatched levels of performance, efficiency and scalability to customers deploying cloud or scale-out environments, helping drive new growth for both companies.

As a Silicon Valley-based company, we share 3PAR’s passion for innovation.

[..]

We understand that you will first need to communicate this proposal and your Board’s determinations to Dell, but we are prepared to execute the merger agreement immediately following your termination of the Dell merger agreement.

Music to my ears.
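
For anyone who wants to sanity-check the premium, here is a quick back-of-the-envelope sketch in Python. The only inputs are the two figures quoted in HP's letter ($24.00 per share and a 33.3% premium); the Dell per-share price is not quoted in the excerpt, so it is backed out from those two numbers, and the variable names are just mine for illustration.

```python
# Figures quoted in HP's offer letter
hp_offer_per_share = 24.00   # dollars per share, in cash
premium_over_dell = 0.333    # 33.3% premium to Dell's offer price

# Back out the Dell per-share price implied by those two figures.
# This is an inference from the letter, not a number quoted in it.
implied_dell_price = hp_offer_per_share / (1 + premium_over_dell)

print(f"Implied Dell offer: ${implied_dell_price:.2f} per share")
```

That works out to roughly $18 a share from Dell, which is the gap HP is putting on the table.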

[tangent — begin]

My father worked for HP in the early days, back when they were even more innovative than they are today; he recalled their first $50M revenue year. He retired from HP in the early 90s after something like 25-30 years.

I attended my freshman year at Palo Alto Senior High School, and one of my classmates/friends (actually I don’t think I shared any classes with him, now that I think about it) was Ben Hewlett, grandson of one of the founders of HP. Along with a couple of other friends, Ryan and Jon, we played a bunch of RPGs (I think the main one was Twilight 2000, something one of my other friends, Brian, introduced me to in 8th grade).

I remember asking Ben one day why he took Japanese as his second language course when it was significantly more difficult than Spanish (which was the easy route, probably still is?). I don’t think I’ll ever forget his answer. He said “because my father says it’s the business language of the future..”

How times have changed.. Now it seems everyone is busy teaching their children Chinese. I’m happy knowing English, and a touch of bash and perl.

I never managed to keep in touch with my friends from Palo Alto. After one short year there I moved back to Thailand for two more years of high school.

[tangent — end]

HP could do some cool stuff with 3PAR; they have much better technology overall. I have no doubt HP has their eyes on their HDS partnership, and the possibility of replacing their XP line with 3PAR technology in the future has got to be pretty enticing. HDS hasn’t done a whole lot recently, and I read not long ago that regardless of what HP says, they don’t have much (if any) input into the HDS product line.

The HP USP-V OEM relationship is with Hitachi SSG. The Sun USP-V reseller deal was struck with HDS. Mikkelsen said: “HP became a USP-V OEM in 2004 when the USP-V was already done. HP had no input to the design and, despite what they say, very little input since.” HP has been a Hitachi OEM since 1999.

Another interesting tidbit of information from the same article:

It [HDS] cannot explain why it created the USP-V – because it didn’t, Hitachi SSG did, in Japan, and its deepest thinking and reasons for doing so are literally lost in translation.

The loss of HP as an OEM customer of HDS, so soon after losing Sun as an OEM customer, would be a really serious blow to HDS (one person I know claimed it accounts for ~50% of their business), which seems to have a difficult time selling stuff in western countries; I’ve read it’s mostly because of their culture. Similarly, it seems Fujitsu has issues selling stuff, in the U.S. at least; they seem to have some good storage products, but not much attention is paid to them outside of Asia (and maybe Europe). Will HDS end up like Fujitsu as a result of HP buying 3PAR? Not right away for sure, but longer term they stand to lose a ton of market share in my opinion.

And with the USP getting a little stale (rumor has it they are near to announcing a technology refresh for it), it would be good timing for HP to get 3PAR, to cash in on the upgrade cycle by getting customers to go with the T class arrays instead of the updated USP whenever possible.

I read on an HP blog earlier in the year an interesting comment –

The 3PAR is drastically less expensive than an XP, but is an active/active concurrent design, can scale up to 8 clustered controllers, highly virtualized, customers can self-install, self-maintain, and requires no professional services. It’s on par with the XP in terms of raw performance, but has the ease of use of the EVA. Like the XP, the 3PAR can be carved up into virtual domains so that service providers or multi-tenant arrays can have delegated administration.

I still think 3PAR is worth more, and should stay independent, but given the current situation I would much rather have them in the arms of HP than Dell.

Obviously those analysts that said Dell paid too much for 3PAR were wrong, and didn’t understand the value of the 3PAR technology. HP does, otherwise they wouldn’t be offering 33% more cash.

After the collapse of so many of 3PAR’s NAS partners over the past couple of years, the possibility of having Ibrix available again for a longer term solution is pretty good. Dell bought Exanet’s IP earlier in the year, LSI owns Onstor, and HP bought Polyserve and Ibrix. There are really just about no “open” NAS players left. Isilon seems to be among the biggest NAS players left, but of course their technology is tightly integrated into their disk drive systems; same with Panasas.

Maybe that recent legal investigation into the board at 3PAR had some merit after all.

Dell should take their $billion and shove it in Pillar’s (or was it Compellent? I forgot) face, so the CEO there can make his dream of being a billion dollar storage company come true, if only for a short time.

I’m not a stock holder or anything; I don’t buy stocks (or bonds).

HP’s Fusion IO-based I/O Accelerator
