I’m sure there are a number of articles out there on 3PAR’s Dynamic Optimization, but I thought it would be worth adding one more “holy cow, this is easy!” post. My company just added 8 more drives to our 3PAR E200, bringing the total spindle count from 24 to 32. In the past, using another vendor’s SAN, taking advantage of the space on these new drives meant carving out a new LUN. If you wanted to use the space on all 32 drives collectively (in a single LUN, for example), it would mean copying all the data off, recreating your LUN(s) and copying the data back. Not with 3PAR. First of all, thanks to their “chunklet” technology, carving out LUNs is a thing of the past. You can create and delete multiple virtual LUNs (VLUNs) on the fly. I won’t go into the details of that here but instead want to look at their Dynamic Optimization feature.

With Dynamic Optimization, after adding those 8 new drives I can rebalance my VLUNs across all 32 drives, taking advantage of the extra spindles and increasing IOPS (and space). Now comes the part about it being easy. It is essentially 3 commands for a single volume; the total number of commands will obviously vary with how many volumes and common provisioning groups (CPGs) you have.

createcpg -t r5 -ha mag -ssz 9 RAID5_SLOWEST_NEW

The previous command creates a new CPG that is spread out across all of the disks. You can do a lot with CPGs, but we use them in a pretty flat manner, just to define the RAID level and where the data resides on the platter (inside or outside). The “-t r5” flag defines the RAID type (RAID5 in this case). The “-ha mag” flag defines the level of redundancy for this CPG (in this case, at the magazine level, which on the E200 equates to disk level). The “-ssz 9” flag defines the set size for the RAID level (in this case 8+1, which is a slower layout but easy on the parity overhead). “RAID5_SLOWEST_NEW” is the name I’m assigning to the CPG.
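To put a number on “easy on the overhead”, here is a small Python sketch of the parity arithmetic behind a RAID5 set size. This is just my own back-of-the-envelope helper, not a 3PAR tool; the function names are made up for illustration, and it assumes the usual RAID5 layout of one parity member per set.

```python
def raid5_parity_overhead(set_size: int) -> float:
    """Fraction of each RAID5 set consumed by parity (one member per set)."""
    if set_size < 3:
        raise ValueError("RAID5 needs a set size of at least 3")
    return 1 / set_size


def usable_fraction(set_size: int) -> float:
    """Fraction of each RAID5 set left over for data."""
    return 1 - raid5_parity_overhead(set_size)


# Compare a small set (3+1) with the 8+1 layout used in the createcpg command.
for ssz in (4, 9):
    print(
        f"set size {ssz} ({ssz - 1}+1): "
        f"{raid5_parity_overhead(ssz):.1%} parity, "
        f"{usable_fraction(ssz):.1%} usable"
    )
```

With a set size of 9, roughly one ninth of the space goes to parity, compared with half for mirrored RAID1. The trade-off is that wider sets generally make for slower writes and rebuilds.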

tunevv usr_cpg RAID5_SLOWEST_NEW -f MY_VIRTVOL1

The “tunevv” command is run once for each virtual volume I want to migrate to the CPG created by the previous command. It tells the SAN to move the virtual volume MY_VIRTVOL1 to the CPG RAID5_SLOWEST_NEW.

Then, once all of your volumes on a particular CPG are moved to a new CPG, the final command is to delete the old CPG and regain your space.

removecpg RAID5_SLOWEST_OLD

If you start running low on space before you get to the removecpg command (when you’re moving multiple volumes, for example), you can always issue a compactcpg command that will shrink your “old” CPG and free up some space until you finish moving your volumes. Or, if you’re not moving all the volumes off the old CPG, then be sure to issue a compactcpg when you’re finished to reclaim that space.

Dynamic Optimization can also be used to migrate a volume from one RAID level to another using commands similar to the ones above. At a previous company we moved a number of volumes from RAID1 to RAID5 because we needed the extra space that RAID5 gives. Also, due to the speed of the 3PAR SAN, we hardly noticed a performance hit! And, in this case, the entire DO operation was done by a 3PAR engineer from his BlackBerry while sitting at a restaurant in another state.

Oh, did I mention this is all done LIVE with zero downtime? In fact, I’m doing it on our production SAN right now in the middle of a weekday while the system is under load. There is a performance hit to the system in terms of disk I/O, but the system will throttle the CPG migration as needed to give priority to your applications/databases.

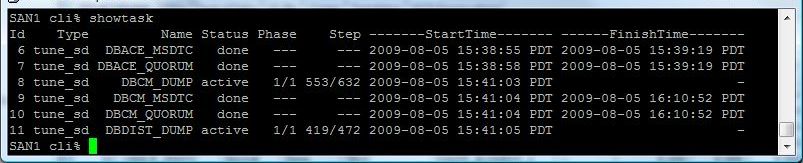

You can queue up multiple tunevv commands at the same time (I think 4 is the max) – each command kicks off a background task that you can check on with the showtask command.
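If you have a lot of volumes to move, it can help to generate the tunevv lines ahead of time and group them into batches. The Python sketch below is only that: it prints command text and does not talk to the array. The volume names are hypothetical, and the batch size of 4 is my guess from above rather than a documented limit, so adjust it for your system.

```python
from typing import Iterator, List

# Assumption: 4 concurrent tunes, based on my guess above, not on documentation.
MAX_CONCURRENT_TUNES = 4


def tunevv_commands(volumes: List[str], target_cpg: str) -> List[str]:
    """Build one tunevv line per volume, in the same form used in this post."""
    return [f"tunevv usr_cpg {target_cpg} -f {vv}" for vv in volumes]


def batches(items: List[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive groups of at most `size` items."""
    if size < 1:
        raise ValueError("batch size must be at least 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]


# Hypothetical volume names, for illustration only.
volumes = [f"MY_VIRTVOL{n}" for n in range(1, 7)]
commands = tunevv_commands(volumes, "RAID5_SLOWEST_NEW")

for number, batch in enumerate(batches(commands, MAX_CONCURRENT_TUNES), start=1):
    print(f"# batch {number}: check showtask before starting the next batch")
    print("\n".join(batch))
```

I would still paste each batch in by hand and watch showtask between batches rather than scripting the whole thing against a production SAN.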

Through this process I’ve created new CPGs that are configured the same as my old CPGs (in terms of RAID level and physical location on the platter), except that the new CPGs are spread across all 32 disks and not just my original 24. Then I moved my VLUNs from the old CPGs to the new CPGs. And finally, I deleted the old CPGs. Now all of my CPGs and the VLUNs they contain are spread across all 32 disks, thereby increasing the IOPS and space available to the CPGs.

createcpg -t r5 -ha mag -ssz 9 RAID5_SLOWEST_NEW

tunevv usr_cpg RAID5_SLOWEST_NEW -f MY_VIRTVOL1

# repeat as many times as needed for each virtual volume in that CPG

removecpg RAID5_SLOWEST_OLD

showtask