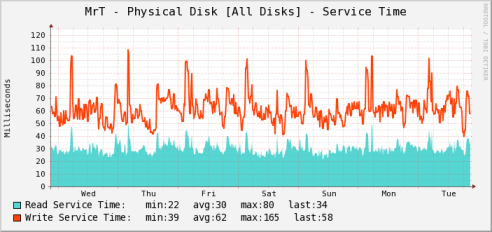

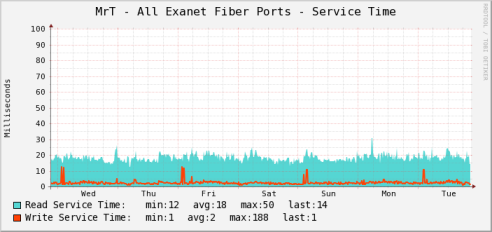

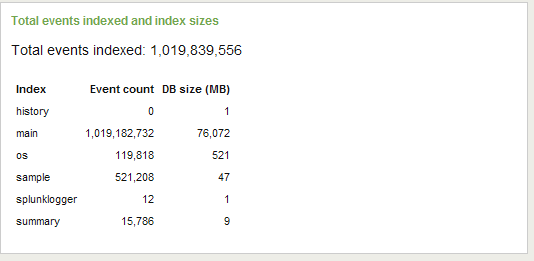

I was on a conference call with Splunk about a month or so ago; we recently bought it after using it off and on for a while. One thing that stuck out to me on that call was the engineer’s excitement about being able to show off a system that had a billion events in it. I started a fresh Splunk database in early June 2009, I think it was, and recently we passed 1 billion events. The index/DB (whatever you want to call it) just got to about 100GB (the screenshot is a week or two old). The system is still pretty quick too. It’s running on a simple dual Xeon system with 8GB of memory and a software iSCSI connection to the SAN.

We have something like 400 hosts logging to it (we just retired about 100 additional ones about a month ago, and we’re going to retire another 80-100 in the coming weeks as we upgrade hardware). It’s still not fully deployed; right now about 99% of the data is from syslog.

I upgraded to Splunk v4 the day it came out, and it has some nice improvements. I filed a bug the day it came out too (well, a few), but the most annoying one is that I can’t log in to v4 with Mozilla browsers (nobody in my company can), only with IE. We suspect it’s some behavioral issue between our really basic Apache reverse proxy and Splunk. The support guys are still looking at it. On top of that, neither their Cisco app nor their F5 app shows any data, despite our index having millions of log events from both Cisco and F5 devices. They are looking into that too.

1 billion logged events