ESRP. That is what I have started calling it at least. The official designation is Extreme Standby Router Protocol. It’s one of the main reasons, if not the main reason, I prefer Extreme switches at the core of any Layer 3 network. I’ll try to explain why here, because Extreme really doesn’t spend any time promoting this protocol. I’m still pushing them to change that.

I’ve deployed ESRP at two different companies in the past five years, on networks ranging from:

- a few hundred ports (Summit X450A/E + some older Cisco gear)

- a few thousand ports (Black Diamond 10808s + lots of Summit 400s)

What are two basic needs of any modern network?

- Layer 2 loop prevention

- Layer 3 fault tolerance

Traditionally these are handled by separate protocols that are completely oblivious to one another, mainly some form of STP/RSTP and VRRP (or maybe HSRP if you’re crazy). There have also long been interoperability issues between the various implementations of STP, which complicates things further because STP often needs to run on every network device for it to work right.

With ESRP life is simpler.

Advantages of ESRP include:

- Collapsing of layer 2 loop prevention and layer 3 fault tolerance(with IP/MAC takeover) into a single protocol

- Can run in either layer 2 only mode, layer 3 only mode or in combination mode(default).

- Sub second convergence/recovery times.

- Eliminates the need to run protocols of any sort on downstream network equipment

- Virtually all downstream devices are supported. Does not require an Extreme-only network. Fully interoperable with other vendors like Cisco, HP, Foundry, Linksys, Netgear etc.

- Supports both managed and unmanaged downstream switches

- Able to override loop prevention on a per-port basis(e.g. hook a firewall or load balancer directly to the core switches, and you trust they will handle loop prevention themselves in active/fail over mode)

- The “who is master?” question can be settled by setting an ESRP priority level, which is a number from 0-254, with 255 being standby state.

- Set up from scratch in as little as three commands(for each core switch)

- Protect a new vlan with as little as 1 command (for each core switch)

- Only one IP address per vlan needed for layer 3 fault tolerance(IP-based management provided by dedicated out of band management port)

- Supports protecting up to 3000 vlans per ESRP instance

- Optional “load balancing” by running core switches in active-active mode with some vlans on one, and others on the other.

- Additional fail over based on tracking of pings, route table entries or vlans.

- For small to medium sized networks you can use a pair of X450A(48x1GbE) or X650(24x10GbE) switches as your core for a very low priced entry level solution.

- Mature protocol. I don’t know exactly how old it is, but some searching indicates it is at least 10 years old at this point.

- Can provide significantly higher overall throughput vs ring based protocols(depending on the size of the ring), as every edge switch is directly connected to the core.

- Nobody else in the industry has a protocol that can do this. If you know of another protocol that combines layer 2 and layer 3 into a single protocol let me know. For a while I thought Foundry’s VSRP was it, but it turns out that is mainly layer 2 only. I swear I read a PDF that talked about limited layer 3 support in VSRP back in the 2004/2005 time frame, but not anymore. I haven’t spent the time to determine the use cases for VSRP versus Foundry’s MRP, which sounds similar to Extreme’s EAPS, a layer 2 ring protocol heavily promoted by Extreme.

Downsides to ESRP:

- Extreme Proprietary protocol. To me this is not a big deal as you only run this protocol at the core. Downstream switches can be any vendor.

- Perceived complexity due to the wide variety of options. They are optional though; basic configurations should work fine for most people and are simple to configure.

- Default election algorithm includes port weighting, which can be good or bad depending on your point of view. Port weighting means that if you have an equal number of active links of the same speed on each core switch, and the master switch has a link go down, the network will fail over. If you have non-switches connected directly to the core (e.g. a firewall) I will usually disable the port weighting on those specific ports so I can reboot the firewall without causing the core network to fail over. I like port weighting myself, viewing it as the network trying to maintain its highest level of performance/availability. That is, who knows why that port was disconnected: bad cable? bad ASIC? bad port? Fail over to the other switch that has all of its links in a healthy state.

- Not applicable to all network designs(is anything?)
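To make the port weighting behavior concrete, here is a minimal sketch in Python. It is a toy model, not Extreme's implementation: it assumes the election compares the number of active (weighted) ports first and the configured priority second, which is what the failover behavior described above implies. The `CoreSwitch` class and `elect_master` function are hypothetical names made up for illustration.

```python
from dataclasses import dataclass
from typing import Optional

STANDBY_PRIORITY = 255  # per the text: priority 255 means standby state


@dataclass(frozen=True)
class CoreSwitch:
    name: str
    priority: int      # 0-254, higher wins; 255 = standby
    active_ports: int  # active links that count toward port weighting


def elect_master(a: CoreSwitch, b: CoreSwitch) -> Optional[CoreSwitch]:
    """Pick the master of a two-switch core (simplified model)."""
    # A switch in standby state never becomes master.
    candidates = [s for s in (a, b) if s.priority != STANDBY_PRIORITY]
    if not candidates:
        return None
    # Assumption: active port count is compared before priority.
    return max(candidates, key=lambda s: (s.active_ports, s.priority))


if __name__ == "__main__":
    core1 = CoreSwitch("core1", priority=100, active_ports=10)
    core2 = CoreSwitch("core2", priority=50, active_ports=10)
    print(elect_master(core1, core2).name)  # equal links: priority decides
    degraded = CoreSwitch("core1", priority=100, active_ports=9)
    print(elect_master(degraded, core2).name)  # master lost a link
```

Ports with weighting disabled (such as a firewall port) would simply not be counted in `active_ports`, which is why rebooting the firewall leaves the result unchanged.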

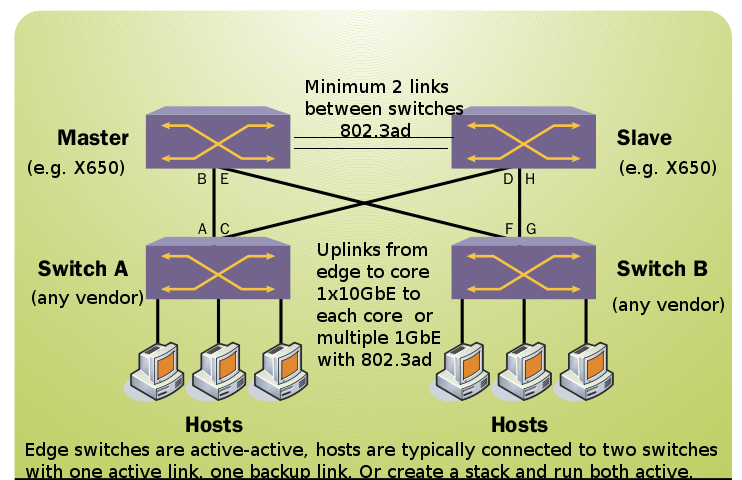

The optimal network configuration for ESRP is very simple: two core switches cross connected to each other (with at least two links) and a number of edge switches, each with at least one link to each core switch. You can have as few as three switches in your network, or you can have several hundred, as many as you can connect to your core switches. The max today, I think, is about 760 switches using high density 1GbE ports on a Black Diamond 8900, plus 8x1Gbps ports for cross connect.

ESRP Domains

ESRP uses a concept of domains to scale itself. A single switch is master of a particular domain, which can include any number of vlans up to 3000. Health packets are sent for the domain itself rather than for the individual vlans, which dramatically simplifies things and makes them more scalable at the same time.

This does mean that if there is a failure in one vlan, all of the vlans for that domain will fail over, not just that one specific vlan. You can configure multiple domains if you want; I configure my networks with one domain per ESRP instance. Multiple domains can come in handy if you want to distribute the load between the core switches. A vlan can be a member of only one ESRP domain (I expect, I haven’t tried to verify).
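The domain behavior can be sketched as a small data structure. This is an illustrative model only: the class names, the `esrp-dev` domain and the `devservers` vlan are hypothetical, and the one-domain-per-vlan rule is the unverified expectation stated above rather than a confirmed limit.

```python
class EsrpDomain:
    """One ESRP domain: a master/slave pair protecting a set of vlans."""

    def __init__(self, name, master, slave):
        self.name = name
        self.master = master
        self.slave = slave
        self.vlans = set()

    def fail_over(self):
        # The whole domain moves at once; there is no per-vlan failover.
        self.master, self.slave = self.slave, self.master


class EsrpNetwork:
    MAX_VLANS_PER_DOMAIN = 3000  # figure quoted in the text

    def __init__(self):
        self.domains = {}         # domain name -> EsrpDomain
        self.domain_of_vlan = {}  # vlan name -> domain name

    def add_domain(self, name, master, slave):
        self.domains[name] = EsrpDomain(name, master, slave)

    def protect(self, domain_name, vlan):
        # Assumption from the text: a vlan belongs to at most one domain.
        if vlan in self.domain_of_vlan:
            raise ValueError(f"{vlan} is already in {self.domain_of_vlan[vlan]}")
        domain = self.domains[domain_name]
        if len(domain.vlans) >= self.MAX_VLANS_PER_DOMAIN:
            raise ValueError(f"{domain_name} is full")
        domain.vlans.add(vlan)
        self.domain_of_vlan[vlan] = domain_name

    def report_failure(self, vlan):
        # A failure in any member vlan fails over the entire domain.
        self.domains[self.domain_of_vlan[vlan]].fail_over()

    def master_for(self, vlan):
        return self.domains[self.domain_of_vlan[vlan]].master
```

With two domains whose masters are on different core switches, you get the active-active load distribution mentioned above, and a failure in one domain leaves the other domain's master where it was.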

Layer 2 loop prevention

The way ESRP loop prevention works is the links going to the slave switch are placed in a blocking state, which eliminates the need for downstream protocols and allows you to provide support for even unmanaged switches transparently.

Layer 3 fault tolerance

Layer 3 fault tolerance in ESRP operates in two different modes depending on whether or not the downstream switches are Extreme. It assumes by default they are; you can override this behavior on a per-port basis. In an all-Extreme network ESRP uses EDP (Extreme Discovery Protocol, similar to Cisco’s CDP) to inform downstream switches that the core has failed over and that they should flush their forwarding entries for the core switch.

If downstream switches are not Extreme switches, and you decided to leave the core switch in default configuration, it will likely take some time(seconds, minutes) for those switches to expire their forwarding table entries and discover the network has changed.

Port Restart

If you know you have downstream switches that are not Extreme, I suggest, for best availability, configuring the core switches to restart the ports those switches are on. Port restart is a feature of ESRP which causes the core switch to reset the links of the ports you configure, to try to force those switches to flush their forwarding tables. This process takes more time than in an Extreme-only network. Even so, in my own tests, specifically with older Cisco layer 2 switches, F5 BigIP v9 and Cisco PIX, this process takes less than one second (if you have a ping session going and trigger a fail over event, rarely is a ping lost).
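Why bouncing a link helps can be shown with a toy model of a downstream switch's forwarding table. This is a sketch under assumptions, not any vendor's behavior: it assumes entries age out after a fixed timer (300 seconds is a common default) and that the switch drops entries learned on a port when that port's link goes down. The `DownstreamSwitch` class and the MAC address are made up for illustration.

```python
class DownstreamSwitch:
    """Toy forwarding table for a non-Extreme edge switch."""

    def __init__(self, aging_seconds=300.0):
        self.aging_seconds = aging_seconds
        self.table = {}  # MAC address -> (port, time learned)

    def learn(self, mac, port, now):
        self.table[mac] = (port, now)

    def lookup(self, mac, now):
        """Return the port for mac, or None if unknown or aged out."""
        entry = self.table.get(mac)
        if entry is None:
            return None
        port, learned_at = entry
        if now - learned_at >= self.aging_seconds:
            del self.table[mac]
            return None
        return port

    def link_down(self, port):
        # Assumption: a link drop flushes everything learned on that port.
        self.table = {m: e for m, e in self.table.items() if e[0] != port}


if __name__ == "__main__":
    gateway = "00:00:5e:00:53:01"  # documentation-range MAC, hypothetical
    edge = DownstreamSwitch()
    edge.learn(gateway, port=1, now=0.0)  # learned via the old master
    print(edge.lookup(gateway, now=11.0))  # stale entry still points at port 1
    edge.link_down(1)                      # port restart bounces the link
    print(edge.lookup(gateway, now=11.0))  # flushed, so the switch relearns
```

Without the link bounce, the stale entry would survive until the aging timer expired, which matches the "seconds, minutes" delay described in the previous section.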

Host attached ports

If you are connecting devices like a load balancer, or a firewall directly to the switch, you typically want to hand off loop prevention to those devices, so that the slave core switch will allow traffic to traverse those specific ports regardless of the state of the network. Host attached mode is an ESRP feature that is enabled on a per-port basis.
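The interaction between slave-side blocking and host attached mode boils down to a one-line rule. The sketch below is a simplified model with hypothetical names (`Role`, `port_forwards`, `forwarding_uplinks`); it only captures the behavior described in this post.

```python
from enum import Enum


class Role(Enum):
    MASTER = "master"
    SLAVE = "slave"


def port_forwards(role, host_attached=False):
    """A slave blocks its ports unless the port is in host attached mode."""
    return role is Role.MASTER or host_attached


def forwarding_uplinks(uplinks):
    """Count forwarding links for one downstream device.

    uplinks is a list of (core_role, host_attached) tuples, one per link.
    """
    return sum(port_forwards(role, attached) for role, attached in uplinks)


if __name__ == "__main__":
    # Ordinary edge switch: one link to each core, no host attach.
    print(forwarding_uplinks([(Role.MASTER, False), (Role.SLAVE, False)]))
    # Firewall pair on host attached ports: both links stay up.
    print(forwarding_uplinks([(Role.MASTER, True), (Role.SLAVE, True)]))
```

An ordinary edge switch ends up with exactly one forwarding uplink, so no loop can form through the core. A host attached device keeps both links forwarding, which is why it has to handle loop prevention itself.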

Integration with ELRP

ESRP does not protect you from every type of loop in the network. By design it’s intended to prevent a loop from occurring between the edge switch and the two core switches. If someone plugs an edge switch back into itself, for example, that will still cause a loop.

ESRP integrates with another Extreme specific protocol named ELRP or Extreme Loop Recovery Protocol. Again I know of no other protocol in the industry that is similar, if you do let me know.

What ELRP does is send packets out on the various ports you configure and look at the number of responses. If there are more than it expects, it sees that as a loop. There are three modes to ELRP (this is getting a bit off topic but is still related). The simplest mode is one shot mode, where you have ELRP send its packets once and report. The second mode is periodic mode, where you configure the switch to send packets periodically (I usually use 10 seconds or something) and it will log if there are loops detected (it tells you specifically what ports the loops are originating on).

The third mode is integrated mode, which is how it relates to ESRP. Myself I don’t use integrated mode and suggest you don’t either at least if you follow an architecture that is the same as mine. What integrated mode does is if there is a loop detected it will tell ESRP to fail over, hoping that the standby switch has no such loop. In my setups the entire network is flat, so if there is a loop detected on one core switch, chances are extremely(no pun intended) high that the same loop exists on the other switch. So there’s no point in trying to fail over. But I still configure all of my Extreme switches(both edge and core) with ELRP in periodic mode, so if a loop occurs I can track it down easier.
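The probe-and-count idea can be sketched as a tiny flooding simulation. This is an illustrative model and not how ELRP is implemented: it assumes every downstream switch floods a received probe out of all its other ports, that the expected number of returned probes is zero, and that a hop limit stands in for the timeout. All function and switch names are hypothetical.

```python
from collections import deque


def count_returned_probes(links, origin, probe_ports, max_hops=8):
    """Flood a probe from origin and count copies that come back.

    links is a list of ((switch, port), (switch, port)) cable pairs.
    Returns {origin_port: copies_received}.
    """
    peer = {}
    ports_of = {}
    for a, b in links:
        peer[a] = b
        peer[b] = a
        for switch, port in (a, b):
            ports_of.setdefault(switch, set()).add(port)

    returned = {}
    queue = deque()
    for port in probe_ports:
        if (origin, port) in peer:
            queue.append((peer[(origin, port)], 1))
    while queue:
        (switch, in_port), hops = queue.popleft()
        if switch == origin:
            # The origin consumes its own probe and records where it arrived.
            returned[in_port] = returned.get(in_port, 0) + 1
            continue
        if hops >= max_hops:
            continue  # hop limit keeps the simulation finite
        for out_port in ports_of[switch]:
            if out_port != in_port:
                queue.append((peer[(switch, out_port)], hops + 1))
    return returned


def loop_ports(links, origin, probe_ports):
    """Ports on origin where a probe came back, i.e. suspected loops."""
    return sorted(count_returned_probes(links, origin, probe_ports))


if __name__ == "__main__":
    # An edge switch plugged back into itself (ports 2 and 3 cabled together).
    looped = [(("core", 5), ("edge", 1)), (("edge", 2), ("edge", 3))]
    print(loop_ports(looped, "core", [5]))
```

Periodic mode would amount to calling `loop_ports` on a timer and logging any non-empty result, which is the part that makes a loop easy to track down.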

Example of an ESRP configuration

We will start with this configuration:

- A pair of Summit X450A-48T switches as our core

- 4x1Gbps trunked cross connects between the switches (on ports 1-4)

- Two downstream switches, each with 2x1Gbps uplinks on ports 5,6 and 7,8 respectively which are trunked as well.

- One VLAN named “webservers” with a tag of 3500 and an IP address of 10.60.1.1

- An ESRP domain named esrp-prod

The non ESRP portion of this configuration is:

    enable sharing 1 grouping 1-4 address-based L3_L4
    enable sharing 5 grouping 5-6 address-based L3_L4
    enable sharing 7 grouping 7-8 address-based L3_L4
    create vlan webservers
    config webservers tag 3500
    config webservers ipaddress 10.60.1.1 255.255.255.0
    config webservers add ports 1,5,7 tagged

What this configuration does

- Creates a port sharing group(802.3ad) grouping ports 1-4 into a virtual port 1.

- Creates a port sharing group(802.3ad) grouping ports 5-6 into a virtual port 5.

- Creates a port sharing group(802.3ad) grouping ports 7-8 into a virtual port 7.

- Creates a vlan named webservers

- Assigns tag 3500 to the vlan webservers

- Assigns the IP 10.60.1.1 with the netmask 255.255.255.0 to the vlan webservers

- Adds the virtual ports 1,5,7 in a tagged mode to the vlan webservers

The ESRP portion of this configuration is:

    create esrp esrp-prod
    config esrp-prod add master webservers
    config esrp-prod priority 100
    config esrp-prod ports mode 1 host
    enable esrp

The only difference between the master and slave is the priority. From 0-254, higher numbers mean higher priority; 255 is reserved for putting the switch in standby state.

What this configuration does

- Creates an ESRP domain named esrp-prod.

- Adds a master vlan to the domain, I believe the master vlan carries the control traffic

- Configures the switch for a specific priority [optional – I highly recommend doing it]

- Enables host attach mode for port 1, which is a virtual trunk for ports 1-4. This allows traffic for potentially other host attached ports on the slave switch to traverse to the master to reach other hosts on the network. [optional – I highly recommend doing it]

- Enables ESRP itself (you can use the command show esrp at this point to view the status)
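Since the master and slave configurations differ only in priority, it is easy to generate both from one template. The helper below is a hypothetical sketch: the function names are made up, and the command strings are copied verbatim from this post, so treat the exact syntax as an assumption that may differ between software releases.

```python
def esrp_commands(domain, master_vlan, priority, host_ports=()):
    """Render the ESRP setup commands shown above for one core switch."""
    if not 0 <= priority <= 255:
        raise ValueError("priority must be 0-254, or 255 for standby")
    commands = [
        f"create esrp {domain}",
        f"config {domain} add master {master_vlan}",
        f"config {domain} priority {priority}",
    ]
    # Host attach mode is optional and set per port (or per trunk).
    for port in host_ports:
        commands.append(f"config {domain} ports mode {port} host")
    commands.append("enable esrp")
    return commands


def protect_vlan_command(domain, vlan):
    """Render the one-liner that adds another vlan to the domain."""
    return f"config {domain} add member {vlan}"


if __name__ == "__main__":
    # Same template for both cores; only the priority changes.
    for name, priority in (("master", 100), ("slave", 50)):
        print(f"# {name}")
        print("\n".join(esrp_commands("esrp-prod", "webservers", priority, [1])))
```

The slave priority of 50 is an arbitrary example value; anything lower than the master's and below 255 fits the description above.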

Protecting additional vlans with ESRP

It is a simple one-line command on each core switch. Extending the example above, say you added a vlan appservers with its associated parameters and wanted to protect it; the command is:

config esrp-prod add member appservers

That’s it.

Gotchas with ESRP

There is only one gotcha that I can think of offhand that is specific to ESRP. I believe it is a bug, and I reported it a couple of years ago (code rev 11.6.3.3 and earlier; current code rev is 12.3.x). I don’t know if it is fixed yet. If you have ports configured for port restart on your switches and you add a vlan to your ESRP domain, those links will get restarted (as expected). What is not expected is that this causes the network to fail over, because for a moment the port weighting kicks in, detects link failure and forces the switch to a slave state. I think the software could be aware of why the ports are going down and not go to a slave state.

Somewhat related, again with port weightings, if you are connecting a new switch to the network, and you happen to connect it to the slave switch first, port weighting will kick in being that the slave switch now has more active ports than the master, and will trigger ESRP to fail over.

The workaround to this, and in general it’s a good practice anyway with ESRP, is to put the slave switch in a standby state when you are doing maintenance on it. This will prevent any unintentional network fail overs from occurring while you’re messing with ports/vlans etc. You can do this by setting the ESRP priority to 255. Just remember to put it back to a normal priority after you are done. Even in a standby state, if you have ports that are in host attached mode (again e.g. firewalls or load balancers) those ports are not impacted by any state changes in ESRP.
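If you script your maintenance, the "remember to put it back" step can be made automatic. The sketch below assumes you already have some way to push a command line to the switch; `send` is a hypothetical callable standing in for that, and the priority command is the one from the configuration example earlier in this post.

```python
from contextlib import contextmanager


@contextmanager
def esrp_standby(send, domain, normal_priority):
    """Hold a switch in ESRP standby (priority 255) during maintenance."""
    send(f"config {domain} priority 255")
    try:
        yield
    finally:
        # Restore the normal priority even if the maintenance step fails.
        send(f"config {domain} priority {normal_priority}")


if __name__ == "__main__":
    sent = []  # stand-in for a real session to the slave switch
    with esrp_standby(sent.append, "esrp-prod", normal_priority=50):
        sent.append("config esrp-prod add member appservers")
    print("\n".join(sent))
```

The `finally` block is the design choice that matters here: a failed change in the middle still leaves the switch with its normal priority rather than stuck in standby.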

Sample Modern Network design with ESRP

Switches:

- 2 x Extreme Networks Summit X650-24t with 10GbaseT for the core

- 22 x Extreme Networks Summit X450A-48T, each with an XGM2-2xn expansion module which provides 2x10GbaseT uplinks, providing 1,056 ports of highest performance edge connectivity (optionally select X450e for lower, or X350 for lowest cost edge connectivity. Feel free to mix and match; all of them use the same 10GbaseT uplink module).

Cross connect the X650 switches to each other using 2x10GbE links with CAT6A UTP cable. Connect each of the edge switches to each of the core switches with CAT5e/CAT6/CAT6a UTP cable. Since we are working at 10Gbps speeds there is no link aggregation/trunking needed for the edge (there is still aggregation used between the core switches), simplifying configuration even further.

Is a thousand ports not enough? Break out the 512Gbps stacking for the X650 and add another pair of X650s, your configuration changes to include:

- Two stacks of 2 x Extreme Networks X650-24t switches in stacked mode with a 512Gbps interconnect(exceeds many chassis switch backplane performance).

- 46 x 48-port edge switches providing 2,208 ports of edge connectivity.

Two thousand ports not enough, really? You can go further, though the stacking interconnect performance drops in half. Add another pair of X650s and your configuration changes to include:

- Two stacks of 3 x Extreme Networks X650-24t switches in stacked mode with a 256Gbps interconnect(still exceeds many chassis switch backplane performance).

- 70 x 48-port edge switches providing 3,360 ports of edge connectivity.
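The edge counts in these three designs follow from simple port arithmetic. The sketch below assumes each core stack reserves two 10GbE ports for the cross connect and uses one port per edge switch, which is consistent with the numbers quoted above; the function name and parameters are made up for illustration.

```python
def edge_capacity(switches_per_stack, core_ports_per_switch=24,
                  cross_connect_ports=2, edge_ports_per_switch=48):
    """Return (edge switches, edge ports) for one core design."""
    # Ports left on each core stack after the cross connect is cabled.
    edge_switches = (switches_per_stack * core_ports_per_switch
                     - cross_connect_ports)
    return edge_switches, edge_switches * edge_ports_per_switch


if __name__ == "__main__":
    for size in (1, 2, 3):
        switches, ports = edge_capacity(size)
        print(f"{size} x X650 per core: {switches} edge switches, {ports} ports")
```

This gives 22, 46 and 70 edge switches (1,056, 2,208 and 3,360 edge ports) for one, two and three X650s per core respectively.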

The maximum number of switches in an X650 stack is eight. My personal preference with this sort of setup is not to go beyond three. There’s only so much horsepower to do all of the routing and stuff, and when you’re talking about having more than three thousand ports connected to them, I just feel more comfortable with a bigger switch if you go beyond that.

Take a look at the Black Diamond 8900 series switch modules on the 8800 series chassis. It is a more traditional core switch that is chassis based. The 8900 series modules are new, providing high density 10GbE and even high density 1GbE (96 ports per slot). It does not support 10GbaseT at the moment, but I’m sure that support isn’t far off. It does offer a 24-port 10GbE line card with SFP+ ports (there is an SFP+ variant of the X650 as well). I believe the 512Gbps stacking between a pair of X650s is faster than the backplane interconnect on the Black Diamond 8900, which is between 80-128Gbps per slot depending on the size of the chassis (this performance is expected to double in 2010). While the backplane is not as fast, the CPUs are much faster, and there is a lot more memory to do routing/management tasks than is available on the X650.

The upgrade process for going from an X650-based stack to a Black Diamond based infrastructure is fairly straightforward. They run the same operating system and they have the same configuration files. You can take down your slave ESRP switch, copy the configuration to the Black Diamond, re-establish all of the links and then repeat the process with the master ESRP switch. You can do this all with approximately one second of combined downtime.

So I hope that, in part with this posting, you can see what draws me to the Extreme portfolio of products. It’s not just the hardware, or the lower cost, but the unique software components that tie it together. In fact as far as I know Extreme doesn’t even make their own network chipsets anymore. I think the last one was in the Black Diamond 10808 released in 2003, which is a high end FPGA-based architecture (they call it programmable ASICs; I suspect that means high end FPGA but I’m not certain). They primarily (if not exclusively) use Broadcom chipsets now. They’ve used Broadcom in their Summit series for many years, but their decision to stop making their own chips is interesting in that it does lower their costs quite a bit. And their software is modular enough to be able to adapt to many configurations (e.g. their Black Diamond 10808 uses dual processor Pentium III CPUs, the Summit X450 series uses ARM-based CPUs I think).