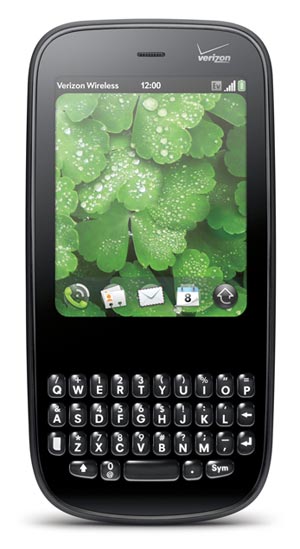

So my pair of Palm Pixi Pluses arrived yesterday. I'm by no means a hardware hacker, and I have never really had much interest in "breaking in" to my systems unless I really needed to (e.g. replacing an out-of-warranty TiVo hard drive).

Over the years I have read a lot about how friendly the WebOS platform is to "hacking". For one, you don't have to root the device: there is an official method to enable developer mode, at which point you can install whatever you want.

The first thing I wanted to do was upgrade the OS to the latest revision. The units shipped with 1.4.0, and the latest is 1.4.5. Given that Palm is working on WebOS 2 and WebOS 3, I'm not really expecting any more major updates to WebOS 1. Upgrading was painless: just download WebOS Doctor for your version/phone/carrier and run it. It re-flashes the phone with the full operating system, then reboots it.

One thing I didn't really think about when I ordered my Pixis was that they would require activation before I could use them. On initial boot the phone prompts you to call Verizon to register, and of course I am not a Verizon customer and have no intention of using these as phones.

Fear not, though: a few minutes of research turned up an official tool that bypasses this registration process, and it is really easy to use:

```
nate@nate-laptop:~/Downloads$ java -jar devicetool.jar
Found device: pixie-bootie
Copying ram disk................
Rebooting device...
Configuring device...
File descriptor 3 (socket:[1587]) leaked on lvm.static invocation. Parent PID 947: novacomd
Reading all physical volumes. This may take a while...
Found volume group "store" using metadata type lvm2
File descriptor 3 (socket:[1587]) leaked on lvm.static invocation. Parent PID 947: novacomd
6 logical volume(s) in volume group "store" now active
File descriptor 3 (socket:[1587]) leaked on lvm.static invocation. Parent PID 947: novacomd
0 logical volume(s) in volume group "store" now active
Rebooting device...
Device is ready.
```

So now I have the latest OS, and I have bypassed registration, which also turns on developer mode by default. I do lose some functionality in this mode, such as:

- No access to online software updates (don’t care)

- No access to Palm App Catalog (not the end of the world)

I had installed OpenSSH on my Pre in the past, though I never tested it. This time around I looked high and low for a way to get SSH onto the Pixi, to no avail: the documentation is gone, and for some reason I can't find any SSH packages. In the end it didn't really matter, because I could just use novaterm, another official Palm tool, to get a root shell. It doesn't get much simpler than this:

```
nate@nate-laptop:~$ novaterm
root@palm-webos-device:/# df -h
Filesystem                 Size     Used Available Use% Mounted on
rootfs                   441.7M   394.8M     46.9M  89% /
/dev/root                 31.0M    11.3M     19.7M  37% /boot
/dev/mapper/store-root   441.7M   394.8M     46.9M  89% /
/dev/mapper/store-root   441.7M   394.8M     46.9M  89% /dev/.static/dev
tmpfs                      2.0M   152.0k      1.9M   7% /dev
/dev/mapper/store-var    248.0M    22.7M    225.3M   9% /var
/dev/mapper/store-log     38.7M     4.6M     34.1M  12% /var/log
tmpfs                     64.0M   160.0k     63.8M   0% /tmp
tmpfs                     16.0M    28.0k     16.0M   0% /var/run
tmpfs                     97.9M        0     97.9M   0% /media/ram
cryptofs                   6.4G   549.6M      5.8G   8% /media/cryptofs
/dev/mapper/store-media    6.4G   549.6M      5.8G   8% /media/internal
```

I don't think I will need the shell beyond this initial configuration, so I am not going to bother with SSH going forward.

I have a bunch of apps and games on my Palm Pre and wanted to try transferring them to my Pixis. I was hoping for an ipkg equivalent of dpkg-repack but couldn't find one, so I had to resort to good ol' tar/gzip. As far as I can tell, all of the apps are stored in /media/cryptofs/apps. So I tarred up that directory on my Pre, transferred the archive to my first Pixi, overwrote the apps directory there, and rebooted to see what happened.
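The tar/gzip round trip described above can be sketched as a pair of shell functions. This is a minimal sketch, not the exact commands from this session. It assumes a POSIX `sh` and a `tar` that understands `-z` and `-C` (GNU tar and BusyBox tar both do), run as root through novaterm on each device. The function names `pack_apps`/`restore_apps` and the archive path on `/media/internal` are hypothetical placeholders.

```shell
#!/bin/sh
# Sketch only: move the WebOS apps directory between devices with tar/gzip.
# Assumes POSIX sh and a tar supporting -c/-x, -z and -C.
# pack_apps and restore_apps are hypothetical helper names.

# pack_apps SRC_PARENT ARCHIVE
# Archives the "apps" directory under SRC_PARENT (e.g. /media/cryptofs on
# the Pre) into a gzip-compressed tarball. Paths inside the archive are
# relative ("apps/..."), so it can be unpacked under any parent directory.
pack_apps() {
    src_parent=$1
    archive=$2
    tar -czf "$archive" -C "$src_parent" apps
}

# restore_apps ARCHIVE DEST_PARENT
# Unpacks ARCHIVE under DEST_PARENT (e.g. /media/cryptofs on the Pixi).
# Files with the same path are overwritten; anything already in
# DEST_PARENT/apps that the archive lacks is left in place.
restore_apps() {
    archive=$1
    dest_parent=$2
    tar -xzf "$archive" -C "$dest_parent"
}

# Hypothetical usage (archive path is an assumption):
#   On the Pre:   pack_apps /media/cryptofs /media/internal/apps.tar.gz
#   Copy apps.tar.gz to the Pixi's /media/internal, then on the Pixi:
#                 restore_apps /media/internal/apps.tar.gz /media/cryptofs
#                 reboot
```

Extracting over the existing directory, rather than deleting it first, mirrors the "overwrote" step above while leaving anything the Pixi already had in place.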

It worked much better than I had expected. Several of the games (especially the fancier ones) did not work, I suspect because of the different screen size, and a couple of other fancy games started up but had the edges of the screen clipped. That wasn't a big deal, though: there are probably Pixi versions of many of them, and all of the apps worked.

I put the phone in Airplane mode to disable the 3G radio, then installed a few patches (which modify system behavior) and a few more free apps/games via WebOS Quick Install. I copied over some music to test it out, and it works awesome. In my opinion the speakers on the Pixi sound really good.

After the apps are installed there is roughly 6.3-6.5 GB of available storage for media.

The only thing missing? Touchstone charging. The custom case needed to support it looks like it starts at $20. I already have three Touchstone docks, but if I didn't, a dock runs $50.

The UI in WebOS is really great, with full multitasking, a great notification system, and everything integrated really well.

Having all of these apps, some games, full Wi-Fi (which I can use over 3G/4G with the Sprint MiFi that I have), media playback, a keyboard, a nice-resolution screen, a camera with flash, GPS, a user-replaceable battery, and no carrier contract, all for $40?! I really wish I had bought more than two.

I'm really looking forward to the Pre 3 and the TouchPad.