It has been on my mind a bit recently: how long has my trusty Philips Tivo Series 1 been going? I checked just now and it’s been about 11 years. April 24th 2001 is when Outpost.com (now Fry’s) sent me the order confirmation that my first Tivo was on the way. I originally paid $699 for the 60-hour version, plus $199 for lifetime service (lifetime service today is $499 for new customers), which at the time was still difficult to swallow given I had never used a DVR before.

My TiVo sandwiched between a cable box with home made IR-shield and a VCR (2002)

There were rampant rumors at the time that Tivo was dead and would be out of business soon. I believe that’s also about when ReplayTV (RIP) was fighting with the media industry over commercial skipping.

Tivo faithful hoped Tivo would conquer the DVR market, but that never happened. There were always rumors of big cable companies deploying Tivo, but I don’t recall reading about wide-scale deployments (certainly none of the cable companies I had over the years offered Tivo in my service area).

To this day Tivo is still regarded as the strongest player from a technology standpoint (for good reason, I’m sure). Tivo has been involved in many patent lawsuits over the years and to my knowledge they’ve won pretty much every one. Many folks hate them for their patents, but I’ve always thought the patents were innovative and worth having. I’m sure to some the patents were obvious, but to most, myself included, they were not.

I believe Tivo got a new CEO in recent years and has been working more seriously with cable providers. I believe there have been much larger-scale deployments in Europe than anywhere else at this point.

Tivo recently announced support for Comcast Xfinity on demand on the Tivo platform. The one downside to something like Tivo, or really anything that is not a cable box, is that there is no bidirectional communication with the cable company, so things like on demand or PPV are not possible directly through Tivo. I don’t think any Tivo since Series 2 supports working with an external cable box; they all use cable cards now. The cable card standard hasn’t moved very far over the years. I recently saw people talking about how difficult it is to find TVs on the market with cable card support, as the race to reduce costs has cut it out of the picture.

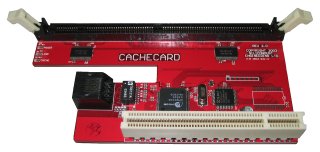

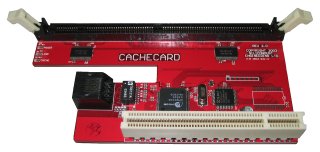

Back to my Tivo Series 1: it was a relatively “hacker friendly” box, unlike post-Series 2 equipment. At one point I added a “TivoNet Cache Card”, which plugged into an exposed PCI-like connector on the motherboard and allowed the system to get program data and software updates over ethernet instead of phone lines. It also gave the system a 512MB read cache on a single standard DIMM to accelerate the various databases on the system.

Tivo Cache Card plus TurboNet ethernet port

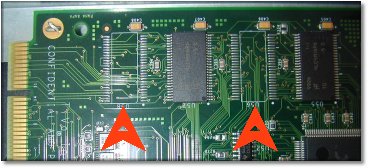

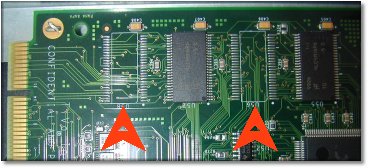

Tivo Series 1 came with only 16MB of RAM and a 54MHz(!) PowerPC 403GCX. Some people used to do more invasive hacking to upgrade the system to 32MB, but that was too risky for my taste.

Picture of process needed to upgrade TiVo Series 1 memory

I’ve been really impressed with the reliability of the hardware (and software). I replaced the internal hard disks back in 2004 because the original ones were emitting a soft but high-pitched whine, which was annoying. The replacements also upgraded the unit from the original 60-hour rating to 246 hours.

After one of the replacement disks was essentially DOA, I got it replaced, and the Tivo has been running 24/7 since then: 8 years of reading and writing data to that pair of disks (4200 RPM if I remember right). I’ve treated it well. It has always been connected to a UPS, occasionally I shut it off and clean out all the dust, and it’s almost always had plenty of airflow around it. It tends to crash a couple of times a year (power cycling fixes it). I have TV shows going back to what I think is probably the 2004-2005 time frame saved on that Tivo, including my favorite Star Trek: Original Series episodes from before they wrecked it with the modern CGI (which looks so out of place!).

I’m also able to telnet into the Tivo and do very limited tasks. There is an FTP application that allows you to download the shows/movies/etc. that are stored on the Tivo, but I could not get it to work (the video was unwatchable). On Tivo Series 3 and up you can download shows via their fancy desktop application or directly over HTTPS, though many titles are flagged as copyright protected and are not downloadable.

Oh yeah, I remember now what got me thinking about Tivo again: I was talking to AT&T this morning about adding the tethering option to my plan since my Sprint MiFi is canceled now, and they tried to upsell me to U-Verse, which as far as I know is not compatible with Tivo (maybe the Series 1 would work, but I also have a Series 3, which uses cable cards). So I explained to them that it’s not compatible with Tivo and that I have no interest in leaving Tivo at this point.

There was a time when I read in a forum that the Tivo “lifetime subscription” was actually only a few years (this was back in ~2002), and that they disclosed it in tiny print in the contract. I don’t recall if I tried to verify it or not, but I suspect they opted to ignore that clause in order to keep their subscriber base. In any case, the lifetime service I bought in 2001 is still active today.

The Tivo has outlasted the original TV I had (and the one that followed), gone through four different apartments and two states, and even outlasted the company that sold it to me (Outpost.com). It also outlasted the analog cable technology that it relied upon; for several years I’ve had to have a digital converter box so that the Tivo can get even the most basic channels.

The newest Tivos aren’t quite as interesting to me, mainly because they focus so much on internet media, as well as streaming to iOS/Android (neither of which I use, of course). I don’t do much internet video. My current Tivo has hookups to Netflix, Amazon, a crappy YouTube app, and a few other random things. I don’t use any of them.

The Series 3 is obviously my main unit, which was purchased almost five years ago. It too has had its disks replaced once (maybe twice, I don’t recall), though in that case the disks were failing; fortunately I was able to transfer all of the data to the new drives.

The main thing I’d love from Tivo after all these years (maybe they have it now on the new platforms) is to be able to back up the season passes, wishlists and so on, so you can migrate them to a new system (or recover faster from a failed hard disk). I’ve had the option of remote scheduling via the Tivo website since I got my Series 3, but never had a reason to use it. The software and hardware on all of my units (I bought a second Series 1 unit with lifetime service back in 2004-2005 and eventually gave it to my sister, who uses it quite a bit) have been EOL for many years, so there’s no support now.

Eleven years and still ticking. I don’t use the Series 1 all that much, but even when I’m not actively using it, it’s always recording (live TV buffer).