I was just reading a discussion on Slashdot about IPv6 again. Apparently BT has announced plans to deploy carrier-grade NAT (CGN) for some of its low-tier customers, which is, of course, just NAT deployed at a larger scale.

I knew how the conversation would go, but I found it interesting regardless. The die-hard IPv6 folks came out crying foul:

> Killing IPv4 is the only solution. This is a stopgap measure like carpooling and congestion charges that don’t actually fix the original problem of a diminishing resource.

(disclaimer – I walk to work)

> [...] how on earth can you make IPv6 a premium option if you don’t make IPv4 unbearably broken and inconvenient for users?

These same folks often complain that NAT will break the internet because they can’t do peer-to-peer stuff (or can’t do it as easily; in some cases it may not be possible at all). At the same time, they advocate a solution (IPv6) that will break FAR more things than NAT could ever hope to break, at least an order of magnitude more.

They feel the only way to make real progress is essentially to tax IPv4 usage heavily enough to discourage people from using it, somehow bringing immediate global change to the internet and getting everyone to switch to IPv6. Which brings me to my next, somewhat related topic.

Maybe they are right – I don’t know. I’m in no hurry to get to IPv6 myself.

Stop! Tangent time.

The environmentalists are, of course, doing the same thing. Not long ago a law took effect here in my county banning plastic bags at grocery stores and the like. You can still get paper bags at $0.10 per bag, but no more plastic. I was discussing this briefly with a friend last week; he was faulting the stores for charging people, not realizing it was the law that mandated the fee. I have not a shred of doubt that if the environmentalists had their way, they would have banned all disposable bags. That is their goal: the tax is only $0.10 now, but they will push it as high as they can in the future, for the same reason, to discourage use. Obviously customers were already paying for plastic and paper bags before; the cost was built into the margins of the products they bought, just like the electricity to keep the dairy products cool.

In Washington State, I believe there were one or two places that actually tried to ban ALL disposable bags. I don’t remember whether the laws passed, but I remember wanting to go to one of their grocery stores, load up a cart full of stuff, and go to checkout; then, when they told me I had to buy bags, I would just walk out. I wanted to so badly, but I am more polite than that, so I didn’t.

Safeway gave me 3 “free” reusable bags the first time I was there after the law passed, and I have bought one more since. I am worried about contamination more than anything else: there have been several reports of the bags being contaminated, mainly by meat, because people don’t clean them regularly.

I’ll admit (as much as it pains me) that there is one good reason to use these bags over disposable ones, which didn’t really hit me until I got home that first night: they are a lot stronger, so they hold more. I was able to fit a full night’s shopping into 3 bags, and those were easier to carry than the 8 or so disposable bags it would otherwise have taken.

I think it’s terrible to tax paper, since it is relatively much greener than plastic. I once read an article comparing paper and plastic across the various regions of our country, at least, and which was greener. The answer was that it varied: on the coastlines, like where I live, paper was greener, while in the middle of the country plastic was greener. I forget the reasons given, but they made sense at the time. I haven’t been able to dig up the article; I have no idea where I read it.

I remember living in China almost 25 years ago and noticing that everyone used reusable bags, similar to what we have now, though from what I remember they were more like knitted plastic. I believe they used them mainly because they had no alternative: they didn’t have the machinery to cheaply mass-produce disposable bags. I seem to remember reading at some point that disposable bag usage really went up in the following years before reversing course again toward reusables.

Myself, I have recycled my plastic bags (at Safeway) for as long as I can remember. Sad to see them go.

I’ll end with a quote from Cartman (probably not verbatim; I tried checking):

> Hippies piss me off

(Hey hippies, go ban bottled water too while you’re at it. I go through about 60 bottles a week myself. I’ve been stocking up recently because it was cheap(er than normal); I think I have more than 200 bottles in my apartment now. I like the taste of Arrowhead water. I don’t drink much soda at home these days; I’ve basically replaced it with bottled water, so I think cost-wise it’s an improvement 🙂 )

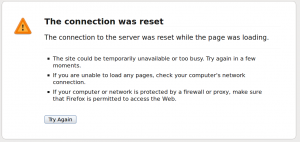

(Same goes for those die-hard IPv6 folks: go ahead, slap CGNAT on my home internet connection. I don’t care. I already have CGNAT on my cell phone (it has a 10.x IP), and when it is in hotspot mode I notice nothing broken. The only peer-to-peer thing I use is Skype (for work; I don’t use it otherwise); everything else is pure client-server.) I have a server (a real server, the one this blog is hosted on) in a data center (a real data center, not my basement) with a 100Mbps link and unlimited bandwidth, for things I can’t do on my home connection (mainly due to bandwidth constraints and a dynamic IP).
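For the curious: the quickest hint that a carrier has you behind NAT is the address your device gets handed. 10.x is one of the RFC 1918 private ranges, and RFC 6598 set aside 100.64.0.0/10 specifically as shared space for carrier-grade NAT. Here’s a rough Python sketch using the standard `ipaddress` module that sorts an IPv4 address into those buckets. It only looks at the address itself, so treat it as a hint, not proof; `classify` is just a name I picked, and the sample addresses are made up.

```python
import ipaddress

# Non-public IPv4 ranges, grouped by the RFC that reserves them.
# Dict order is preserved, so ranges are checked top to bottom.
NON_PUBLIC_V4 = {
    "RFC 1918": [
        ipaddress.ip_network("10.0.0.0/8"),
        ipaddress.ip_network("172.16.0.0/12"),
        ipaddress.ip_network("192.168.0.0/16"),
    ],
    # Shared address space reserved for carrier-grade NAT.
    "RFC 6598 (CGN shared space)": [
        ipaddress.ip_network("100.64.0.0/10"),
    ],
}


def classify(addr: str) -> str:
    """Return which reserved range an IPv4 address falls in, or 'public'."""
    ip = ipaddress.IPv4Address(addr)
    for label, nets in NON_PUBLIC_V4.items():
        if any(ip in net for net in nets):
            return label
    return "public"


if __name__ == "__main__":
    # Hypothetical sample addresses, not real devices.
    for a in ("10.37.4.2", "100.72.0.5", "8.8.8.8"):
        print(a, "->", classify(a))
```

A phone showing something like 10.37.4.2 lands in the RFC 1918 bucket, which is what you’d expect when the carrier is doing the NAT upstream.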

I hereby proclaim IPv6 die-hards internet hippies!

My home network has a site-to-site VPN to the data center, so if I need to access my home network remotely, I just VPN into the data center and reach it from there. If you don’t want to host a real server (it’s not cheap), there are cheaper options, such as a VPS, available for pennies a day.