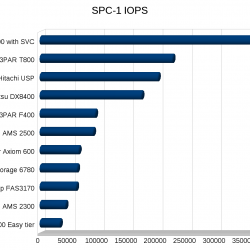

There are two main storage benchmarks I pay attention to:

- SPC-1 – Storage Performance Council’s IOPS test based on simulated OLTP database load.

- SPECsfs2008_nfs.v3 – SPEC’s NFS IOPS benchmark.

Of course benchmarks are far from perfect, but they can provide a good starting point when deciding what type of system to look at based on your performance needs. Bringing in everything under the sun to test in house is a lot of work, and much of it can be avoided by setting some reasonable expectations up front. Both of these benchmarks do a pretty good job, and it’s really nice to have a database of published results for easy comparison. There are tons of other benchmarks that can be used, but very few have a good set of results you can check against.

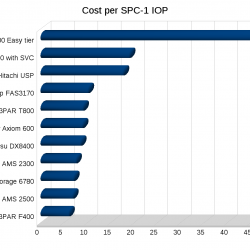

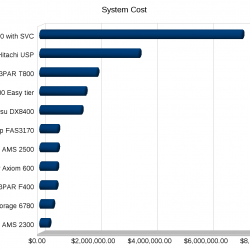

SPC-1 is better as a process, primarily because it forces the vendor to disclose the cost of the configuration plus 3 years of support. They could improve on this further by requiring the vendor to provide updated pricing for the configuration over those 3 years. While the performance of the configuration should not change (given the same components), the price certainly should decline over time, so the cost figures become harder to compare the further apart the tests were run (duh).
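To make the price-decline point concrete, here is a minimal Python sketch. It assumes prices fall at a constant, compounded annual rate; the 20% rate and the function names are hypothetical and nothing SPC-1 publishes. Cost per IOPS is simply the total tested price divided by the SPC-1 IOPS figure.

```python
from datetime import date

# Hypothetical assumption: prices fall 20% per year, compounded.
# SPC-1 reports do not publish any such rate; adjust to taste.
ASSUMED_ANNUAL_DECLINE = 0.20


def years_between(earlier: date, later: date) -> float:
    """Elapsed time in years, using 365.25-day years."""
    return (later - earlier).days / 365.25


def price_in_later_dollars(price: float, tested: date, reference: date,
                           decline: float = ASSUMED_ANNUAL_DECLINE) -> float:
    """Estimate what a configuration priced on `tested` might cost on
    `reference`, assuming a constant compounded annual price decline."""
    return price * (1.0 - decline) ** years_between(tested, reference)


def cost_per_iops(price: float, iops: float) -> float:
    """Price-performance: total tested price / SPC-1 IOPS."""
    return price / iops


if __name__ == "__main__":
    # Hypothetical $1M configuration priced on the USP's test date (10/01/2007),
    # re-estimated as of the Sun 6780's test date (6/01/2010).
    adjusted = price_in_later_dollars(1_000_000, date(2007, 10, 1), date(2010, 6, 1))
    print(f"age-adjusted price: ${adjusted:,.0f}")
```

At the assumed 20% rate the adjusted figure comes out around $551,500, so taking the 2007 price at face value would overstate it by roughly 1.8 times against a mid-2010 quote. The real rate is unknown, which is exactly why updated vendor pricing would help.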

SPC-1 also forces full disclosure of everything required to configure the system, down to the CLI commands used to configure the storage. You can get a good idea of how simple or complex the system is by looking at this information.

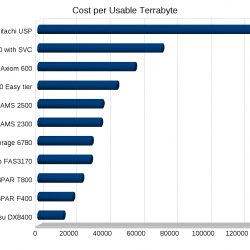

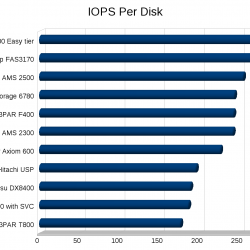

SPC-1 doesn’t have a specific disclosure field for cost per usable TB, but it is easy enough to extrapolate from the other numbers in the reports. It would be nice if this were called out (well, nice for some vendors, not so much for others). Cost per usable TB really makes systems that rely on short stroking for their performance stand out like a sore thumb. Other metrics that would be interesting are Watts per IOP and Watts per usable TB. The SPC-1E test reports Watts per IOP, though I have a hard time telling whether the power usage is measured at max load; the report seems to indicate it was calculated at 80% load.
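The arithmetic is simple enough to sketch. The Python below is a minimal example, assuming you have already pulled the total tested price, the SPC-1 IOPS figure, a usable-capacity figure and (for SPC-1E) a power figure out of a report by hand. The `Spc1Result` class and its field names are hypothetical and not part of any SPC tooling, and the numbers in the example are made up.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Spc1Result:
    """Figures copied by hand from an SPC-1 (or SPC-1E) disclosure.
    Hypothetical container; field names are illustrative only."""
    name: str
    total_price: float             # tested configuration incl. 3 years support, USD
    spc1_iops: float               # reported SPC-1 IOPS
    usable_tb: float               # usable capacity you derived from the report, TB
    watts: Optional[float] = None  # power draw, only if an SPC-1E report gives it

    def cost_per_iops(self) -> float:
        return self.total_price / self.spc1_iops

    def cost_per_usable_tb(self) -> float:
        return self.total_price / self.usable_tb

    def watts_per_iops(self) -> Optional[float]:
        return None if self.watts is None else self.watts / self.spc1_iops

    def watts_per_usable_tb(self) -> Optional[float]:
        return None if self.watts is None else self.watts / self.usable_tb


if __name__ == "__main__":
    # Hypothetical numbers: same price and IOPS, but the second array is
    # short stroked and only exposes half the usable capacity.
    full = Spc1Result("array A", 500_000, 50_000, usable_tb=20)
    stroked = Spc1Result("array B (short stroked)", 500_000, 50_000, usable_tb=10)
    for r in (full, stroked):
        print(f"{r.name}: ${r.cost_per_iops():.2f}/IOPS, "
              f"${r.cost_per_usable_tb():,.0f}/usable TB")
```

Both hypothetical arrays look identical on cost per IOPS, while the short-stroked one costs twice as much per usable TB, which is the sore thumb described above.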

Storage performance is by no means the only aspect you need to consider when getting a new array, but it usually is in at least the top 5.

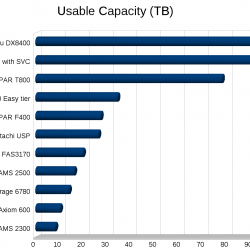

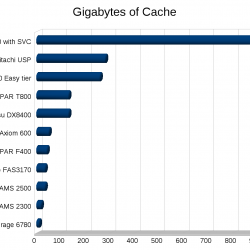

I made a few graphs of some SPC-1 numbers. Note that the cost numbers need to be taken with a few grains of salt depending on how far apart the systems were tested (the USP, for example, was tested in 2007), but you can at least see trends.

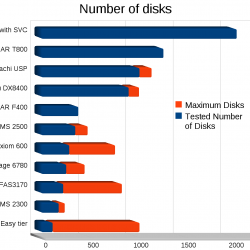

The majority of the systems tested used 146GB 15k RPM disks.

Somewhat odd configurations:

- From IBM, the Easy Tier config is the only one that uses SSD+SATA, and the other IBM system uses their SAN Volume Controller in a clustered configuration with two storage arrays behind it (two thousand spindles).

- Fujitsu is short stroking 300GB disks.

- NetApp is using RAID 6 (except for IBM’s SSDs, everyone else is using RAID 1).
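RAID level and short stroking both change how much of the raw disk ends up usable, which feeds straight into cost per usable TB. A rough Python sketch, assuming RAID 1 keeps half the raw capacity, RAID 6 keeps (n-2)/n of each group of n disks, and short stroking uses only a fraction of each disk. It ignores spares, metadata and formatting overhead; the function name, disk counts, group size and stroke fraction are all hypothetical.

```python
def usable_tb(disks: int, disk_gb: float, raid: str,
              raid6_group: int = 0, stroke_fraction: float = 1.0) -> float:
    """Rough usable capacity in decimal TB (hypothetical helper).

    raid1: mirrored pairs, half of raw capacity is usable.
    raid6: two parity disks per group of `raid6_group` disks.
    stroke_fraction: share of each disk actually used (1.0 = no short stroking).
    Ignores spares, metadata and formatting overhead.
    """
    if not 0.0 < stroke_fraction <= 1.0:
        raise ValueError("stroke_fraction must be in (0, 1]")
    if raid == "raid1":
        data_fraction = 0.5
    elif raid == "raid6":
        if raid6_group < 3:
            raise ValueError("raid6 needs a group of at least 3 disks")
        data_fraction = (raid6_group - 2) / raid6_group
    else:
        raise ValueError(f"unsupported RAID level: {raid}")
    return disks * disk_gb * data_fraction * stroke_fraction / 1000.0


if __name__ == "__main__":
    # Hypothetical 160-disk configurations.
    print("RAID 1, 146GB:", usable_tb(160, 146, "raid1"))
    print("RAID 6 (16-disk groups), 146GB:", usable_tb(160, 146, "raid6", raid6_group=16))
    print("RAID 1, 300GB used at 50%:", usable_tb(160, 300, "raid1", stroke_fraction=0.5))
```

With these made-up inputs, short stroking 300GB disks to half their capacity leaves them with roughly the same usable space as unstroked 146GB disks, while RAID 6 keeps far more of the raw capacity than RAID 1.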

I’ve never been good with spreadsheets or anything, so I’m sure I could make these better, but they’ll do for now.

Links to results:

- 3PAR F400 – Tested 4/27/2009

- 3PAR T800 – Tested 9/02/2008

- Fujitsu DX8400 – Tested 5/25/2010

- Hitachi AMS 2300 – Tested 3/24/2009

- Hitachi AMS 2500 – Tested 3/24/2009

- Hitachi USP – Tested 10/01/2007

- IBM DS8700 Easy Tier – Tested 4/13/2010

- IBM DS8700 with SVC – Tested 2/01/2010

- NetApp FAS3170 – Tested 6/10/2008

- Pillar Axiom 600 – Tested 1/13/2009

- Sun Storage 6780 – Tested 6/01/2010
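Since the cost figures age, it helps to see how far apart these tests actually are. This small Python sketch uses the dates listed above (read as month/day/year) and computes how much older each result is than the newest one; the function name is just illustrative.

```python
from datetime import date

# Test dates from the SPC-1 result list above (month/day/year).
TEST_DATES = {
    "3PAR F400": date(2009, 4, 27),
    "3PAR T800": date(2008, 9, 2),
    "Fujitsu DX8400": date(2010, 5, 25),
    "Hitachi AMS 2300": date(2009, 3, 24),
    "Hitachi AMS 2500": date(2009, 3, 24),
    "Hitachi USP": date(2007, 10, 1),
    "IBM DS8700 Easy Tier": date(2010, 4, 13),
    "IBM DS8700 with SVC": date(2010, 2, 1),
    "NetApp FAS3170": date(2008, 6, 10),
    "Pillar Axiom 600": date(2009, 1, 13),
    "Sun Storage 6780": date(2010, 6, 1),
}


def years_before_newest(dates: dict) -> dict:
    """Age of each result in years (365.25-day years) relative to the newest test."""
    newest = max(dates.values())
    return {name: (newest - d).days / 365.25 for name, d in dates.items()}


if __name__ == "__main__":
    ages = years_before_newest(TEST_DATES)
    # Oldest results first.
    for name, age in sorted(ages.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{name:22s} {age:4.1f} years older than the newest result")
```

The spread runs from the Hitachi USP to the Sun 6780, roughly 2.7 years, which is plenty of time for pricing to move.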