ZFS doesn’t come up with me all that often, but with the recent news of the settlement of the lawsuits I wanted to talk a bit about it.

It all started about two years ago. Some folks at the company I was at were proposing using cheap servers and ZFS to address our ‘next generation’ storage needs. At the time we had a bunch of tier 2 storage behind some really high-end NAS head units (not configured in any sort of fault-tolerant manner).

Anyway, in doing some research I came across a fascinating email thread. The most interesting post was this one, and I’ll put it here because I really couldn’t have said it better myself:

I think there’s a misunderstanding concerning underlying concepts. I’ll try to explain my thoughts, please excuse me in case this becomes a bit lengthy. Oh, and I am not a Sun employee or ZFS fan, I’m just a customer who loves and hates ZFS at the same time

You know, ZFS is designed for high *reliability*. This means that ZFS tries to keep your data as safe as possible. This includes faulty hardware, missing hardware (like in your testing scenario) and, to a certain degree, even human mistakes.

But there are limits. For instance, ZFS does not make a backup unnecessary. If there’s a fire and your drives melt, then ZFS can’t do anything. Or if the hardware is lying about the drive geometry. ZFS is part of the operating environment and, as a consequence, relies on the hardware.

So ZFS can’t make unreliable hardware reliable. All it can do is try to protect the data you saved on it. But it cannot guarantee this to you if the hardware becomes its enemy.

A real world example: I have a 32 core Opteron server here, with 4 FibreChannel Controllers and 4 JBODs with a total of [64] FC drives connected to it, running a RAID 10 using ZFS mirrors. Sounds a lot like high end hardware compared to your NFS server, right? But … I have exactly the same symptom. If one drive fails, an entire JBOD with all 16 included drives hangs, and all zpool access freezes. The reason for this is the miserable JBOD hardware. There’s only one FC loop inside of it, the drives are connected serially to each other, and if one drive dies, the drives behind it go downhill, too. ZFS immediately starts caring about the data, the zpool command hangs (but I still have traffic on the other half of the ZFS mirror!), and it does the right thing by doing so: whatever happens, my data must not be damaged.

A “bad” filesystem like Linux ext2 or ext3 with LVM would just continue, whether or not the volume manager noticed the missing drive. That’s what you experienced. But you run the real danger of having to use fsck at some point. Or, in my case, fsck’ing 5 TB of data on 64 drives. That’s not much fun and results in a lot more downtime than replacing the faulty drive.

What can you expect from ZFS in your case? You can expect it to detect that a drive is missing and to make sure that your _data integrity_ isn’t compromised. By any means necessary. This may even require making the system completely unresponsive until a timeout has passed.

But what you described is not a case of reliability. You want something completely different. You expect it to deliver *availability*.

And availability is something ZFS doesn’t promise. It simply can’t deliver this. You have the impression that NTFS and various other filesystems do so, but that’s an illusion. The next reboot followed by an fsck run will show you why. Availability requires full reliability of every included component of your server as a minimum, and you can’t expect ZFS or any other filesystem to deliver this with cheap IDE hardware.

Usually people want to save money when buying hardware, and ZFS is a good choice to deliver the *reliability* then. But the conceptual stalemate between reliability and availability of such cheap hardware still exists – the hardware is cheap, the file system and services may be reliable, but as soon as you want *availability*, it’s getting expensive again, because you have to buy every hardware component at least twice.

So, you have the choice:

a) If you want *availability*, stay with your old solution. But you have no guarantee that your data is always intact. You’ll always be able to stream your video, but you have no guarantee that the client will receive a stream without dropouts forever.

b) If you want *data integrity*, ZFS is your best friend. But you may have slight availability issues when it comes to hardware defects. You may reduce the pain during a disaster by spending more money, e.g. by making the SATA controllers redundant and creating a mirror (then controller 1 will hang, but controller 2 will continue working), but you must not forget that your PCI bridges, fans, power supplies, etc. remain single points of failure which can take the entire service down, just as your pulling of the non-hotpluggable drive did.

c) If you want both, you should buy a second server and create an NFS cluster.

Hope I could help you a bit,

Ralf
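To put rough numbers on Ralf’s point that availability means buying every component at least twice, here is a minimal Python sketch of the standard series/parallel availability arithmetic. It assumes failures are independent and that failover is instant and flawless, and the per-component figures are hypothetical values made up for illustration, not measurements of any real hardware.

```python
from math import prod


def series_availability(availabilities):
    """System is down if ANY component is down: multiply the availabilities."""
    return prod(availabilities)


def redundant_pair(a):
    """Two identical, independent components where either one is enough.

    The pair is only down when both are down at once: 1 - (1 - a)^2.
    """
    return 1 - (1 - a) ** 2


# Hypothetical per-component availabilities (illustrative only, not measured)
parts = {
    "controller": 0.999,
    "pci_bridge": 0.9995,
    "power_supply": 0.999,
    "disk_shelf": 0.998,
}

# One of everything: every part is a single point of failure
single = series_availability(parts.values())

# Every part doubled: each pair still sits in series with the other pairs
doubled = series_availability(redundant_pair(a) for a in parts.values())

print(f"single path:        {single:.5f}")
print(f"every part doubled: {doubled:.7f}")
```

On paper, doubling every part raises availability dramatically, but only because the sketch assumes independent failures and seamless failover; delivering those two assumptions in practice is where the complexity of an NFS cluster comes from.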

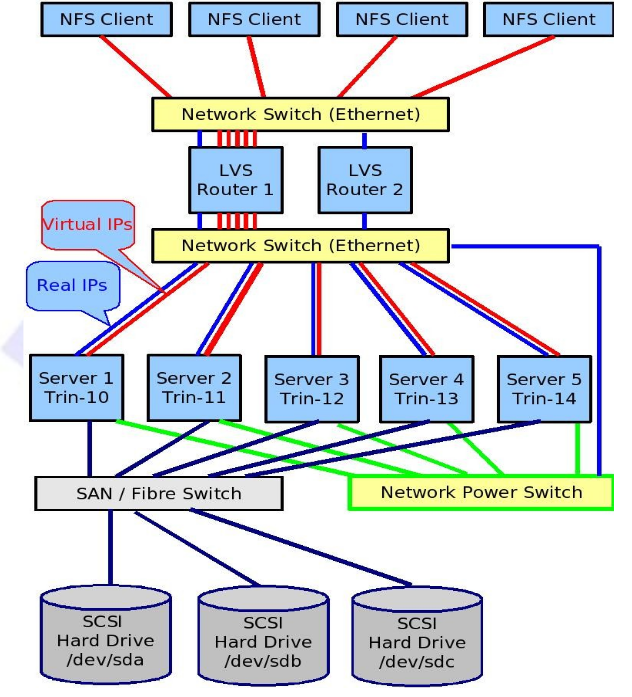

The only thing somewhat lacking from the post is that it makes creating an NFS cluster sound like a simple thing to do. Tightly coupling anything is pretty complicated, especially when it needs to be stateful (for lack of a better word). In this case the data must be in sync, and then there’s the IP-level tolerance, optional MAC takeover, handling failover of NFS clients to the backup system, failing back, performing online upgrades, etc. Just look at the guide Red Hat wrote for building an HA NFS cluster with GFS. Just look at the diagram on page 21 if you don’t want to read the whole thing! Hell, I’ve put the diagram above because we need more color; note that Red Hat forgot network and fibre switch fault tolerance.

That. Is. A. Lot. Of. Moving. Parts. I was actually considering deploying this at a previous company (not the one that brought up the ZFS discussion), but budgets were slashed, and I left shortly before the company (and the economy) really nosedived.

Also note that the above example only covers the NFS portion of the cluster; it doesn’t address how the back-end storage is protected. GFS is a shared file system, so the assumption is that you are operating on a SAN of some sort. In my case I was planning to use our 3PAR E200 at the time.

Providing fault tolerance for a network device is a different story (setting aside stateful firewalls for this example), since the TCP stack in general is a very forgiving system. Storage, on the other hand, makes so many assumptions about things “just working” that, as you know as well as I do, when storage breaks, everything above it usually breaks hard too, and in crazy, complicated ways. (I just love seeing processes stuck in the “D” state, uninterruptible sleep, in the Linux process list after a storage event.) Stateful firewall replication is fairly simple by contrast.

I also suspect that all of ZFS’s fancy data integrity protection is for naught when running it on top of RAID controllers or higher-end storage arrays, because of the added abstraction layer(s) that ZFS has no control over. That is probably why so many folks prefer to let ZFS handle the RAID itself on “raw” disks.

I think ZFS has some great concepts in it. I’ve never used it, because its usability on Linux has been very limited (and I haven’t had a need for ZFS big enough to justify deploying a Solaris system), but I certainly give mad props to the evil geniuses who created it.

ZFS is actually available for Linux, but because of its CDDL licensing they can’t put it in the kernel. So it’s available through the FUSE userspace tool (http://zfs-fuse.net/). ZFS incorporates the filesystem, the partition manager, and “RAID-Z” into one package, so you wouldn’t run it on top of another RAID. When I was looking into it last year I saw another FS that I hadn’t run into before: BTRFS. The way I saw people writing about it, it was the second coming for data storage! Still, I just noticed it referenced on the Wikipedia page for ZFS (http://en.wikipedia.org/wiki/ZFS) and noticed who has been developing BTRFS. I bet you can guess.

Yep: Oracle!

So, what brought me over there in the first place, though, was the news that Oracle and NetApp have dropped their suits against each other. What’s your take on that? NetApp brought the 1st suit (vs Sun, at the time), so what do you think they might have gotten from a deal to drop the suit? Do you think they were likely to win? Ditto for Oracle, too, of course.

(I hear Larry can be pretty persuasive when he wants to be, maybe he’s just that sparkly? [SPARC-ly?] )

Comment by Charlie — September 10, 2010 @ 1:46 pm

Yes, from what I recall (not sure of the current state), ZFS was mainly available through user-mode tools like FUSE.

Comment by Nate — September 12, 2010 @ 8:26 am

[…] market of customers willing to risk putting their data on cheap SATA controllers on servers running ZFS with high failure rates and poor […]

Pingback by Oracle picks up Pillar « TechOpsGuys.com — June 29, 2011 @ 1:56 pm