I’ll start out by saying I’ve never been a fan of Wifi. It’s always felt like a nice gimmick-like feature to have, but other than that I usually steered clear. Wifi has been deployed at every company I have worked at in the past 7-8 years, though in all cases I was never responsible for it (I haven’t done internal IT since 2002, at which time wifi was still in its early stages (assuming it was out at all yet? I don’t remember) and was not deployed widely at all, including at my company). I could probably count on one hand the number of public wifi networks I have used over the years, excluding hotels (of which there were probably ten).

In the early days it was mostly because of paranoia around security/encryption, though over the past several years encryption has really picked up and helped that area a lot. There is still a little bit of fear in me that the encryption is not up to snuff, and I would prefer using a VPN on top of wifi to make it even more secure. Only then would I really feel comfortable using wifi from a security standpoint.

From a security standpoint I am less concerned about people intercepting my transmissions over wifi than I am about people breaking into my home network over wifi (which usually happens by intercepting transmissions). My point is more that the content of what I’m transferring, if it is important, is always protected by SSL or SSH, and when I’m communicating with my colo or cloud hosted server there is an OpenVPN SSL layer under that as well.

Many years ago, I want to say in the 2005-2006 time frame, there was quite a bit of hype around the Linksys WRT54G wifi router, because it was easy to replace the firmware with custom stuff and get more functionality out of it. So I ordered one at the time and put dd-wrt on it, which is a custom firmware that was talked about a lot back then (is there something better out there? I haven’t looked). I never ended up hooking it to my home network, just a crossover cable to my laptop to look at the features.

Then I put it back in its box and put it in storage.

Until earlier this week, when I decided to break it out again to play with in combination with my new HP Touchpad, which can only talk over Wifi.

My first few days with the Touchpad involved having it use my Sprint 3G/4G Mifi access point. As I mentioned earlier, I don’t care about people seeing my wifi transmissions; I care about protecting my home network. Since the Mifi is not even remotely related to my home network I had no problem using it for extended periods.

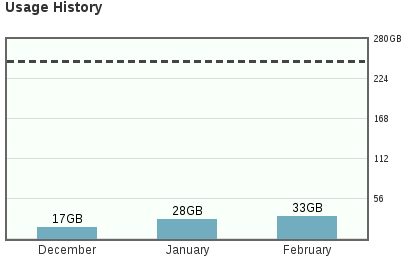

The problem with the Mifi, from my apartment is the performance. At best I can get 20% signal strength for 4G, and I can get maybe 80% signal strength for 3G, latency is quite bad in both cases, and throughput isn’t the best either, a lot of times it felt like I was on a 56k modem. Other times it was faster. For the most part I used 3G because it was more reliable for my location, however I do have a 5 gig data cap/month for 3G so considering I started using the Touchpad on the 1st of the month I got kind of concerned I may run into that playing with the new toy during the first month. I just checked Sprint’s site and I don’t see a way to see intra month data usage, only data usage for the month once it’s completed. The mifi tracks data usage while it is running but this data is not persisted across reboots, and I think it’s also reset if the mifi changes between 3G and 4G services. I have unlimited 4G data, but the signal strength where I’m at just isn’t strong enough.

I looked into the possibility of replacing my Mifi with newer technology, but after reading some customer reviews of the newer stuff it seemed unlikely I would get a significant improvement in performance at my location, enough to justify the cost of the upgrade at least so I decided against that for now.

So I broke out the WRT-54G access point and hooked it up. Installed the latest recommended version of firmware, configured the thing and hooked up the touchpad.

I knew there was a pretty high number of personal access points deployed near me; it was not uncommon to see more than 20 SSIDs being broadcast at any given time. So interference was going to be an issue. At one point my laptop showed me that 42 access points were broadcasting SSIDs. And that of course does not even count the ones that are not broadcasting. Who knows how many of those there are, I haven’t tried to get that number.

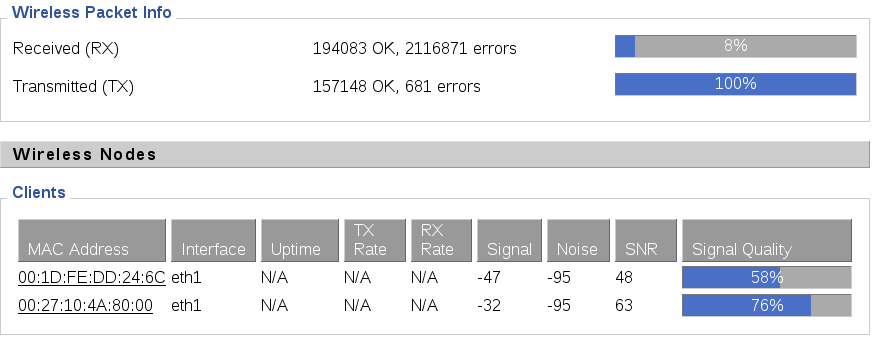

With my laptop and touchpad being located no more than 5 feet away from the AP, I had signal strengths of roughly 65-75%. To me that seemed really low given the proximity. I suspected significant interference was causing signal loss. Only when I put the touchpad within say 10 inches of the antenna from the AP did the signal strength go above 90%.

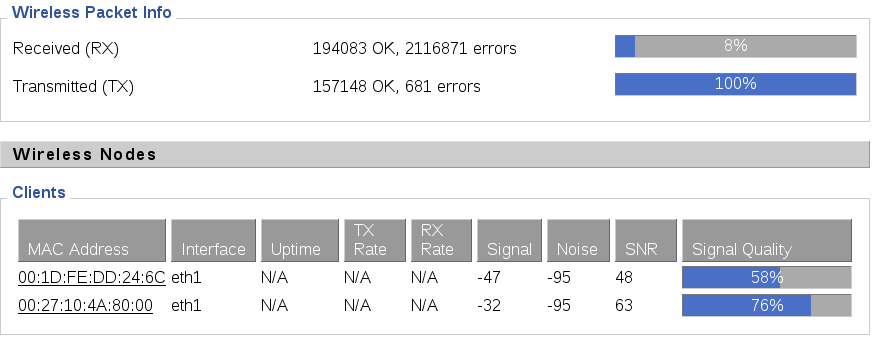

Looking into the large number of receive errors told me that those errors are caused almost entirely by interference.

So then I wanted to see which channels were being used the most and try to use a channel with less congestion. The AP defaulted to channel 6.

The last time I mucked with wifi on Linux there seemed to be an endless stream of wireless scanning, cracking, hacking tools. Much to my shock and surprise, these days most of those tools haven’t been maintained in 5-6-7-8+ years. There aren’t many left. Sadly enough the default Ubuntu wifi apps do not report channels, they just report SSIDs. So I went on a quest to find a tool I could use. I finally came across something called wifi radar, which did the job more or less.

I counted about 25 broadcasting SSIDs using wifi radar, and nearly half of them, if I recall right, were on channel 6. A bunch more were on 11 and 1, the other two major channels. My WRT54G had channels going all the way up to 14. I recall reading several years ago about frequency restrictions in different places, but in any case I tried channel 14 (which is banned in the US). The wifi router said it was on channel 14, but neither my laptop nor Touchpad would connect, I suspect because they flat out don’t support it. No big deal.
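For what it's worth, here is a rough Python sketch of the kind of tally I was doing by eye. It is not what wifi radar does internally; it just assumes you already have a list of the channel numbers your scanner reported. The only real fact baked in is that 2.4 GHz channels are 5 MHz apart while each 802.11b/g transmission is roughly 20-22 MHz wide, so an AP bleeds into anything within 4 channel numbers of it. The function names and the sample scan are made up for illustration (the split of 12/7/6 is a guess that merely matches "about 25, nearly half on channel 6").

```python
from collections import Counter

# 2.4 GHz channels sit 5 MHz apart, but an 802.11b/g signal is roughly
# 20-22 MHz wide, so channels fewer than 5 numbers apart overlap.
# Simplification: only meant for channels 1-13 (channel 14 is spaced differently).
OVERLAP_SPAN = 4


def congestion_by_channel(observed_channels, candidates=(1, 6, 11)):
    """Map each candidate channel to the number of observed APs overlapping it."""
    counts = Counter(observed_channels)
    return {
        cand: sum(n for ch, n in counts.items() if abs(ch - cand) <= OVERLAP_SPAN)
        for cand in candidates
    }


def least_congested(observed_channels, candidates=(1, 6, 11)):
    """Pick the candidate channel with the fewest overlapping APs."""
    scores = congestion_by_channel(observed_channels, candidates)
    return min(candidates, key=lambda ch: scores[ch])


# Hypothetical scan result, NOT real data from my apartment:
# 12 APs on channel 6, 7 on channel 11, 6 on channel 1.
sample_scan = [6] * 12 + [11] * 7 + [1] * 6

print(congestion_by_channel(sample_scan))
print(least_congested(sample_scan))
```

Restricting the candidates to 1, 6 and 11 is deliberate: those are the only three channels in the U.S. band that don't overlap each other, so picking something in between usually just means fighting with two groups of neighbors instead of one.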

Then I went to channel 13. Laptop immediately connected, Touchpad did not. Channel 13 is allowed in most of the world, and in the U.S. it is permitted only if the power level is low.

Next I went to channel 12. Laptop immediately connected again, Touchpad did not. This time I got suspicious of the Touchpad. So I fired up my Palm Pre, which uses an older version of the same operating system. It saw my wifi router on channel 12 no problem. But the Touchpad remained unable to connect even if I manually input the SSID. Channel 12 is also allowed in the U.S. if the power level is low enough.

So I ended up on channel 11. Everything could see everything at that point. I enabled WPA2 encryption, enabled MAC address filtering (yes I know you can spoof MACs pretty easily on wifi, but at the same time I have only 2 devices I’ll ever connect so blah). I don’t have a functional VPN yet mainly because I don’t have a way (yet) to access VPN on the Touchpad, it has built in support for two types of Cisco VPNs but that’s it. I installed OpenVPN on it but I have no way to launch it on demand without being connected to the USB terminal. I suppose I could just leave it running and in theory it should automatically connect when it finds a network but I haven’t tried that.

So on to my last point on wifi: interference. As I mentioned earlier, signal quality was not good even being a few feet away from the access point. I decided to try out speedtest.net to run a basic throughput test on both the Touchpad and the Laptop. All tests were using the same Comcast consumer broadband connection.

| Device | Connectivity Type | Latency | Download Performance | Upload Performance |
| --- | --- | --- | --- | --- |
| HP Touchpad | 802.11g Wireless | 18 ms | 5.32 Mbit/s | 4.78 Mbit/s |
| Toshiba dual core Laptop with Ubuntu 10.04 and Firefox 3.6 | 802.11g Wireless | 13 ms | 9.46 Mbit/s | 4.89 Mbit/s |
| Toshiba dual core Laptop with Ubuntu 10.04 and Firefox 3.6 | 1 Gigabit ethernet | 9 ms | 27.48 Mbit/s | 5.09 Mbit/s |

The test runs in Flash, and as you can see the Touchpad’s browser (or Flash) is not nearly as fast as the laptop, which is not too unexpected.

Comparing LAN transfer speeds was even more of a joke of course. I didn’t bother involving the Touchpad in this test, just the laptop. I used iperf to test throughput (no special options, just default settings).

- Wireless – 7.02 Megabits/second (3.189 milliseconds latency)

- Wired – 930 Megabits/second (0.3 milliseconds latency)

What honestly surprised me though was how much slower wifi was than the wired connection over the WAN on the laptop: it’s almost 1/3rd the performance on the same laptop/browser. I just measured to be sure, and my laptop’s screen (where I believe the antenna is) is 52 inches from the WRT54G router.
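To put actual numbers on that, here is a tiny Python sketch that just does the arithmetic on the figures from the speedtest.net table and the iperf runs above. Nothing here is measured by the script; the values are copied in by hand and the helper name is made up.

```python
def percent_of(baseline, measured):
    """Return `measured` as a percentage of `baseline`."""
    return 100.0 * measured / baseline


# Laptop download speeds over the WAN (speedtest.net), in Mbit/s.
wan_wired = 27.48
wan_wifi = 9.46

# Laptop LAN throughput (iperf, default settings), in Mbit/s.
lan_wired = 930.0
lan_wifi = 7.02

wan_pct = percent_of(wan_wired, wan_wifi)
lan_pct = percent_of(lan_wired, lan_wifi)
lan_factor = lan_wired / lan_wifi

print(f"WAN download: wifi is {wan_pct:.1f}% of wired")
print(f"LAN iperf: wifi is {lan_pct:.2f}% of wired (about {lan_factor:.0f}x slower)")
```

So wifi lands at a bit over a third of wired on the WAN test, and on the LAN the wired link is well over a hundred times faster, which is why I called that comparison a joke.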

It’s “fast enough” for the Touchpad’s casual browsing, but I certainly wouldn’t want to run my home network on it. That would defeat the purpose of paying for the faster connectivity.

I don’t know how typical these results are out there. One place I recently worked at was plagued with wireless problems, and performance was terrible and unreliable. They upgraded the network and I still wasn’t able to maintain a connection for more than two minutes, which sucks for SSH. To make matters worse the vast majority of their LAN was in fact wireless; there was very little cable infrastructure in the office. Smart people hooked up switches and stuff for their own tables, which made things more usable, though still a far cry from optimal.

In a world where we are getting even more dense populations and technology continues to penetrate driving more deployments of wifi, I suspect interference problems will only get worse.

I’m sure it’s great if the only APs within range are your own, if you live or work at a place that is big enough. But small/medium businesses frequently won’t be so lucky, and if you live in a condo or apartment like me, ouch…

My AP is not capable of operating in the 5 GHz range used by 802.11a/n, which very well could be significantly less congested. I don’t know if it is accurate or not, but wifi radar claims every AP within range of my laptop (47 at the moment) is 802.11g (same as me). My laptop’s specs say it supports 802.11b/g/n, so I’d expect that if anyone around me was using N then wifi radar would pick it up, assuming the data being reported by wifi radar is accurate.

Since I am moving in about two weeks I’ll wait till I’m at my new apartment before I think more about the possibility of going to an 802.11n capable device for reduced interference. On that note, do any of my 3-4 readers have AP suggestions?

Hopefully my new place will get better 4G wireless coverage as well, I already checked the coverage maps and there are two towers within one mile of me, so it all depends on the apartment itself, how much interference is caused by the building and stuff around it.

I’m happy I have stuck with ethernet for as long as I have at my home, and will continue to use ethernet at home and at work wherever possible.